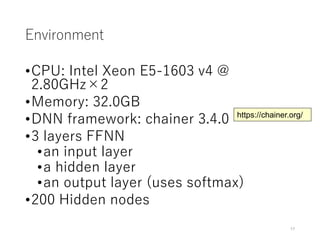

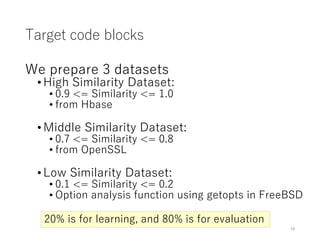

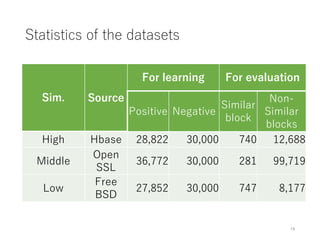

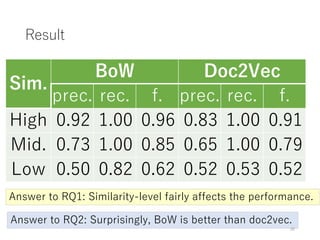

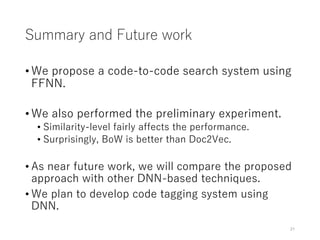

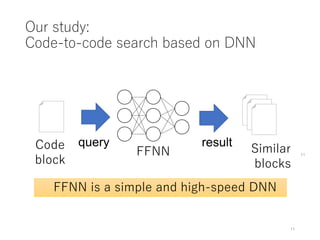

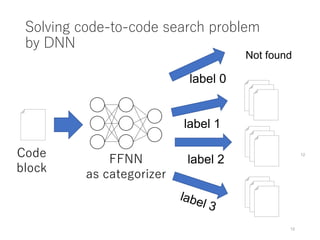

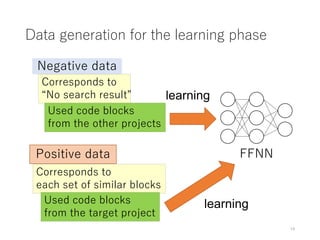

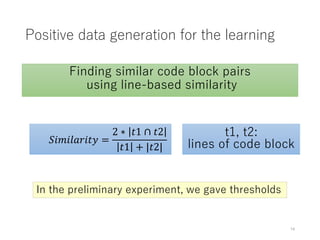

This presentation describes a code-to-code search system built on deep neural networks (DNNs). It uses a feedforward neural network (FFNN) for code tagging and clone detection. Preliminary experiments indicate that similarity levels significantly affect performance and that the Bag of Words (BOW) representation outperforms Doc2Vec in this setting. The authors propose further research to refine their techniques and to develop a comprehensive code tagging system.
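To make the Bag of Words representation concrete, here is a minimal sketch of turning code snippets into token-count vectors that could be fed to an FFNN. The regex tokenizer and the helper names `tokenize`, `build_vocabulary`, and `bow_vector` are assumptions made for illustration; the slides do not say how the authors tokenize code or build their vocabulary.

```python
import re
from collections import Counter

# Assumed tokenizer: identifiers, integer literals, and single punctuation marks.
TOKEN = re.compile(r"[A-Za-z_]\w*|\d+|[^\s\w]")


def tokenize(code):
    """Split a code snippet into a flat list of tokens."""
    return TOKEN.findall(code)


def build_vocabulary(snippets):
    """Assign each distinct token an index, in order of first appearance."""
    vocab = {}
    for snippet in snippets:
        for tok in tokenize(snippet):
            vocab.setdefault(tok, len(vocab))
    return vocab


def bow_vector(code, vocab):
    """Count how often each vocabulary token occurs in the snippet.

    Token order is discarded and tokens outside the vocabulary are ignored.
    """
    counts = Counter(tokenize(code))
    vector = [0] * len(vocab)
    for tok, n in counts.items():
        if tok in vocab:
            vector[vocab[tok]] = n
    return vector


snippets = ["a = a + b", "b = 1"]
vocab = build_vocabulary(snippets)
vectors = [bow_vector(s, vocab) for s in snippets]
```

Each vector has one entry per vocabulary token, so two snippets that use the same tokens in a different order map to the same vector. That loss of ordering is the main trade-off of BOW compared with sequence-aware embeddings.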

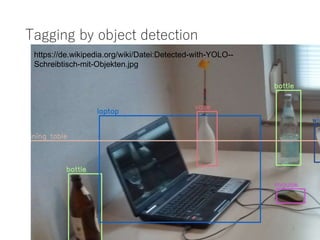

![YOLO: Real-time Object Detection](https://image.slidesharecdn.com/iwsc2019-190224120753/85/Code-Search-Based-on-Deep-Neural-Network-and-Code-Mutation-2-320.jpg)

YOLO: Real-time Object Detection

Using DNN (Deep Neural Network) [1]

[1] Redmon et al.: You only look once: Unified, real-time object detection. CVPR 2016.

https://de.wikipedia.org/wiki/Datei:Detected-with-YOLO--Schreibtisch-mit-Objekten.jpg

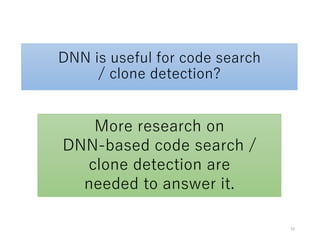

![Is DNN useful for code search / clone detection?](https://image.slidesharecdn.com/iwsc2019-190224120753/85/Code-Search-Based-on-Deep-Neural-Network-and-Code-Mutation-9-320.jpg)

Is DNN useful for code search / clone detection?

Positive side

• F-Score is extremely high: 0.98 (DeepSim, BigCloneBench) [Zhao and Huang 2018]

• Incremental detection can be performed efficiently once a DNN model is built

Negative side

• A DNN model trained on one dataset is often not useful for another dataset

• The learning phase takes a long time

![Deep learning needs a lot of training data](https://image.slidesharecdn.com/iwsc2019-190224120753/85/Code-Search-Based-on-Deep-Neural-Network-and-Code-Mutation-15-320.jpg)

Deep learning needs a lot of training data

Generating code blocks by mutating similar block pairs

Mutation operators [Roy and Cordy 2009]:

• Line insertion/deletion

• Replacement of identifier names

• Replacement of operators
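The three mutation operators listed above can be sketched as small functions over a code block held as a list of lines. This is an illustrative sketch, not the authors' tool: the function names, the `OPERATOR_SWAPS` table, the inserted filler line, and the regex used to find operators are all assumptions, since the slide names only the operator categories.

```python
import random
import re

# Hypothetical swap table; the slide does not list specific operator pairs.
OPERATOR_SWAPS = {
    "+": "-", "-": "+",
    "<": "<=", "<=": "<",
    ">": ">=", ">=": ">",
    "==": "!=", "!=": "==",
}
# Match a standalone operator; the lookarounds skip ++, --, +=, -> and similar.
OPERATOR = re.compile(r"(?<![+\-<>=!])(==|!=|<=|>=|\+|-|<|>)(?![+\-<>=])")


def delete_line(lines, rng):
    """Line deletion: drop one randomly chosen line (blocks of one line are kept)."""
    if len(lines) <= 1:
        return list(lines)
    i = rng.randrange(len(lines))
    return lines[:i] + lines[i + 1:]


def insert_line(lines, rng, new_line="int tmp = 0;"):
    """Line insertion: add an assumed filler statement at a random position."""
    i = rng.randrange(len(lines) + 1)
    return lines[:i] + [new_line] + lines[i:]


def rename_identifier(lines, old, new):
    """Identifier replacement: rename whole-word occurrences of `old`."""
    pattern = re.compile(r"\b" + re.escape(old) + r"\b")
    return [pattern.sub(new, line) for line in lines]


def replace_operator(lines, rng):
    """Operator replacement: swap the first operator on one random eligible line."""
    eligible = [i for i, line in enumerate(lines) if OPERATOR.search(line)]
    if not eligible:
        return list(lines)
    i = rng.choice(eligible)
    mutated = OPERATOR.sub(lambda m: OPERATOR_SWAPS[m.group(0)], lines[i], count=1)
    return lines[:i] + [mutated] + lines[i + 1:]


block = [
    "int sum = 0;",
    "for (int i = 0; i < n; i++) {",
    "    sum = sum + a[i];",
    "}",
]
rng = random.Random(0)  # fixed seed so the generated variants are reproducible
renamed = rename_identifier(block, "sum", "total")
variants = [delete_line(block, rng), insert_line(block, rng), replace_operator(block, rng)]
```

Each variant, paired with the block it came from, gives one additional similar pair for training. Applying several operators in sequence would yield pairs with lower similarity to the original.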