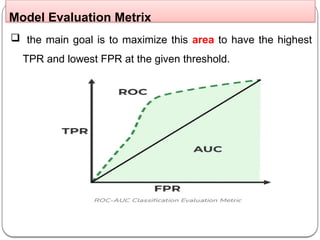

This document discusses machine learning (ML), defining it as a method for programming computers to learn and improve from experience without explicit programming. It details various types of ML such as supervised, unsupervised, and reinforcement learning, and highlights the importance of model evaluation and selection to avoid overfitting and underfitting. The document also addresses hyperparameters and their tuning to achieve optimal model performance.

![ Supervised learning: (function approximation) [labels data

well]

output <- input2

Learn to predict an output when given an input vector.

E.g.: Features: age, gender, smoking, drinking, etc…

Labels: having the disease, does not have the disease

Supervised Learning](https://image.slidesharecdn.com/chapter31-250128193307-90d64baa/85/chapter-Three-artificial-intelligence-1-pptx-16-320.jpg)