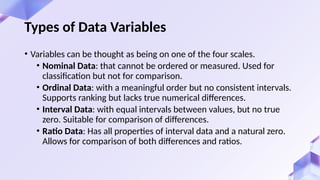

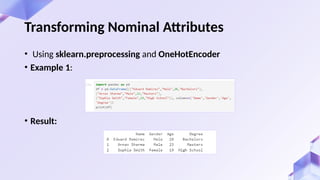

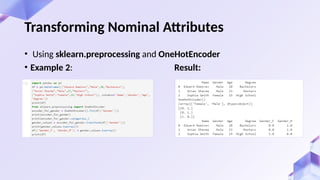

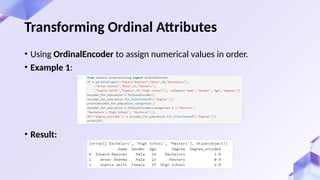

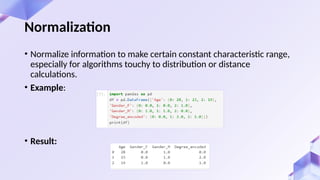

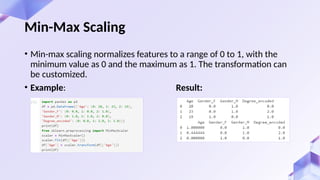

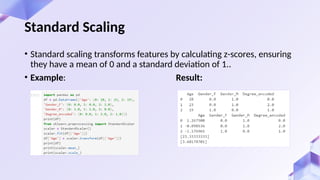

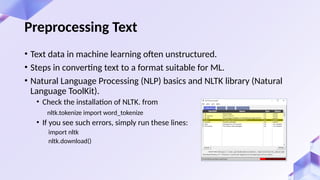

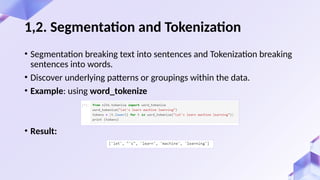

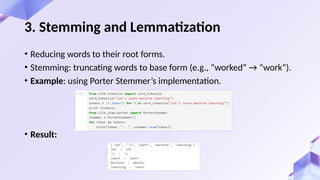

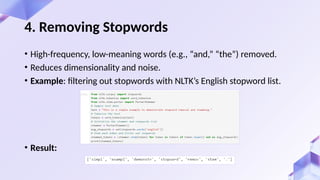

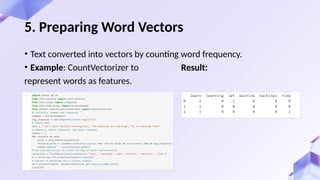

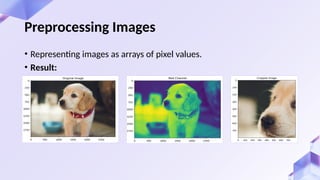

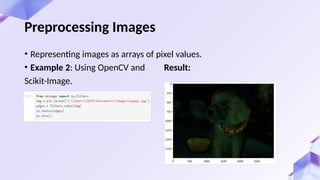

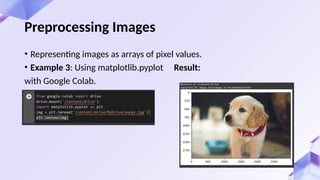

Chapter 6 covers the critical steps of preparing data for machine learning: data collection, transformation, and normalization. It explains the different types of data variables, describes feature extraction, and walks through the steps involved in converting text and images into formats that machine learning models can consume. Throughout, the chapter emphasizes that effective data preparation, in particular proper encoding and normalization, is vital for building robust models.
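The following is a minimal, standard-library-only Python sketch of the kinds of transformations the summary names: normalizing numeric values, encoding categorical values, turning text into count vectors, and flattening an image into a feature vector. It is not the chapter's own code. The function names (`min_max_normalize`, `z_score_standardize`, `one_hot_encode`, `bag_of_words`, `flatten_image`) are hypothetical. The sketch assumes small in-memory Python lists, whitespace tokenization, and 8-bit grayscale pixels (values 0 to 255).

```python
from collections import Counter


def min_max_normalize(values):
    """Rescale numeric values linearly into [0, 1] (hypothetical helper)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant feature: nothing to rescale
    return [(v - lo) / (hi - lo) for v in values]


def z_score_standardize(values):
    """Shift to mean 0 and scale by the population standard deviation."""
    n = len(values)
    mean = sum(values) / n
    std = (sum((v - mean) ** 2 for v in values) / n) ** 0.5
    if std == 0:
        return [0.0 for _ in values]
    return [(v - mean) / std for v in values]


def one_hot_encode(categories):
    """Map each categorical value to a binary indicator vector.

    Returns the sorted vocabulary and one vector per input value.
    """
    vocab = sorted(set(categories))
    index = {c: i for i, c in enumerate(vocab)}
    vectors = []
    for c in categories:
        vec = [0] * len(vocab)
        vec[index[c]] = 1
        vectors.append(vec)
    return vocab, vectors


def bag_of_words(documents):
    """Convert raw text into word-count vectors over a shared vocabulary.

    Assumes lowercase, whitespace-separated tokens (no punctuation handling).
    """
    tokenized = [doc.lower().split() for doc in documents]
    vocab = sorted({tok for toks in tokenized for tok in toks})
    vectors = []
    for toks in tokenized:
        counts = Counter(toks)
        vectors.append([counts[w] for w in vocab])
    return vocab, vectors


def flatten_image(pixels, max_value=255):
    """Flatten a 2-D grid of grayscale intensities into a 1-D vector in [0, 1].

    Assumes 8-bit pixels by default (max_value=255).
    """
    return [p / max_value for row in pixels for p in row]


if __name__ == "__main__":
    print(min_max_normalize([10, 20, 30]))           # [0.0, 0.5, 1.0]
    print(one_hot_encode(["red", "green", "red"]))   # (['green', 'red'], [[0, 1], [1, 0], [0, 1]])
    print(bag_of_words(["the cat sat", "The dog"]))  # (['cat', 'dog', 'sat', 'the'], [[1, 0, 1, 1], [0, 1, 0, 1]])
```

In practice a library such as scikit-learn offers equivalents (for example `MinMaxScaler`, `StandardScaler`, `OneHotEncoder`, and `CountVectorizer`). Whichever tool is used, statistics such as the minimum, maximum, mean, and standard deviation should be computed on the training data and then reused unchanged on validation and test data.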