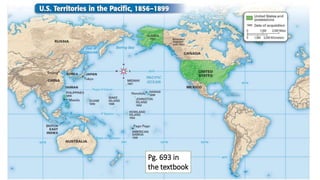

The United States began acquiring overseas territories and expanding its influence abroad in the late 1800s, moving away from its earlier policy of isolationism. In 1893, American business interests overthrew the Hawaiian monarchy, and the United States annexed Hawaii in 1898. The U.S. also sought to increase trade with China and Japan through agreements such as the Treaty of Wanghia with China (1844) and the Treaty of Kanagawa with Japan (1854). However, foreign powers competed for economic and political control in China, fueling resentment that erupted in the Boxer Rebellion, during which Chinese nationalists besieged the foreign legations in Beijing in 1900. After the Spanish-American War of 1898, the U.S. gained colonies in the Caribbean and the Pacific, including Puerto Rico, Guam, and the Philippines, marking its emergence as a world power.