Small and Medium Enterprises (SMEs): Offering micro and small enterprise loans to support entrepreneurship and job creation.

Large Corporations: Including partnerships with entities like Lemi National Cement PLC, indicating a focus on supporting larger economic projects.

Agricultural Sector: Providing loans for energy supply technologies and agricultural inputs.

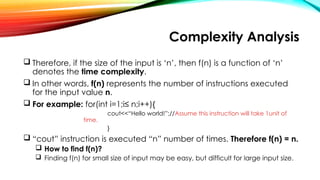

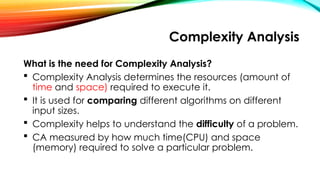

![Complexity Analysis

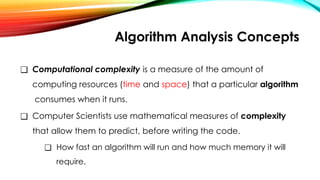

Different types of Complexity exist:

1. Constant Complexity, Lookup an arbitrary index , A[0], A[6], A[99],…

2. Logarithmic Complexity, binary search

3. Linear Complexity, linear search

4. Quadratic Complexity, Square NxN Matrix

5. Polynomial Complexity, triple loop

6. Exponential Complexity, Tower of Hanoi

7. Factorial Complexity, Factorials of a number, Permutation.](https://image.slidesharecdn.com/chapter1introductiontodatastructuresandalgorithms2-250922082613-8eb360e6/85/CHAPTER-1-Introduction-to-Data-Structures-and-Algorithms-2-pptx-20-320.jpg)
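
The complexity classes listed above can be illustrated with a short Python sketch. This is a minimal illustration rather than material from the slides: every function name here (`constant_lookup`, `binary_search`, `linear_search`, `matrix_sum`, `count_triples`, `hanoi_moves`, `all_permutations`) is hypothetical and chosen only for this example.

```python
from itertools import permutations

# All names below are illustrative assumptions, not taken from the slides.


def constant_lookup(a, i):
    """O(1): indexing a list costs the same regardless of its length."""
    return a[i]


def binary_search(a, target):
    """O(log n): halve the sorted search range on every step."""
    lo, hi = 0, len(a) - 1
    while lo <= hi:
        mid = (lo + hi) // 2
        if a[mid] == target:
            return mid
        if a[mid] < target:
            lo = mid + 1
        else:
            hi = mid - 1
    return -1  # target not present


def linear_search(a, target):
    """O(n): inspect elements one by one until the target is found."""
    for i, value in enumerate(a):
        if value == target:
            return i
    return -1  # target not present


def matrix_sum(m):
    """O(n^2): visit every cell of an n x n matrix."""
    total = 0
    for row in m:
        for cell in row:
            total += cell
    return total


def count_triples(n):
    """O(n^3): the innermost statement of a triple loop runs n * n * n times."""
    count = 0
    for _ in range(n):
        for _ in range(n):
            for _ in range(n):
                count += 1
    return count


def hanoi_moves(n, source="A", target="C", spare="B"):
    """O(2^n): Tower of Hanoi needs 2^n - 1 moves for n disks."""
    if n == 0:
        return []
    return (hanoi_moves(n - 1, source, spare, target)
            + [(source, target)]
            + hanoi_moves(n - 1, spare, target, source))


def all_permutations(items):
    """O(n!): there are n! orderings of n distinct items."""
    return list(permutations(items))
```

For example, `len(hanoi_moves(3))` is 7 (that is, 2^3 - 1) and `len(all_permutations([1, 2, 3]))` is 6 (that is, 3!). Adding one more disk roughly doubles the Tower of Hanoi work, while adding one more item multiplies the number of permutations by the new item count.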