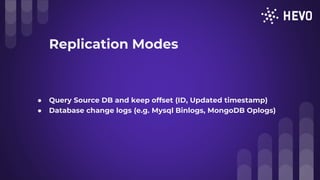

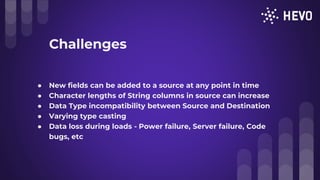

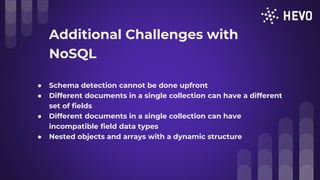

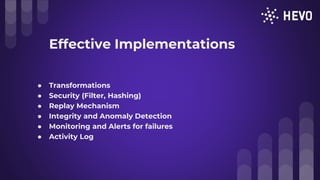

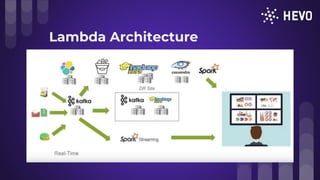

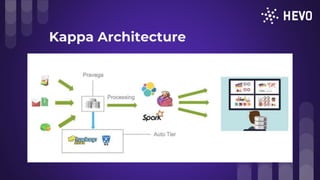

The document discusses the challenges of building a data pipeline: it must be highly scalable and highly available, deliver low latency with zero data loss, and support multiple data sources. It covers the differing expectations for real-time and batch processing and explores stream and batch architectures built with tools such as Apache Storm, Apache Spark, and Apache Kafka. It also examines the challenges of data replication, schema detection, and transformations when working with NoSQL stores. Effective implementations should include monitoring, security, and replay mechanisms. Finally, the lambda and kappa architectures for combining stream and batch processing are presented.
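To make the difference between the two architectures concrete, here is a minimal in-memory sketch in Python. It is not taken from the slides: the event log, the function names, and the `batch_cutoff` and `from_offset` parameters are all hypothetical, and a plain list stands in for a durable log such as a Kafka topic. Lambda merges a batch view with a speed view at query time, while kappa keeps a single streaming code path and reprocesses by replaying the log.

```python
from collections import Counter

# Hypothetical event log: (offset, key) pairs standing in for a Kafka-like topic.
EVENT_LOG = [(0, "click"), (1, "view"), (2, "click"), (3, "view"), (4, "click")]


def count_events(events):
    """Count events per key; shared by the batch and speed layers."""
    return Counter(key for _, key in events)


def lambda_query(log, batch_cutoff):
    """Lambda sketch: merge a batch view with a speed view at query time.

    Events before batch_cutoff are assumed to be covered by the last batch run;
    events at or after it are covered only by the speed (streaming) layer.
    """
    batch_view = count_events(e for e in log if e[0] < batch_cutoff)
    speed_view = count_events(e for e in log if e[0] >= batch_cutoff)
    return batch_view + speed_view


def kappa_query(log, from_offset=0):
    """Kappa sketch: one streaming code path; reprocessing is a replay from an offset."""
    view = Counter()
    for offset, key in log:
        if offset >= from_offset:
            view[key] += 1
    return view


lambda_result = lambda_query(EVENT_LOG, batch_cutoff=3)
kappa_result = kappa_query(EVENT_LOG)
```

In this toy setup both queries return the same counts. The practical trade-off is that lambda maintains two code paths (batch and speed), whereas kappa relies on the log being retained long enough to replay, which is also what a replay mechanism needs.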