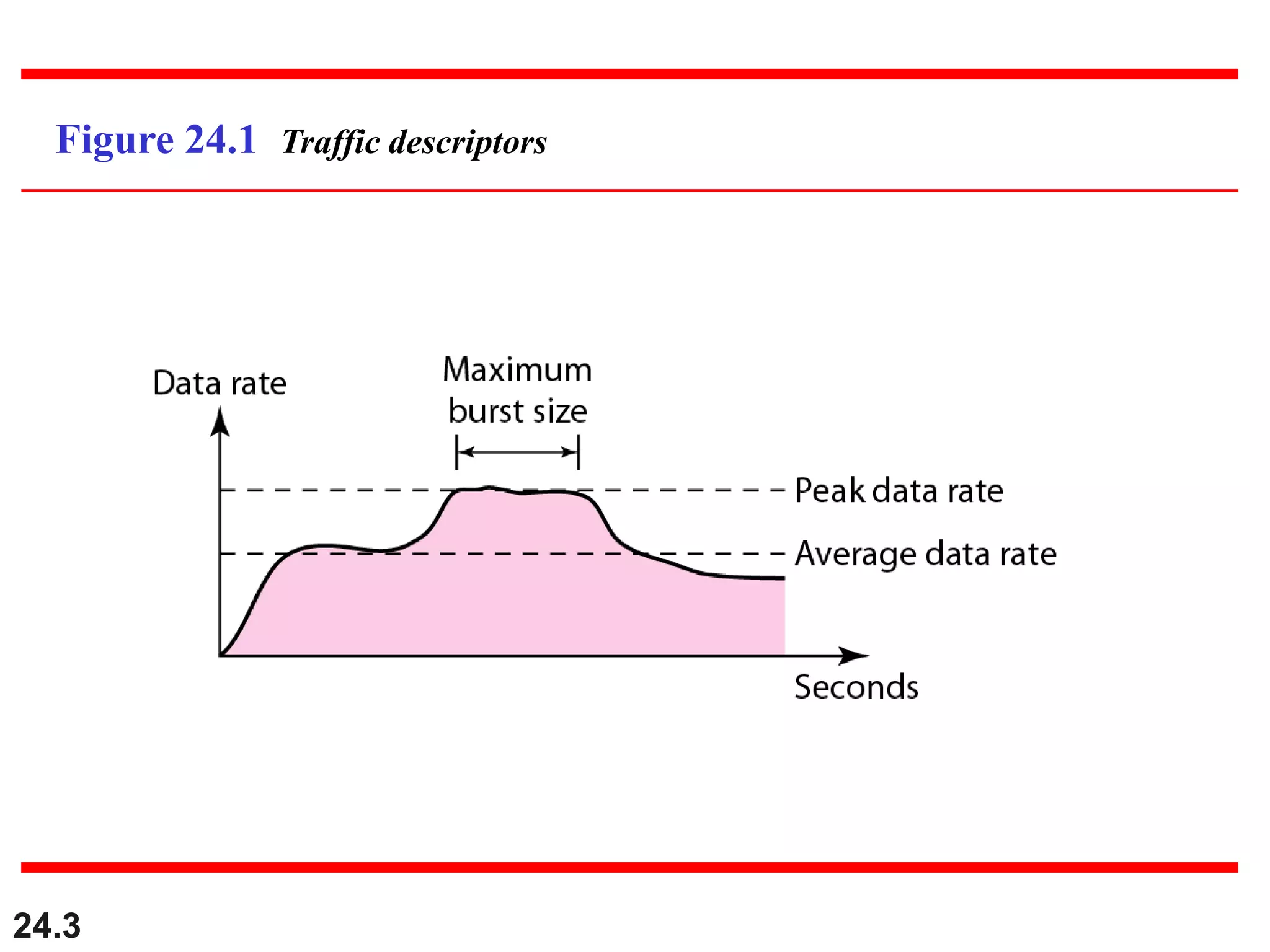

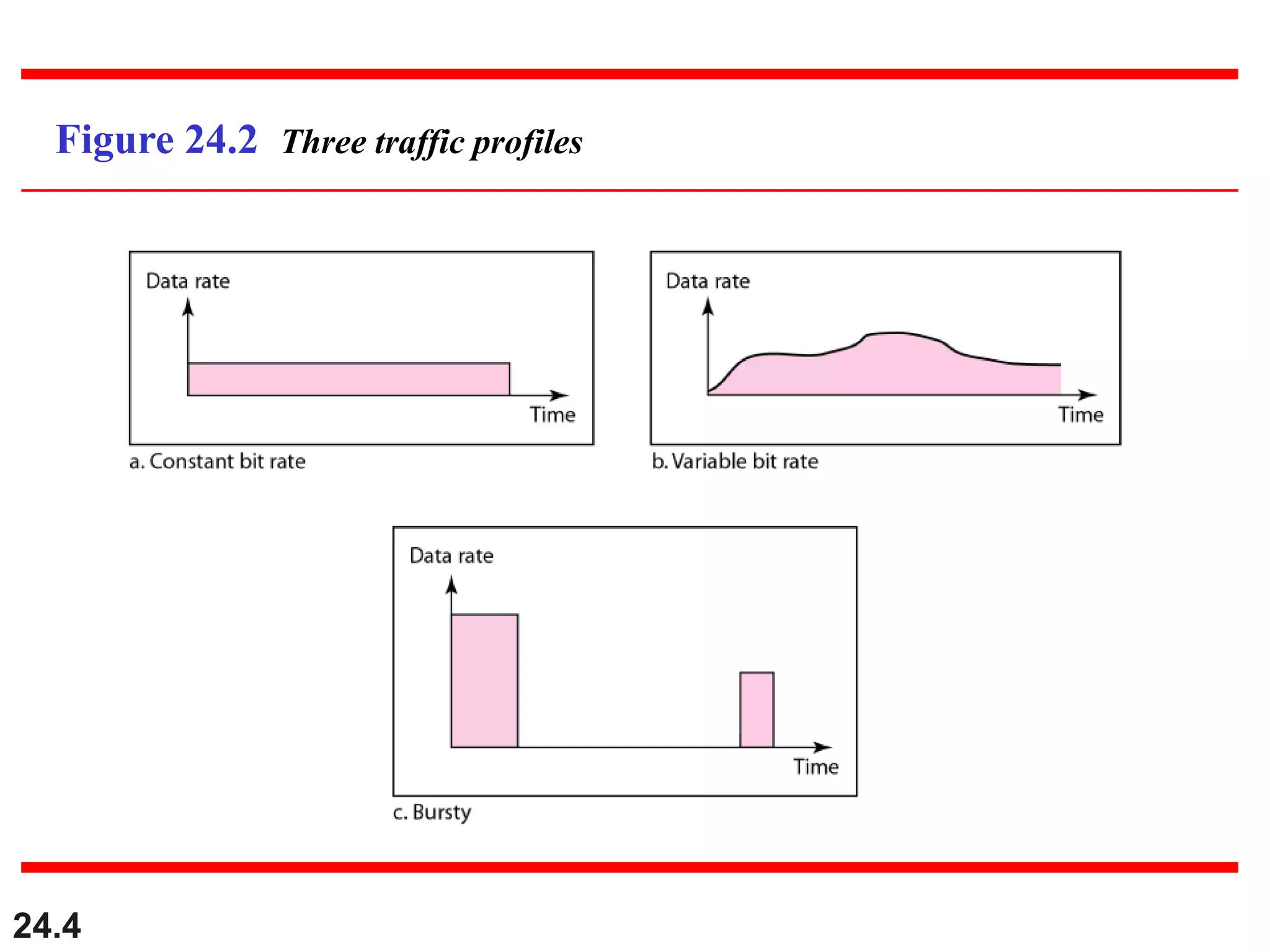

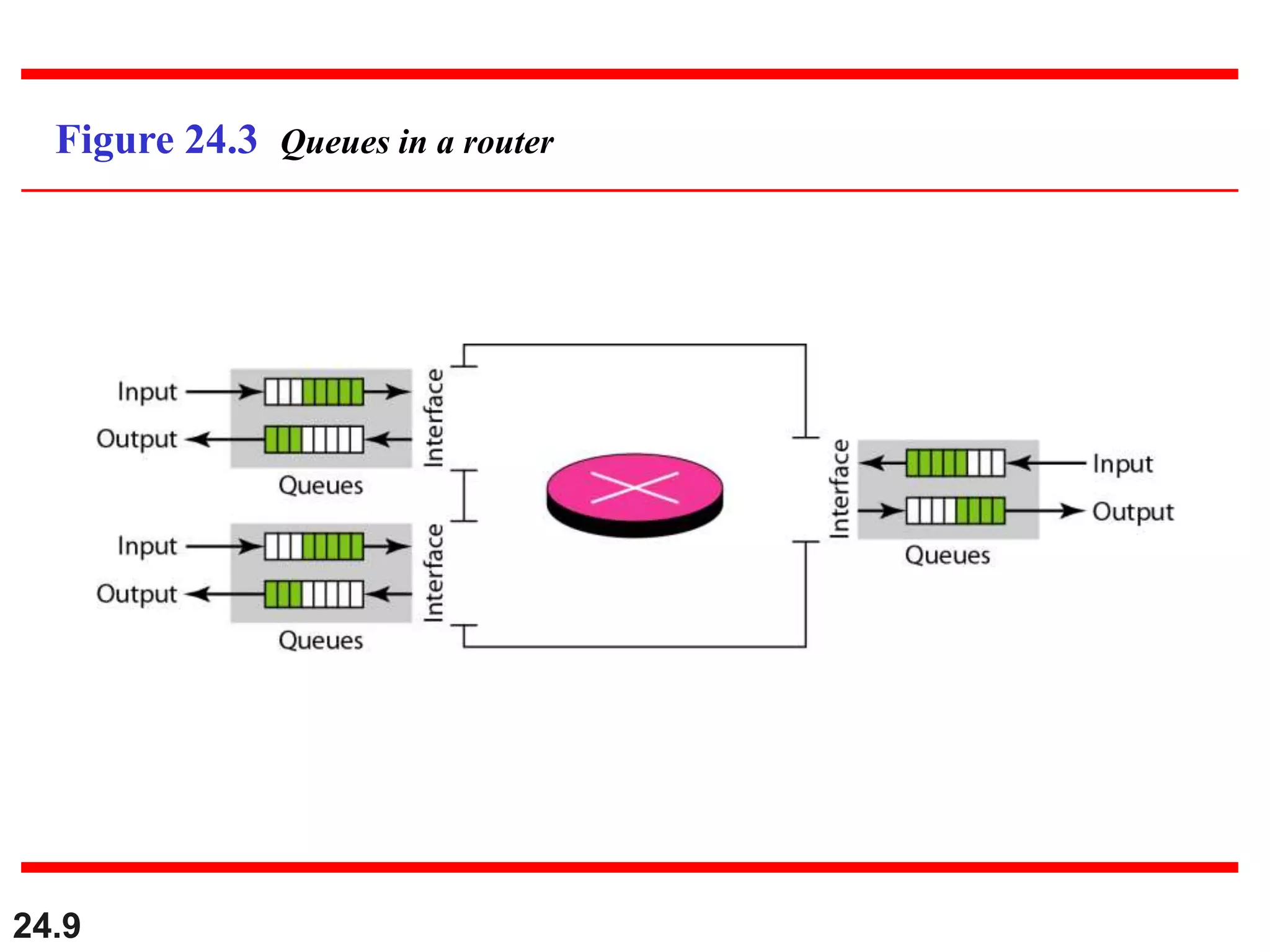

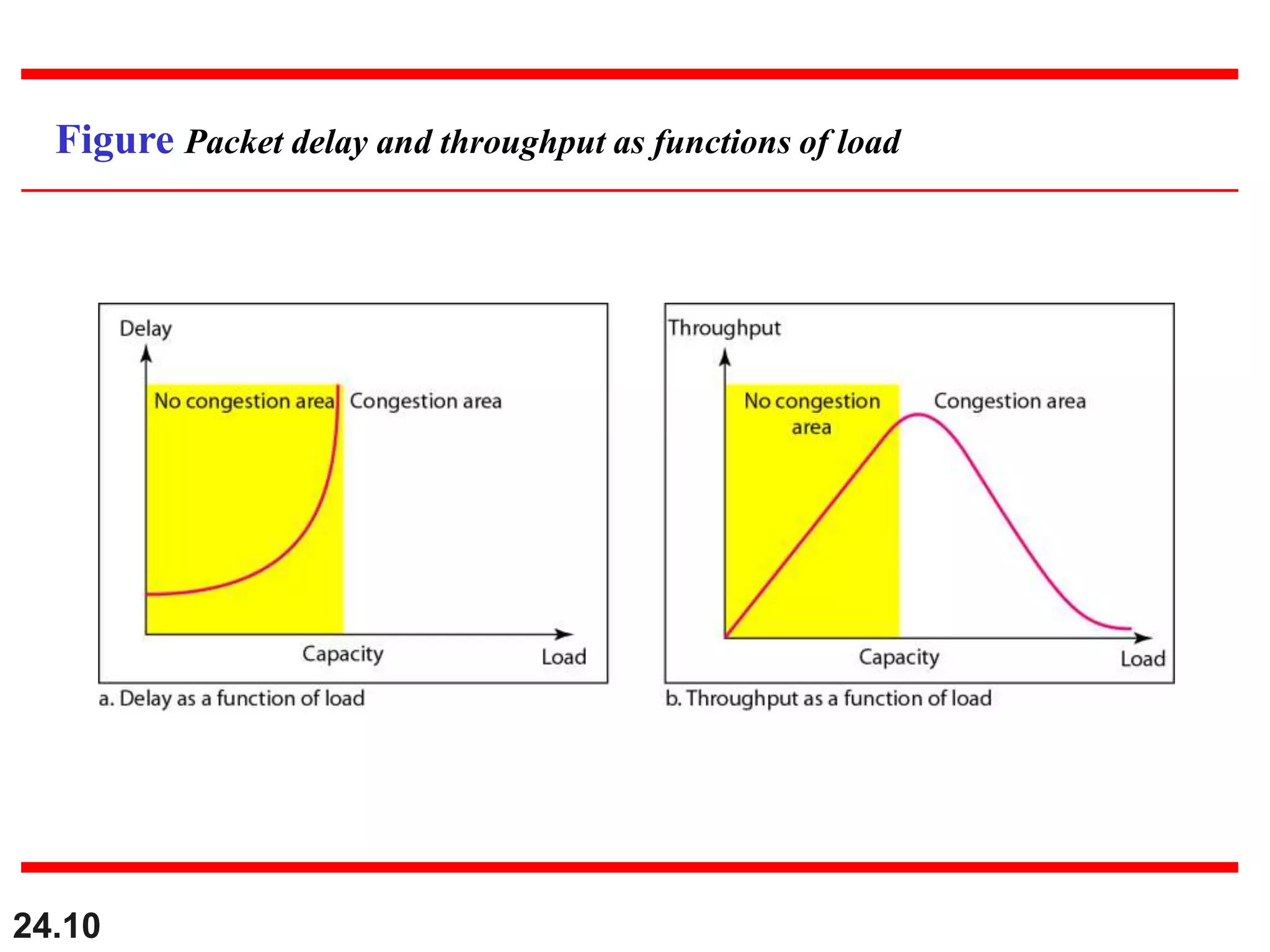

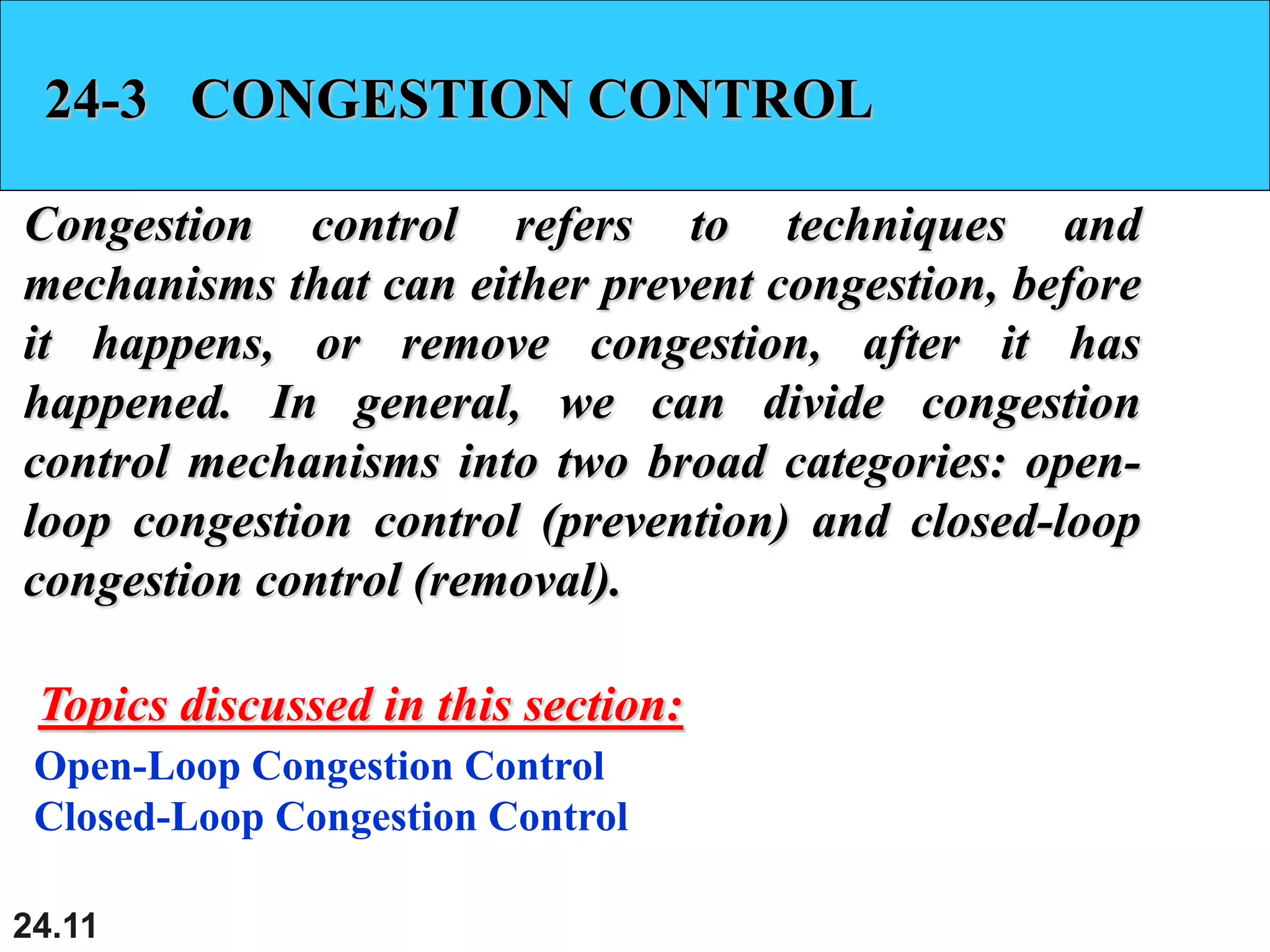

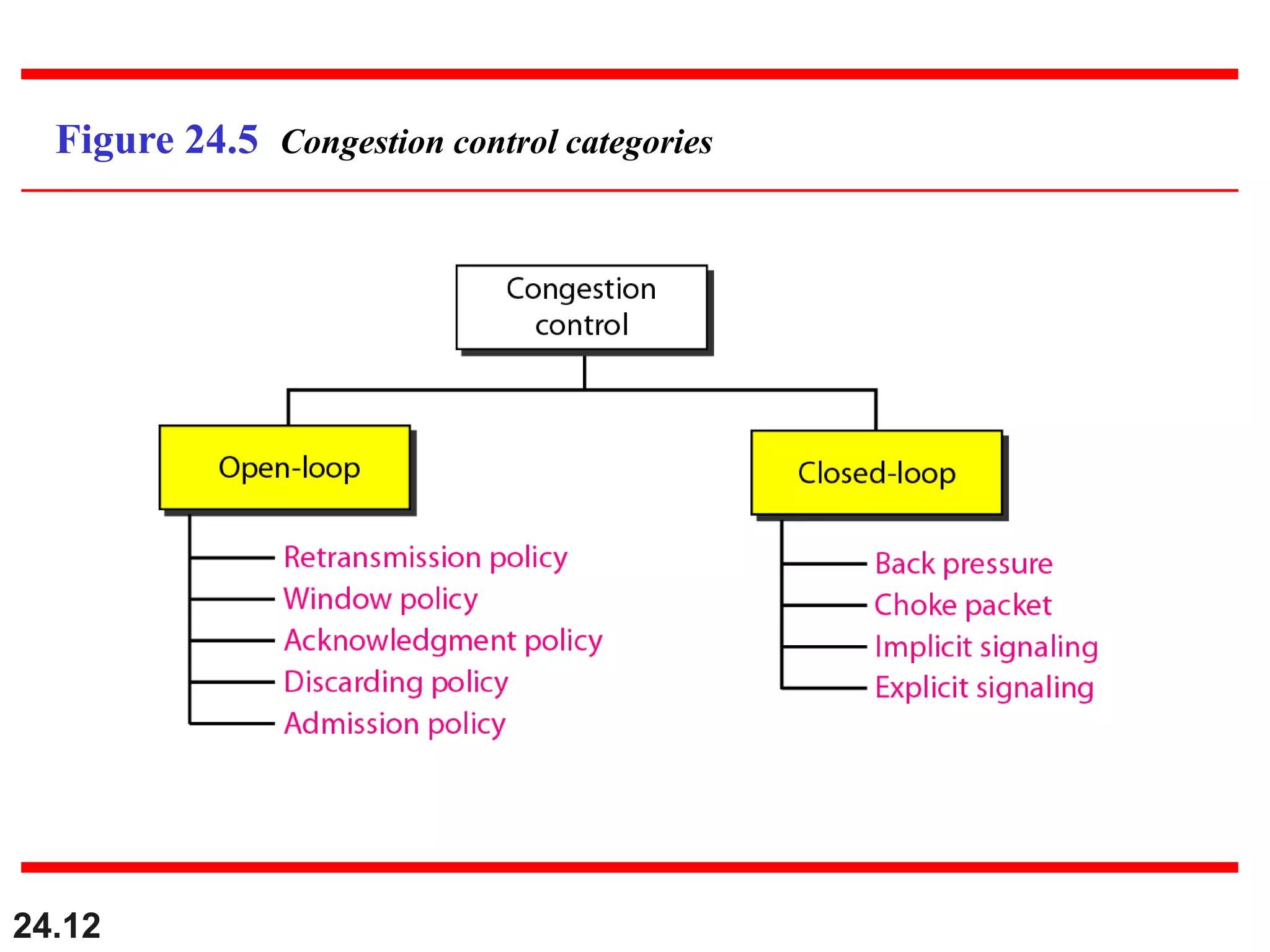

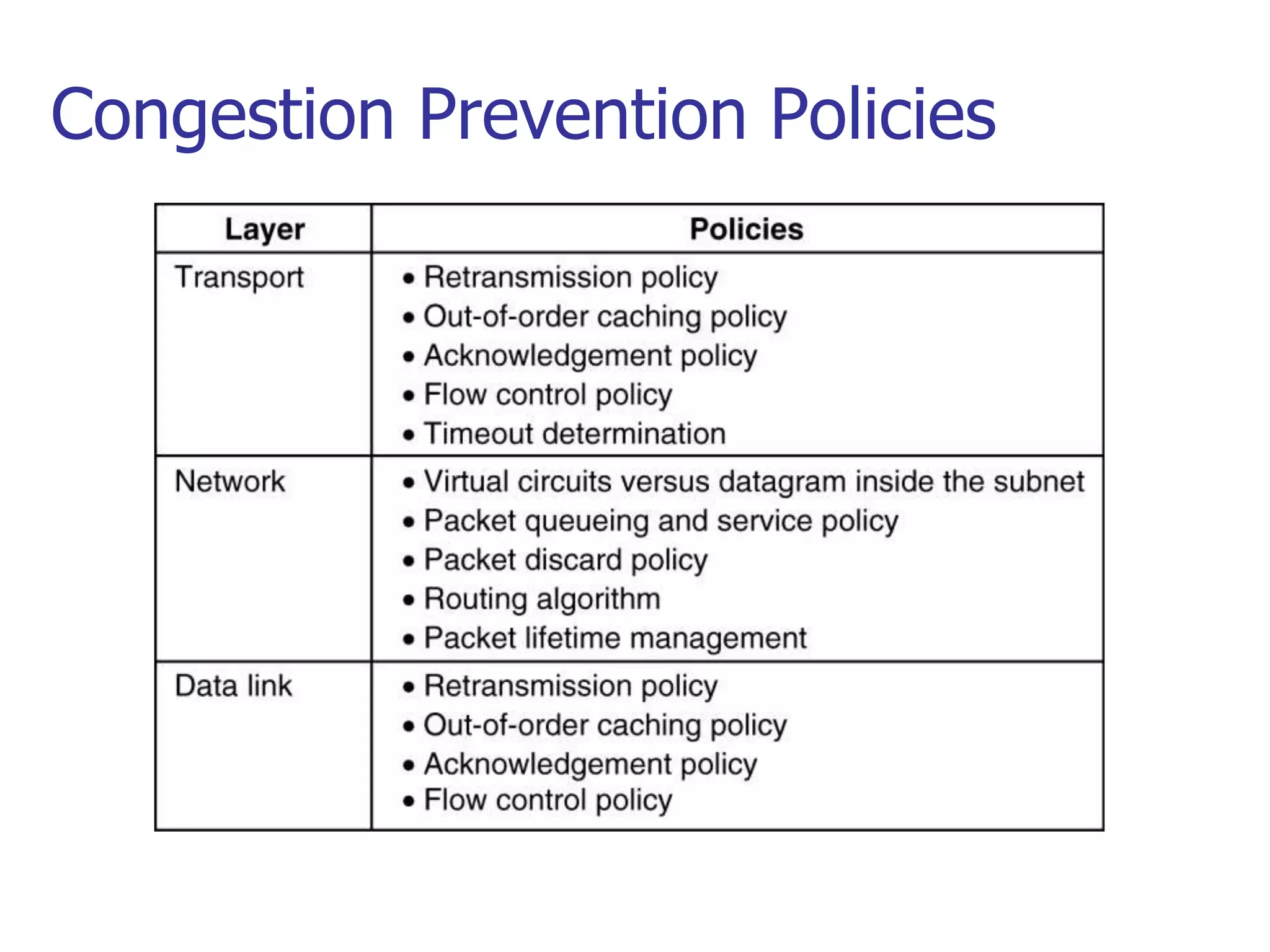

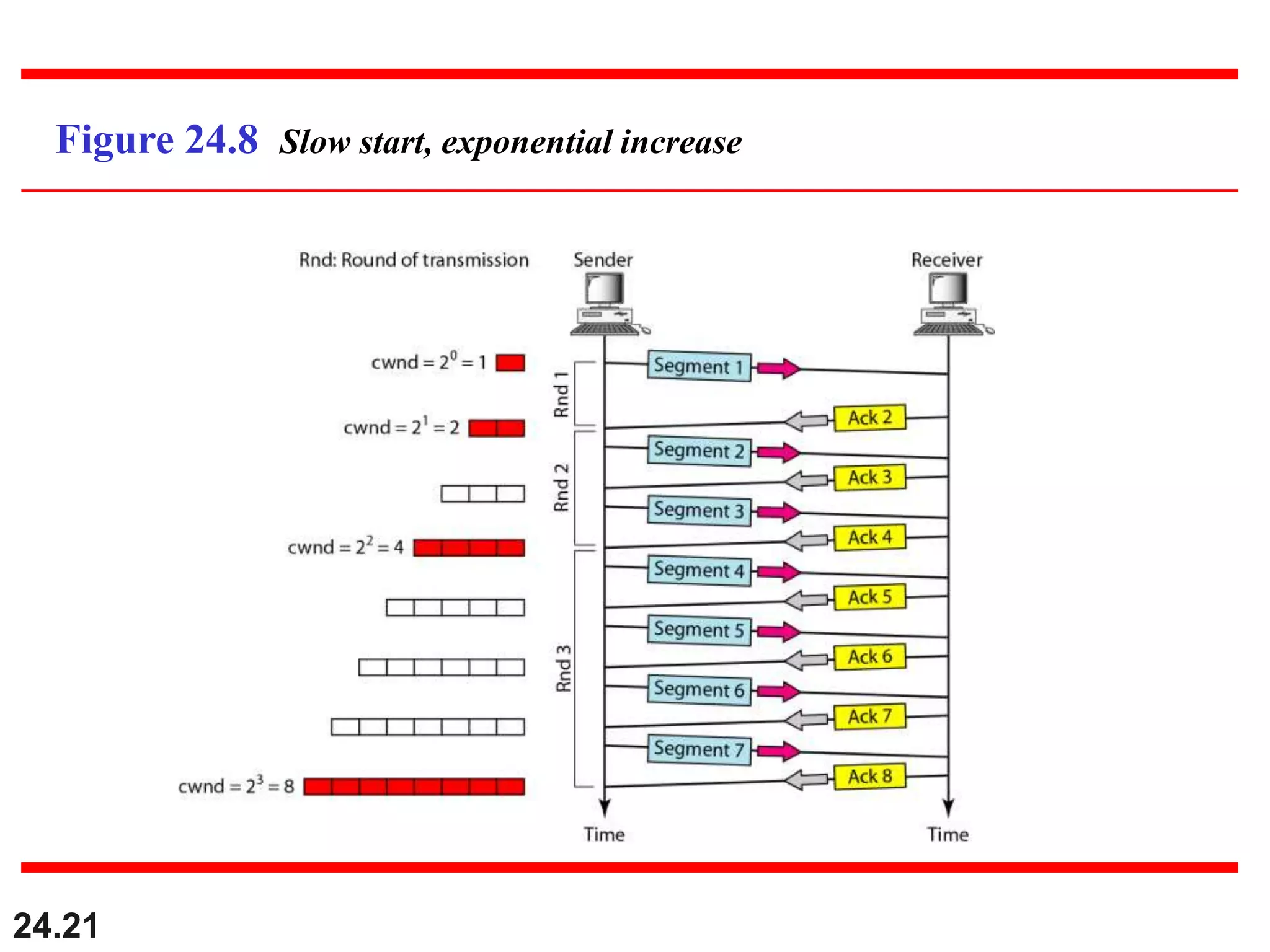

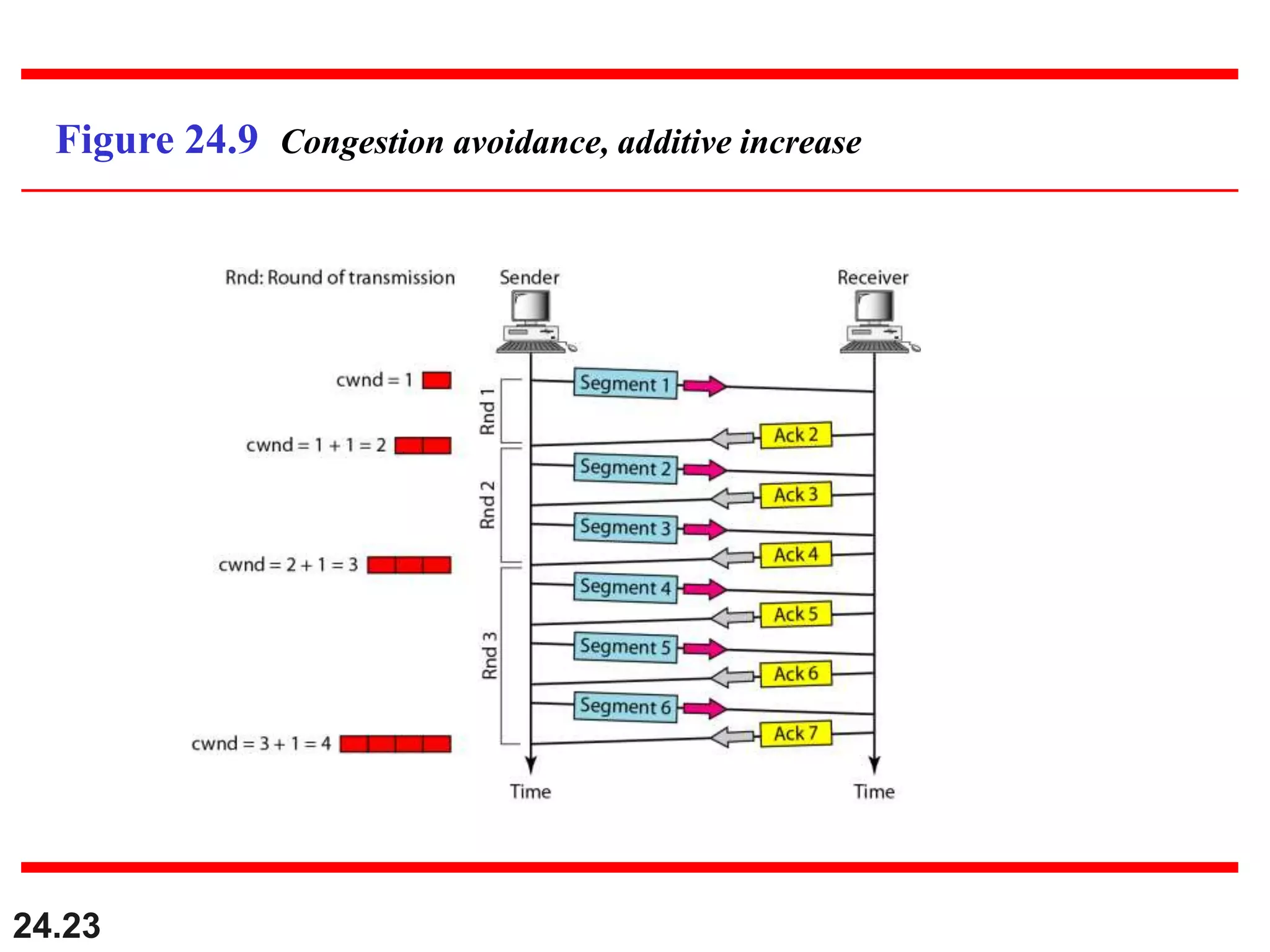

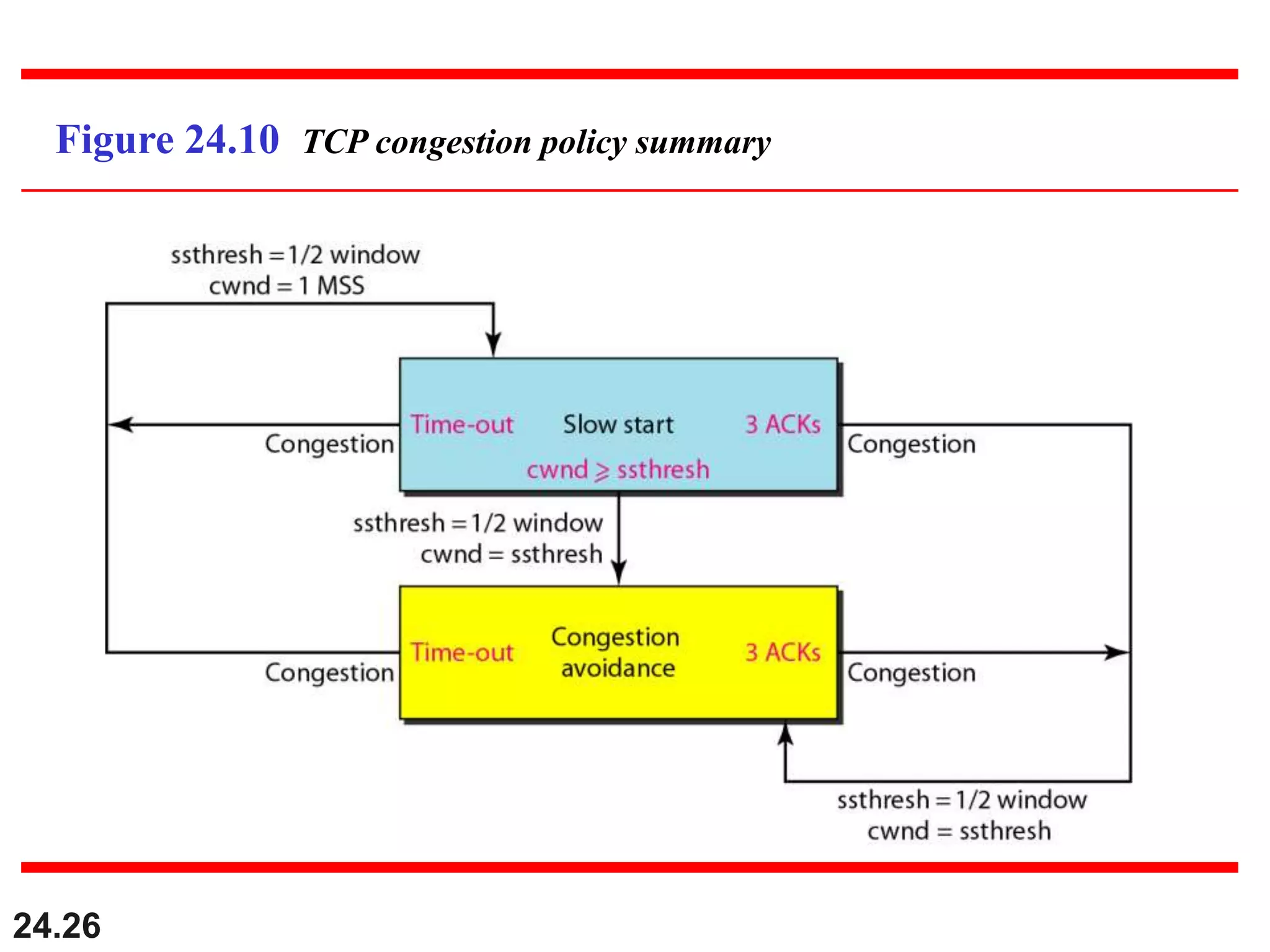

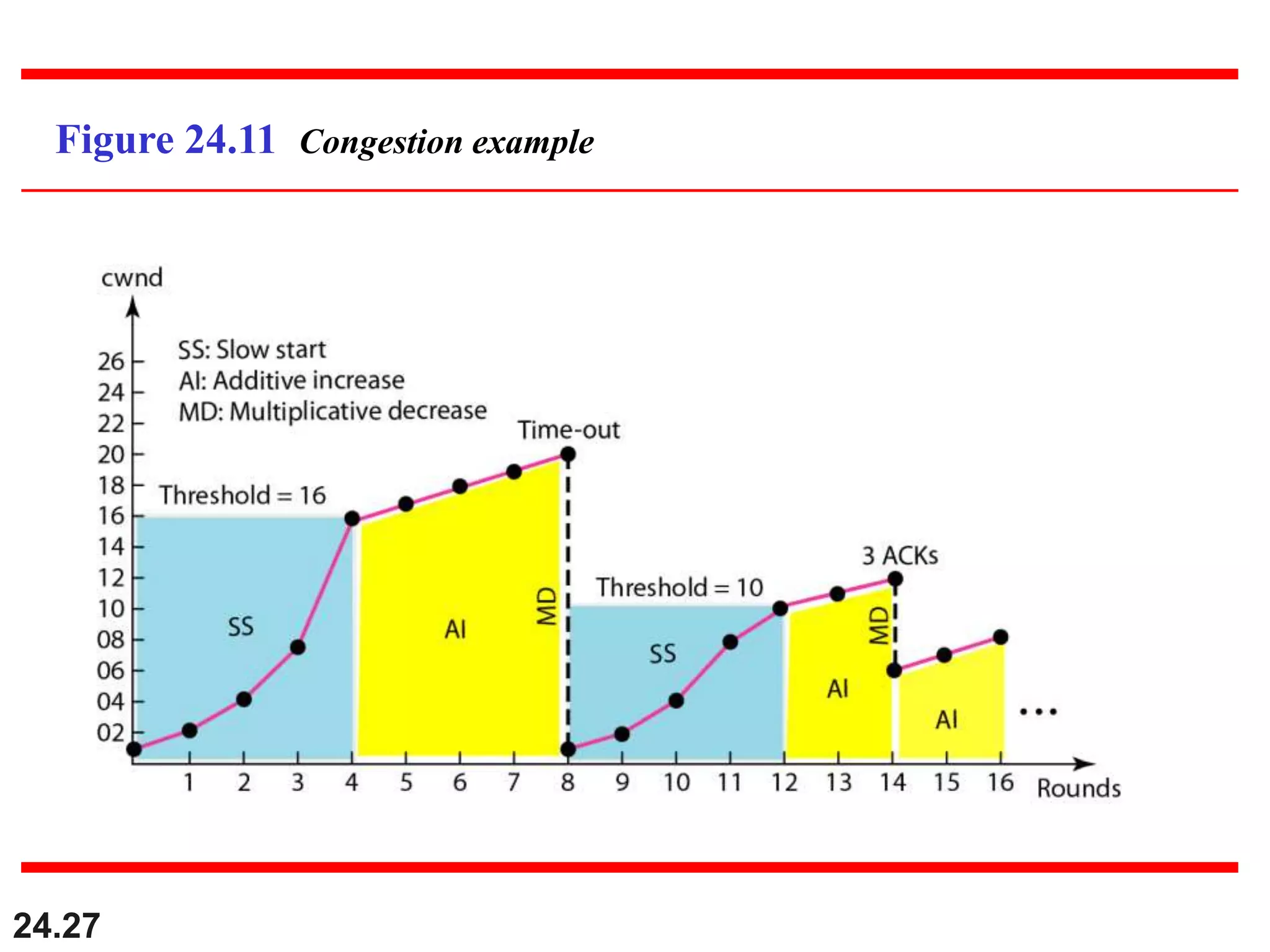

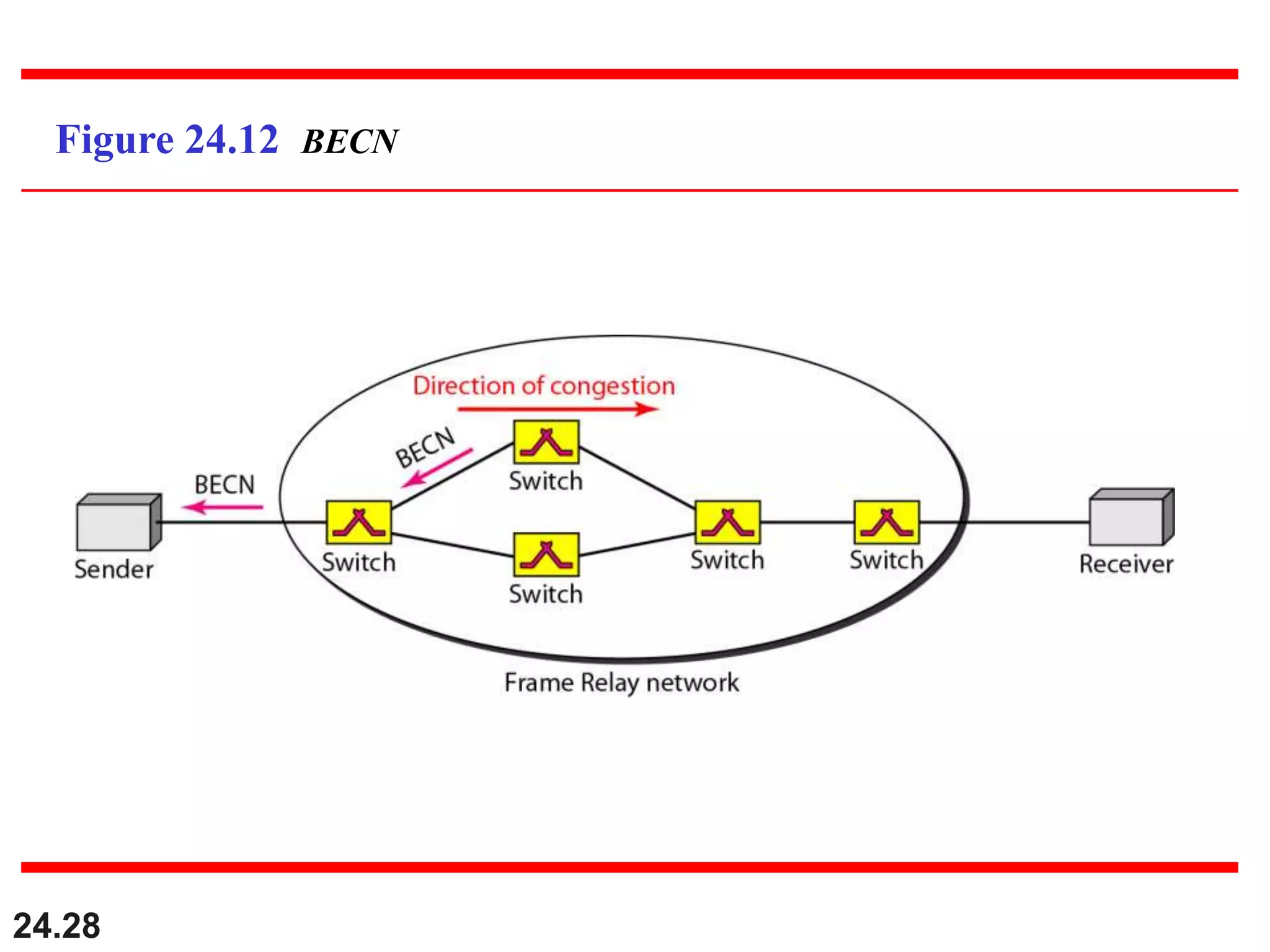

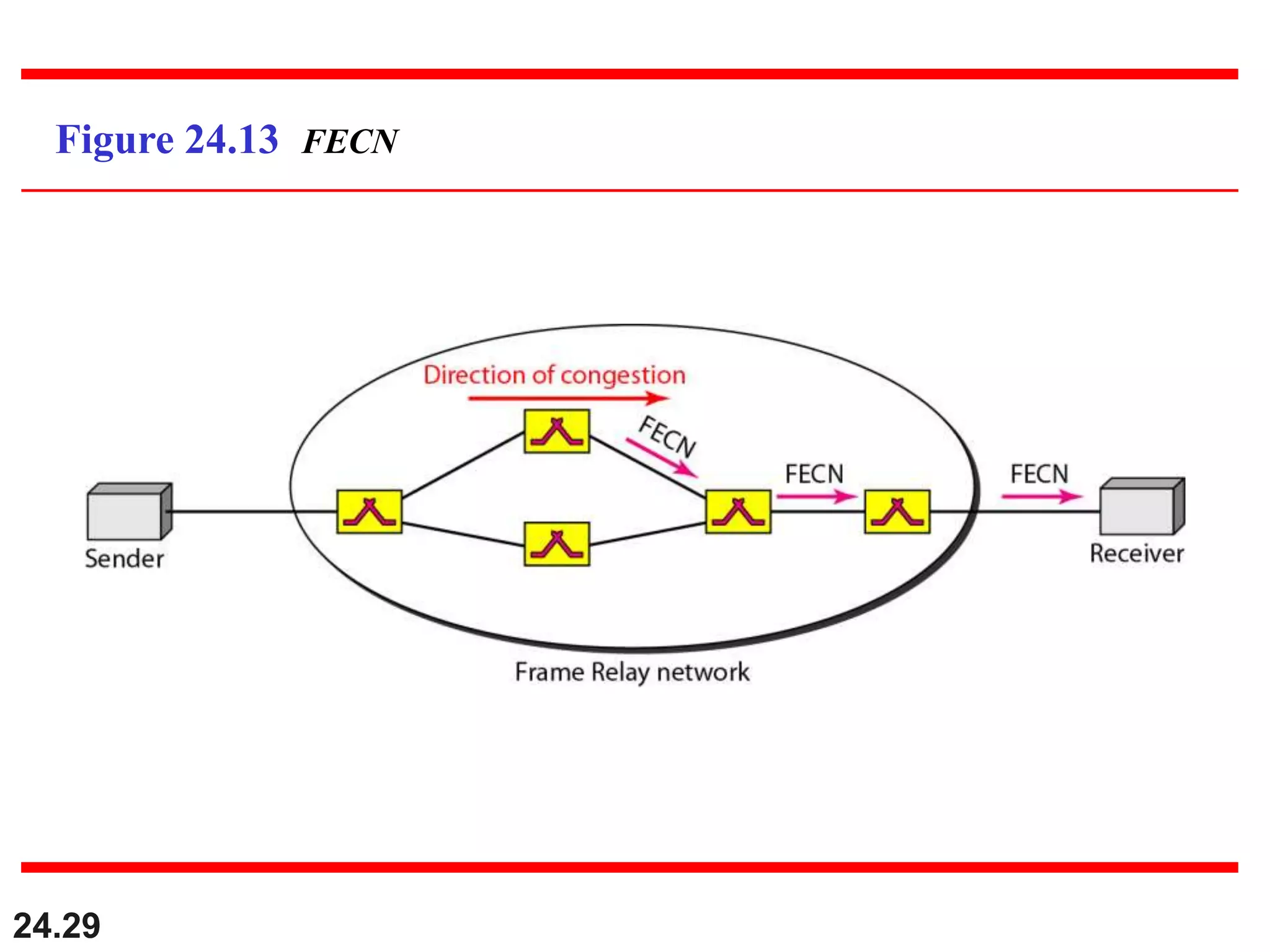

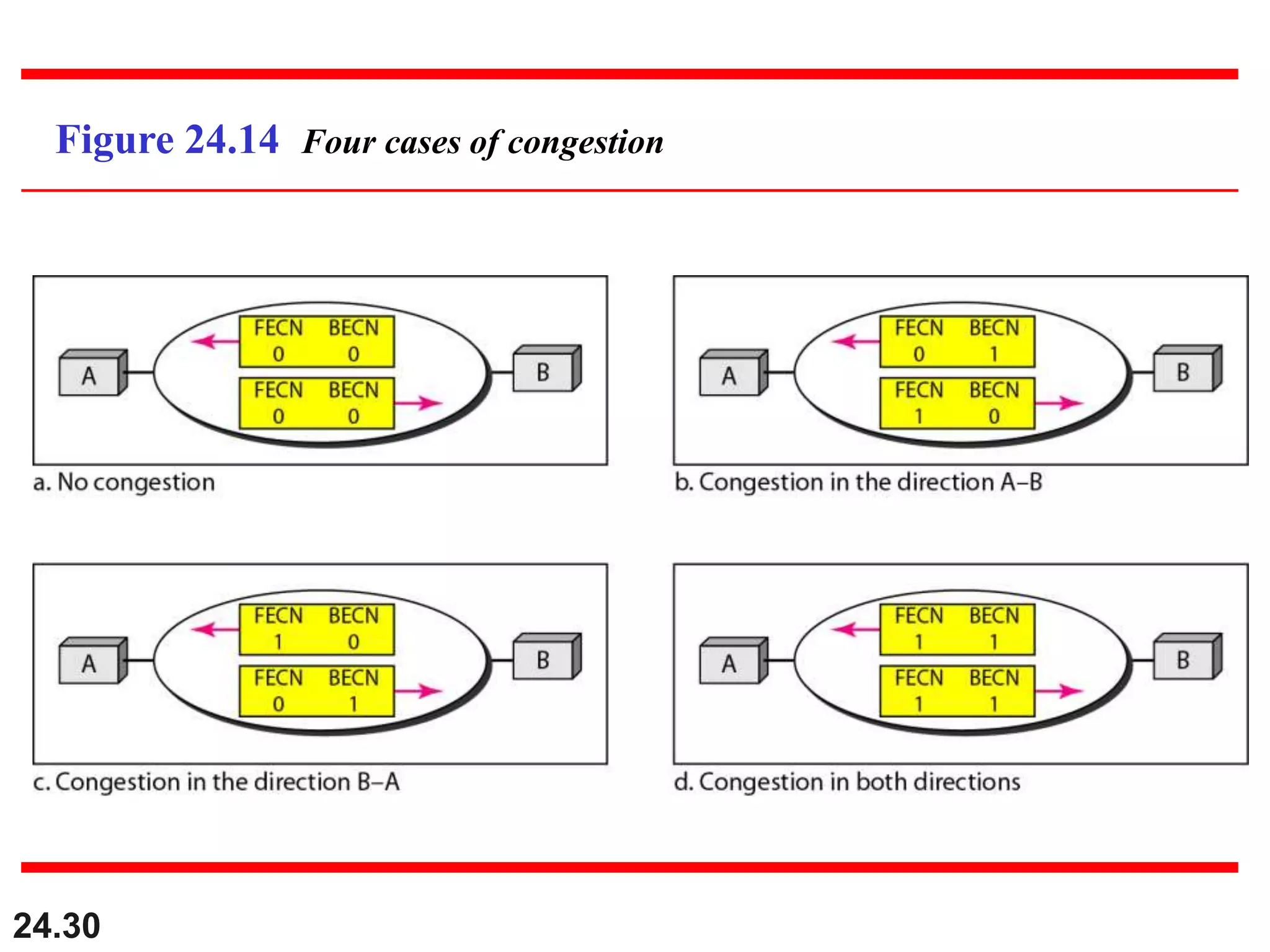

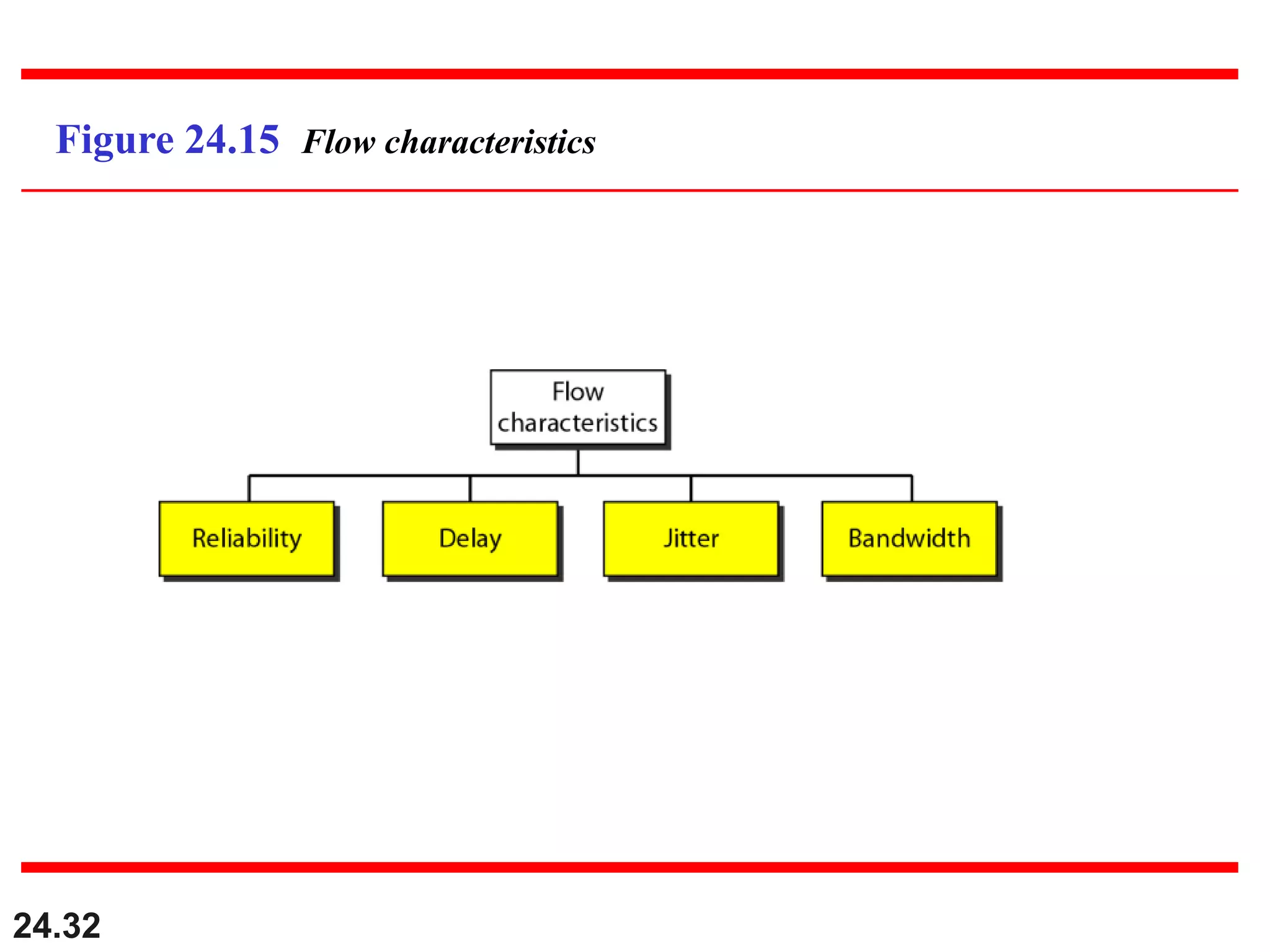

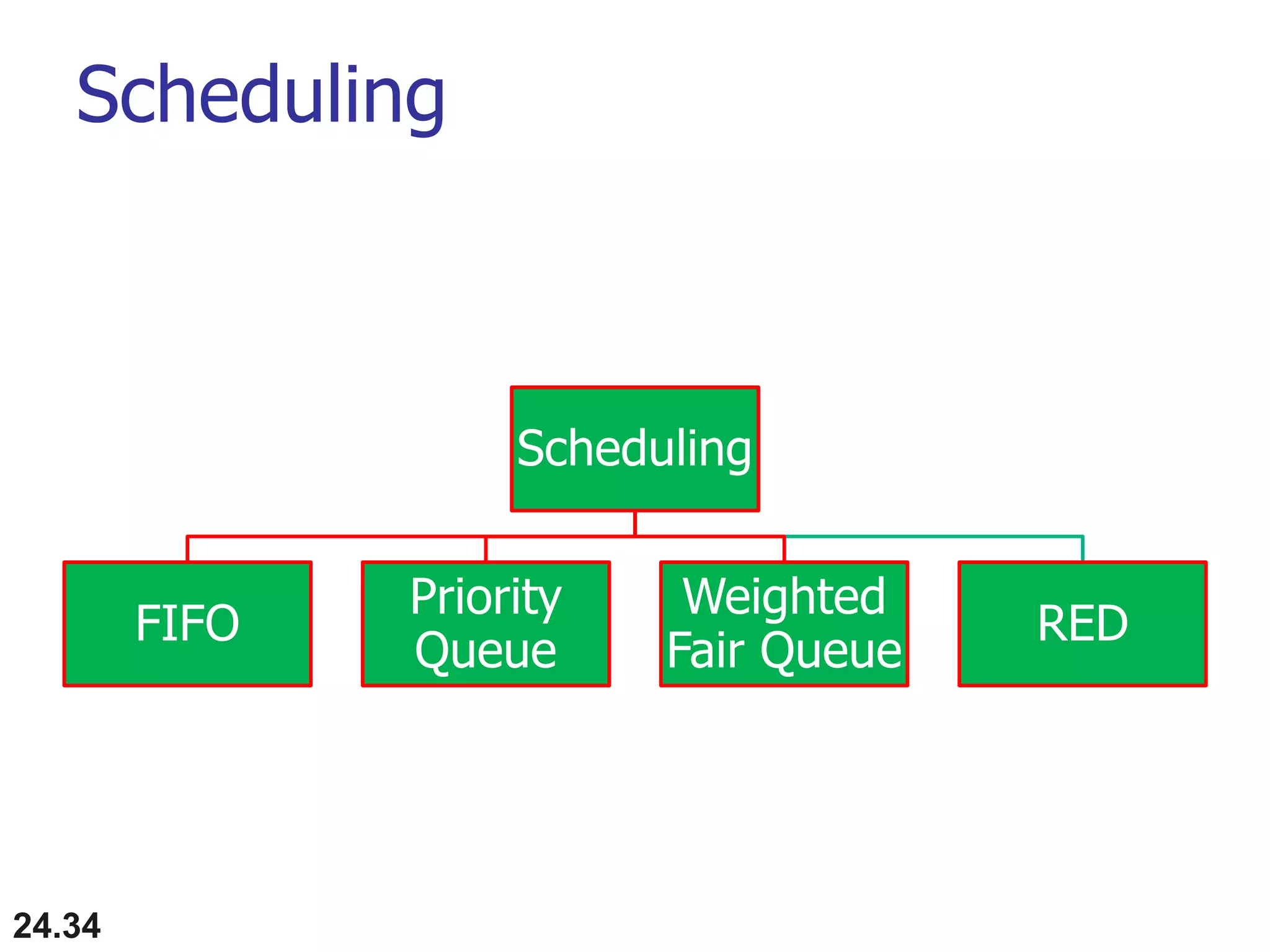

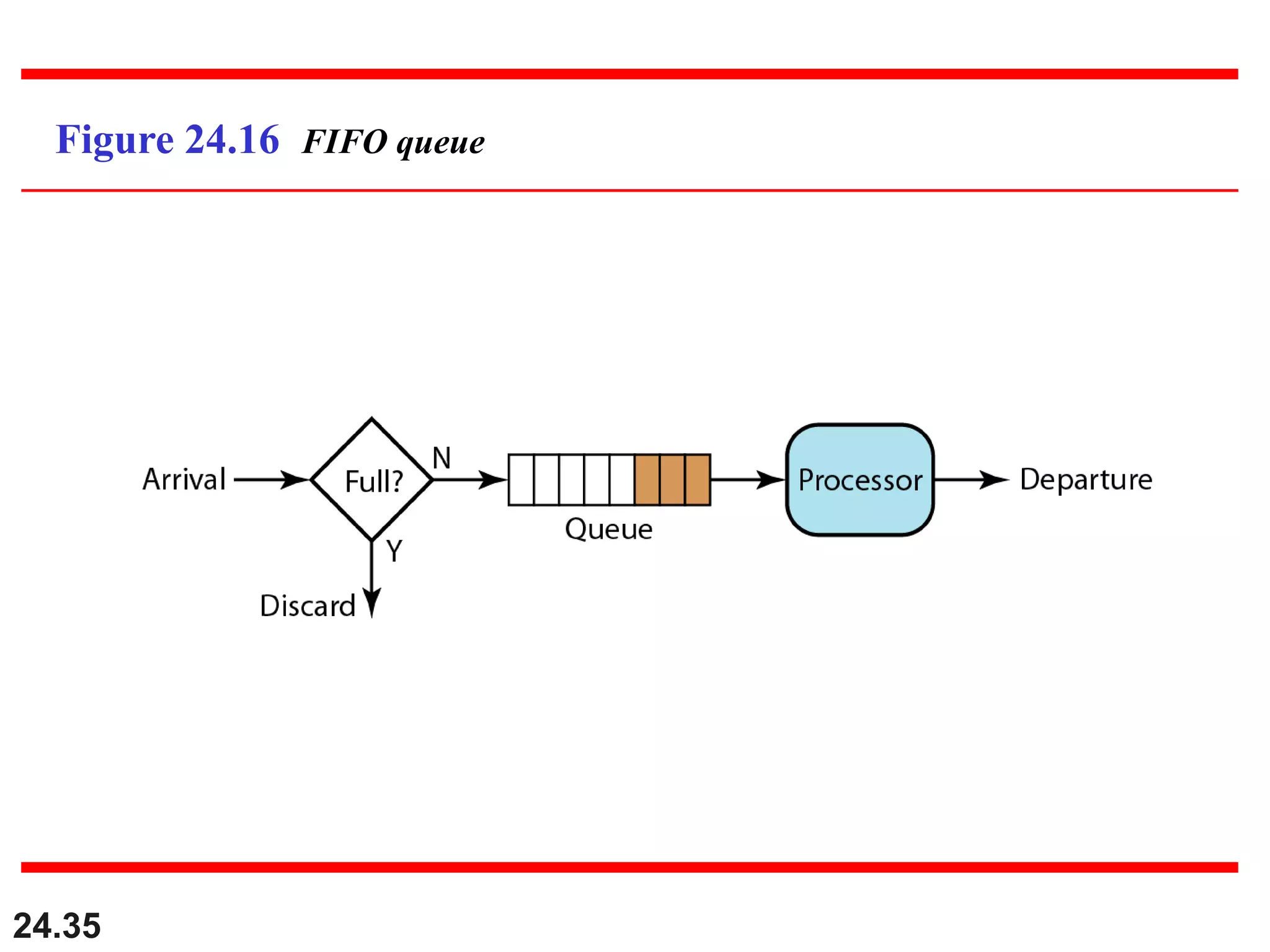

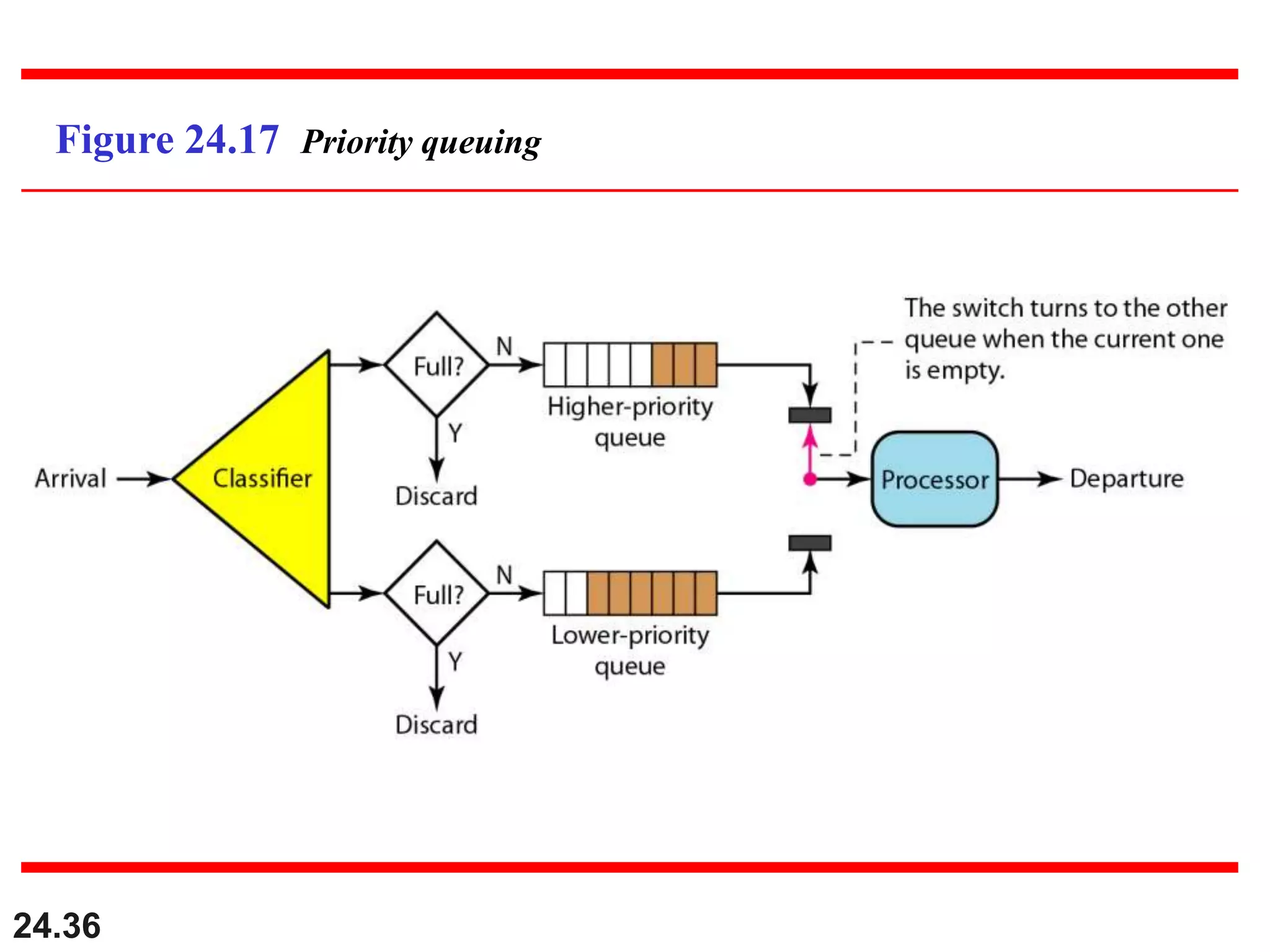

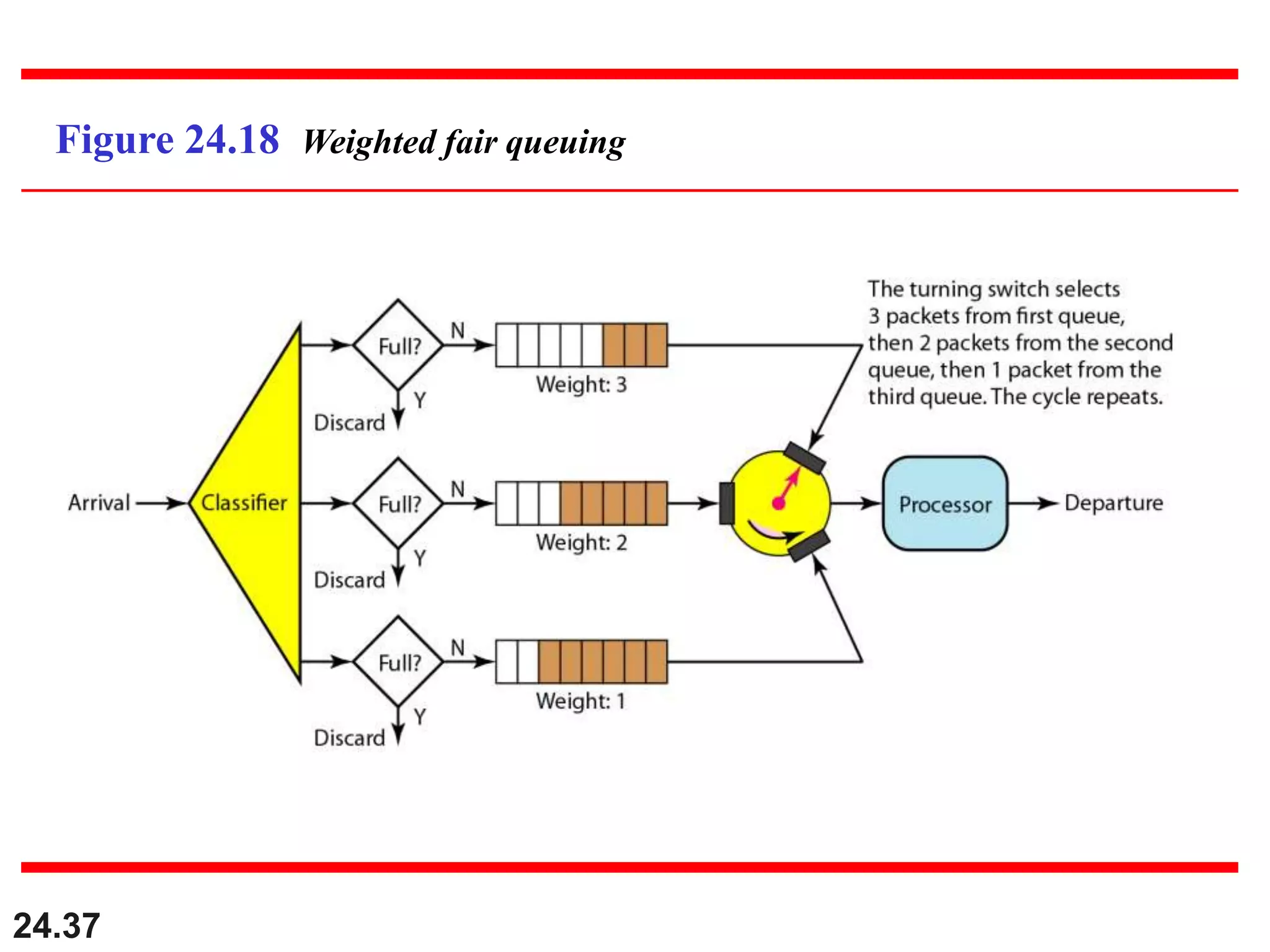

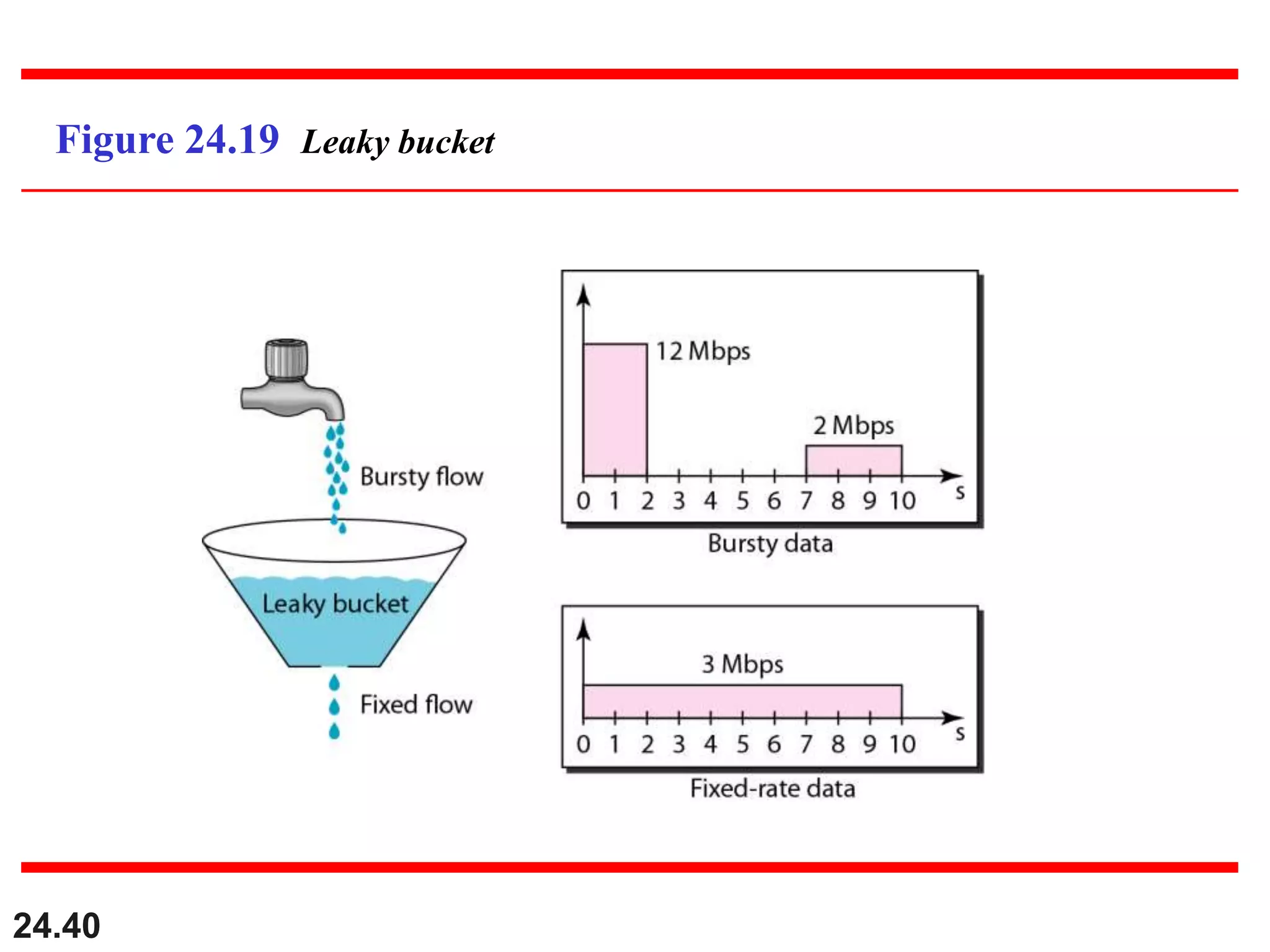

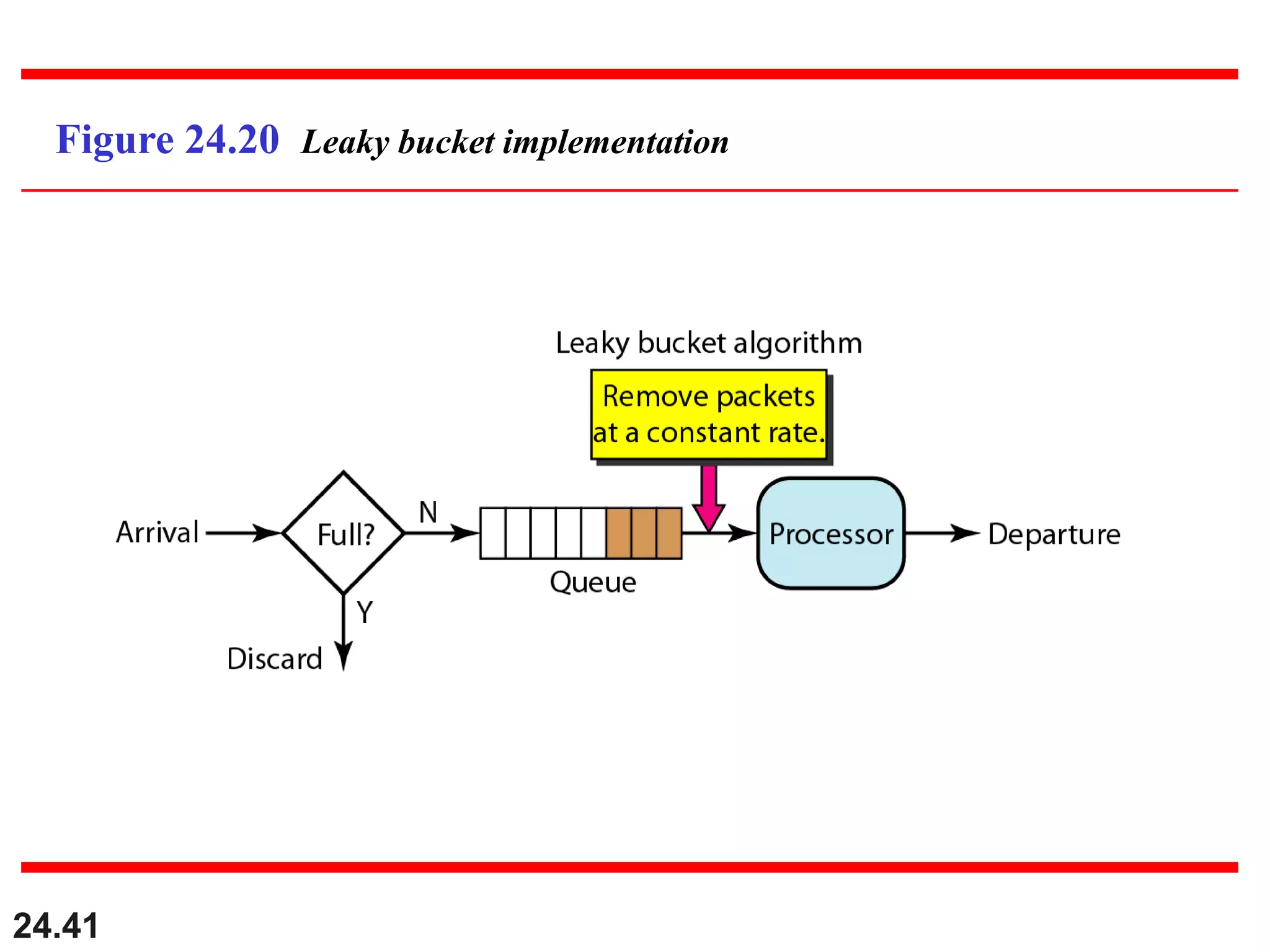

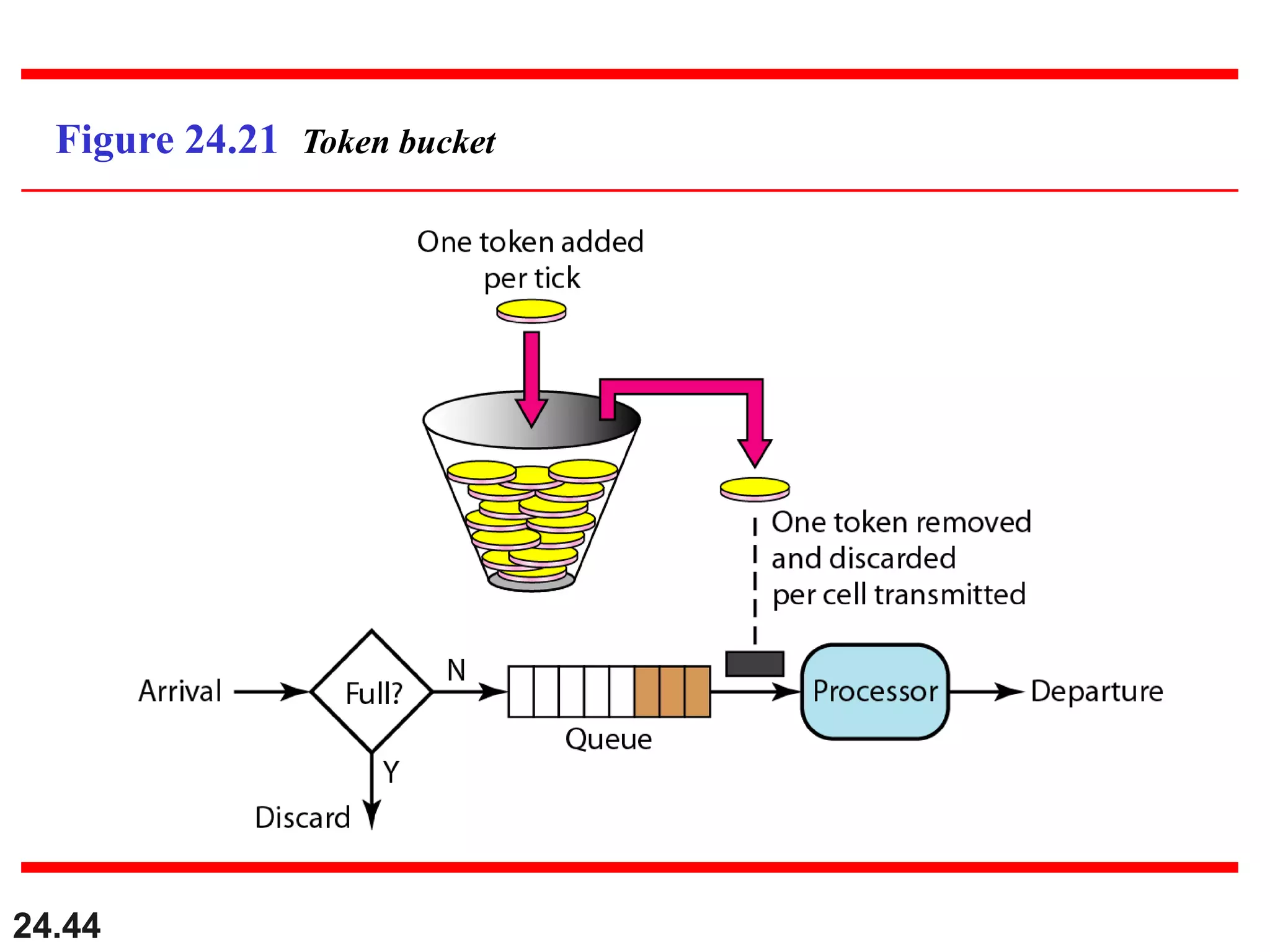

This document gives an overview of congestion control and quality of service (QoS) techniques. It begins by defining data traffic and describing different traffic profiles. It then discusses congestion: what causes it, and the two broad approaches to controlling it, open-loop control (prevention) and closed-loop control (removal). Two examples of congestion control are described, one in TCP and one in Frame Relay. Finally, it introduces quality of service and outlines techniques for improving it, including scheduling, traffic shaping, resource reservation, and admission control.
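
To make traffic shaping concrete, here is a minimal sketch of a token bucket, one common shaping technique. The summary does not say which shaping algorithms the slides cover, so the choice of a token bucket is an assumption. The class name, parameters, and one-token-per-packet cost are hypothetical, chosen only for illustration. Time is passed in explicitly so the behavior is easy to follow.

```python
from dataclasses import dataclass


@dataclass
class TokenBucket:
    """Illustrative token bucket; names and units are assumptions."""
    rate: float          # tokens added per second
    capacity: float      # maximum number of tokens (the allowed burst size)
    tokens: float = 0.0  # tokens currently available
    last: float = 0.0    # time of the last refill, in seconds

    def allow(self, now: float, cost: float = 1.0) -> bool:
        """Refill for the elapsed time, then admit the packet if tokens suffice."""
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


# Start with a full bucket: bursts of up to 3 packets, refilled at 2 tokens/s.
bucket = TokenBucket(rate=2.0, capacity=3.0, tokens=3.0)

# A burst of five packets at t=0: only the first three find a token.
burst = [bucket.allow(now=0.0) for _ in range(5)]

# One second later, two tokens have been refilled, so two of three packets pass.
later = [bucket.allow(now=1.0) for _ in range(3)]

print(burst)  # [True, True, True, False, False]
print(later)  # [True, True, False]
```

The bucket allows short bursts up to `capacity` while holding the long-run average to `rate`. A real shaper would typically queue the rejected packets rather than drop them; this sketch only reports the admit/reject decision.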