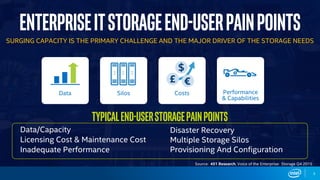

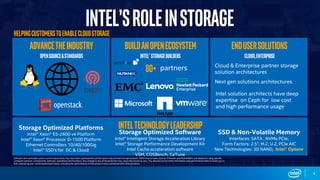

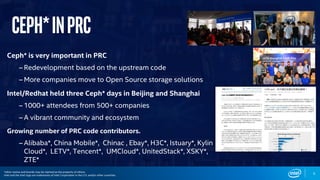

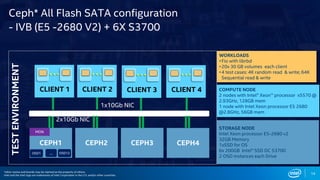

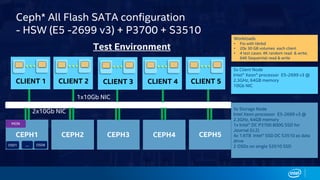

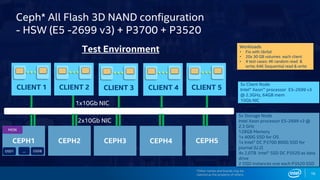

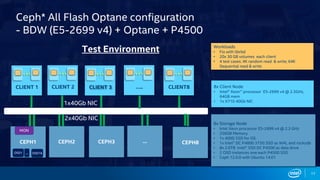

The document discusses trends in data growth and storage technologies that are driving the need for storage modernization. It outlines Intel's role in advancing the storage industry through open source technologies and standards. Specifically, it focuses on Intel's work optimizing Ceph for Intel platforms, including performance profiling, enabling Intel-optimized solutions, and end-customer proofs of concept that use Ceph with Intel SSDs, Optane, and Intel platforms.