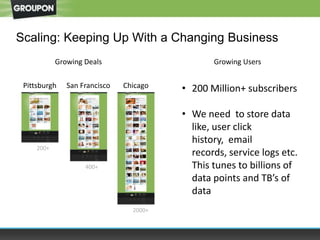

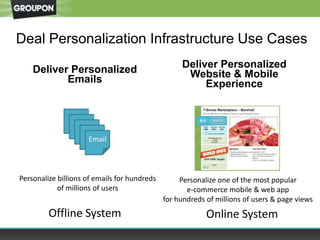

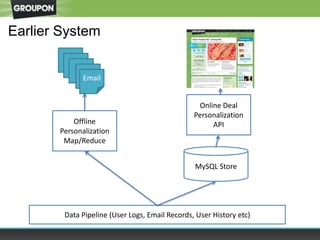

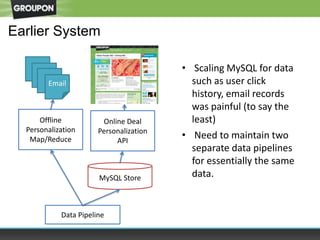

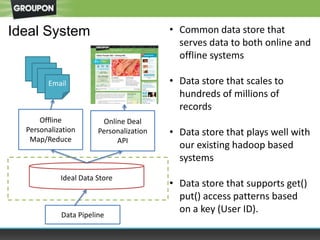

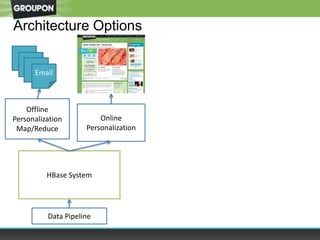

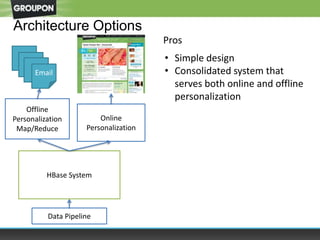

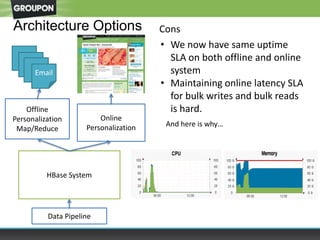

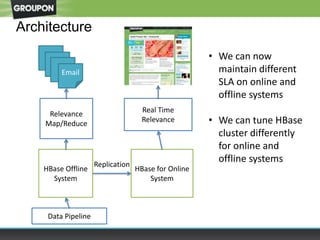

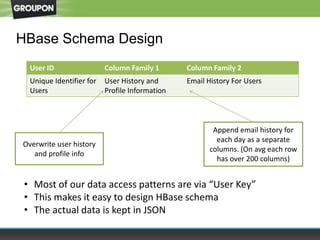

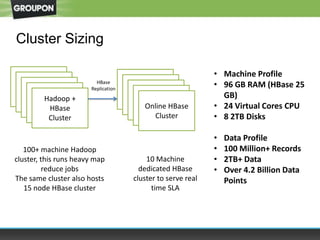

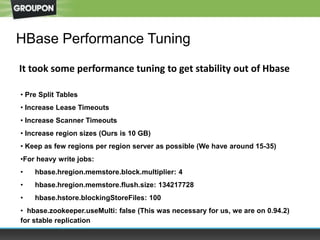

Groupon uses HBase as the data store for both its online and offline personalization systems. Previously, these systems ran on separate MySQL and MapReduce infrastructures. Moving to HBase let Groupon consolidate them into a single system and simplify the architecture. The HBase schema is keyed on user ID, so user profile and activity data are accessed by user. Serving both low-latency online requests and offline batch jobs at Groupon's scale, with hundreds of millions of users and terabytes of data, requires performance tuning of the HBase clusters.
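To make the user-ID-keyed schema more concrete, here is a minimal sketch in plain Python. It uses an in-memory dictionary as a stand-in for an HBase table and does not call any HBase client. The column family names `profile` and `activity`, the qualifiers, and the `UserTable` class are assumptions for illustration; the source does not describe the actual schema beyond the user ID key.

```python
from collections import defaultdict

# Hypothetical column family names; the source does not name them.
PROFILE_CF = "profile"
ACTIVITY_CF = "activity"


def row_key(user_id: str) -> bytes:
    """Use the user ID as the row key so one lookup covers one user."""
    return user_id.encode("utf-8")


class UserTable:
    """In-memory stand-in for an HBase table: row key -> {column: value}."""

    def __init__(self):
        self._rows = defaultdict(dict)

    def put(self, user_id, family, qualifier, value):
        # Columns are addressed as "family:qualifier", as in HBase.
        column = f"{family}:{qualifier}"
        self._rows[row_key(user_id)][column] = value

    def get(self, user_id, family=None):
        # Return the whole row, or only the columns of one family.
        row = self._rows.get(row_key(user_id), {})
        if family is None:
            return dict(row)
        prefix = family + ":"
        return {c: v for c, v in row.items() if c.startswith(prefix)}


table = UserTable()
table.put("user-42", PROFILE_CF, "city", "Chicago")
table.put("user-42", ACTIVITY_CF, "last_deal_viewed", "deal-1001")
```

With this layout, an online request could fetch only the `profile` family for a single user, while a batch job could scan whole rows. Whether Groupon splits the data this way is not stated in the source.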