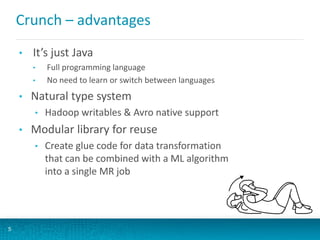

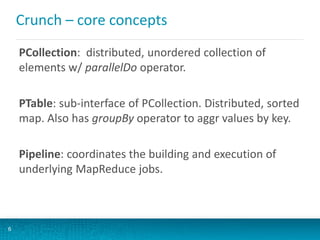

The document introduces new tools for building applications on Apache Hadoop, including Apache Avro, Apache Crunch, and Cloudera ML, aimed at enhancing analytics-driven development. It outlines the features and benefits of these tools, emphasizing their ease of use and integration with existing components in the Hadoop ecosystem. Additionally, it highlights the Cloudera Development Kit, which simplifies the application development process by providing higher-level APIs and modular libraries.

![Crunch – word count

7

public class WordCount {

public static void main(String[] args) throws Exception {

Pipeline pipeline = new MRPipeline(WordCount.class);

PCollection lines = pipeline.readTextFile(args[0]);

PCollection words = lines.parallelDo("my splitter", new DoFn() {

public void process(String line, Emitter emitter) {

for (String word : line.split("s+")) {

emitter.emit(word);

}

}

}, Writables.strings());

PTable counts = Aggregate.count(words);

pipeline.writeTextFile(counts, args[1]);

pipeline.run();

}

}](https://image.slidesharecdn.com/untitled-130819093838-phpapp01/85/Building-Applications-using-Apache-Hadoop-9-320.jpg)

![Scrunch – Scala wrapper

8

class WordCountExample {

val pipeline = new Pipeline[WordCountExample]

def wordCount(fileName: String) = {

pipeline.read(from.textFile(fileName))

.flatMap(_.toLowerCase.split("W+"))

.filter(!_.isEmpty())

.count

}

}

Based on Google’s Cascade project](https://image.slidesharecdn.com/untitled-130819093838-phpapp01/85/Building-Applications-using-Apache-Hadoop-10-320.jpg)