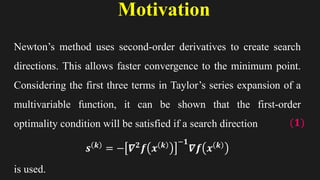

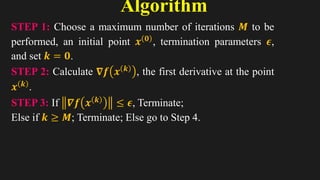

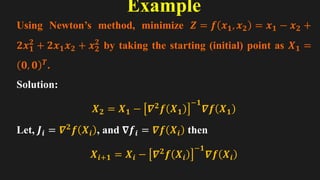

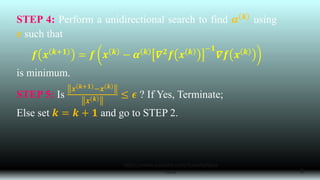

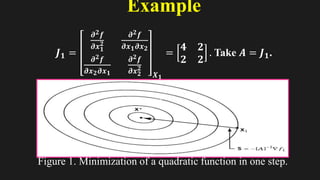

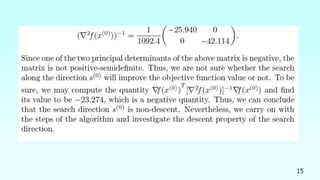

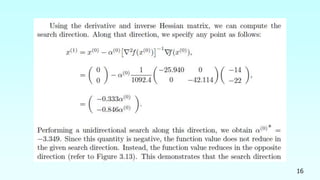

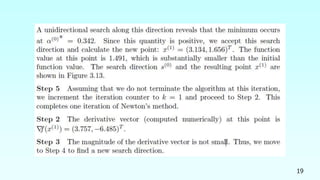

Newton's method for multivariable optimization uses second-order derivatives (the Hessian) in addition to the gradient, which lets it converge to a minimum point faster than methods that rely on the gradient alone. Each iteration computes the gradient, checks a stopping criterion, solves for the search direction, and updates the current point. The search direction is not guaranteed to decrease the function value at every step; it is a descent direction when the Hessian is positive definite, which typically holds near a minimum. Practical examples demonstrate the method's efficacy, especially when the starting point is near the optimal solution.
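The iteration summarized above can be sketched in a few lines of Python. This is a minimal illustration, not the exact algorithm from the slides: it assumes the pure Newton step $x_{k+1} = x_k - H(x_k)^{-1} \nabla f(x_k)$ with no line search, and the function names (`newton_minimize`, `grad_f`, `hess_f`), the tolerance, and the quadratic test function are all hypothetical choices made for the example.

```python
import numpy as np

def newton_minimize(grad, hess, x0, tol=1e-8, max_iter=50):
    """Pure Newton iteration (no line search); returns (x, iterations used)."""
    x = np.asarray(x0, dtype=float)
    for k in range(max_iter):
        g = grad(x)
        # Stop once the gradient is (numerically) zero.
        if np.linalg.norm(g) < tol:
            return x, k
        # Newton direction: solve H d = -g rather than inverting H.
        d = np.linalg.solve(hess(x), -g)
        # d is a descent direction when g @ d < 0, which holds
        # whenever the Hessian is positive definite.
        x = x + d
    return x, max_iter

# Hypothetical test problem: f(x) = 0.5 x^T A x - b^T x with A positive definite.
A = np.array([[3.0, 1.0], [1.0, 2.0]])
b = np.array([1.0, 1.0])

def grad_f(x):
    return A @ x - b

def hess_f(x):
    return A

x_star, iters = newton_minimize(grad_f, hess_f, [5.0, -3.0])
print(x_star, iters)
```

Because the test function is quadratic, a single Newton step lands on the minimizer (the solution of $Ax = b$) up to rounding. For non-quadratic functions the step may fail to decrease $f$ when the starting point is far from the minimum, which is why practical variants usually add a line search or a Hessian modification.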