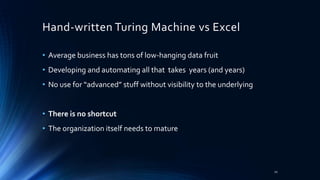

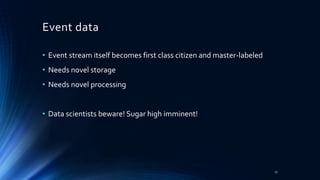

The document discusses the challenges of big data, emphasizing the rapid influx of diverse and often corrupted data that businesses must manage. It distinguishes human-generated data from machine-generated data and suggests that data systems and algorithms need to adapt to these realities to support effective analysis and decision-making. It also underlines the importance of real-time systems, visualization, and an understanding of business needs in the context of data solutions.