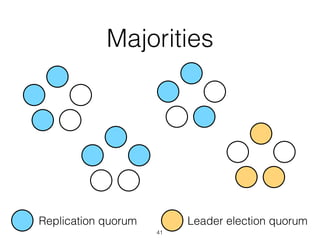

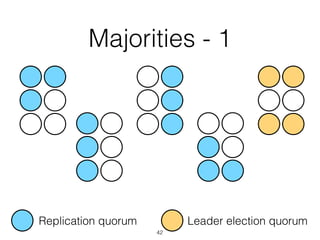

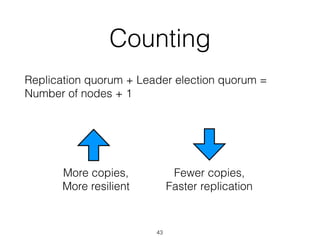

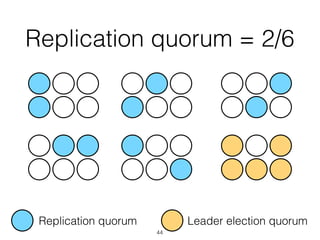

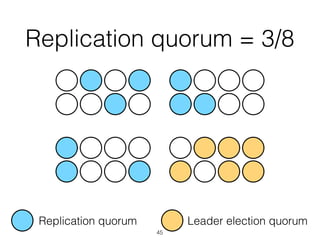

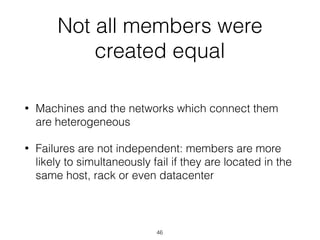

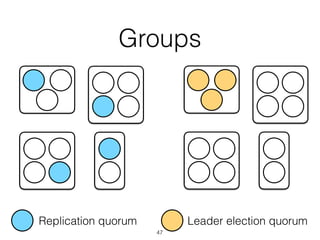

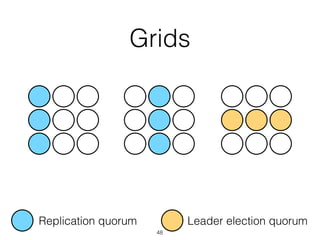

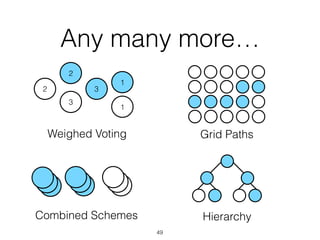

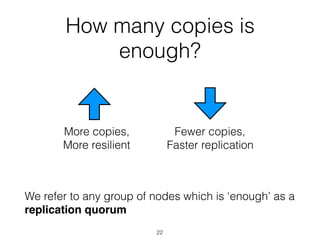

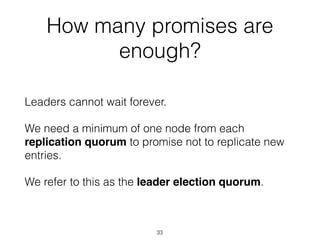

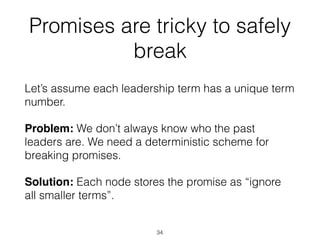

Flexible Paxos generalizes the Paxos algorithm so that consensus can be reached without majority quorums. The key insight is that quorums do not all need to intersect one another: it is enough for every leader election quorum to intersect every replication quorum. This relaxed requirement opens up many new quorum schemes that can make consensus more scalable and more available. Majority quorums are not necessarily optimal, and different quorum designs may better suit practical systems with heterogeneous resources. Ongoing work aims to bring these ideas into production and to extend the algorithms to new failure models.
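The intersection requirement can be illustrated with a minimal Python sketch. It assumes simple counting quorums (any `q1` nodes form a leader election quorum, any `q2` nodes form a replication quorum); the function names and the six-node cluster are hypothetical and chosen only for illustration, not taken from the slides.

```python
from itertools import combinations


def counting_quorums_are_safe(n, q1, q2):
    """With counting quorums over n nodes, every leader election quorum
    (size q1) meets every replication quorum (size q2) iff q1 + q2 > n."""
    return q1 + q2 > n


def all_subsets_of_size(nodes, k):
    """Enumerate every k-node subset as an explicit quorum (illustrative helper)."""
    return [frozenset(c) for c in combinations(nodes, k)]


def quorum_systems_intersect(election_quorums, replication_quorums):
    """Brute-force check: each election quorum shares at least one node
    with each replication quorum. Quorums of the same kind are never
    compared with each other, which is the Flexible Paxos relaxation."""
    return all(e & r for e in election_quorums for r in replication_quorums)


nodes = range(6)  # hypothetical six-node cluster; a majority would be 4

# Replication quorums of only 2 nodes are fine if election quorums have 5.
safe = quorum_systems_intersect(
    all_subsets_of_size(nodes, 5), all_subsets_of_size(nodes, 2)
)

# Shrinking election quorums to 4 breaks the requirement:
# {0, 1, 2, 3} and {4, 5} share no node.
unsafe = quorum_systems_intersect(
    all_subsets_of_size(nodes, 4), all_subsets_of_size(nodes, 2)
)

print(safe, counting_quorums_are_safe(6, 5, 2))
print(unsafe, counting_quorums_are_safe(6, 4, 2))
```

In the first configuration, two replication quorums such as `{0, 1}` and `{2, 3}` are disjoint from each other, yet the check still passes because only cross-phase intersection is tested. The trade-off is that smaller replication quorums force larger leader election quorums.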

![Slide 37: Context](https://image.slidesharecdn.com/fpaxosdev2-161104132519/85/Flexible-Paxos-Reaching-agreement-without-majorities-37-320.jpg)

Context: This mechanism of views and promises is widely utilised. This core idea is commonly referred to as Paxos. It’s a recurring foundation in the literature: Viewstamped Replication [PODC’88], Paxos [TOCS’98], Zab/Zookeeper [ATC’10] and Raft [ATC’14] and many more.