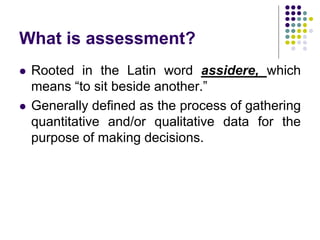

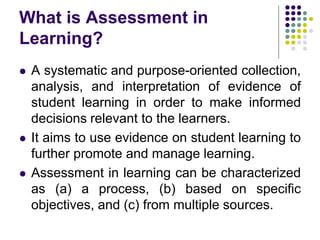

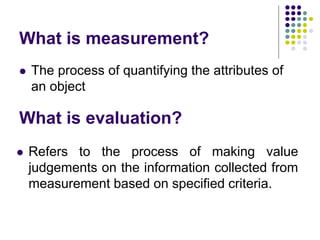

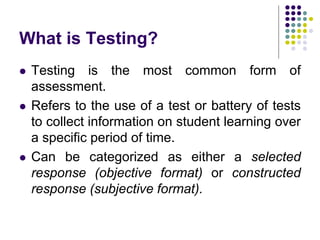

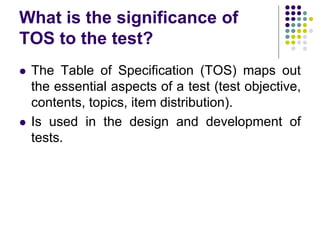

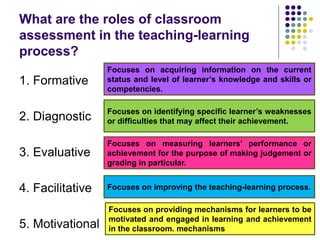

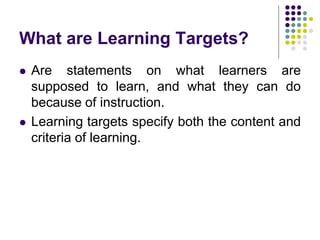

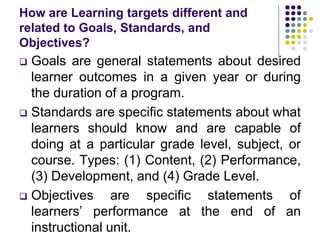

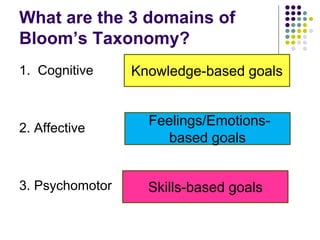

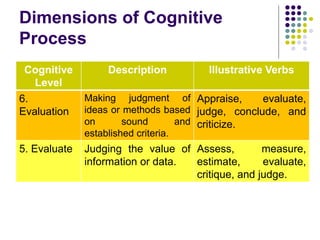

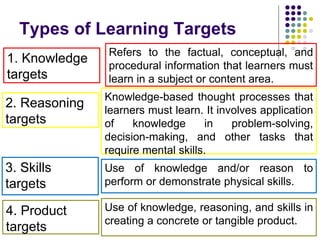

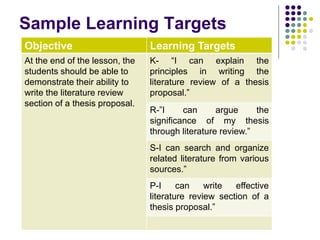

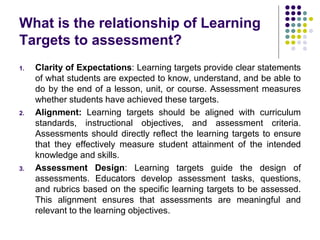

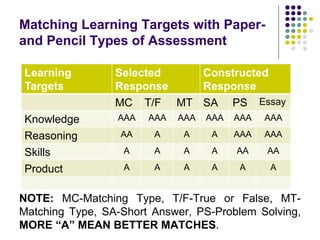

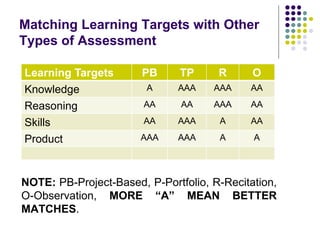

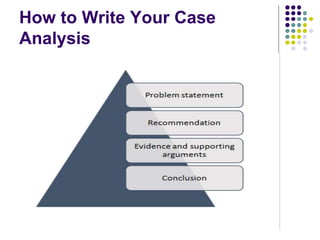

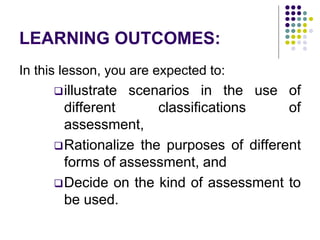

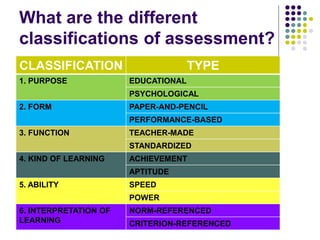

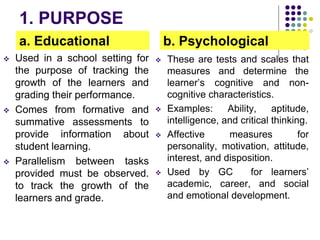

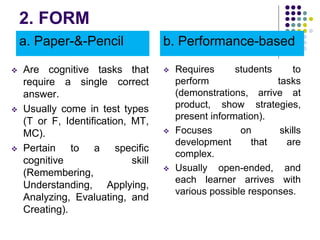

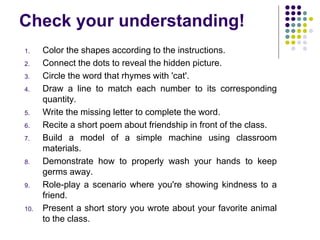

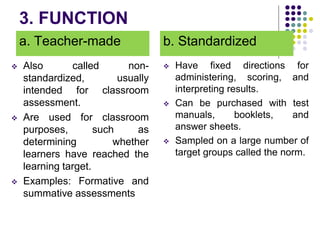

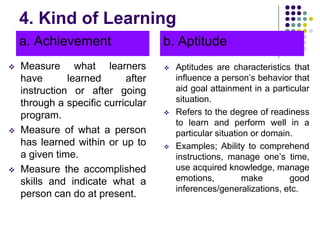

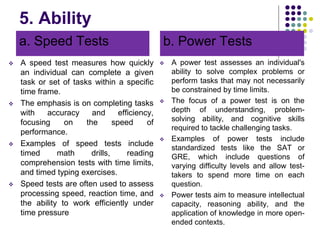

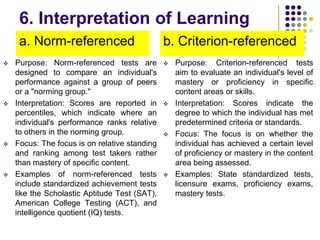

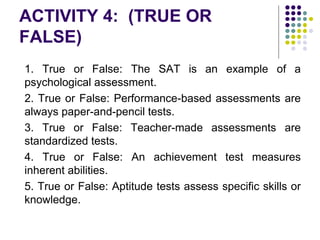

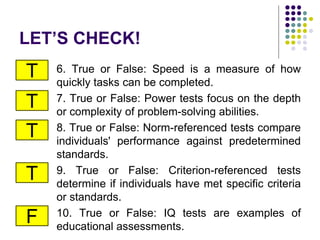

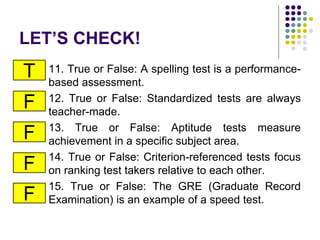

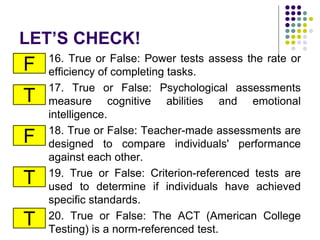

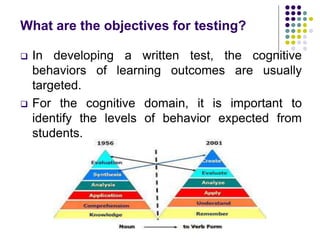

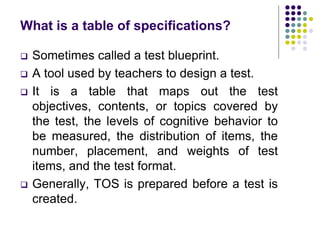

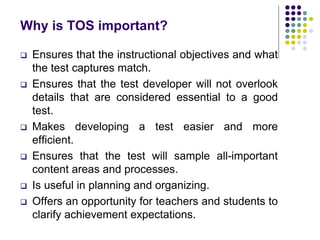

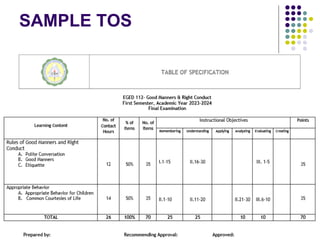

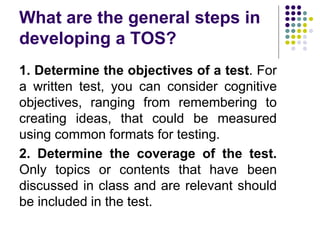

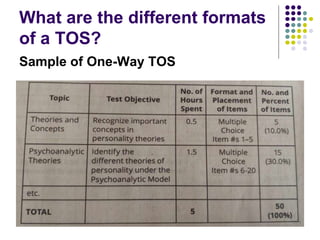

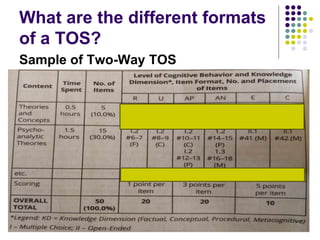

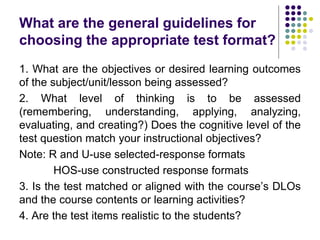

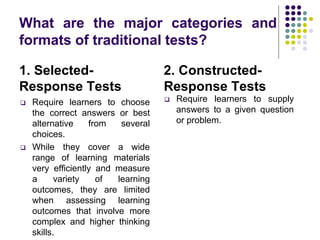

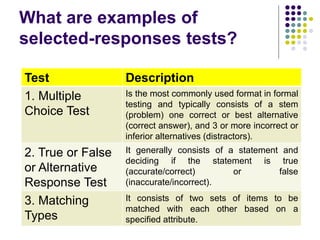

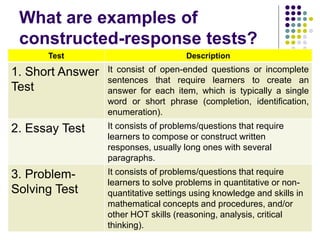

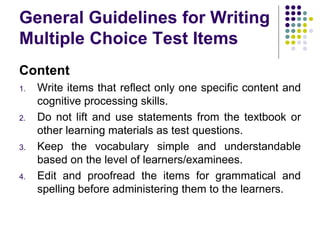

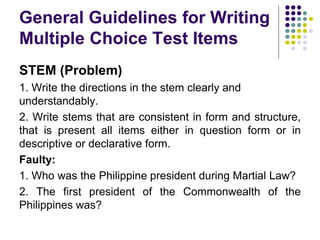

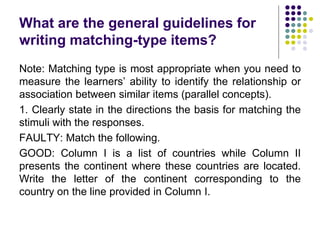

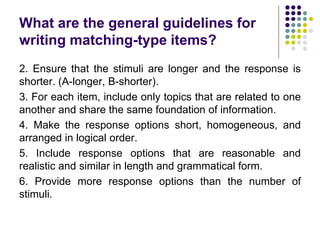

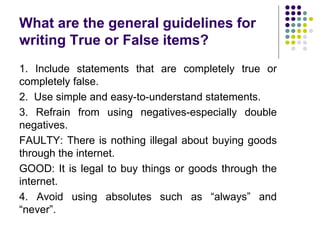

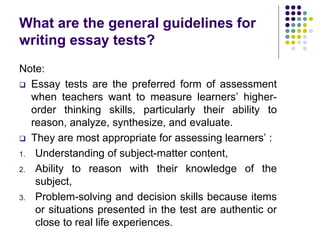

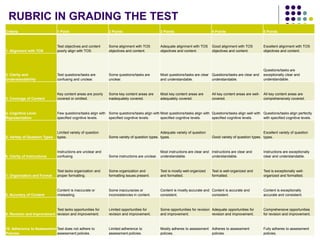

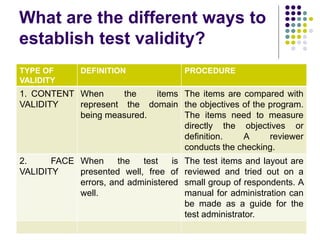

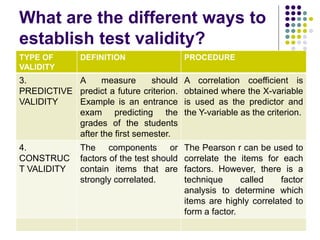

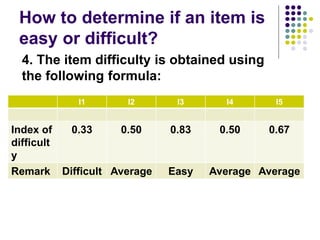

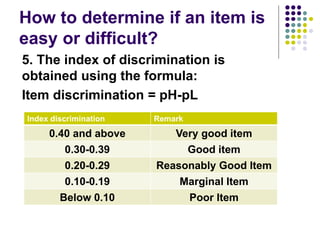

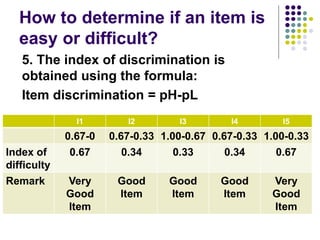

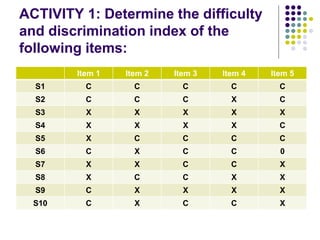

The document outlines the concepts and principles of assessment in learning, emphasizing that assessment is a systematic process of evaluating student learning to inform decisions. It covers the main types of assessment (formative, summative, diagnostic, etc.), measurement frameworks (classical test theory, item response theory), and principles for effective assessment. It also describes the relationship between learning targets and assessment methods, and provides guidelines for aligning the two to support instructional planning.