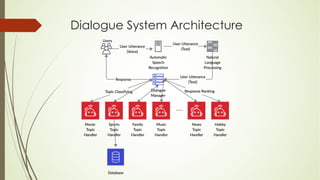

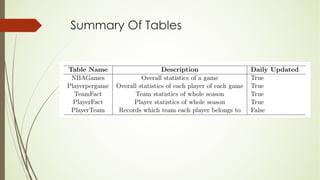

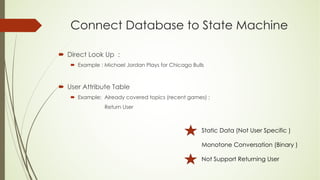

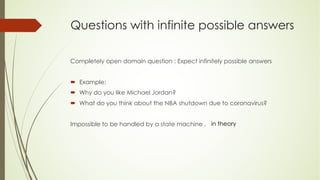

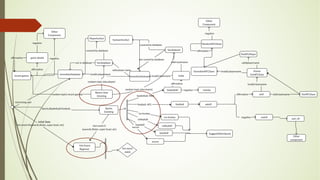

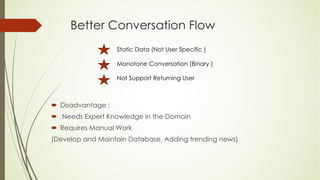

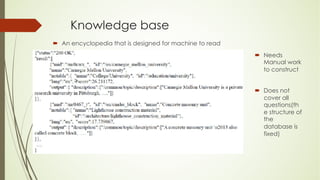

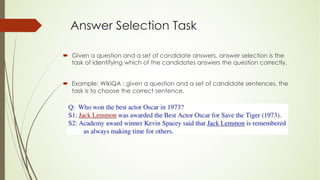

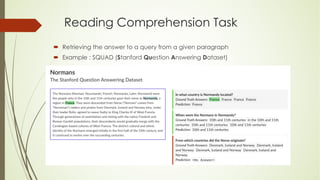

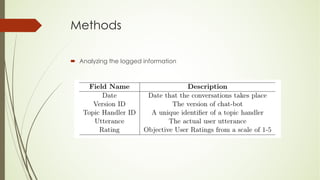

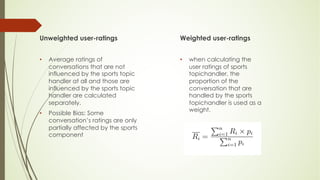

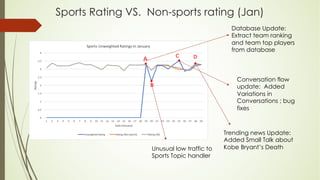

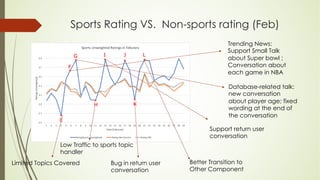

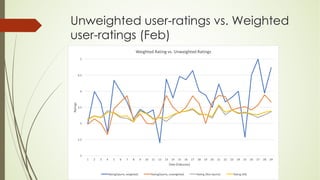

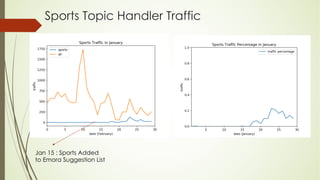

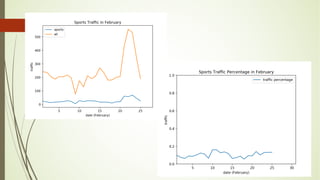

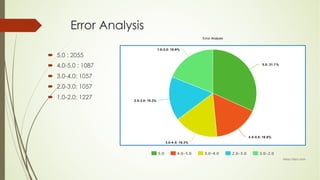

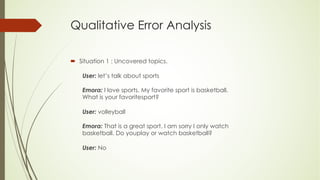

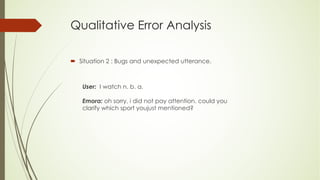

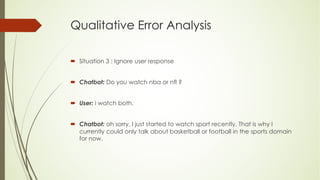

This document analyzes a state-machine-based dialogue management system for a conversational agent. It describes how state machines control the flow of conversation and how a sports-focused database lets the agent hold real-time, fact-based discussions. In the quantitative analysis, updates to the sports component's database and conversation logic correlated with higher user ratings, while bugs correlated with lower ratings. The qualitative analysis traced errors to three sources: topics the system did not cover, bugs, and replies that ignored the user's response. The study concludes that coupling state machines with databases can improve dialogue quality but requires effort, and that open-domain question answering is not suitable because of latency issues.
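To make the idea of a database-backed state machine concrete, here is a minimal sketch in Python. It is not the system analyzed in the document: the state names (`ASK_TEAM`, `OFFER_FACT`, `DONE`), the `DialogueManager` class, and the `SPORTS_DB` contents are all hypothetical, and the teams and scores are made-up placeholders. It only illustrates how each state decides the next reply, how a fact is pulled from a database, and where an uncovered topic or an ignored user response would show up.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical in-memory stand-in for a sports database.
# Team names and scores are invented placeholders, not real results.
SPORTS_DB = {
    "lions": {"last_opponent": "Tigers", "last_score": "3-1"},
    "tigers": {"last_opponent": "Lions", "last_score": "1-3"},
}


@dataclass
class DialogueManager:
    """Tiny state machine: ASK_TEAM -> OFFER_FACT -> DONE."""

    state: str = "ASK_TEAM"
    team: Optional[str] = None

    def respond(self, user_text: str) -> str:
        text = user_text.strip().lower()

        if self.state == "ASK_TEAM":
            # Look for a team the database covers in the user's utterance.
            team = next((name for name in SPORTS_DB if name in text), None)
            if team is None:
                # Uncovered topic: stay in the same state and say so,
                # rather than pretending to know the answer.
                return "I don't know that team yet. Which other team do you follow?"
            self.team = team
            self.state = "OFFER_FACT"
            return f"Nice, the {team.title()}! Want to hear about their last game?"

        if self.state == "OFFER_FACT":
            if text.startswith("y"):
                fact = SPORTS_DB[self.team]
                self.state = "DONE"
                return (
                    f"The {self.team.title()} played the {fact['last_opponent']} "
                    f"and the score was {fact['last_score']}."
                )
            # Respect a "no" instead of ignoring the user's response.
            self.state = "ASK_TEAM"
            self.team = None
            return "No problem. Which team would you like to talk about instead?"

        return "That's all I have for now."
```

A short exchange under these assumptions: `DialogueManager().respond("I like the Lions")` moves the machine to `OFFER_FACT`, and a following `respond("yes")` returns the stored fact and moves it to `DONE`. Every new topic needs its own states and database entries, which is one way to read the document's point that this approach takes effort.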