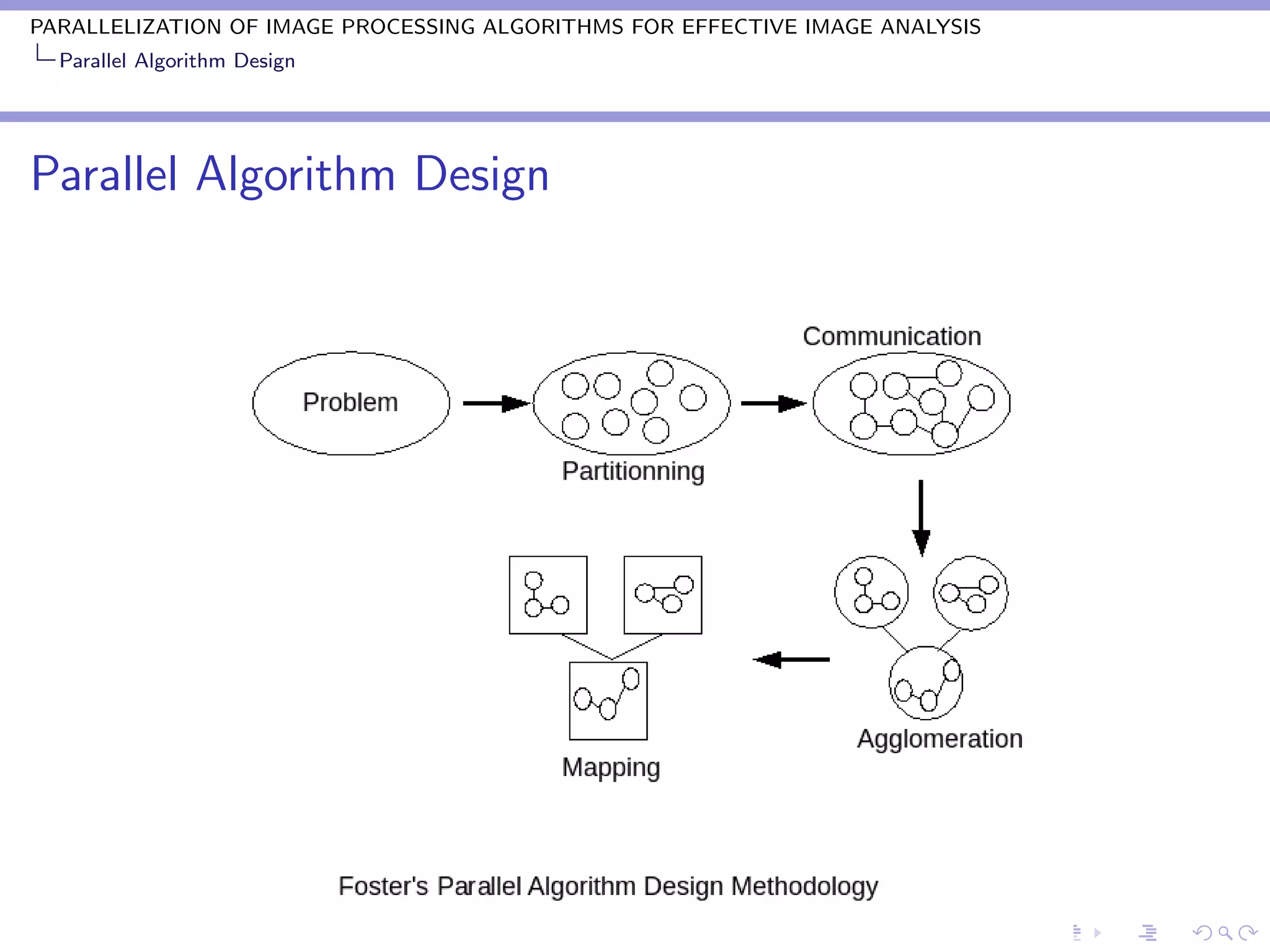

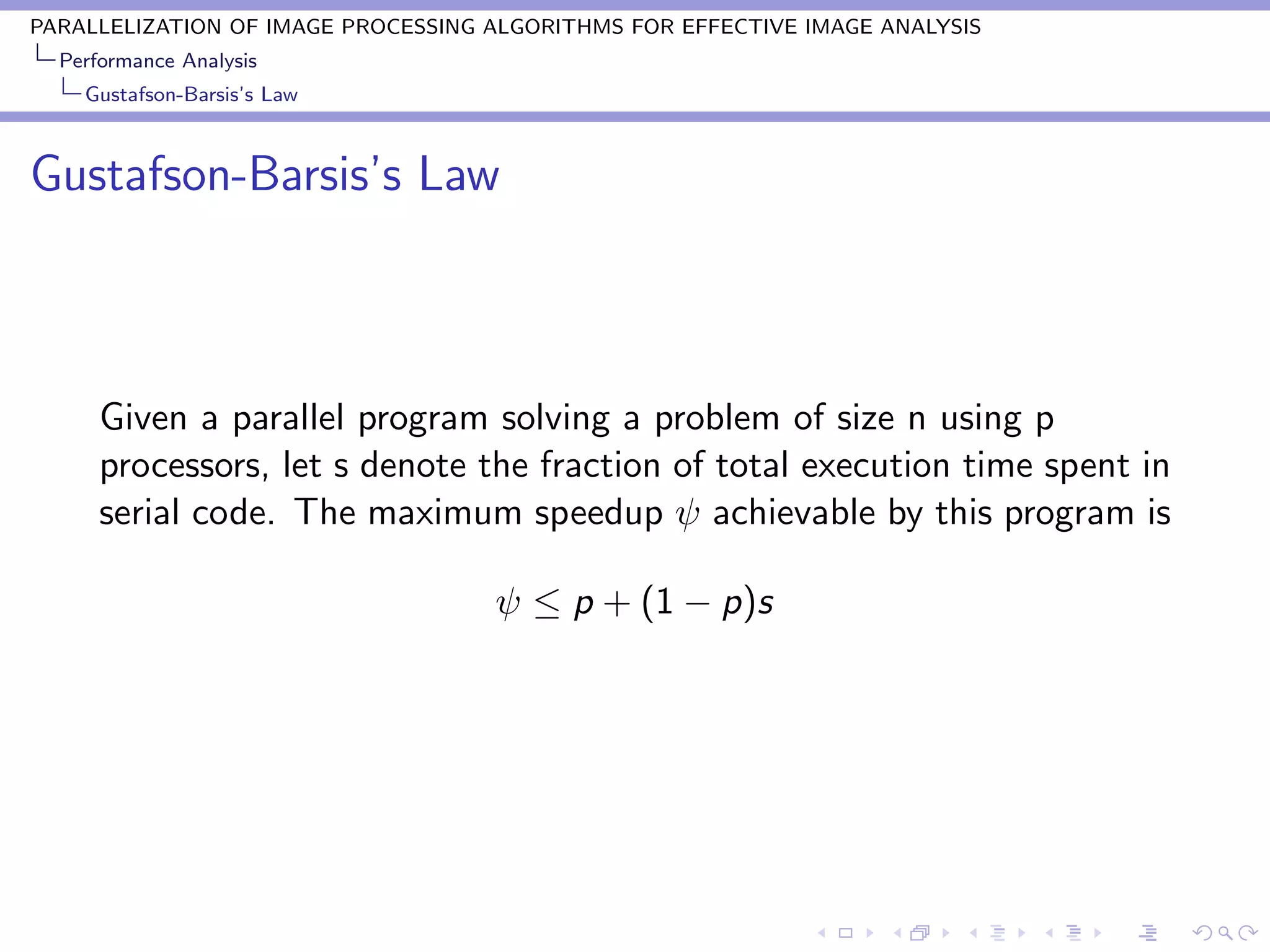

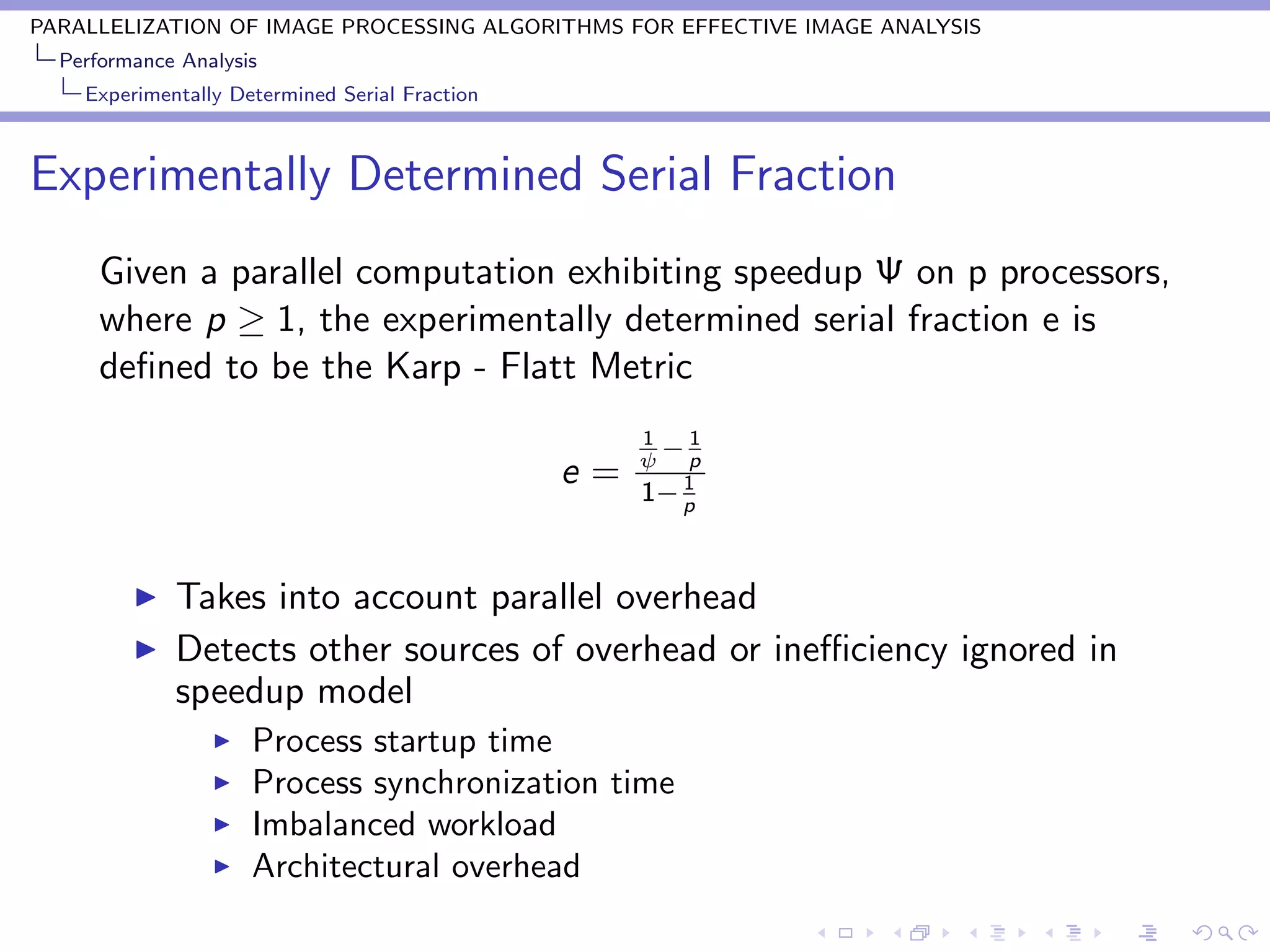

The document discusses parallelizing image processing algorithms for effective image analysis. Its aim is to design parallel algorithms for common image processing tasks, such as filtering and edge detection, in order to reduce processing time. The key aspects of parallel algorithm design it covers are partitioning, communication, agglomeration, and mapping of tasks to processors. It also covers performance analysis with Amdahl's law and Gustafson-Barsis' law, which estimate the speedup obtainable from parallelization, and it examines whether the algorithms remain cost-effective as the number of processors increases.
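The two laws can be compared numerically. This is a minimal sketch assuming the standard textbook forms: Amdahl's law for a fixed problem size, $S(p) = 1 / (f + (1 - f)/p)$, where $f$ is the serial fraction of the sequential run time, and Gustafson-Barsis' law for a problem that grows with $p$, $S(p) = p + (1 - p)s$, where $s$ is the serial fraction of the parallel run time. The function names and the 10% serial fraction are hypothetical choices for illustration. Efficiency $E = S/p$ is also printed, since a falling efficiency as $p$ grows is the usual sign that adding processors is no longer cost-effective.

```python
"""Speedup estimates from Amdahl's law and Gustafson-Barsis' law (illustrative sketch)."""


def amdahl_speedup(f: float, p: int) -> float:
    """Fixed-size speedup; f = serial fraction of the sequential run time."""
    if not 0.0 <= f <= 1.0 or p < 1:
        raise ValueError("require 0 <= f <= 1 and p >= 1")
    return 1.0 / (f + (1.0 - f) / p)


def gustafson_barsis_speedup(s: float, p: int) -> float:
    """Scaled speedup; s = serial fraction of the parallel run time."""
    if not 0.0 <= s <= 1.0 or p < 1:
        raise ValueError("require 0 <= s <= 1 and p >= 1")
    return p + (1 - p) * s


def efficiency(speedup: float, p: int) -> float:
    """Fraction of ideal linear speedup achieved per processor."""
    return speedup / p


if __name__ == "__main__":
    serial = 0.1  # hypothetical: 10% of the work (e.g. image I/O) is serial
    for p in (1, 2, 4, 8, 16):
        a = amdahl_speedup(serial, p)
        g = gustafson_barsis_speedup(serial, p)
        print(f"p={p:2d}  Amdahl={a:5.2f} (E={efficiency(a, p):.2f})  "
              f"Gustafson-Barsis={g:5.2f}")
```

With a serial fraction of 0.1 and 8 processors, Amdahl's law gives a speedup of about 4.71 while Gustafson-Barsis' law gives 7.3; under Amdahl's law the speedup can never exceed $1/f = 10$, however many processors are added.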

![Parallelization of Image Processing Algorithms for Effective Image Analysis: Parallel Algorithm Design](https://image.slidesharecdn.com/amdmntlotushosthomejaishakthipresentationrmeet1rmeet-1-100317001347-phpapp01/75/Amd-mnt-lotus-host-home-jaishakthi-presentation-rmeet1-rmeet-1-5-2048.jpg)

Parallel Algorithm Design

According to Foster, the design of a parallel algorithm consists of four stages [13]:

1. Partitioning
2. Communication
3. Agglomeration
4. Mapping
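The four stages can be illustrated on a simple filtering task. This is a minimal sketch, assuming a 3x3 mean (box) filter on a grayscale image stored as a list of rows with border pixels clamped; the function names and the row-strip decomposition are hypothetical choices, not the presentation's own method. Partitioning treats each output row as a task, agglomeration groups contiguous rows into one strip per worker, communication is the single halo row each strip needs from each neighbouring strip, and mapping hands the strips to a thread pool. Note that CPython threads will not speed up this pure-Python loop because of the global interpreter lock; the sketch shows the decomposition, and a process pool or native code would be needed for a real speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def mean3x3(img, r, c):
    """3x3 box-filter value at (r, c), clamping indices at the array border."""
    rows, cols = len(img), len(img[0])
    total = 0.0
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            rr = min(max(r + dr, 0), rows - 1)
            cc = min(max(c + dc, 0), cols - 1)
            total += img[rr][cc]
    return total / 9.0


def filter_strip(padded, offset, n_rows):
    """Filter n_rows rows of `padded`, starting at local row `offset`.

    `padded` holds the strip's own rows plus one halo row above and/or
    below wherever a neighbouring strip exists.
    """
    return [[mean3x3(padded, offset + i, c) for c in range(len(padded[0]))]
            for i in range(n_rows)]


def sequential_filter(img):
    """Reference result: filter the whole image in one task."""
    return filter_strip(img, 0, len(img))


def parallel_filter(img, n_workers=4):
    rows = len(img)
    # Partitioning + agglomeration: contiguous row strips, one per worker.
    bounds = [(k * rows // n_workers, (k + 1) * rows // n_workers)
              for k in range(n_workers)]
    bounds = [(lo, hi) for lo, hi in bounds if hi > lo]
    # Communication: each strip receives one halo row from each neighbour.
    tasks = []
    for lo, hi in bounds:
        top, bottom = max(lo - 1, 0), min(hi + 1, rows)
        tasks.append((img[top:bottom], lo - top, hi - lo))
    # Mapping: the executor assigns strips to worker threads.
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        strips = list(pool.map(lambda t: filter_strip(*t), tasks))
    out = []
    for strip in strips:
        out.extend(strip)  # reassemble strips in their original order
    return out


if __name__ == "__main__":
    image = [[float((r * 7 + c * 3) % 10) for c in range(6)] for r in range(9)]
    assert parallel_filter(image, n_workers=4) == sequential_filter(image)
    print("parallel result matches sequential result")
```

Comparing against the sequential filter is a cheap correctness check for any decomposition: because each strip sees exactly the neighbouring rows the sequential filter would see, the results are identical.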