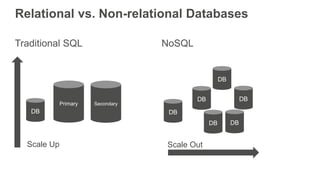

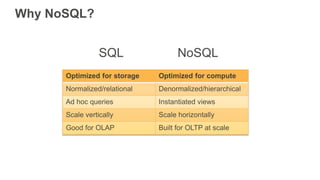

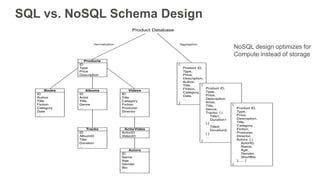

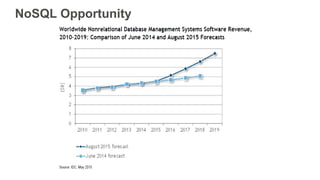

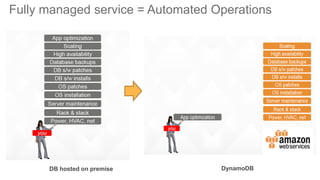

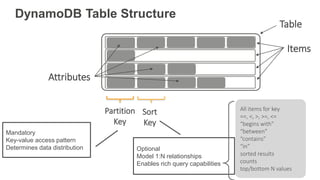

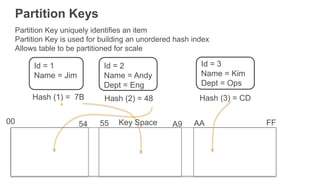

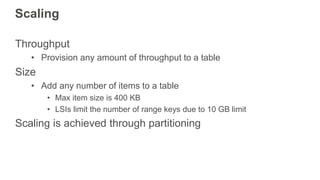

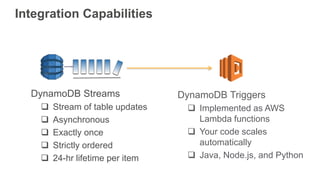

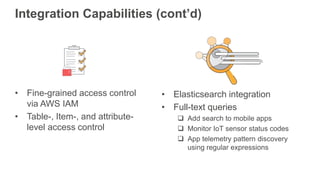

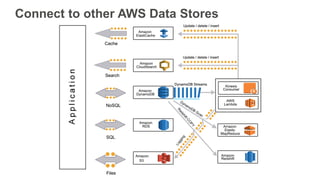

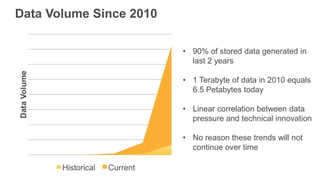

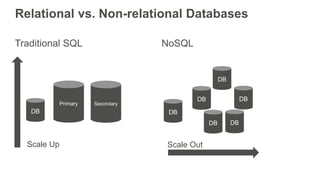

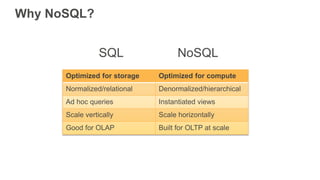

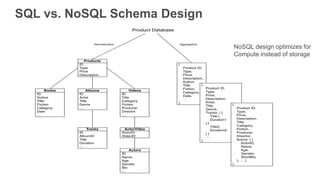

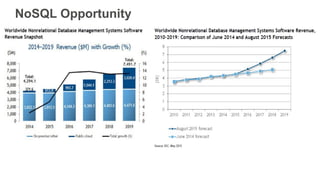

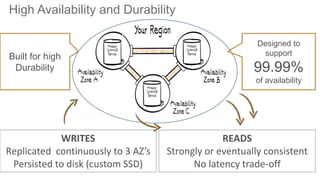

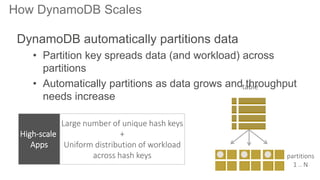

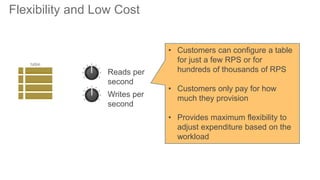

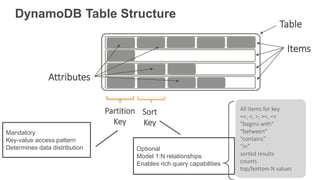

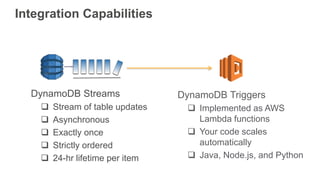

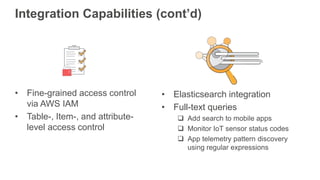

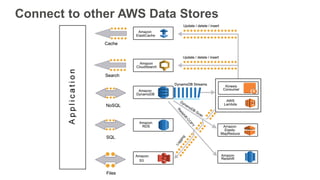

Jim Scharf will give a presentation on Getting Started with Amazon DynamoDB. The presentation will provide a brief history of data processing, compare relational and non-relational databases, and explain DynamoDB tables and indexes, scaling, integration capabilities, and pricing. It will also cover customer use cases, and the agenda includes time for Q&A.