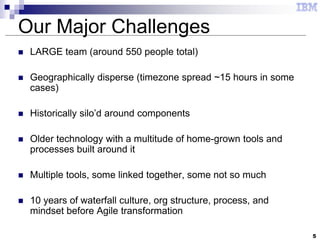

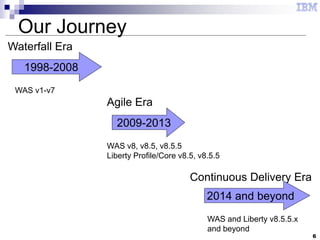

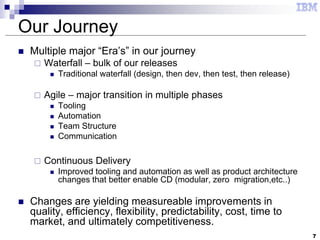

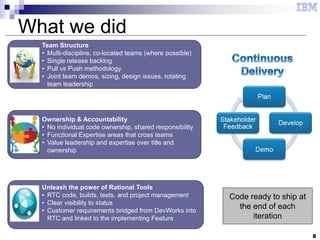

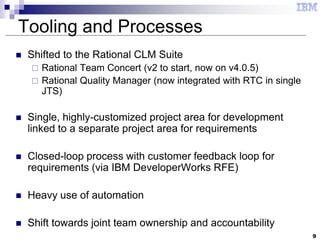

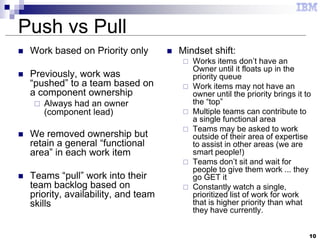

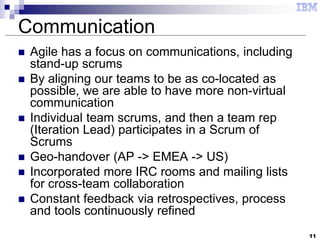

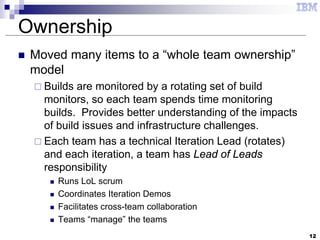

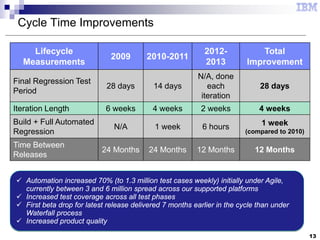

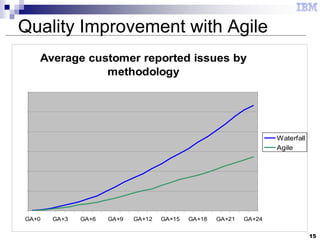

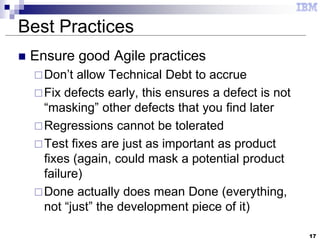

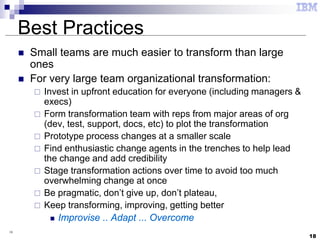

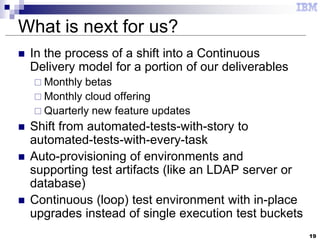

Susan Hanson of IBM discusses WebSphere Application Server's transformation toward agile and continuous delivery methodologies, under way since 2009. The document outlines the challenges faced, such as a large, geographically dispersed team, and describes how improvements in tooling, team structure, and communication have led to measurable gains in quality and efficiency. It also highlights ongoing initiatives and best practices for sustaining agile methods in a large organization.