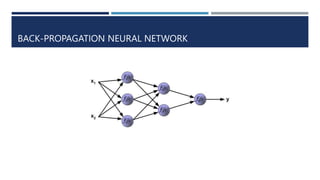

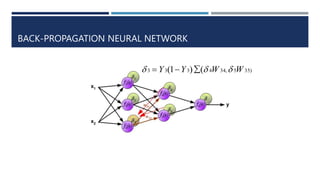

The document discusses agent adaptability, defining an adaptive agent as one that can respond to stimuli in its environment. It distinguishes simple reactive agents from more advanced reasoning and learning agents, and outlines adaptation techniques such as genetic algorithms, reinforcement learning, and back-propagation in neural networks. It also covers clustering methods, including k-means and DBSCAN, as part of adaptable agent technologies.
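Of the clustering methods mentioned, k-means is the simplest to illustrate. Below is a minimal sketch of Lloyd's algorithm, the standard k-means iteration, in plain Python. It assumes points are numeric tuples and that initial centroids are picked by random sampling. The name `kmeans` and its parameters are hypothetical and do not come from the slides.

```python
import math
import random


def kmeans(points, k, iterations=100, seed=0):
    """Lloyd's algorithm: alternate assignment and centroid-update steps."""
    rng = random.Random(seed)
    # Assumption: seed the centroids with k distinct input points.
    centroids = [tuple(p) for p in rng.sample(points, k)]
    for _ in range(iterations):
        # Assignment step: put each point in the cluster of its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda c: math.dist(p, centroids[c]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for c, members in enumerate(clusters):
            if members:
                new_centroids.append(
                    tuple(sum(coord) / len(members) for coord in zip(*members))
                )
            else:
                new_centroids.append(centroids[c])  # keep an empty cluster's centroid
        if new_centroids == centroids:  # converged: no centroid moved
            break
        centroids = new_centroids
    labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
    return centroids, labels


if __name__ == "__main__":
    data = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
    centers, labels = kmeans(data, k=2)
    print(centers, labels)
```

Unlike DBSCAN, k-means needs the number of clusters `k` up front. It also assumes roughly spherical clusters, because every point is assigned to its nearest mean.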

![THRESHOLD FUNCTIONS [TRANSFER FUNCTION]](https://image.slidesharecdn.com/agentadaptability-240224182516-9965f4f0/85/Agent-Adaptability-in-a-machine-learning-6-320.jpg)
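The slide's own list of threshold functions cannot be recovered from the image. The sketch below therefore uses several common transfer functions instead: the hard-limit step, the logistic sigmoid, tanh and ReLU. Each is applied to a single neuron's weighted sum. The function names and the AND-gate weights are illustrative assumptions, not taken from the slides.

```python
import math


def step(x, threshold=0.0):
    """Hard-limit (Heaviside) threshold: output 1 once x reaches the threshold."""
    return 1 if x >= threshold else 0


def sigmoid(x):
    """Logistic transfer function: squashes any real x into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def tanh(x):
    """Hyperbolic tangent: squashes x into (-1, 1)."""
    return math.tanh(x)


def relu(x):
    """Rectified linear unit: passes positive values, zeroes negatives."""
    return max(0.0, x)


def neuron(inputs, weights, bias, transfer):
    """Weighted sum of the inputs plus bias, passed through a transfer function."""
    net = sum(w * x for w, x in zip(weights, inputs)) + bias
    return transfer(net)


if __name__ == "__main__":
    # Hypothetical weights that make a step-function neuron compute logical AND.
    w, b = [1.0, 1.0], -1.5
    for a in (0, 1):
        for c in (0, 1):
            print(a, c, neuron([a, c], w, b, step))
```

The step function gives a hard yes/no decision. Smooth functions such as the sigmoid are differentiable, which back-propagation needs.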

![STRUCTURE OF NEURAL NETWORK](https://image.slidesharecdn.com/agentadaptability-240224182516-9965f4f0/85/Agent-Adaptability-in-a-machine-learning-7-320.jpg)

STRUCTURE OF NEURAL NETWORK

- Layers
  - Input layer (sized by the input features)
  - Hidden layers
  - Output layer (sized by the output)
- Learning rate
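Here is a minimal sketch of the structure above: an input layer, one hidden layer and an output layer, trained by back-propagation. The learning rate scales every weight update. The slide does not say which activations, loss or update scheme it uses, so the sketch assumes sigmoid units, mean-squared-error loss and full-batch gradient descent. `TinyNet` and its parameters are hypothetical names.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


class TinyNet:
    """Input layer -> one hidden layer -> output layer, sigmoid units throughout."""

    def __init__(self, n_in, n_hidden, n_out, learning_rate=0.5, seed=0):
        rng = random.Random(seed)
        self.lr = learning_rate
        # w1[j][i]: weight from input i to hidden unit j
        self.w1 = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        # w2[k][j]: weight from hidden unit j to output unit k
        self.w2 = [[rng.uniform(-1, 1) for _ in range(n_hidden)] for _ in range(n_out)]
        self.b2 = [0.0] * n_out

    def forward(self, x):
        h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(self.w1, self.b1)]
        y = [sigmoid(sum(w * hj for w, hj in zip(row, h)) + b)
             for row, b in zip(self.w2, self.b2)]
        return h, y

    def loss(self, data):
        """Mean over samples of 1/2 * squared error."""
        total = 0.0
        for x, t in data:
            _, y = self.forward(x)
            total += sum((tk - yk) ** 2 for tk, yk in zip(t, y)) / 2
        return total / len(data)

    def train_epoch(self, data):
        """One full-batch back-propagation step, scaled by the learning rate."""
        gw1 = [[0.0] * len(r) for r in self.w1]
        gb1 = [0.0] * len(self.b1)
        gw2 = [[0.0] * len(r) for r in self.w2]
        gb2 = [0.0] * len(self.b2)
        for x, t in data:
            h, y = self.forward(x)
            # Output deltas: dE/dnet for squared error through a sigmoid.
            d_out = [(yk - tk) * yk * (1 - yk) for yk, tk in zip(y, t)]
            # Hidden deltas: output deltas propagated back through w2.
            d_hid = [hj * (1 - hj) * sum(d_out[k] * self.w2[k][j] for k in range(len(d_out)))
                     for j, hj in enumerate(h)]
            for k, dk in enumerate(d_out):
                gb2[k] += dk
                for j, hj in enumerate(h):
                    gw2[k][j] += dk * hj
            for j, dj in enumerate(d_hid):
                gb1[j] += dj
                for i, xi in enumerate(x):
                    gw1[j][i] += dj * xi
        n = len(data)
        # Gradient-descent update: weight -= learning_rate * mean gradient.
        for k in range(len(self.w2)):
            self.b2[k] -= self.lr * gb2[k] / n
            for j in range(len(self.w2[k])):
                self.w2[k][j] -= self.lr * gw2[k][j] / n
        for j in range(len(self.w1)):
            self.b1[j] -= self.lr * gb1[j] / n
            for i in range(len(self.w1[j])):
                self.w1[j][i] -= self.lr * gw1[j][i] / n


if __name__ == "__main__":
    XOR = [([0, 0], [0]), ([0, 1], [1]), ([1, 0], [1]), ([1, 1], [0])]
    net = TinyNet(n_in=2, n_hidden=3, n_out=1, learning_rate=0.5)
    print("loss before:", net.loss(XOR))
    for _ in range(5000):
        net.train_epoch(XOR)
    print("loss after:", net.loss(XOR))
```

The input and output layer sizes follow the data: two features and one target for XOR. The hidden layer size and the learning rate are free choices. A larger learning rate takes bigger steps but can overshoot, and a smaller one converges more slowly.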