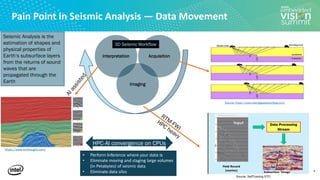

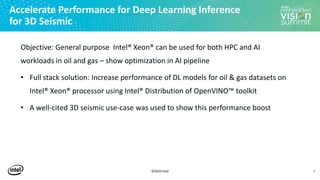

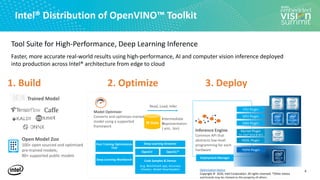

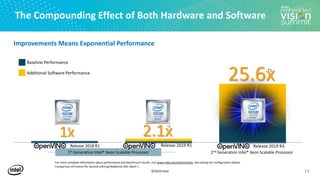

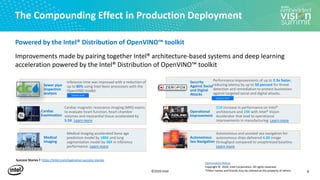

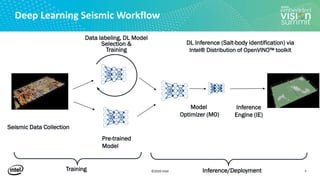

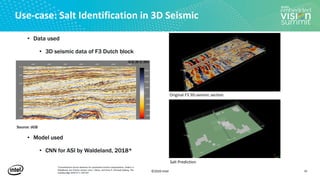

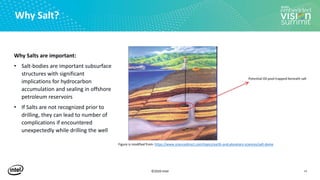

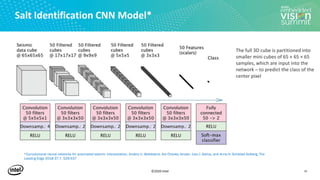

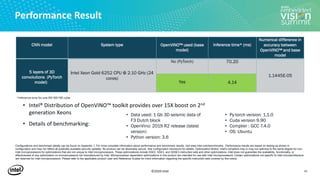

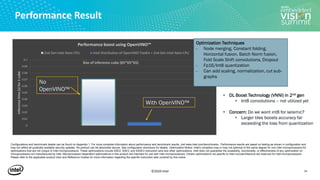

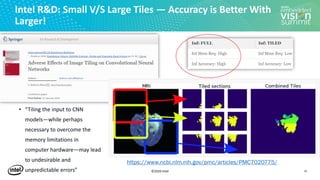

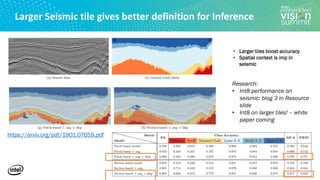

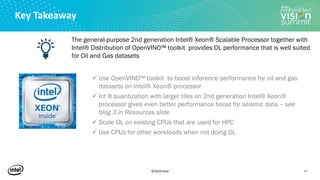

The document discusses accelerating deep learning for 3D seismic analysis with the Intel® Distribution of OpenVINO™ toolkit and Intel® Xeon® processors, emphasizing performance improvements and cost efficiencies. It presents a case study on salt-body identification in seismic data, reporting significant gains in inference performance from optimized deep learning models. It also covers optimization strategies, hardware requirements, and resources for deploying accelerated deep learning applications in the oil and gas sector.