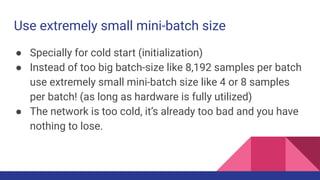

This document proposes a method called Train-Measure-Adapt-Repeat for accelerating stochastic gradient descent training of deep neural networks using adaptive mini-batch sizes. The method starts with an extremely small mini-batch size, such as 4-8 samples, to allow for faster training initially through more frequent weight updates. Accuracy is evaluated over time rather than by the number of steps, and the mini-batch size is increased adaptively when accuracy improvements stall. Experiments on image classification datasets demonstrate the method reaching higher accuracy levels faster than using fixed large mini-batch sizes.
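The adapt step described above can be sketched as a small rule that enlarges the mini-batch when accuracy gains stall. This is a minimal illustration, not the authors' implementation: the function names, the stall threshold `min_gain`, the doubling `factor`, and the cap `max_batch` are all assumptions. Each accuracy value is taken to be measured at the end of a fixed wall-clock window rather than after a fixed number of steps.

```python
def next_batch_size(acc_history, batch_size, min_gain=0.005, factor=2, max_batch=512):
    """Pick the mini-batch size for the next time window.

    acc_history holds one accuracy measurement per completed window.
    min_gain, factor and max_batch are illustrative assumptions.
    """
    if len(acc_history) < 2:
        return batch_size  # not enough measurements to detect a stall
    gain = acc_history[-1] - acc_history[-2]
    if gain < min_gain:
        # Accuracy has stalled: move to a larger mini-batch, up to the cap.
        return min(batch_size * factor, max_batch)
    return batch_size


def schedule(accuracies, start=4, **kwargs):
    """Replay a sequence of per-window accuracies and return the batch sizes used."""
    sizes = [start]
    history = []
    for acc in accuracies:
        history.append(acc)  # measure
        sizes.append(next_batch_size(history, sizes[-1], **kwargs))  # adapt
    return sizes


sizes = schedule([0.30, 0.45, 0.452, 0.50, 0.501], start=4)
```

In this replay the batch size stays at 4 while accuracy is still climbing and grows only after the two windows where the gain falls below the threshold.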

“Stochastic Learning”, or “Stochastic Gradient Descent” (SGD), is done by taking small random samples (mini-batches) of the training data instead of the whole batch (“Batch Learning”). It converges faster and handles noise and non-linearity better, which is why batch learning was considered inefficient [1][2].

1. Y. LeCun, “Efficient backprop”
2. D. R. Wilson and T. R. Martinez, “The general inefficiency of batch training for gradient descent learning”

![Batch Learning vs. Stochastic Learning](https://image.slidesharecdn.com/acceleratingstochasticgradientdescentusingadaptivemini-batchsize3-191010121657/85/Accelerating-stochastic-gradient-descent-using-adaptive-mini-batch-size3-11-320.jpg)
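The difference between batch and stochastic learning can be made concrete with a toy example. The sketch below fits a single weight `w` to noiseless data `y = 3x` and counts weight updates; the data, learning rate, epoch count, and function names are all assumptions chosen for illustration, and the result says nothing about deep networks in general.

```python
import random


def grad(w, batch):
    """Gradient of the mean squared error for the model y = w * x."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


def train(data, batch_size, epochs=5, lr=0.05, seed=0):
    """Run gradient descent and return the final weight and the update count."""
    rng = random.Random(seed)
    data = list(data)
    w, updates = 0.0, 0
    for _ in range(epochs):
        rng.shuffle(data)  # draw the mini-batches in a new random order
        for i in range(0, len(data), batch_size):
            w -= lr * grad(w, data[i:i + batch_size])
            updates += 1
    return w, updates


# Toy data: ten points on the line y = 3x (an assumption for illustration).
data = [(x / 10, 3.0 * x / 10) for x in range(1, 11)]

w_batch, n_batch = train(data, batch_size=len(data))  # batch learning
w_sgd, n_sgd = train(data, batch_size=2)              # mini-batch (stochastic) learning
```

With the same number of passes over the data, the mini-batch run performs five times as many weight updates as the full-batch run and ends closer to the true weight of 3 on this toy problem.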