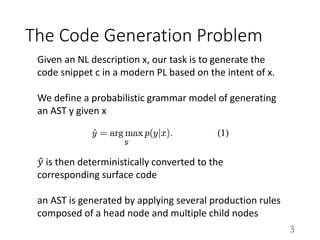

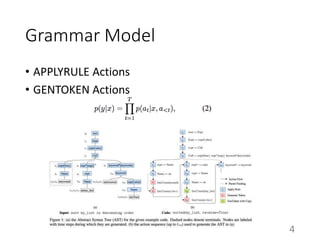

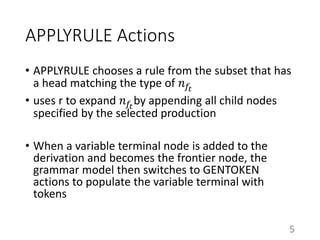

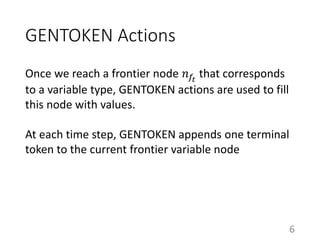

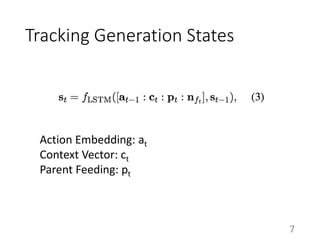

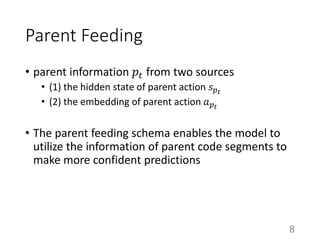

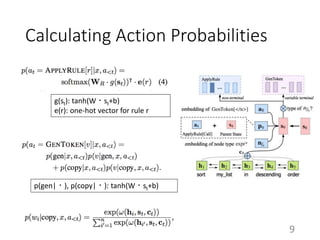

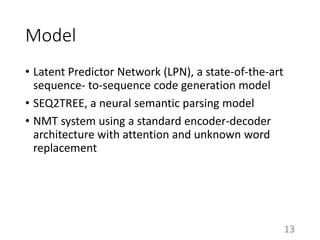

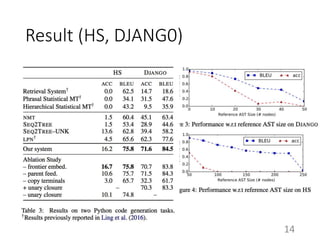

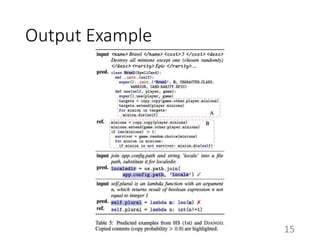

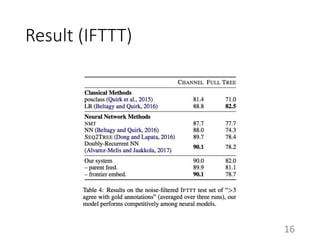

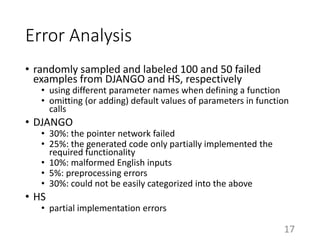

This paper proposes a neural model for code generation that builds an abstract syntax tree (AST) by sequentially applying production rules from a grammar model of the target programming language. Given a natural language description, an encoder-decoder architecture with attention predicts the sequence of actions that construct the AST. The model is evaluated on three datasets: Hearthstone card implementations, Django web framework code, and IFTTT applets. It outperforms sequence-to-sequence and semantic parsing baselines in both accuracy and BLEU score. Error analysis finds that the main errors involve either incomplete code that only partially implements the intended functionality or incorrect parameter names.
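To make the idea of building an AST through a sequence of actions concrete, here is a minimal sketch of the tree-construction step only. It is not the paper's implementation: the toy grammar in `RULES`, the action names `apply_rule` and `gen_token`, and the helpers `build_ast` and `to_code` are all hypothetical, and the action sequence is written by hand, whereas in the model it would be predicted by the decoder. The sketch also assumes the frontier is expanded depth-first, left to right.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical toy grammar (not the paper's grammar): each production rule
# maps a head node type to the ordered types of its children.
RULES = {
    "Call -> Name Args": ("Call", ["Name", "Args"]),
    "Args -> Name": ("Args", ["Name"]),
}
# Node types that are filled in by a token rather than expanded by a rule.
TERMINAL_TYPES = {"Name"}


@dataclass
class Node:
    type: str
    value: Optional[str] = None
    children: List["Node"] = field(default_factory=list)


def build_ast(root_type, actions):
    """Apply actions in order, always expanding the leftmost open node."""
    root = Node(root_type)
    stack = [root]  # open (unexpanded) nodes; the leftmost one is on top
    for kind, arg in actions:
        if not stack:
            raise ValueError("action given but the tree is already complete")
        node = stack.pop()
        if kind == "apply_rule":
            head, child_types = RULES[arg]
            if head != node.type:
                raise ValueError(f"rule {arg!r} cannot expand {node.type}")
            node.children = [Node(t) for t in child_types]
            # Push children in reverse so the leftmost child is expanded next.
            stack.extend(reversed(node.children))
        elif kind == "gen_token":
            if node.type not in TERMINAL_TYPES:
                raise ValueError(f"{node.type} cannot be filled by a token")
            node.value = arg
        else:
            raise ValueError(f"unknown action kind {kind!r}")
    if stack:
        raise ValueError("incomplete derivation: open nodes remain")
    return root


def to_code(node):
    """Render the toy AST back to source text."""
    if node.type == "Name":
        return node.value
    if node.type == "Args":
        return to_code(node.children[0])
    if node.type == "Call":
        return f"{to_code(node.children[0])}({to_code(node.children[1])})"
    raise ValueError(f"unknown node type {node.type}")


actions = [
    ("apply_rule", "Call -> Name Args"),
    ("gen_token", "sorted"),
    ("apply_rule", "Args -> Name"),
    ("gen_token", "items"),
]
tree = build_ast("Call", actions)
print(to_code(tree))
```

Because every action must match the type of the node it expands, an action sequence accepted by `build_ast` always yields a well-formed tree under the toy grammar. A sequence that stops early is rejected as an incomplete derivation in this sketch.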