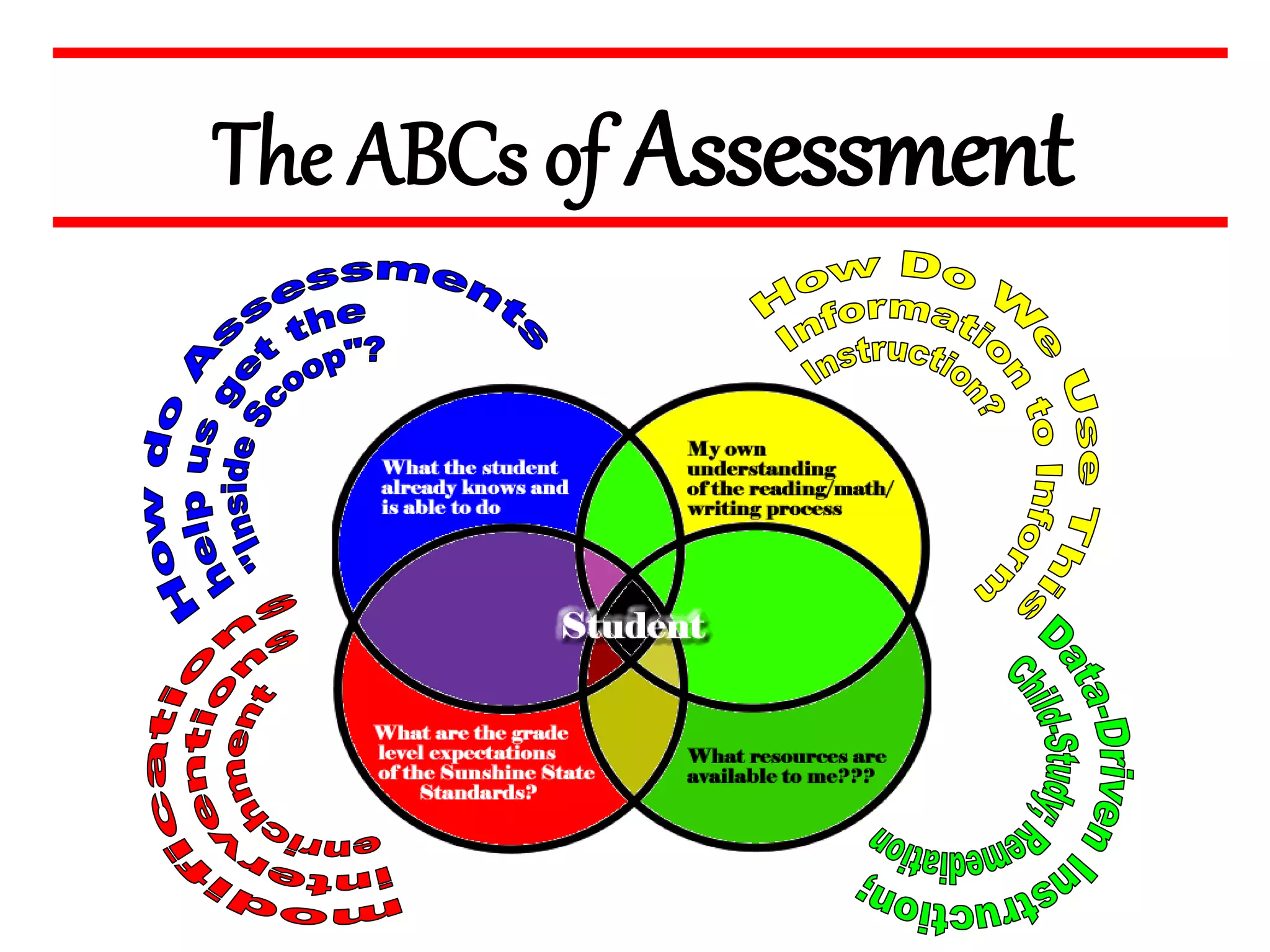

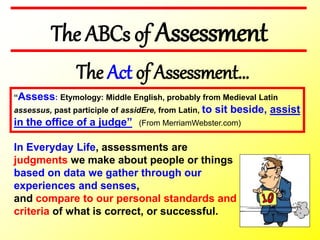

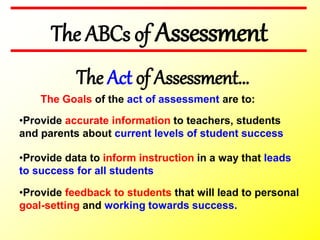

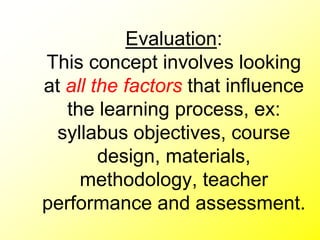

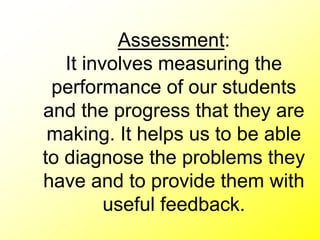

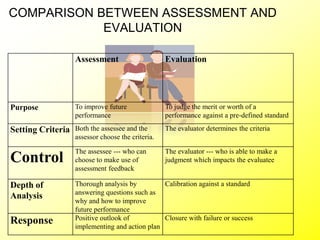

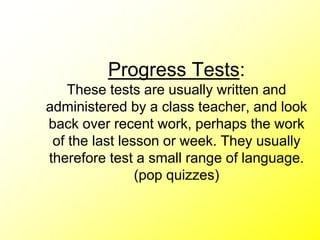

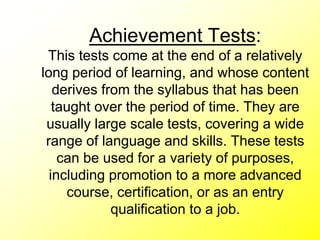

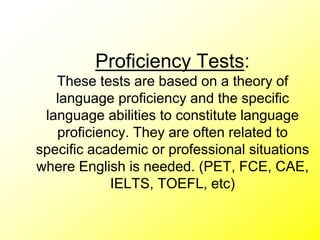

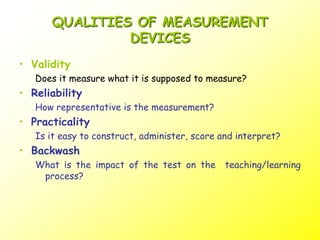

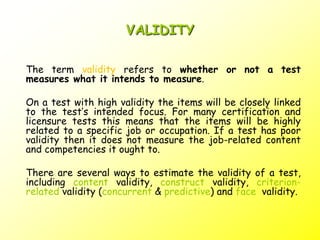

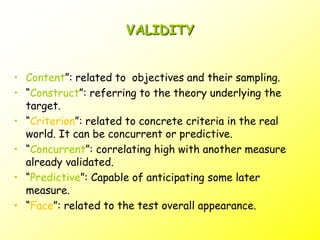

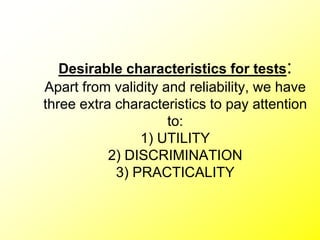

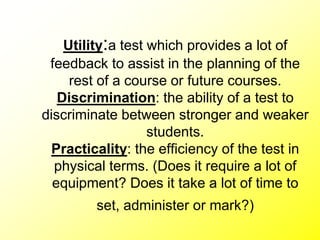

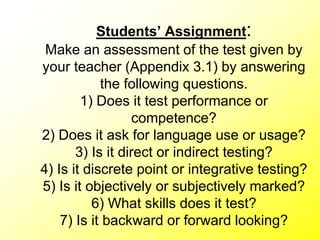

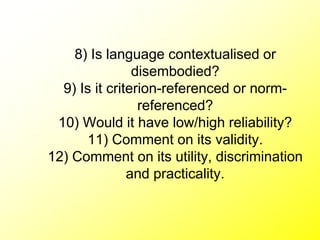

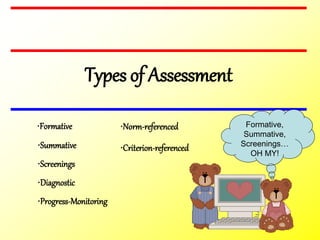

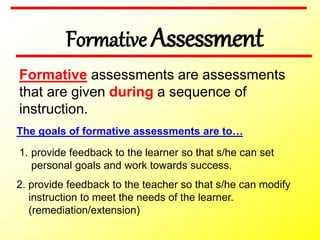

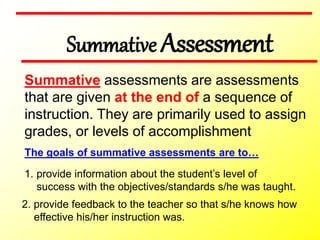

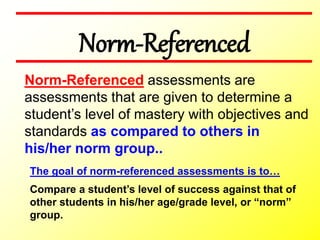

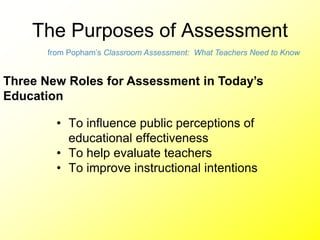

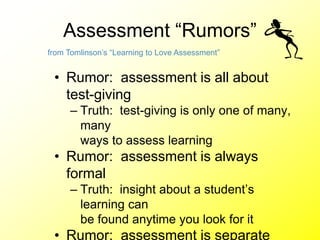

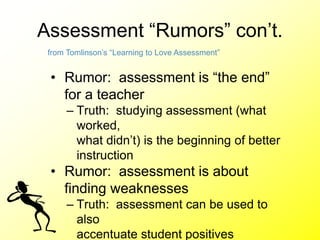

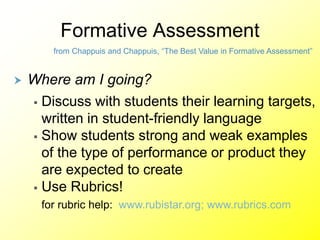

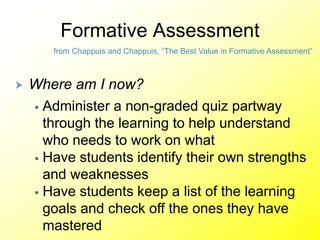

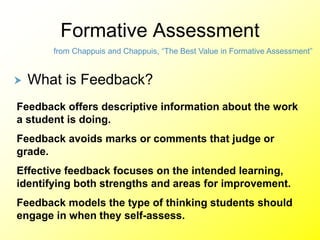

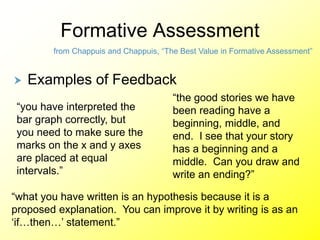

The document covers key concepts in assessment: its definition, purposes, types, and principles. It defines assessment as making judgments about students' performance by comparing data, gathered through instruments or observation, against standards. The main types discussed are informal assessment, formal assessment (testing), and self-assessment. It also identifies the goals of assessment: providing feedback, accurate information, and data to inform instruction.