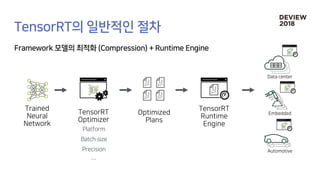

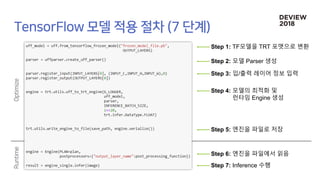

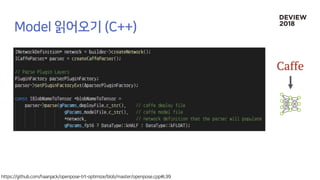

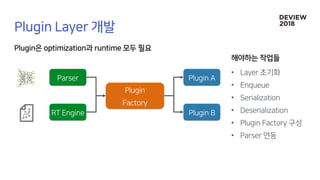

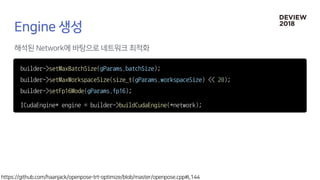

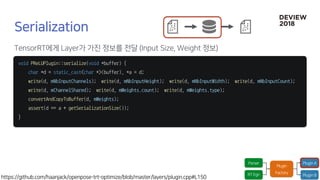

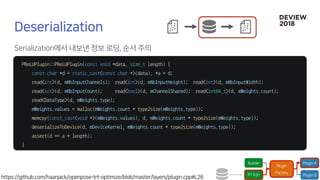

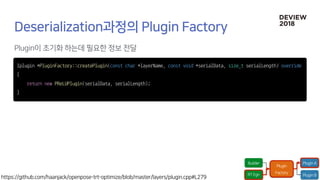

This document describes how to convert a TensorFlow model into a TensorRT engine for inference. It covers parsing the model, optimizing it, building a runtime engine, serializing and deserializing the engine, and running inference with it. It also provides C++ code snippets for a PReLU plugin implementation.

![PReLUPlugin::PReLUPlugin(const Weights *weights, int nbWeights) {
mWeights = weights[0];
mWeights.values = malloc(mWeights.count * type2size(mWeights.type));
memcpy(const_cast<void *>(mWeights.values), weights[0].values, mWeights.count * type2size(mWeights.type));
}](https://image.slidesharecdn.com/232dlinferenceoptimizationusingtensorrt1-181012004550/85/232-TensorRT-Inference-24-320.jpg)
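The constructor on the slide deep-copies the plugin weights into memory the plugin owns. The following is a minimal sketch of that pattern, not the presenter's code. `DataType` and `Weights` here are stand-ins for the TensorRT types of the same name so that the example compiles without TensorRT; `copyWeights` and `freeWeights` are hypothetical helper names; and the body of `type2size` is an assumption, since the slide only shows the call.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <cstring>

// Stand-ins for nvinfer1::DataType and nvinfer1::Weights (assumption: only the
// two element types the plugin handles are modelled here).
enum class DataType : int32_t { kFLOAT = 0, kHALF = 1 };

struct Weights {
    DataType type;       // element type of the buffer
    const void* values;  // pointer to the raw weight data
    int64_t count;       // number of elements
};

// Assumed behaviour of the type2size helper called on the slide:
// size in bytes of one element of the given type.
inline std::size_t type2size(DataType type) {
    return type == DataType::kFLOAT ? sizeof(float) : sizeof(uint16_t);
}

// Deep-copies src into a malloc-owned buffer (hypothetical helper).
// The caller releases the copy with freeWeights.
inline Weights copyWeights(const Weights& src) {
    Weights dst = src;
    const std::size_t bytes =
        static_cast<std::size_t>(src.count) * type2size(src.type);
    void* buffer = std::malloc(bytes);
    assert(buffer != nullptr || bytes == 0);
    if (bytes != 0) {
        std::memcpy(buffer, src.values, bytes);
    }
    dst.values = buffer;
    return dst;
}

// Releases a buffer obtained from copyWeights (hypothetical helper).
inline void freeWeights(Weights& w) {
    std::free(const_cast<void*>(w.values));
    w.values = nullptr;
    w.count = 0;
}
```

Copying matters because the builder may release the original weight buffer after the plugin is constructed; a matching release, for example in the plugin's destructor, avoids leaking the copy.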

![int PReLUPlugin::enqueue(int batchSize, const void *const *inputs, void **outputs, void *workspace, cudaStream_t stream) {
const float zerof{0.0f}; const __half zeroh = fp16::__float2half(0.0f);
if (mWeights.type == DataType::kFLOAT) {
CHECK(Forward_gpu<float>(batchSize * mNbInputCount, mNbInputChannels,
mNbInputHeight * mNbInputHeight, reinterpret_cast<const float *>(mDeviceKernel),
reinterpret_cast<const float *>(inputs[0]), reinterpret_cast<float *>(outputs[0]),
zerof, mChannelShared ? mNbInputChannels : 1, stream));
} else { // DataType::kHALF
}
return 0;
}](https://image.slidesharecdn.com/232dlinferenceoptimizationusingtensorrt1-181012004550/85/232-TensorRT-Inference-25-320.jpg)

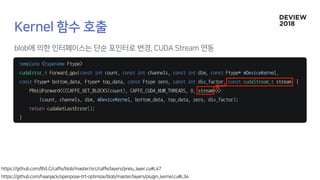

![template <typename Ftype>
__global__ void PReLUForward(const int n, const int channels, const int dim, const Ftype* slope_data,
const Ftype* in, Ftype* out, const Ftype zero, const int div_factor) {
CUDA_KERNEL_LOOP(index, n) {
int c = (index / dim) % channels / div_factor;
out[index] = (in[index] > (Ftype(zero))) ? in[index] :
in[index] * *(reinterpret_cast<const Ftype*>(slope_data)+c);
}
}](https://image.slidesharecdn.com/232dlinferenceoptimizationusingtensorrt1-181012004550/85/232-TensorRT-Inference-26-320.jpg)
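To make the kernel's index arithmetic easier to check, here is a CPU reference sketch that uses the same expression for the channel index `c` but a plain loop in place of `CUDA_KERNEL_LOOP`. The function name `prelu_reference` and the use of `std::vector<float>` are assumptions made for illustration; the sketch covers the `float` case only.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// CPU reference for the PReLUForward kernel (hypothetical helper).
// in         : flattened input, laid out as batch x channels x dim
// slope      : one slope per channel, or a single slope when shared
// channels   : number of channels per sample
// dim        : elements per channel (height * width)
// div_factor : `channels` when one slope is shared by all channels, else 1
std::vector<float> prelu_reference(const std::vector<float>& in,
                                   const std::vector<float>& slope,
                                   int channels, int dim, int div_factor) {
    assert(channels > 0 && dim > 0 && div_factor > 0);
    const std::size_t ch = static_cast<std::size_t>(channels);
    const std::size_t d = static_cast<std::size_t>(dim);
    const std::size_t div = static_cast<std::size_t>(div_factor);

    std::vector<float> out(in.size());
    for (std::size_t index = 0; index < in.size(); ++index) {
        // Same expression as the kernel: channel of this element, collapsed
        // to 0 when the slope is shared (div_factor == channels).
        const std::size_t c = (index / d) % ch / div;
        assert(c < slope.size());
        // Positive values pass through; the rest are scaled by the slope.
        out[index] = in[index] > 0.0f ? in[index] : in[index] * slope[c];
    }
    return out;
}
```

With `div_factor == 1` every channel reads its own slope; with `div_factor == channels` the integer division maps every channel to slope 0, which matches how `enqueue` passes `mChannelShared ? mNbInputChannels : 1`. A reference like this can be compared element by element against the GPU output when validating the plugin.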

![IExecutionContext* context = engine->createExecutionContext();
cudaStreamCreate(&stream);
cudaMemcpyAsync(buffers[inputIndex], input, batchSize * INPUT_SIZE * sizeof(float),
cudaMemcpyHostToDevice, stream);
context->enqueue(gParams.batchSize, &buffers[0], stream, nullptr);
cudaMemcpyAsync(output, buffers[outputIndex], batchSize * OUTPUT_SIZE * sizeof(float),
cudaMemcpyDeviceToHost, stream);
cudaStreamSynchronize(stream);](https://image.slidesharecdn.com/232dlinferenceoptimizationusingtensorrt1-181012004550/85/232-TensorRT-Inference-36-320.jpg)

![[232] Deep Learning Inference Optimization Using TensorRT](https://image.slidesharecdn.com/232dlinferenceoptimizationusingtensorrt1-181012004550/85/232-TensorRT-Inference-37-320.jpg)

![[232] Deep Learning Inference Optimization Using TensorRT](https://image.slidesharecdn.com/232dlinferenceoptimizationusingtensorrt1-181012004550/85/232-TensorRT-Inference-38-320.jpg)

![[232] Deep Learning Inference Optimization Using TensorRT](https://image.slidesharecdn.com/232dlinferenceoptimizationusingtensorrt1-181012004550/85/232-TensorRT-Inference-39-320.jpg)

![[232] Deep Learning Inference Optimization Using TensorRT](https://image.slidesharecdn.com/232dlinferenceoptimizationusingtensorrt1-181012004550/85/232-TensorRT-Inference-40-320.jpg)

![[232] Deep Learning Inference Optimization Using TensorRT](https://image.slidesharecdn.com/232dlinferenceoptimizationusingtensorrt1-181012004550/85/232-TensorRT-Inference-41-320.jpg)

![[232] Deep Learning Inference Optimization Using TensorRT](https://image.slidesharecdn.com/232dlinferenceoptimizationusingtensorrt1-181012004550/85/232-TensorRT-Inference-42-320.jpg)