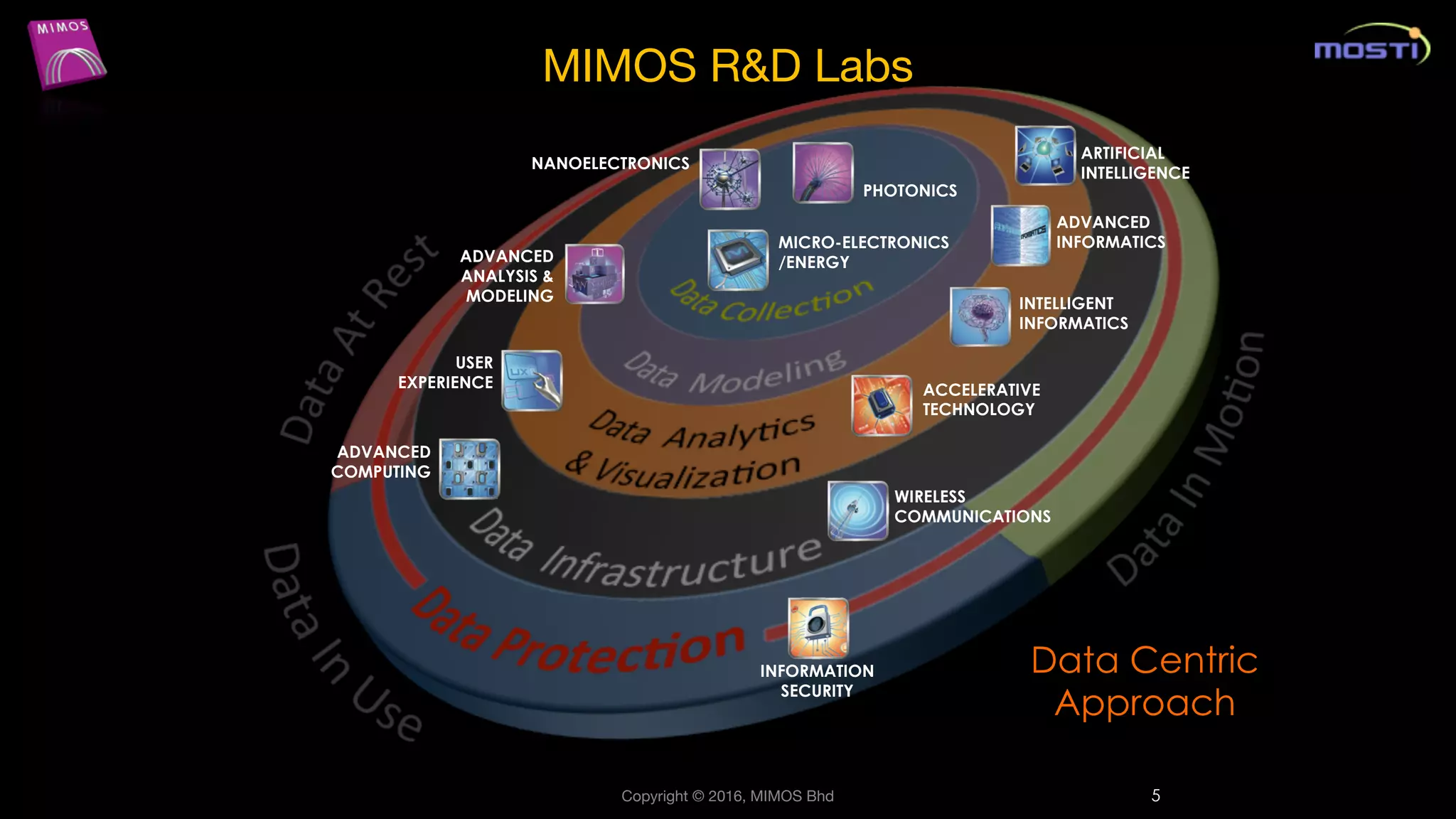

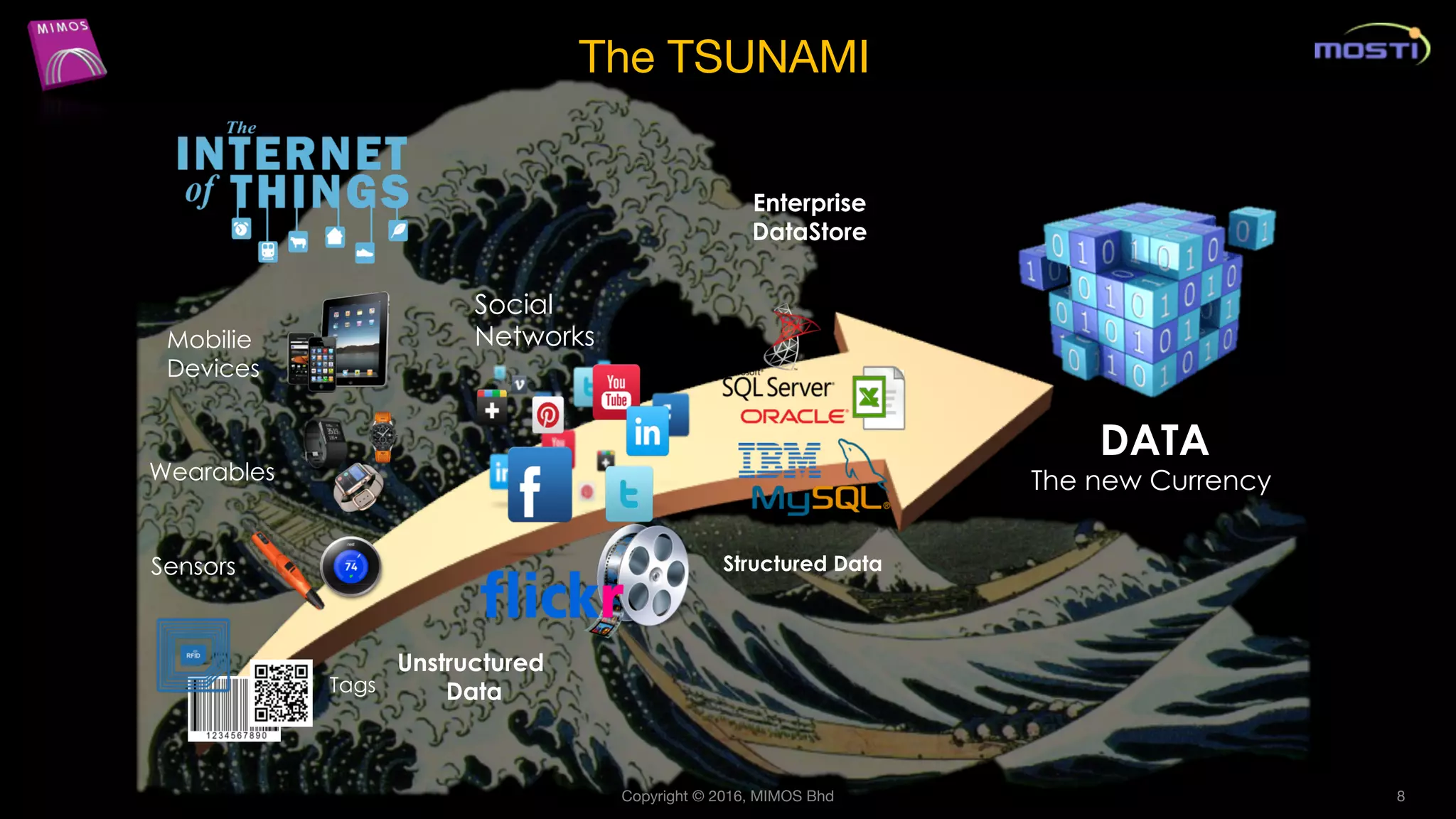

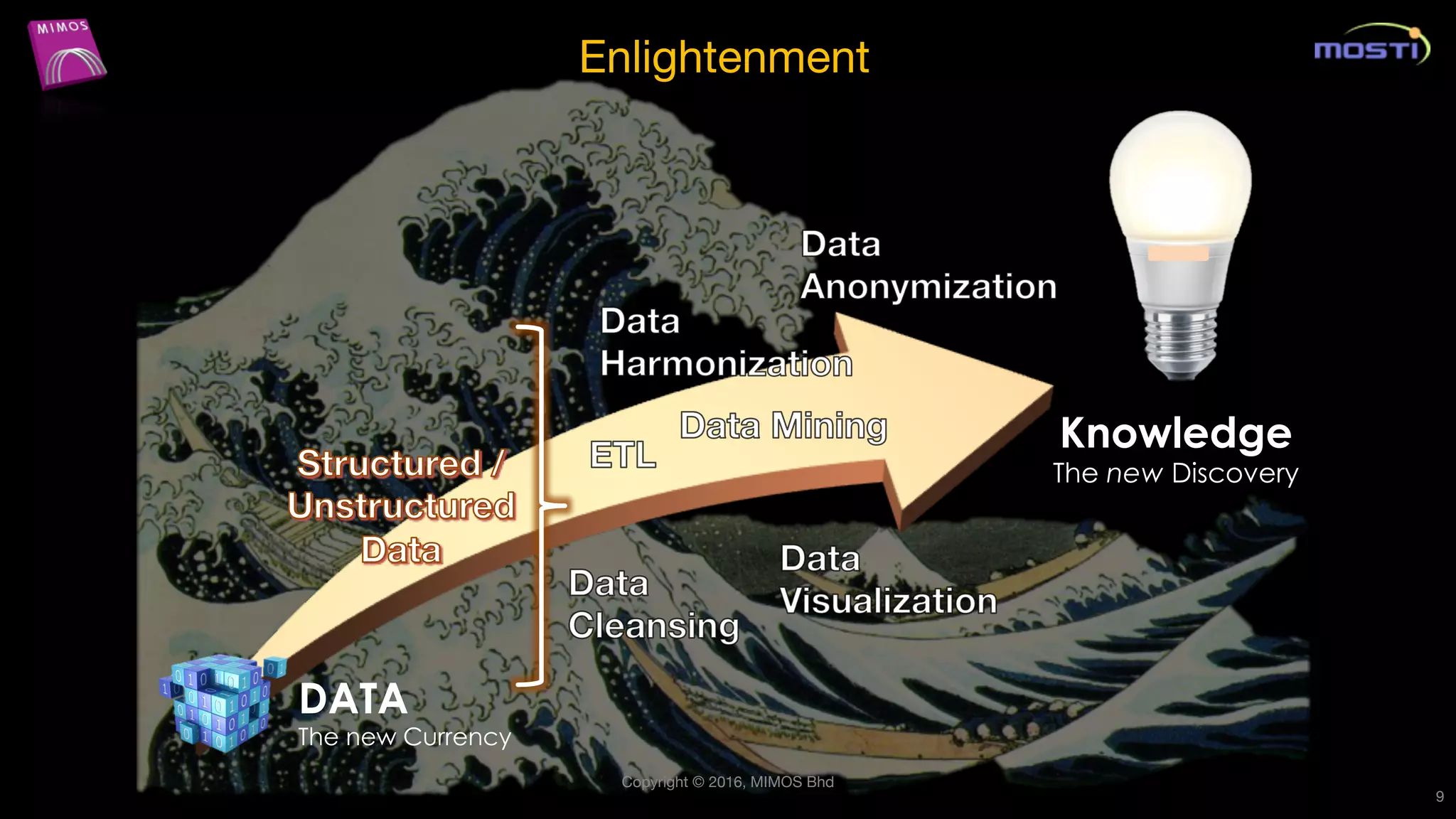

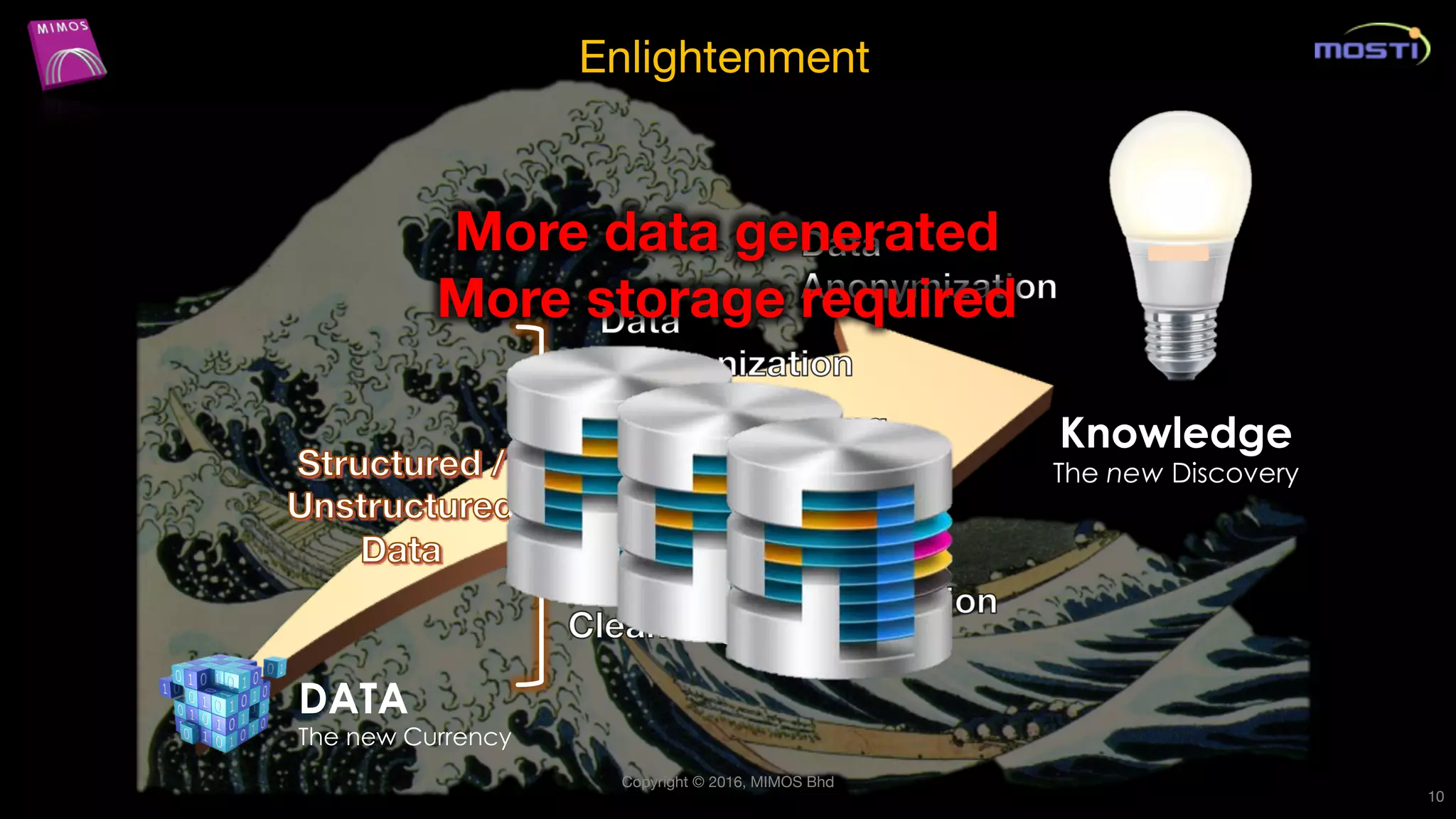

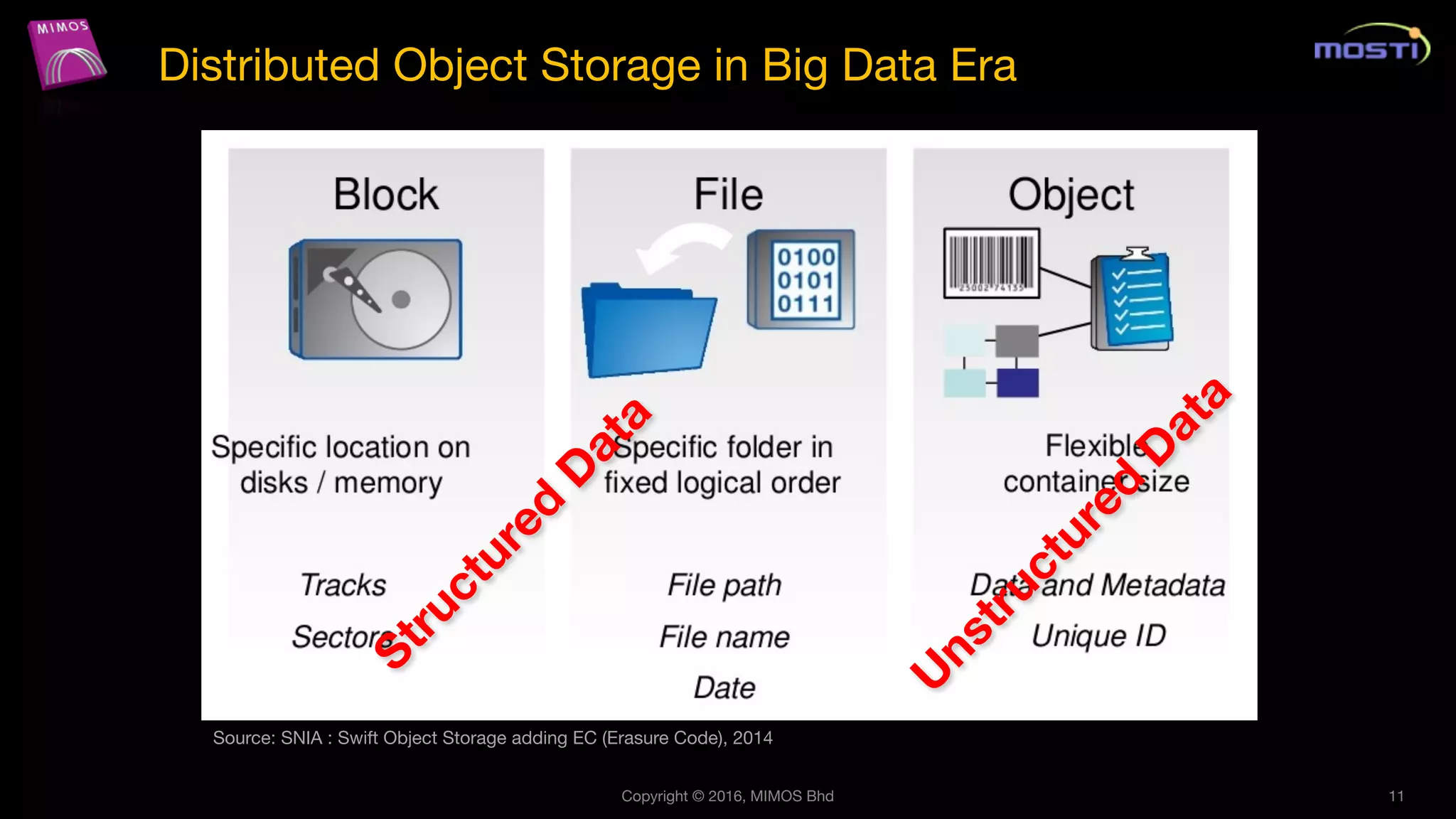

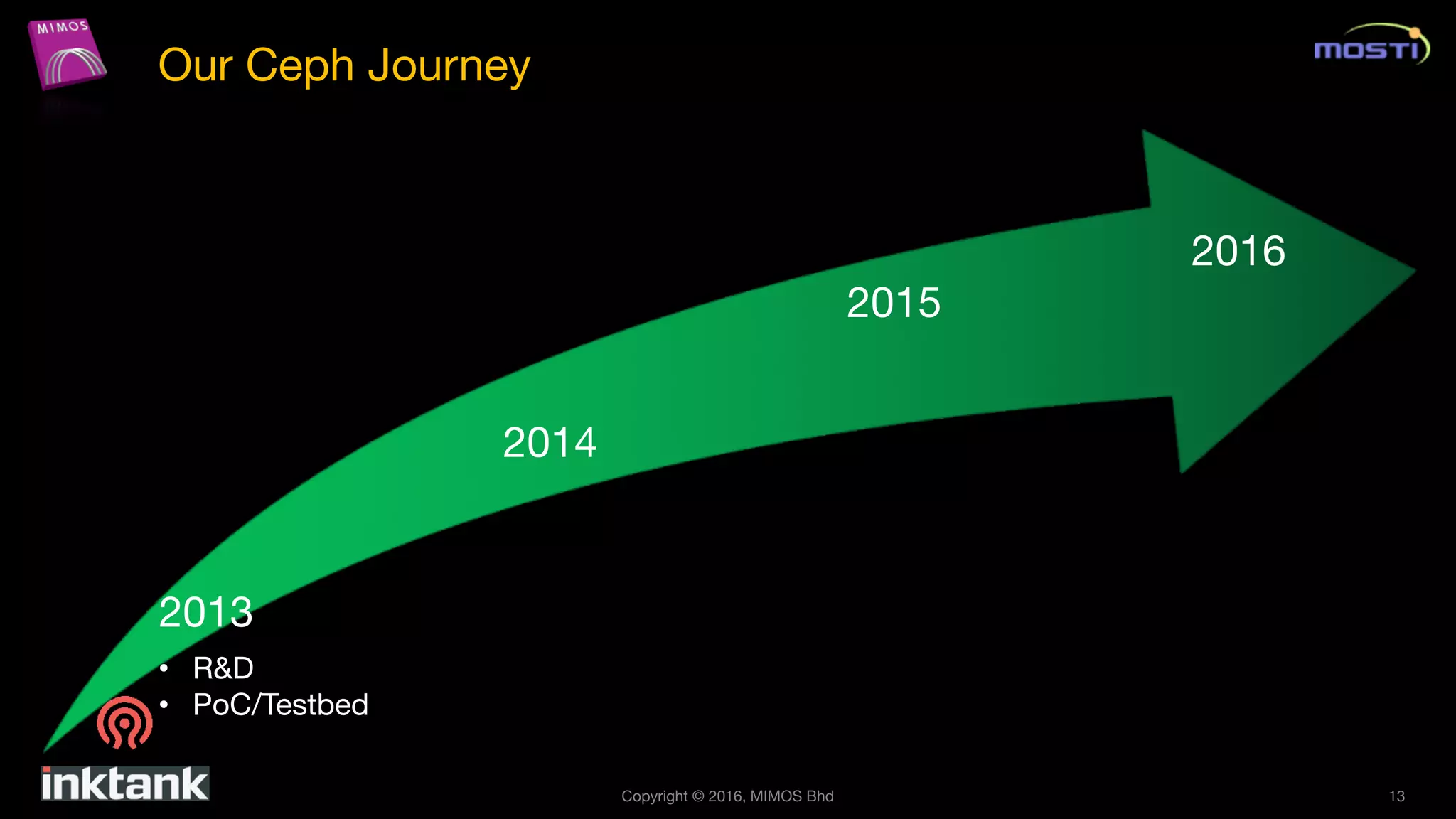

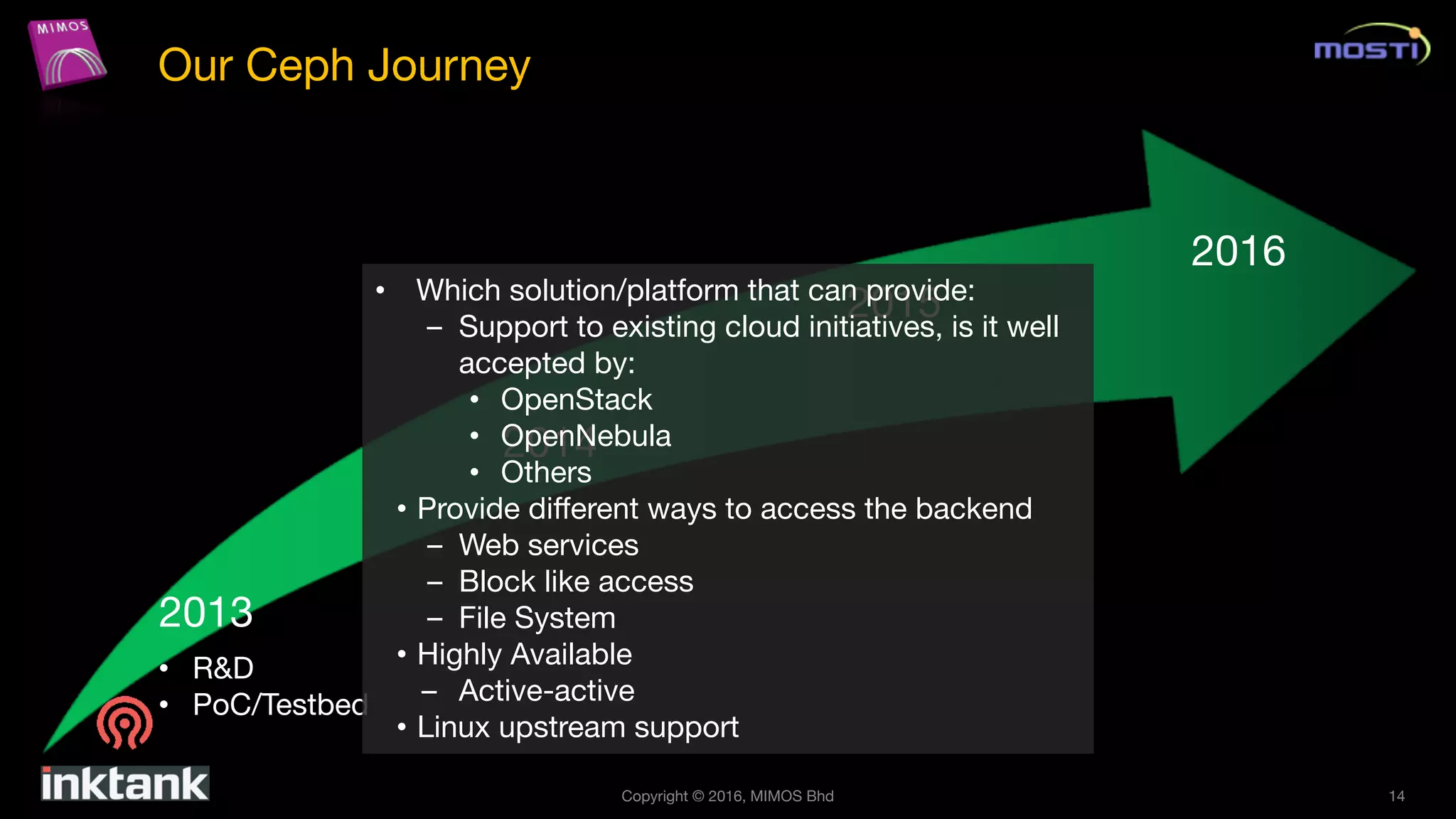

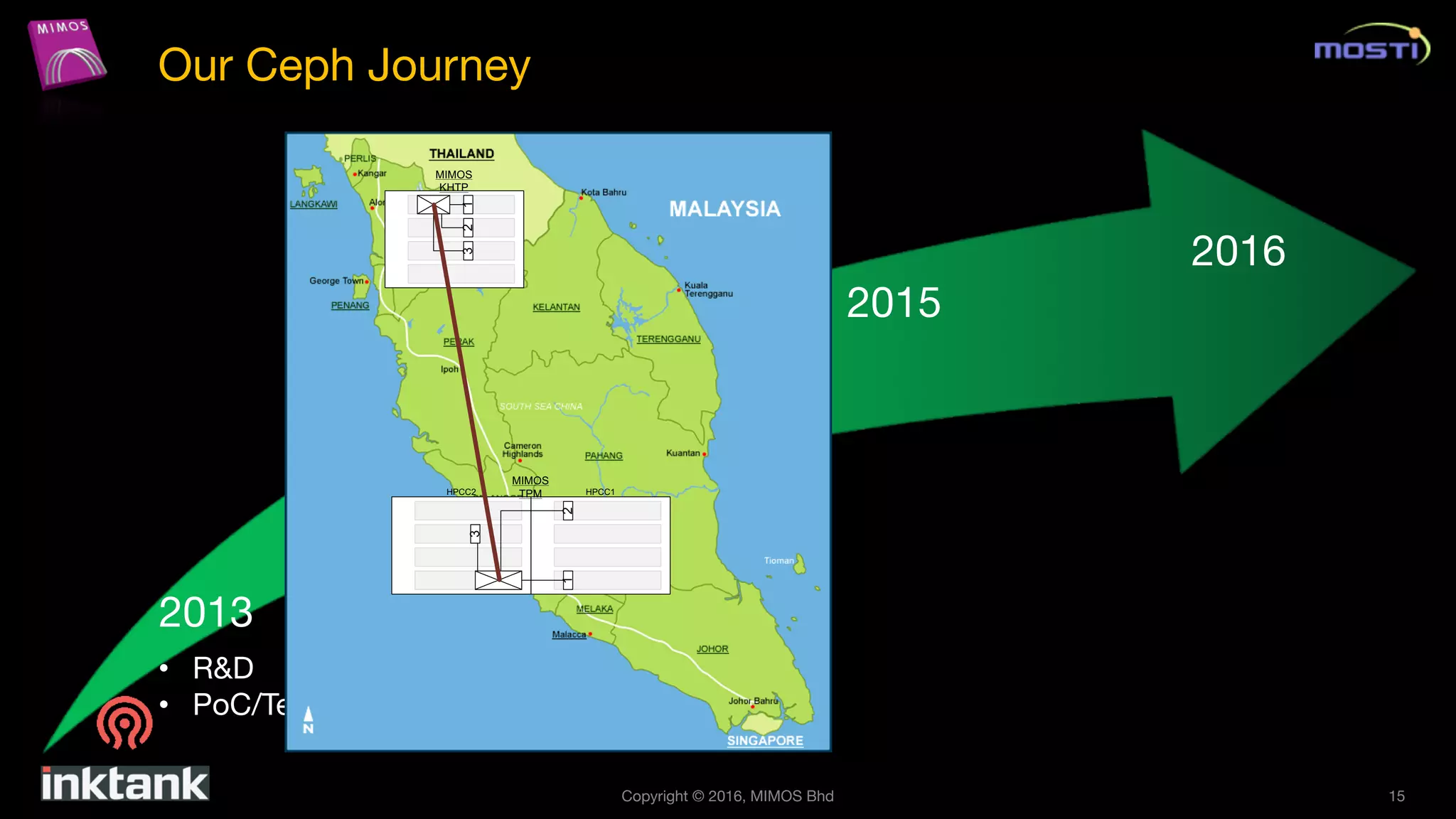

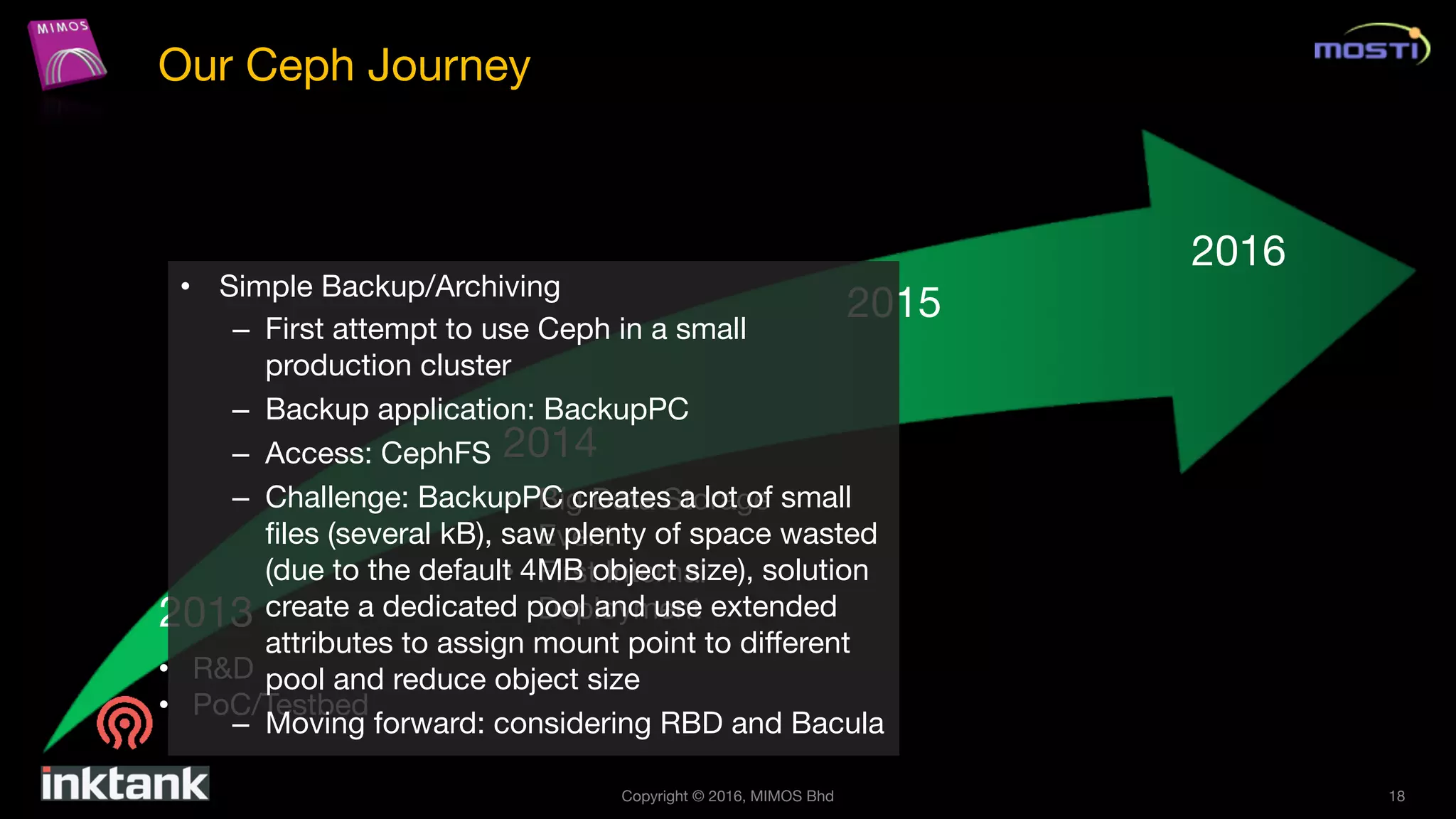

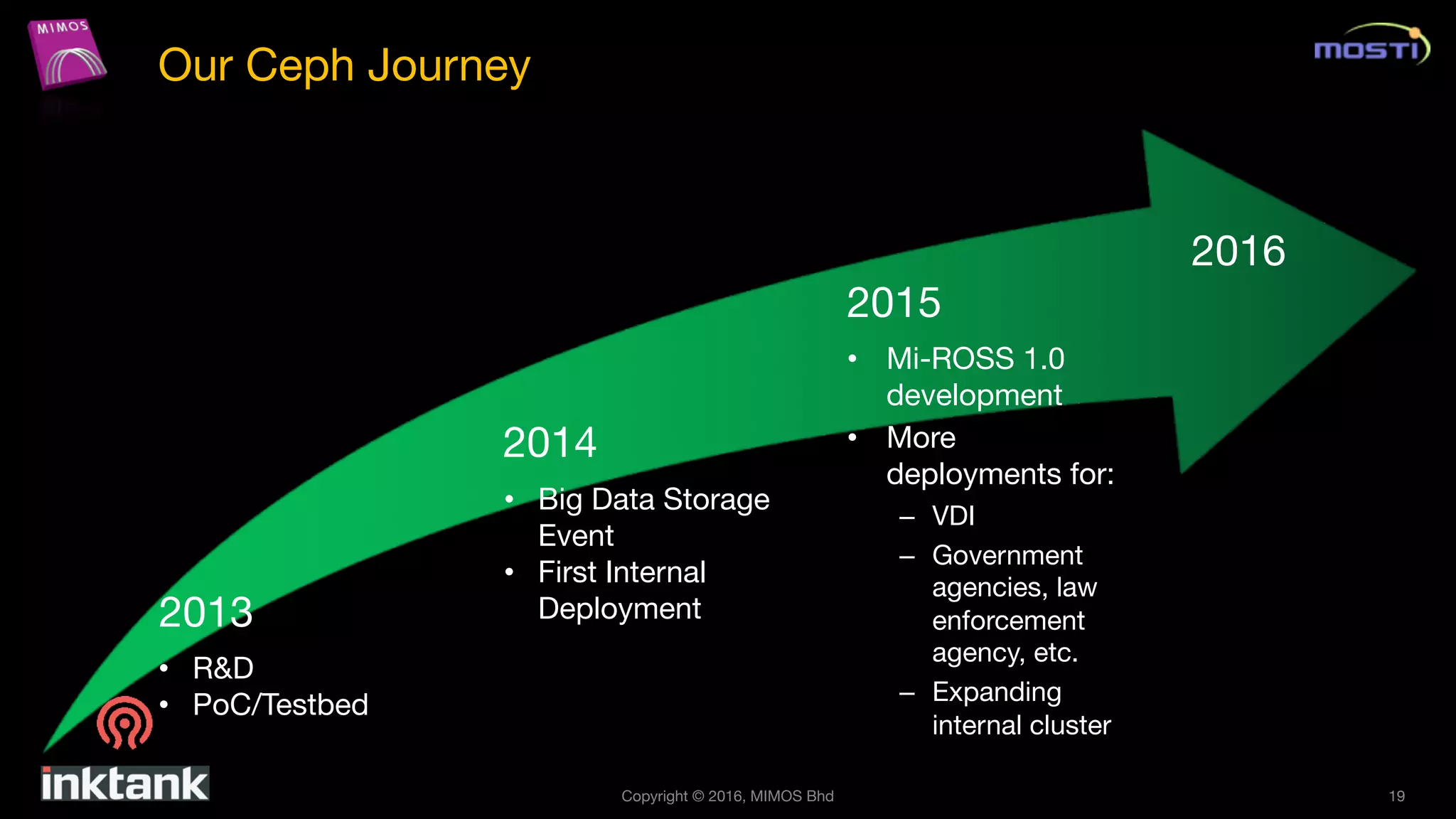

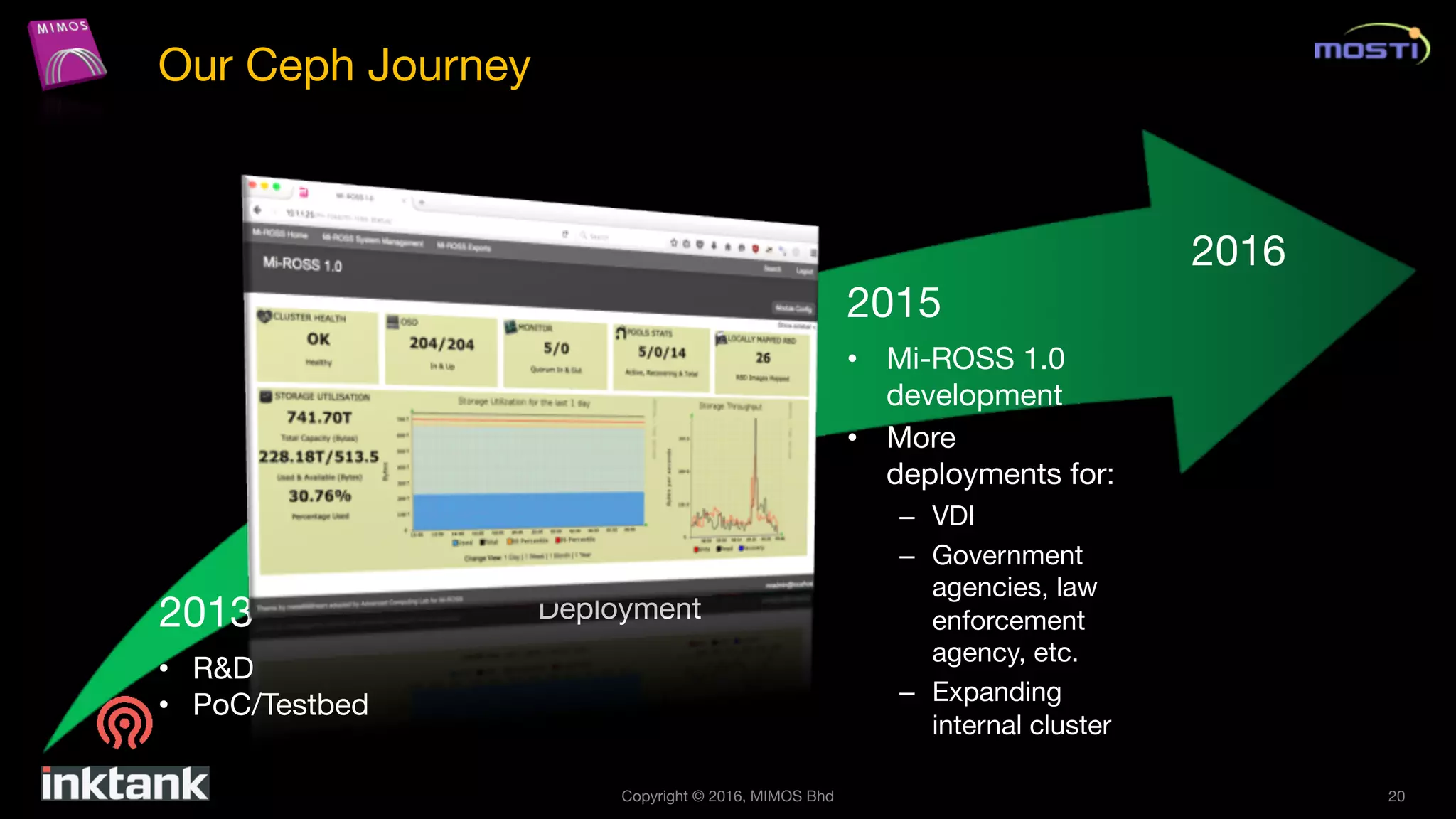

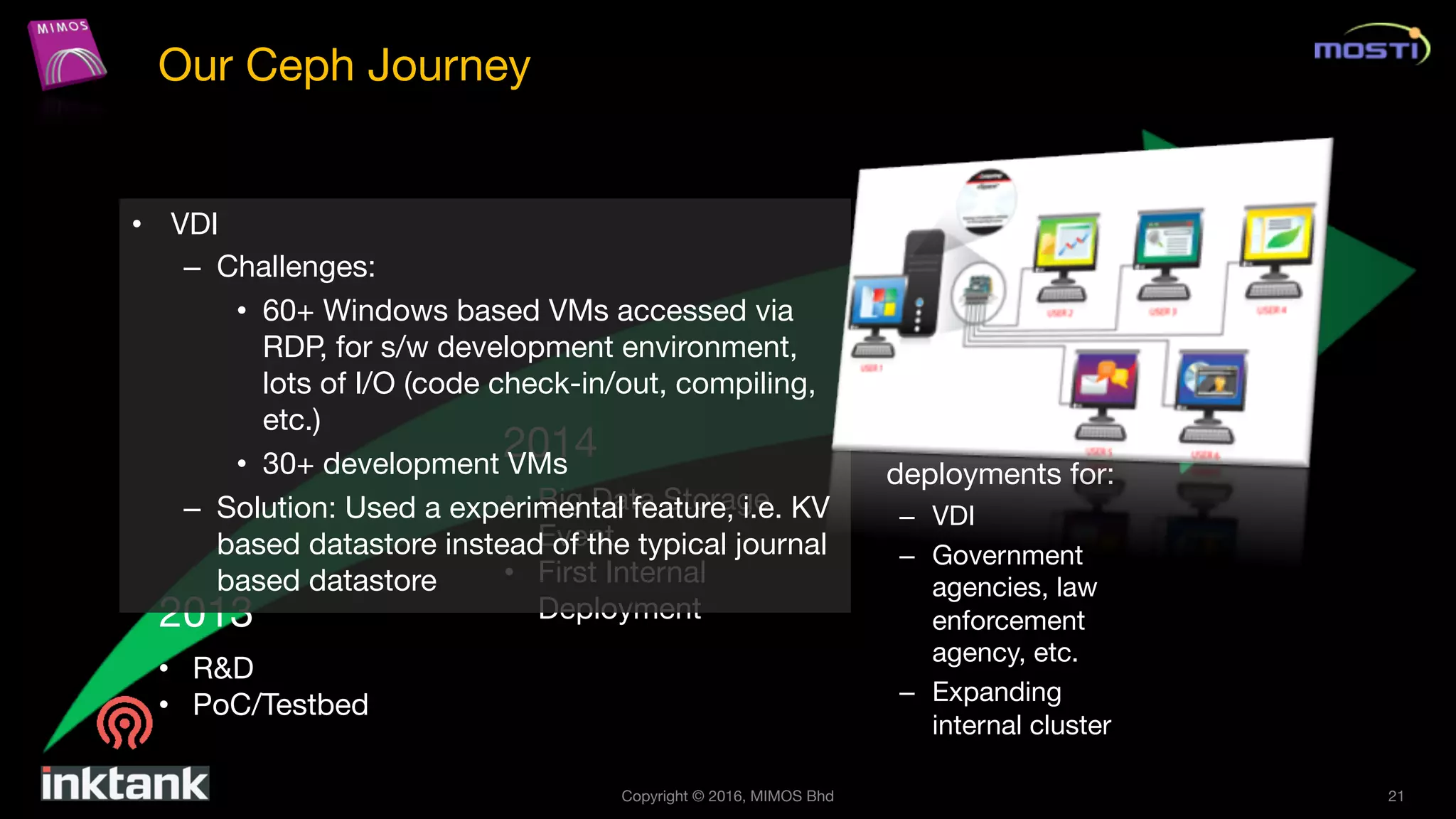

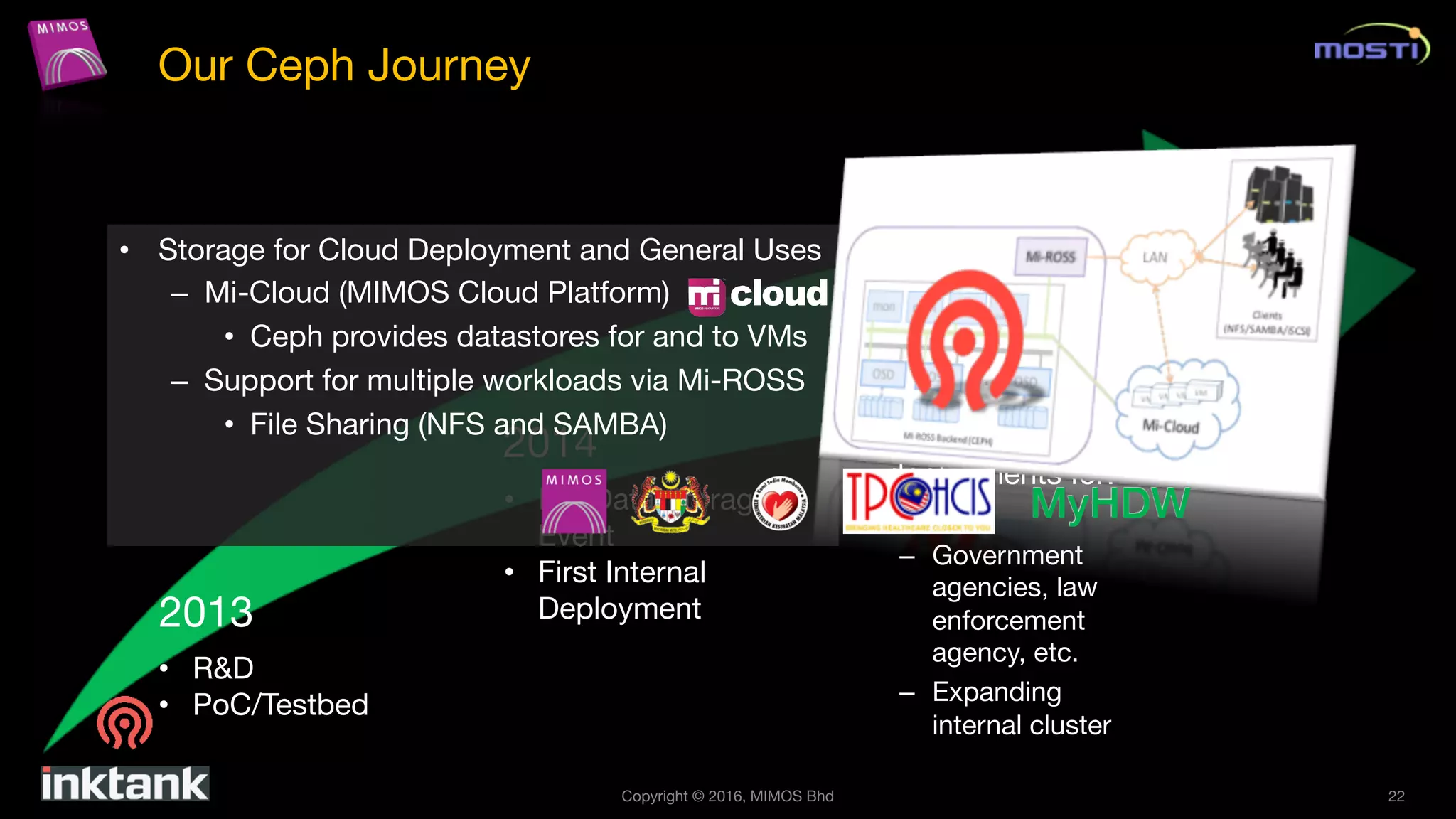

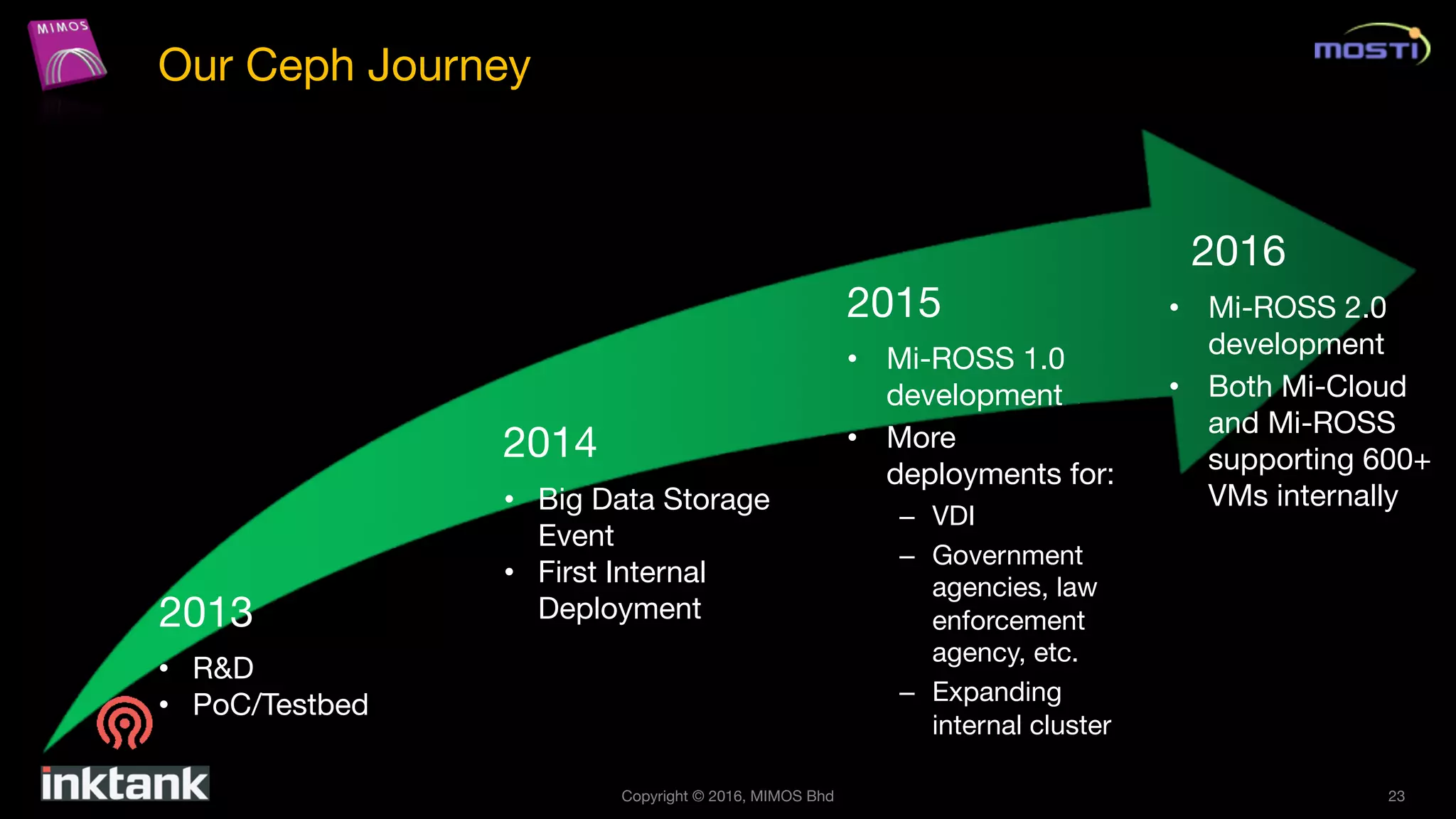

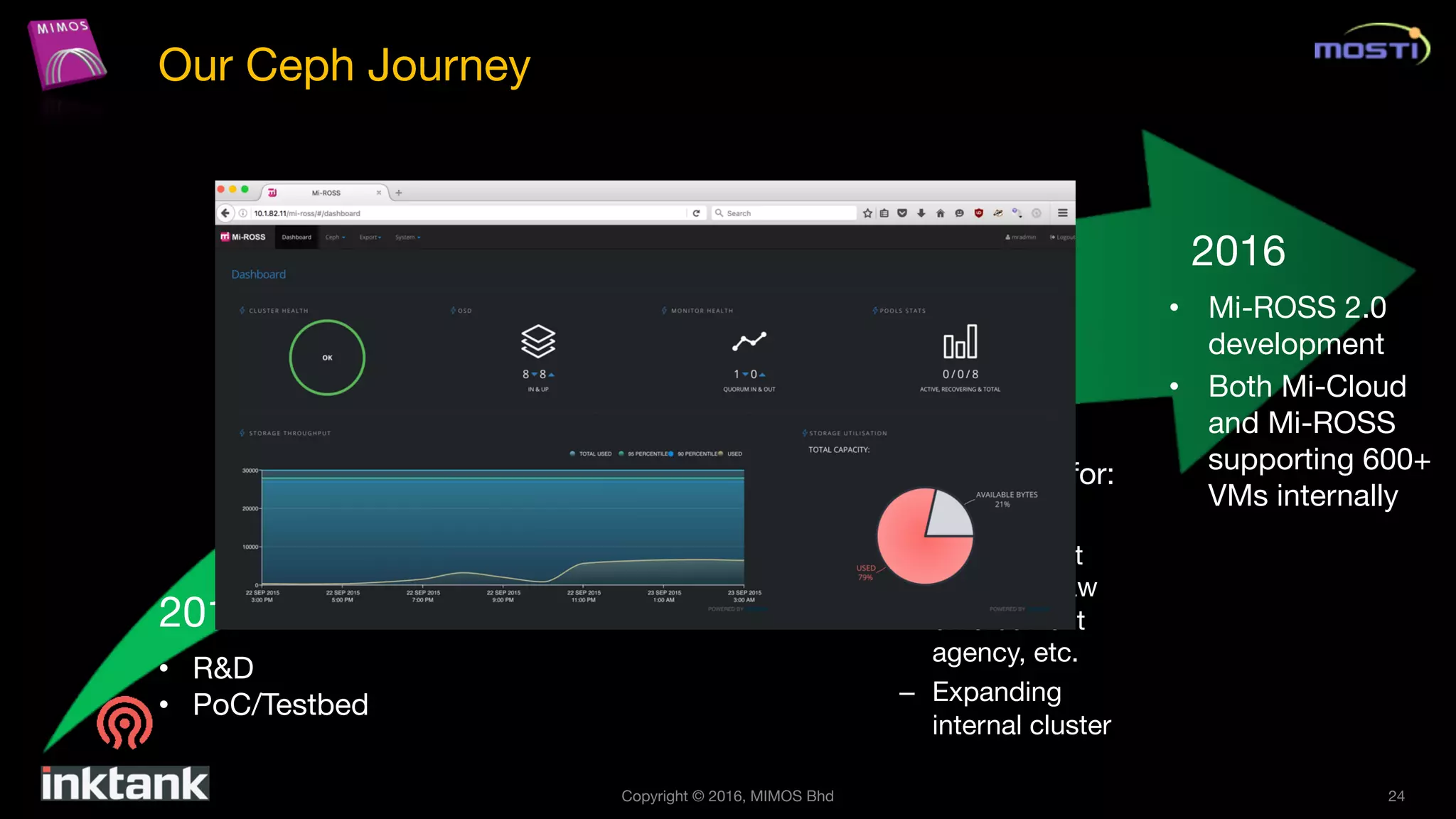

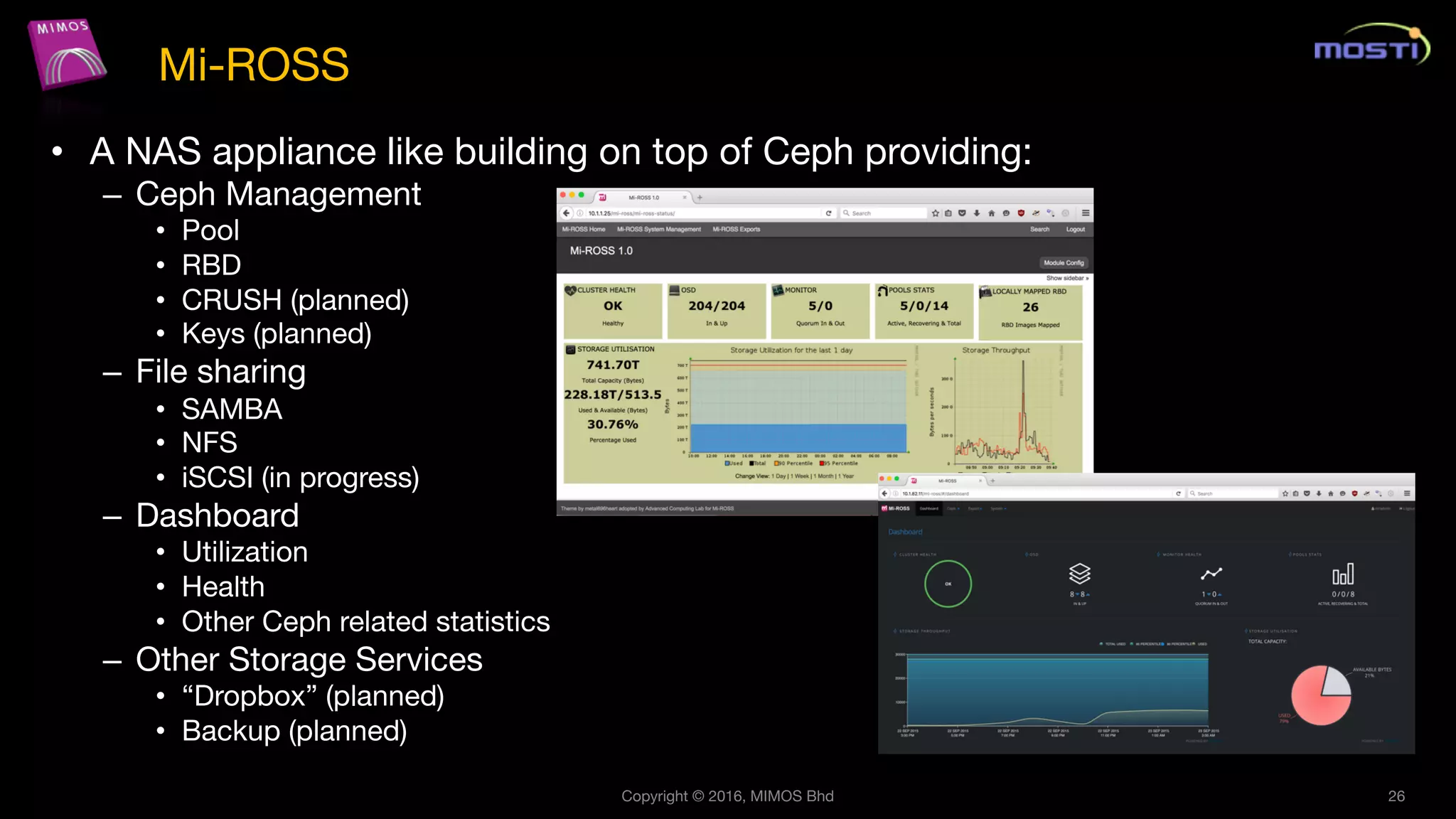

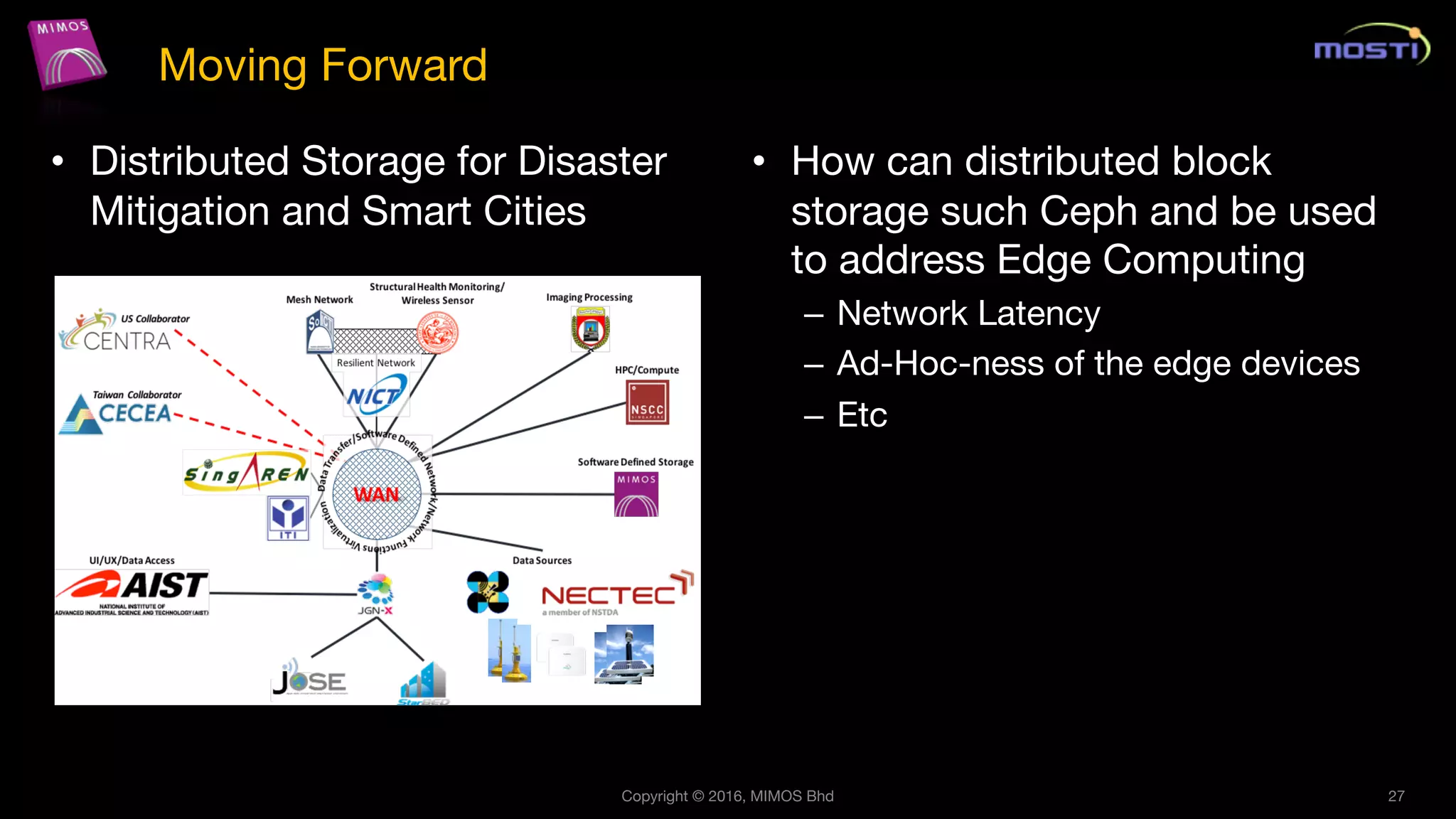

The document outlines MIMOS's journey in implementing Ceph for distributed object storage from 2013 to 2016, covering the development work, internal deployments, and lessons learned. It highlights the challenges encountered, such as performance impact and storage management, and sets out future directions for meeting the demands of big data and IoT. MIMOS invites collaboration to advance open solutions in this area.