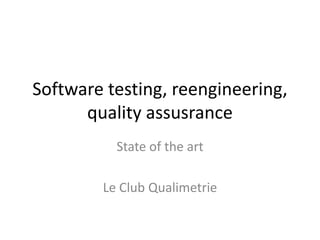

The document discusses cyclomatic complexity, a software metric that measures the number of linearly independent paths through a program's source code. It gives definitions and formulas for calculating complexity, describes the properties a complexity metric should have, and covers applications of complexity metrics such as testing, design validation, and security. The key points are the definition of cyclomatic complexity, methods for computing it, its relationship to testing effort, and how complexity affects reliability.
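As a minimal sketch of one way to compute the metric, the function below applies McCabe's formula v(G) = E - N + 2P to a control-flow graph. Here E is the number of edges, N the number of nodes, and P the number of connected components (one per module). The graph representation (a list of directed edges plus a node list) and the name `cyclomatic_complexity` are assumptions for illustration.

```python
def cyclomatic_complexity(edges, nodes):
    """Return v(G) = E - N + 2P for a control-flow graph.

    edges: iterable of (source, target) pairs
    nodes: iterable of all node labels, including isolated nodes
    """
    nodes = list(nodes)
    edges = list(edges)
    # Union-find over the nodes to count connected components P
    # (edge direction is ignored for connectivity).
    parent = {n: n for n in nodes}

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    for u, v in edges:
        parent[find(u)] = find(v)

    components = len({find(n) for n in nodes})
    return len(edges) - len(nodes) + 2 * components


# Flow graph for:  if a: x() else: y()   followed by   while b: z()
# Nodes: 1 = "if a", 2 = x(), 3 = y(), 4 = "while b", 5 = z(), 6 = exit
edges = [(1, 2), (1, 3), (2, 4), (3, 4), (4, 5), (5, 4), (4, 6)]
print(cyclomatic_complexity(edges, [1, 2, 3, 4, 5, 6]))  # 3
```

For single-entry, single-exit structured code, the result equals the number of binary decisions plus one. The example has two decisions (`if a`, `while b`), giving 7 - 6 + 2 = 3.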

![Google book search – McCabe Complexity – 826 books](https://image.slidesharecdn.com/02-softwaretestingmccabe-171109091338/85/20100309-02-Software-testing-McCabe-14-320.jpg)

Google book search – McCabe Complexity – 826 books, including:

- Encyclopedia of Microcomputers
- Introduction to the Team Software Process
- Network Analysis, Architecture and Design
- Separating Data from Instructions: Investigating a New Programming

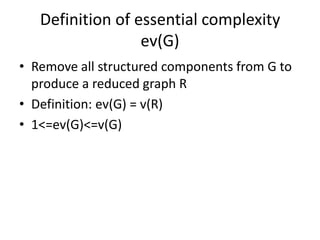

![Complexity Metrics Impacting Reliability - Module](https://image.slidesharecdn.com/02-softwaretestingmccabe-171109091338/85/20100309-02-Software-testing-McCabe-23-320.jpg)

III. Development of Reliable Software - Code Structure: Complexity Metrics Impacting Reliability - Module

- Module Design Complexity, iv(g) [integration]
  - measures the logical paths that contain calls to (invocations of) immediate subordinate modules, i.e., the paths that matter for integration
  - calculated by reducing the flow graph after removing decisions/nodes that make no calls
  - values should be higher for modules higher in the hierarchy, that is, for management modules
- Paths Containing Global Data, gdv(g)
  - global data also affects reliability because it creates relationships between modules
- Branches, which are related to v(g)
- Maximum Nesting Level
- Others

v(g) = 10, ev(g) = 3, iv(g) = 3
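The reduction described for iv(g) can be sketched as follows. This is a simplified, hypothetical implementation and not McCabe's reference algorithm. It assumes three reduction rules:

- drop self-loops on nodes that make no calls;
- collapse parallel edges between the same pair of nodes;
- splice out any non-call node that has exactly one incoming and one outgoing edge.

iv(g) is then taken as v(G) of the reduced graph. The names `design_complexity` and `cyclomatic` are illustrative.

```python
def cyclomatic(edges, nodes):
    # v(G) = E - N + 2 for a single connected flow graph
    return len(edges) - len(nodes) + 2


def design_complexity(edges, calls, entry, exit_):
    """Simplified iv(g): reduce the flow graph, then return v(G) of the result.

    Hypothetical reduction rules (an approximation, not a reference algorithm):
      1. drop self-loops on nodes that make no calls,
      2. collapse parallel edges between the same pair of nodes,
      3. splice out a non-call node with exactly one in-edge and one out-edge.
    Call nodes, entry and exit are never removed. Node labels must be sortable.
    """
    edges = list(edges)
    protected = set(calls) | {entry, exit_}
    changed = True
    while changed:
        changed = False
        # Rules 1 and 2: remove call-free self-loops, then duplicate edges.
        kept = [(u, v) for (u, v) in edges if not (u == v and u not in calls)]
        kept = list(dict.fromkeys(kept))
        if len(kept) != len(edges):
            edges, changed = kept, True
        # Rule 3: splice out one removable node per pass.
        for node in sorted({n for e in edges for n in e} - protected):
            ins = [e for e in edges if e[1] == node]
            outs = [e for e in edges if e[0] == node]
            if len(ins) == 1 and len(outs) == 1:
                edges.remove(ins[0])
                edges.remove(outs[0])
                edges.append((ins[0][0], outs[0][1]))
                changed = True
                break
    nodes = {entry, exit_} | {n for e in edges for n in e}
    return cyclomatic(edges, nodes)


# Decision chooses whether call_a runs: the decision survives reduction.
g1 = [("entry", "d1"), ("d1", "call_a"), ("d1", "n2"),
      ("call_a", "join"), ("n2", "join"), ("join", "exit")]
print(cyclomatic(g1, {n for e in g1 for n in e}))          # 2
print(design_complexity(g1, {"call_a"}, "entry", "exit"))  # 2

# Decision only picks between call-free branches: it reduces away.
g2 = [("entry", "d1"), ("d1", "a"), ("d1", "b"), ("a", "join"),
      ("b", "join"), ("join", "call_x"), ("call_x", "exit")]
print(cyclomatic(g2, {n for e in g2 for n in e}))          # 2
print(design_complexity(g2, {"call_x"}, "entry", "exit"))  # 1
```

Both example graphs have v(g) = 2, but only the first keeps that value after reduction. In the second, the decision does not change which subordinate modules are invoked, so it drops out and iv(g) = 1. This mirrors why iv(g) is used to size integration testing separately from v(g).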