leewayhertz.com-What role do embeddings play in a ChatGPT-like model.pdf

What role do embeddings play in a ChatGPT-like model?

April 21, 2023 | leewayhertz.com/what-is-embedding

Machine learning is a subset of artificial intelligence that enables computers to learn from data and improve their performance over time without being explicitly programmed. It is a vast field in which many technical components come together to build solutions and applications, and among those components embeddings hold a crucial place.

Embeddings are mathematical representations of data that capture meaningful relationships between entities. They have become an indispensable part of a data scientist’s toolkit and have dramatically changed how natural language processing (NLP), computer vision, and recommender systems work. Despite their widespread use, many data scientists find embeddings arcane and confusing, and many more use them blindly without understanding what they are. However, understanding embeddings is crucial for anyone working in machine learning, especially in NLP. According to a recent report by OpenAI, language models like GPT-3 that utilize embeddings can perform a wide range of natural language tasks, including language translation, question answering, and language generation.

In this article, we will dive deep into what embeddings are, how they work, and how they are operationalized in real-world systems. We will explore the different types of embeddings, including text and image embeddings, and examine popular embedding models like Word2Vec, PCA, SVD and BERT. We will also discuss the role of embeddings in ChatGPT-like models, which are some of the most advanced language models available today. By the end of this article, you will better understand the importance of embeddings in machine learning and their impact on natural language processing.

What are embeddings?

[Figure: recommender model diagram. Labels: user movies (subset used as input features), sparse vector encoding, other features (optional), 3-dimensional embedding, logit layer, softmax loss, user movies (subset to use as “labels”), target probability distribution (sparse). Source: LeewayHertz]

An embedding is a way of representing complex information, like text or images, using a set of numbers. It works by translating high-dimensional vectors into a lower-dimensional space, making it easier to work with large inputs. The process captures the semantic meaning of the input and places similar inputs close together in the embedding space, which allows the information to be compared and analyzed easily.

One of the main benefits of embeddings is that they can be learned once and reused across models. An embedding created for one purpose can be applied to other applications, like text classification, sentiment analysis, or image recognition.

For instance, the sentence “What is the main benefit of voting?” can be represented in a vector space as a set of numbers. These numbers represent the meaning of the sentence and can be used to compare it to other sentences. By calculating the distance between different embeddings, we can determine how similar the meaning of two sentences is.
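The comparison described above can be sketched in a few lines of Python. This is a minimal illustration, assuming cosine similarity as the measure; the three-dimensional vectors and the variable names are made up for the example and do not come from any real embedding model, which would typically produce hundreds of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical sentence embeddings (illustrative values only).
voting_benefit = [0.9, 0.1, 0.3]  # "What is the main benefit of voting?"
why_vote = [0.8, 0.2, 0.4]        # "Why should people vote?"
pizza_recipe = [0.1, 0.9, 0.2]    # "How do I make pizza?"

sim_related = cosine_similarity(voting_benefit, why_vote)
sim_unrelated = cosine_similarity(voting_benefit, pizza_recipe)

# The two voting sentences score higher than the voting/pizza pair.
print(sim_related > sim_unrelated)
```

With these toy values, the two voting-related sentences end up much closer to each other than either is to the pizza sentence, which is the behavior a real embedding model is trained to produce.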

Embeddings are not limited to text. They can also represent images as a list of numbers that describe the image’s features. When the image and text models share the same embedding space, an image embedding can be compared to a text embedding to determine whether a sentence accurately describes the image.

Embeddings are especially useful when combined with collaborative filtering to create recommendation systems. Representing data as embeddings simplifies training and prediction while also improving model performance. Item similarity use cases in particular have benefited greatly, leading to more effective and accurate recommendation systems.

Embeddings are used across data-driven industries because they represent high-dimensional data in a lower-dimensional space while still capturing the underlying relationships between data points. Their ability to represent complex data structures efficiently in a simplified format has made them a critical component of modern machine learning, with applications in natural language processing, image recognition, and recommender systems.

How are embeddings stored and accessed?

Embeddings represent data in machine learning applications, but how are they stored and accessed? This is where the concept of a vector store comes into play. A vector store is a type of data structure that allows embeddings to be stored and accessed efficiently. By storing embeddings as vectors, we can perform vector operations such as cosine similarity and vector addition, which are useful in many machine learning tasks. In natural language processing, for example, we can use vector stores to represent words as embeddings and perform tasks such as measuring semantic similarity and solving word analogies.
To understand how vector stores work, it helps to first consider how embeddings are created. Before we can create embeddings from text, we need to process the text to prepare it for modeling. One common technique is dividing the text into smaller pieces called chunks. These chunks can be sentences, paragraphs, or even smaller units such as words.

Once the text has been divided into chunks, we can create embeddings for each chunk using word embedding models such as Word2Vec, GloVe, or FastText. These models learn to map words to dense vectors, typically with a few hundred dimensions, based on their co-occurrence patterns in large text corpora. For example, words that appear in similar contexts (e.g., “king” and “queen”) are assigned similar vectors, while words with unrelated meanings (e.g., “king” and “car”) are assigned dissimilar vectors. These embeddings capture the semantic and syntactic properties of the text in each chunk, which can be useful in various machine learning tasks.

Chunks are particularly useful in tasks such as sentiment analysis or named entity recognition, where we want to classify individual parts of a text rather than the entire text. By processing the text in chunks, we can obtain more fine-grained information about the text and improve the accuracy of our models.
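As a rough illustration of the chunking step described above, the sketch below splits text either into sentences or into fixed-size word windows. Both function names are hypothetical, and the sentence splitter is deliberately naive (it only looks for `.`, `!` or `?` followed by whitespace); production pipelines usually rely on a proper tokenizer.

```python
import re


def split_into_sentences(text):
    """Naive sentence chunking: split after '.', '!' or '?' followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [part for part in parts if part]


def split_into_word_windows(text, size, overlap=0):
    """Chunks of `size` words; consecutive chunks share `overlap` words."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("need size > 0 and 0 <= overlap < size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # the current window already reached the end of the text
    return chunks


sentences = split_into_sentences(
    "Embeddings are vectors. They capture meaning! Do they help?"
)
windows = split_into_word_windows("a b c d e", size=3, overlap=1)
print(sentences)
print(windows)
```

Overlapping windows are a common design choice because they reduce the chance that a sentence or idea is cut in half at a chunk boundary; each chunk would then be passed to an embedding model.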

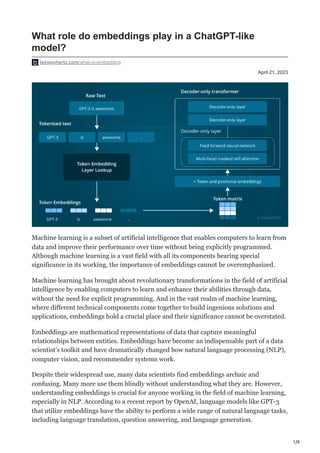

Once the embeddings have been generated, they are stored in the vector store, essentially a database that stores the embeddings as vectors, with each vector corresponding to a specific word or chunk. When we want to perform an operation on the embeddings, such as finding the words most similar to a given word or computing the vector representation of a sentence, we retrieve the appropriate vectors from the vector store. This is typically done using a key-value lookup, where the word is the key and the corresponding embedding vector is returned as the value.

One advantage of vector stores is that they enable fast and efficient vector operations, such as computing the cosine similarity between two embeddings or performing vector addition and subtraction. These operations are useful in various machine learning tasks, such as sentiment analysis, text classification, and language translation.

Importance of embeddings

Embeddings play a crucial role in the functioning of transformers, the architecture behind platforms like ChatGPT. Transformers have properties that differentiate them from other machine learning models. In addition to the fixed weights learned during training, a transformer computes attention weights, sometimes called soft weights, at runtime from the input itself, loosely similar to how a dynamically typed programming language’s REPL (read–eval–print loop) evaluates values on the fly. Embeddings in the transformer model form a layer of lower dimensionality that gives structure to much larger high-dimensional vectors, which allows for more nuanced data analysis and can improve a model’s ability to classify and interpret human language. Dimensionality, in this context, refers to the shape of the data, that is, the number of values in each vector.
In essence, embeddings are a crucial tool for improving the performance of soft weight models like transformers, making them a powerful technique for natural language processing and other machine learning applications. Here are some of the reasons why embeddings are important:

- Semantic representation: Embeddings capture the semantic meaning of the input data, allowing for easy comparison and analysis. This can improve the performance of many natural language processing tasks, such as sentiment analysis and text classification.
- Lower dimensionality: Embeddings create a lower-dimensional space to represent high-dimensional vectors, which makes it easier to work with large inputs and reduces the computational complexity of many machine learning algorithms.
- Reusability: Once an embedding is created, it can be used across multiple models and applications, making it a powerful and efficient technique for data analysis.
- Robustness: Embeddings can be trained on large datasets and capture the underlying patterns and relationships in the data, making them robust and effective for many industry applications.
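The key-value lookup and vector operations attributed to vector stores earlier in this article can be sketched as follows. This is a toy, in-memory illustration, not how any particular vector database is implemented: the class name `ToyVectorStore`, its methods, and the two-dimensional word vectors (roughly "royalty" and "gender" axes) are all assumptions made for the example.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


class ToyVectorStore:
    """Hypothetical in-memory store: word (key) -> embedding vector (value)."""

    def __init__(self):
        self._vectors = {}

    def add(self, key, vector):
        self._vectors[key] = list(vector)

    def get(self, key):
        return self._vectors[key]

    def most_similar(self, query, exclude=()):
        """Linear scan for the stored key whose vector is closest to `query`."""
        candidates = [k for k in self._vectors if k not in exclude]
        return max(candidates, key=lambda k: cosine(query, self._vectors[k]))


store = ToyVectorStore()
# Made-up 2-D embeddings: first axis ~ "royalty", second axis ~ "gender".
store.add("king", [1.0, 1.0])
store.add("queen", [1.0, -1.0])
store.add("man", [0.1, 1.0])
store.add("woman", [0.1, -1.0])
store.add("car", [-1.0, 0.0])

# Vector arithmetic for the analogy: king - man + woman
target = [k - m + w for k, m, w in zip(store.get("king"), store.get("man"), store.get("woman"))]
answer = store.most_similar(target, exclude={"king", "man", "woman"})
print(answer)
```

A linear scan like `most_similar` is fine for a handful of vectors; stores that hold millions of embeddings replace it with an approximate nearest-neighbor index.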

Types of embeddings

When it comes to producing embeddings in a deep neural network, many different strategies are available, each with its own strengths and weaknesses. The choice of strategy depends on the purpose of the embeddings and the kind of data being used. Whether it is recurrent neural networks, convolutional neural networks, or some other method, the right strategy can make all the difference in creating high-quality embeddings that accurately capture the underlying semantic meaning of the data.

Text embedding

Text embedding is a technique that converts a string of characters into a vector of real numbers. The resulting vector, and the space it lives in, are both commonly referred to as an “embedding.” Text embedding is closely related to text encoding, which converts plain text into tokens. In sentiment analysis, the text embedding block is integrated with the datasets view’s text encoding. The text embedding block can only be used after an input block that requires the selection of a text-encoded feature, and the language model chosen must match the language model selected during text encoding. Several models, such as NNLM, GloVe, ELMo, and Word2vec, are designed to learn word embeddings, which are real-valued feature vectors for each word.

Image embedding

Image embedding is a process that analyzes images and generates numerical vectors that capture the features and characteristics of each image. This is typically done using deep learning algorithms, which extract features from the image and convert them into a vector representation. This vector representation can be used for tasks such as image search, object recognition, and image classification. Various image embedding techniques are available, each designed for a specific task.
Some embedding techniques require the images to be uploaded to a remote server for processing, while others can be performed locally on the user’s computer. The SqueezeNet embedder is an example of a technique that can be run locally and is particularly useful for quick image reviews without an internet connection.

Common embedding models

Principal Component Analysis (PCA)

Principal Component Analysis (PCA) is a type of embedding model commonly used in machine learning and data analysis. PCA is a technique for reducing the dimensionality of a dataset while retaining as much of the original information as possible. It works by finding a set of linearly uncorrelated variables, known as principal components, that capture the maximum amount of variation in the data. These principal components can be considered a lower-dimensional representation of the original data and can be used as input features for a machine learning algorithm. PCA is a powerful tool for visualizing and exploring high-dimensional datasets and can be used in a wide range of applications, including image and text analysis, data compression, and feature extraction.

SVD

Singular Value Decomposition (SVD) is a mathematical technique that is widely used in many fields, including machine learning and data science. It is a matrix factorization method that decomposes a matrix into three factors: two orthogonal matrices and a diagonal matrix of singular values. Keeping only the largest singular values and folding them into the other two factors yields two smaller matrices, which reduces the number of features or dimensions in a dataset. This is known as dimensionality reduction.

SVD is commonly applied to a matrix that contains information about user ratings for different items, such as movies, books, or products. In this case, the two smaller matrices are known as embeddings: one matrix represents users, and the other represents items. These embeddings capture the relationships between users and items in the dataset. For example, if a user frequently rates horror movies highly, their embedding will be closer to the embedding for horror movies than to the embedding for romantic comedies. Similarly, if two movies have similar embeddings, they may be recommended to the same user. We can predict a user’s rating for an item by taking the dot product of the user embedding and the item embedding. The higher the dot product, the higher the predicted rating.

Word2Vec

Word2Vec is a family of shallow neural network models that help capture how words are related to each other. It does this by turning each word into a vector called an “embedding.” To create these embeddings, Word2Vec looks at which words appear near each other in sentences.
It then uses this information to guess what other words should appear in the same sentence, which is like a game of charades where you have to guess what word your friend is thinking of based on the words they use to describe it. The embeddings are the parameters Word2Vec learns while getting better at these guesses. Words that are used in similar contexts will have similar embeddings. For example, “king” and “queen” will have similar embeddings because they tend to appear alongside the same kinds of words. These embeddings can be used to solve analogies. For example, we can use the embeddings to answer the question, “What word is to ‘man’ as ‘queen’ is to ‘woman’?” The answer is “king.” Overall, Word2Vec is a useful tool for understanding how words relate to each other. Its embeddings can be used to solve analogies and help computer programs better understand human language.

BERT

BERT stands for Bidirectional Encoder Representations from Transformers. It is a language model designed to understand human language. BERT typically captures meaning better than older models like Word2Vec, which assign each word a single fixed vector, and it is trained in two stages. First, in pre-training, BERT studies a lot of text from places like Wikipedia to learn how words relate to each other. Then, in fine-tuning, it is taught to handle a specific task or type of text, like medical articles. What makes BERT special is its ability to pay attention to the context of words. For example, BERT can tell that “play” in “Let’s play a game” is different from “play” in “I’m going to see a play tonight.” BERT does this by considering the words that come both before and after each word it analyzes. Overall, BERT is good at understanding language and at predicting missing words in a sentence. This makes it a popular tool for computer programs that need to understand human language.

Various applications of embeddings

Embeddings have transcended their initial use in research and have become a crucial component of real-world machine learning applications. They are widely employed in fields including Natural Language Processing (NLP), recommender systems, and computer vision. By enabling the efficient representation of data in a low-dimensional space, embeddings have improved the performance of many machine learning models. For instance, embeddings have been used to improve search engines, power voice assistants, and enhance image recognition systems.

Recommender systems

In a recommender system, the goal is to predict user preferences and ratings for various products or entities. Two common approaches to recommender systems are collaborative filtering and content-based filtering. Collaborative filtering involves using user actions to train and generate recommendations.
Modern collaborative filtering systems often use embeddings, such as those from the SVD method described earlier, to build a relationship between users and products. By multiplying a user embedding by an item embedding, a rating prediction is generated, allowing similar items to be recommended to similar users. Embeddings can also be used in downstream models, such as YouTube’s recommender system, which uses embeddings as inputs to a neural network that predicts watch time.

Semantic search

To provide more accurate search results, BERT-based embeddings take into account the context and meaning of words, enabling search engines to understand the nuances of language. For example, when searching for “How to make pizza,” a semantic search engine using BERT would understand that the user is looking for instructions on making pizza, not just general information about pizza. This improves the relevance and accuracy of search results, leading to a better user experience.
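Returning to the collaborative filtering case described above, the rating prediction can be sketched as a dot product between a user embedding and each item embedding. The two-dimensional embeddings, the item names, and the `recommend` helper are all hypothetical; in a real system the embeddings would come from a factorization such as SVD or from a trained neural network.

```python
def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def recommend(user_embedding, item_embeddings):
    """Return item names sorted by predicted rating, highest first."""
    scores = {name: dot(user_embedding, vec) for name, vec in item_embeddings.items()}
    return sorted(scores, key=scores.get, reverse=True)


# Made-up embeddings: first axis ~ "horror", second axis ~ "romantic comedy".
horror_fan = [0.9, 0.1]
items = {
    "horror_movie": [1.0, 0.0],
    "romantic_comedy": [0.0, 1.0],
    "horror_comedy": [0.6, 0.5],
}

ranking = recommend(horror_fan, items)
print(ranking)
```

For this toy user, the horror title scores highest and the romantic comedy lowest, matching the intuition that a higher dot product means a higher predicted rating.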

Computer vision

In computer vision, embeddings are crucial in bridging the gap between different contexts. In practical applications like self-driving cars, images can be transformed into embeddings and used to make decisions based on the embedded context. This enables transfer learning, allowing models to be trained using generated images from video games instead of expensive, real-world images. Tesla is reportedly already using this technique. Another interesting example is the AI Art Machine, which can generate an image based on user input text. By transforming text and an image into embeddings in the same latent space, we can translate between the two using embeddings as an intermediate representation. With this approach, it is possible to go from text to image and vice versa using multiple transformations, such as Image -> Embedding, Text -> Embedding, Embedding -> Text, and Embedding -> Image.

How to efficiently store and retrieve embeddings in an LLM like ChatGPT?

Storing and retrieving embeddings can be a challenge, especially when dealing with large datasets. One solution is LlamaIndex (the “Llama index”), a data framework that connects LLMs to external data and relies on a vector index to store and query embeddings. A vector index is a data structure that enables fast nearest-neighbor search in high-dimensional spaces: it retrieves the stored vectors most similar to a query vector under a measure such as cosine similarity, so large datasets can be searched quickly. In natural language processing, this can be used to find the words or phrases most similar to a given query word or phrase, which is useful in applications such as recommendation systems, search engines, and text classification. Many such indexes work by dividing the high-dimensional space into smaller cells or buckets, each containing a subset of the vectors.
The cells can be arranged in a hierarchical structure, with each level of the hierarchy providing a more refined partitioning of the space. Many vector indexes, such as the FAISS indexes that LlamaIndex can use as a backing store, also employ a technique known as product quantization, which divides the high-dimensional vector into multiple low-dimensional subvectors, each of which is quantized to a finite number of values. The quantized subvectors are then used to identify the cells the vector belongs to, allowing for a fast and efficient lookup of similar vectors. One of the main benefits of such an index is fast nearest-neighbor search, which is useful in various machine learning applications; because quantization is lossy, the results are approximate, trading a small amount of accuracy for speed. In a ChatGPT-like application, for example, such an index can be used to find the stored context vectors most similar to a given input text, so that the matching text can be supplied to the model and help it generate more relevant and coherent responses.
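Product quantization, as described above, can be illustrated with a small sketch. This is a simplified toy under stated assumptions: the codebooks are hand-picked for the example, whereas real libraries learn them (typically with k-means) from the data, and the function names `pq_encode` and `pq_decode` are hypothetical.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def pq_encode(vector, codebooks):
    """Split `vector` into len(codebooks) subvectors and replace each one
    with the index of its nearest centroid in the matching codebook."""
    sub_len = len(vector) // len(codebooks)
    codes = []
    for m, codebook in enumerate(codebooks):
        sub = vector[m * sub_len:(m + 1) * sub_len]
        nearest = min(range(len(codebook)), key=lambda k: squared_distance(sub, codebook[k]))
        codes.append(nearest)
    return codes


def pq_decode(codes, codebooks):
    """Approximate reconstruction: concatenate the selected centroids."""
    reconstructed = []
    for code, codebook in zip(codes, codebooks):
        reconstructed.extend(codebook[code])
    return reconstructed


# Hand-picked codebooks (assumption): 2 subspaces, 2 centroids each.
codebooks = [
    [[0.0, 0.0], [1.0, 1.0]],  # centroids for dimensions 0-1
    [[0.0, 1.0], [1.0, 0.0]],  # centroids for dimensions 2-3
]

vector = [0.9, 1.1, 0.2, 0.8]
codes = pq_encode(vector, codebooks)
approx = pq_decode(codes, codebooks)
print(codes, approx)
```

The four floating-point values are stored as two small integers, which is where the memory saving comes from; the reconstruction is close to the original vector but not identical, which is why searches over quantized vectors are approximate.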

Another benefit of such an index is its scalability, as it can handle large datasets with millions or even billions of vectors. This makes it well-suited for applications that involve processing large amounts of text data, such as natural language understanding and sentiment analysis. Additionally, the index can reduce the embeddings’ memory footprint by storing only a subset of the vectors in memory and retrieving the rest on demand. This can significantly reduce the memory requirements of machine learning models that rely on embeddings.

Endnote

Embeddings have become crucial to various machine learning models, including recommendation algorithms, language transformers, and classification models. They are essentially dense numerical representations of words and other data that capture the meaning and context of the text, making it easier for models to interpret and analyze language. OpenAI’s embedding implementation is particularly useful for the ChatGPT model. Using embeddings, ChatGPT can capture the relationships between different words and categories rather than just analyzing each word in isolation, allowing the model to generate more coherent and contextually relevant responses to user prompts and questions.

Overall, embeddings are a powerful tool for improving the accuracy and efficiency of machine learning models, enabling them to better capture the nuances and complexities of language. As machine learning advances, we can expect embeddings to play an increasingly important role in developing new and innovative applications.