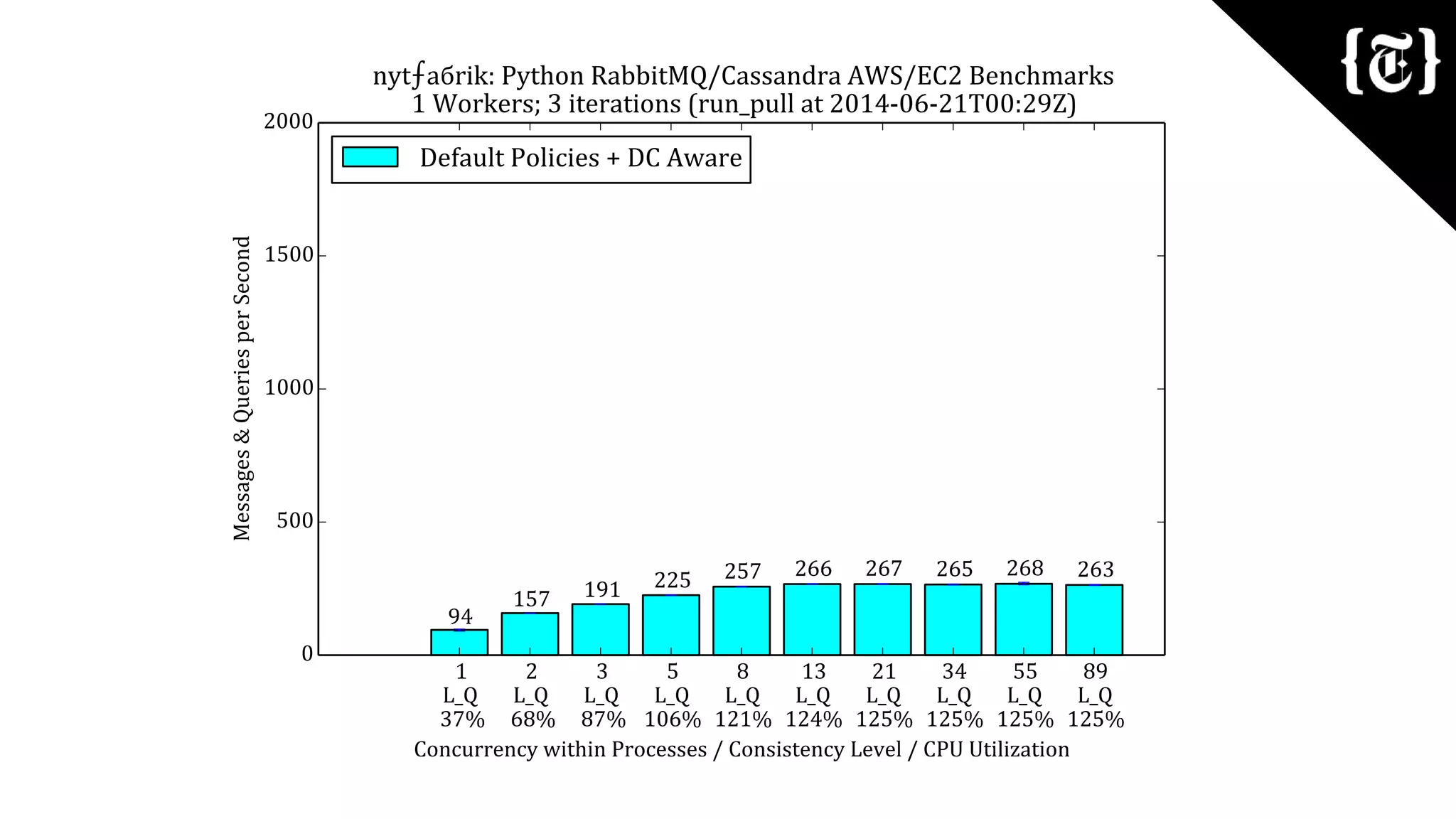

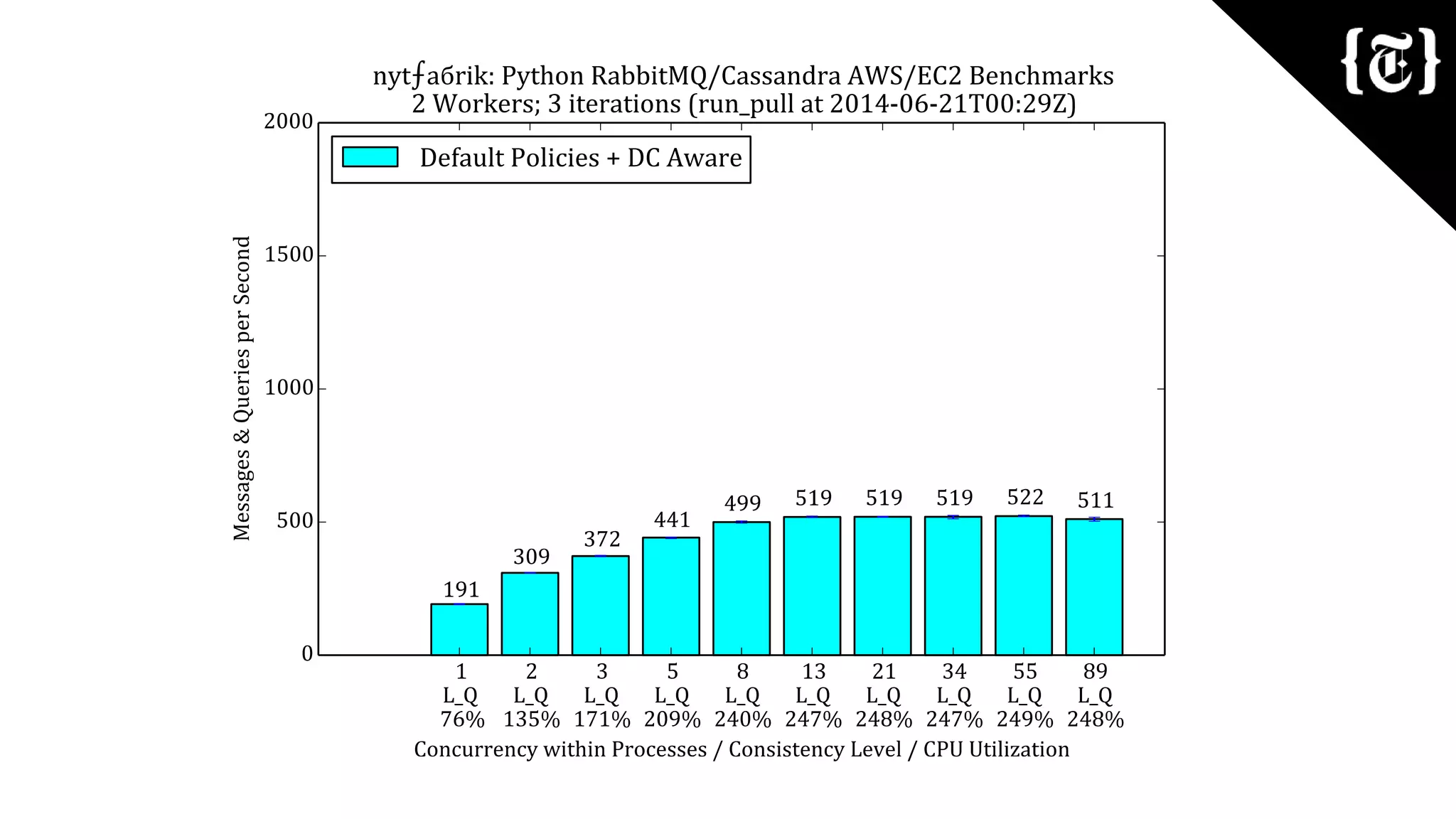

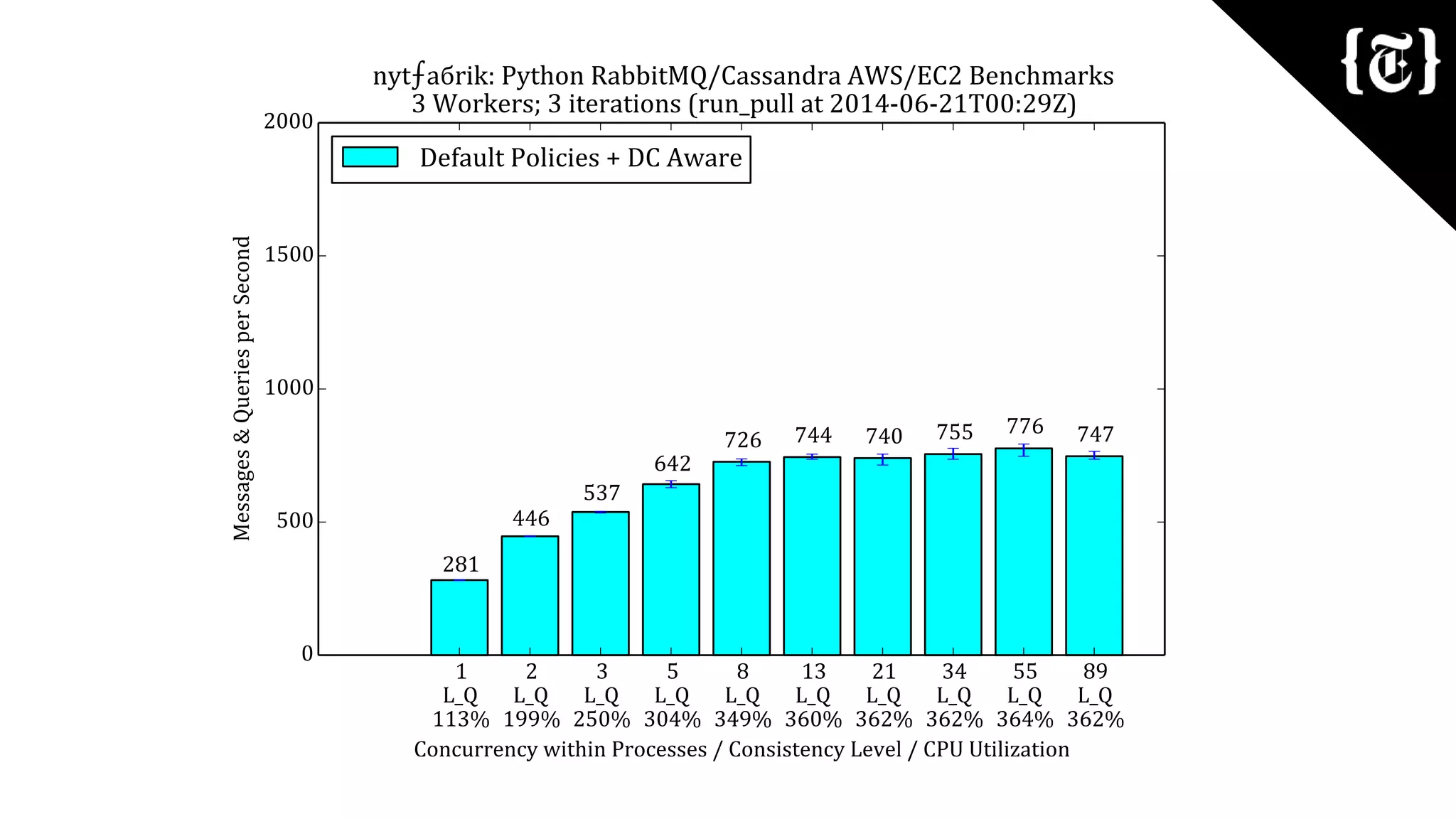

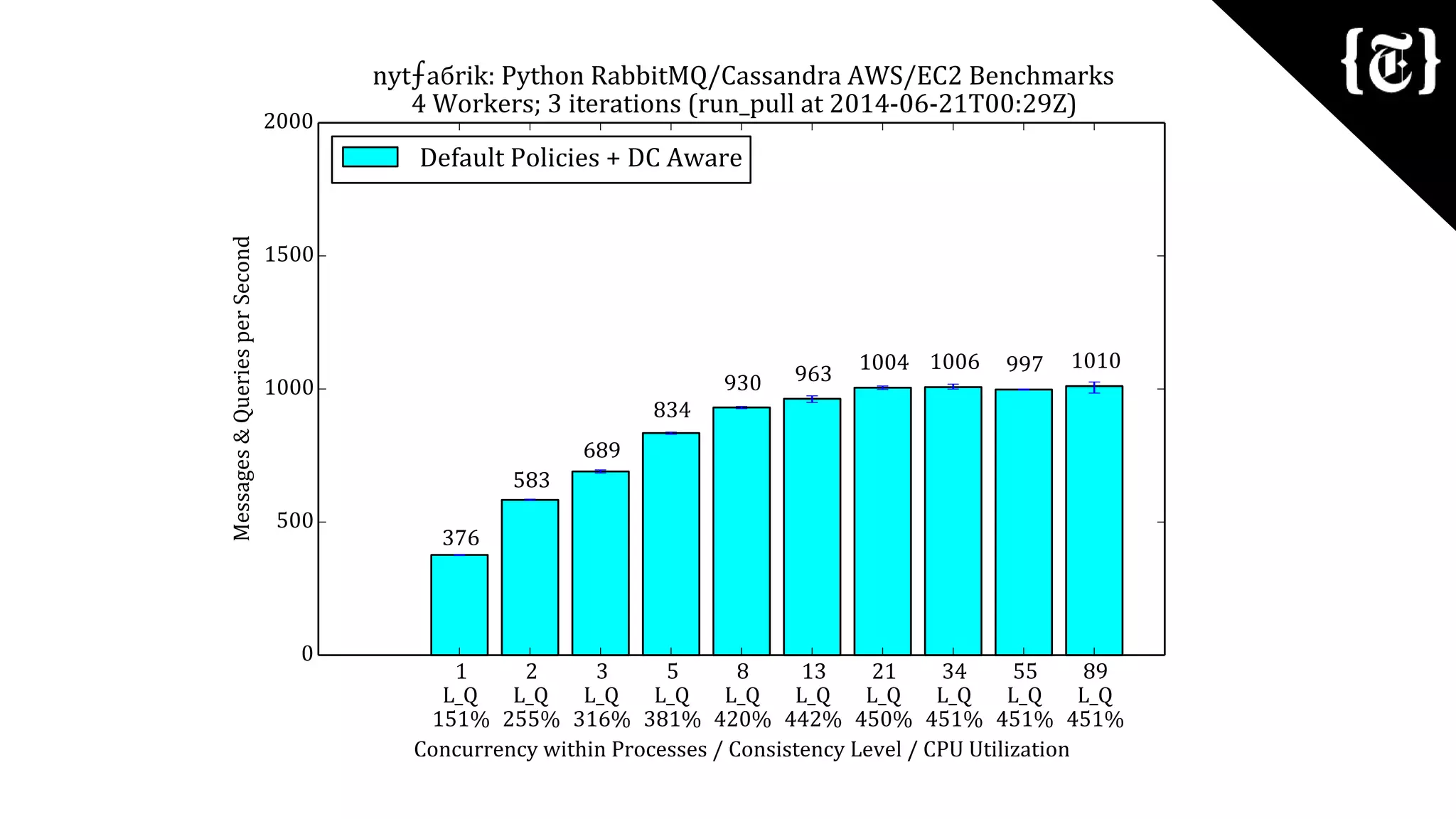

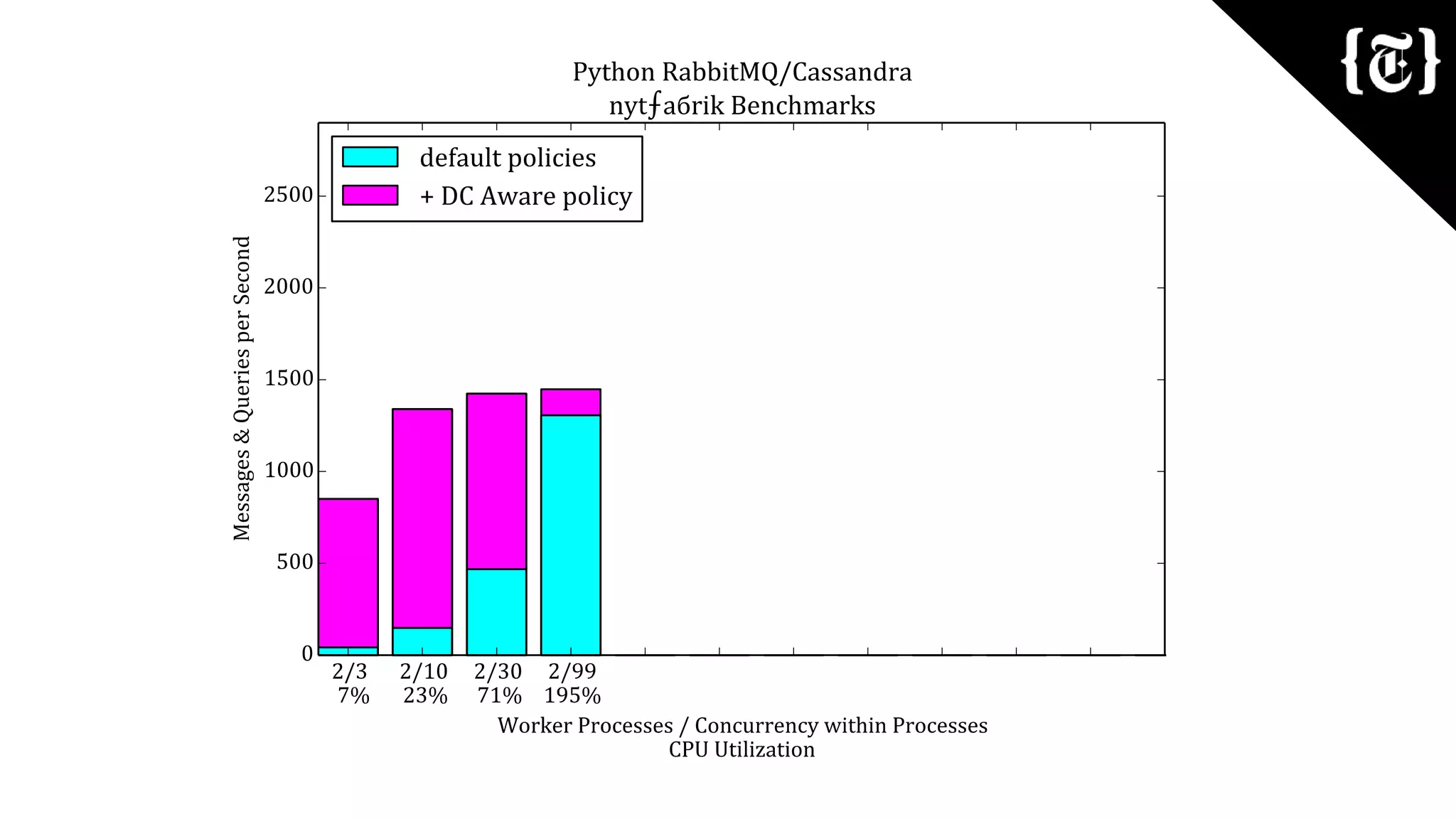

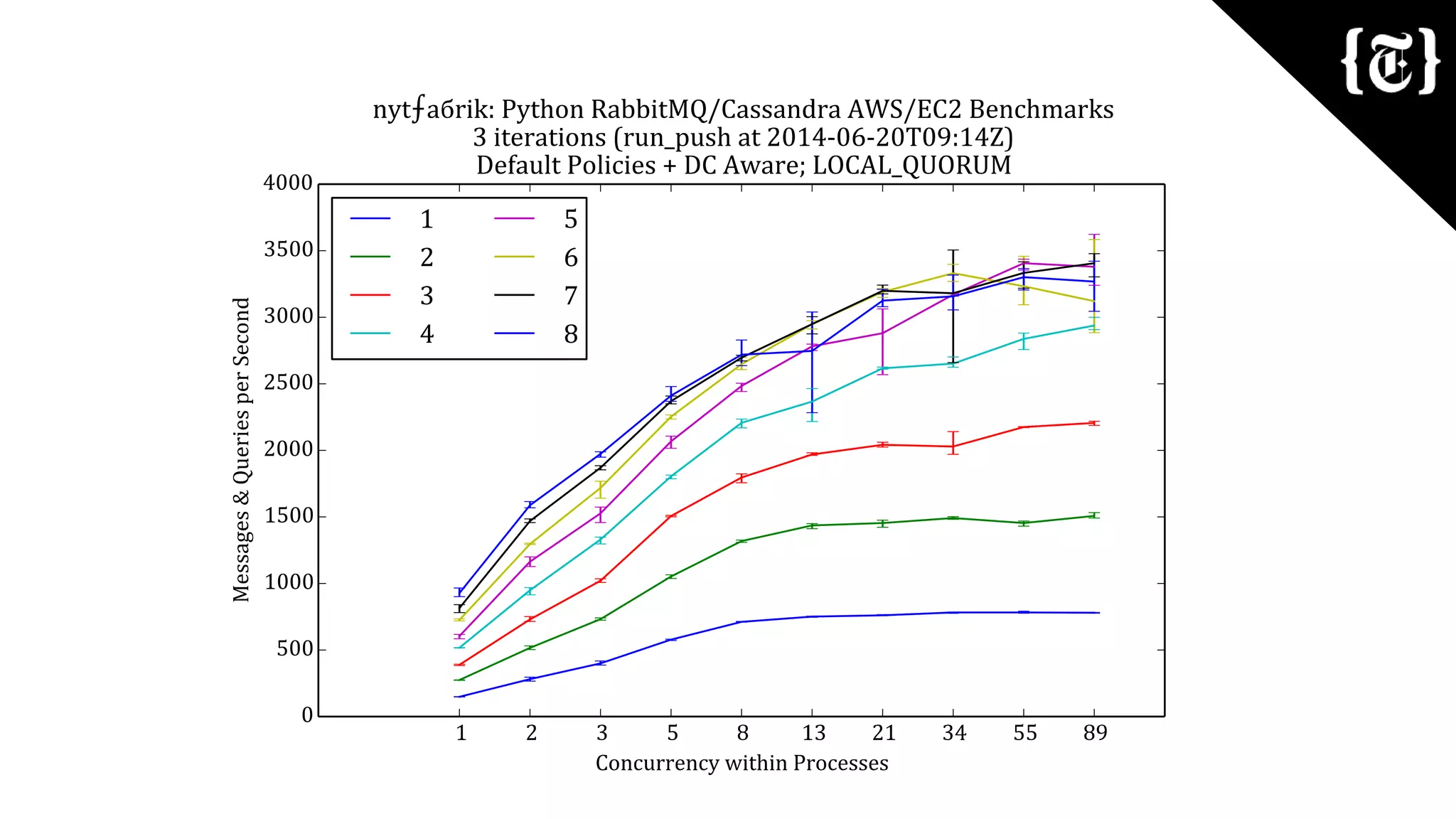

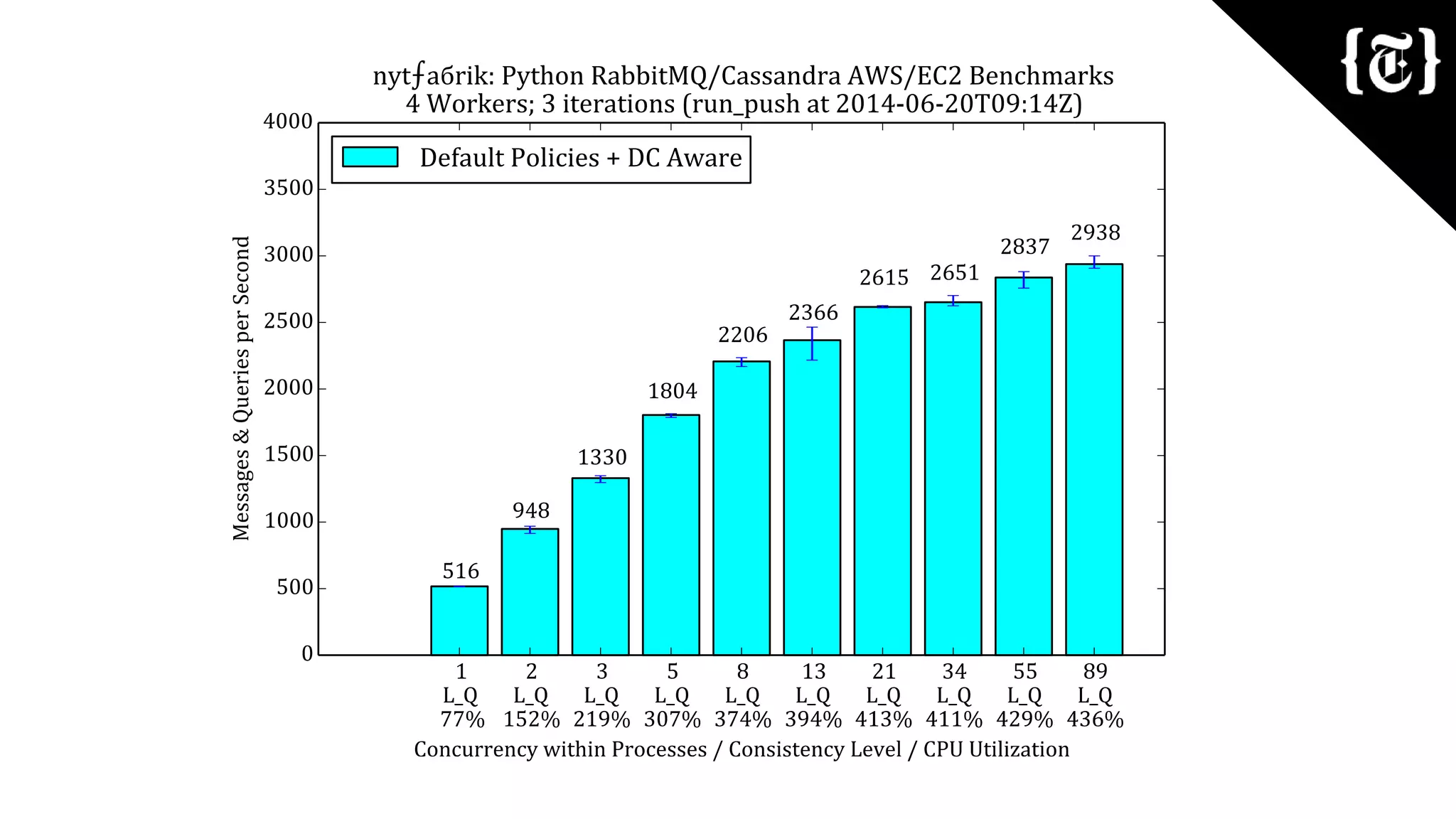

This deck describes how to benchmark the Cassandra Python driver under concurrency, using a workload that pushes messages from a RabbitMQ queue into a Cassandra table. It covers the structure of the message data, how queries are submitted asynchronously, and the options for running tests across different worker counts, prefetch counts, and consistency levels. Benchmark results are written to stdout as a JSON object.

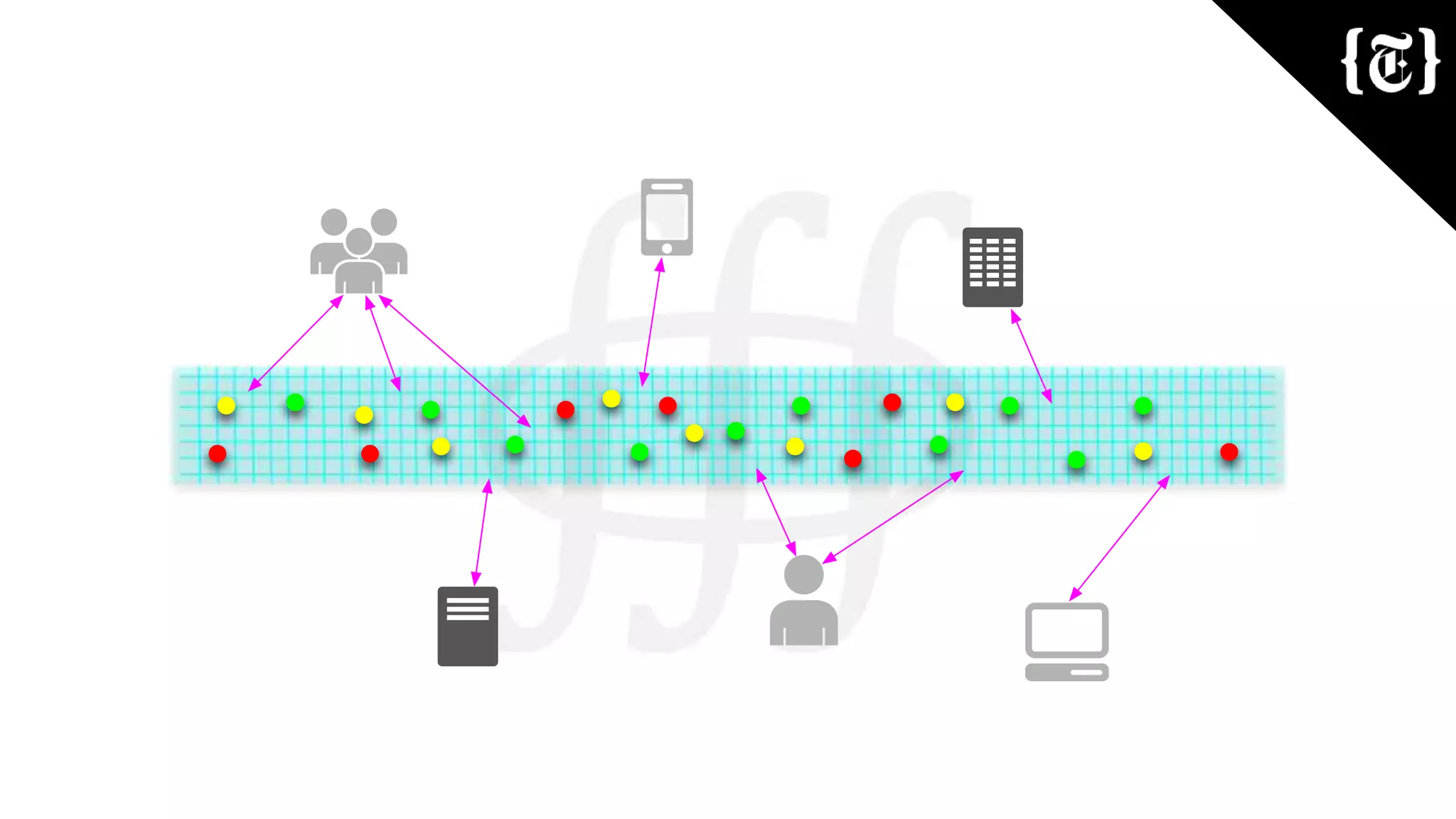

![Put it in the pipeline](https://image.slidesharecdn.com/michaellaingnytdevelopers1-140723180834-phpapp01/75/Cassandra-Day-NY-2014-Apache-Cassandra-Python-for-the-The-New-York-Times-a-rik-Platform-24-2048.jpg)

    def submit_query(self, message):
        # Remove the body so only the remaining metadata is serialized as JSON.
        body = message.pop('body')
        substitution_args = (
            json.dumps(message, **JSON_DUMPS_ARGS),
            body,
            message['hash_key'],
            uuid.UUID(message['message_id'])
        )
        # execute_async returns immediately with a future for the request.
        future = self._cql_session.execute_async(
            self._query, substitution_args
        )
        # One handler runs on success, the other on failure.
        future.add_callback(self.push_or_finish)
        future.add_errback(self.note_error)
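The slide shows only the submit side of the pipeline. The sketch below is one way the surrounding pieces might fit together, assuming `push_or_finish` submits the next queued message when a write succeeds and `note_error` records the failure and keeps going. `FakeSession` and `FakeFuture` are hypothetical stand-ins for the driver's session and response future so the example runs without a cluster; `Pusher`, `in_flight`, the `JSON_DUMPS_ARGS` value, and the query placeholder are also assumptions, not taken from the deck.

```python
import json
import uuid
from collections import deque

# Assumed value; the slide references JSON_DUMPS_ARGS but does not define it.
JSON_DUMPS_ARGS = {"sort_keys": True, "separators": (",", ":")}


class FakeFuture:
    """Hypothetical stand-in exposing add_callback/add_errback like the driver's future."""

    def __init__(self):
        self._callbacks, self._errbacks = [], []

    def add_callback(self, fn):
        self._callbacks.append(fn)

    def add_errback(self, fn):
        self._errbacks.append(fn)

    def resolve(self, result=None, error=None):
        # Fire errbacks on failure, callbacks on success.
        for fn in (self._errbacks if error is not None else self._callbacks):
            fn(error if error is not None else result)


class FakeSession:
    """Hypothetical session: queues requests and completes them in drain()."""

    def __init__(self, fail_hash_keys=()):
        self.rows = []
        self._pending = deque()
        self._fail = set(fail_hash_keys)

    def execute_async(self, query, parameters=None):
        future = FakeFuture()
        self._pending.append((future, parameters))
        return future

    def drain(self):
        # Complete queued requests; callbacks may enqueue more work.
        while self._pending:
            future, params = self._pending.popleft()
            if params[2] in self._fail:
                future.resolve(error=RuntimeError("write failed"))
            else:
                self.rows.append(params)
                future.resolve(result=None)


class Pusher:
    def __init__(self, session, messages, in_flight=2):
        self._cql_session = session
        self._query = "<prepared INSERT statement>"  # placeholder; not shown in the deck
        self._messages = deque(messages)
        self._in_flight = in_flight
        self.completed = 0
        self.errors = []

    def start(self):
        # Prime the pipeline with up to in_flight concurrent requests.
        for _ in range(min(self._in_flight, len(self._messages))):
            self.submit_query(self._messages.popleft())

    def submit_query(self, message):
        body = message.pop('body')
        substitution_args = (
            json.dumps(message, **JSON_DUMPS_ARGS),
            body,
            message['hash_key'],
            uuid.UUID(message['message_id']),
        )
        future = self._cql_session.execute_async(self._query, substitution_args)
        future.add_callback(self.push_or_finish)
        future.add_errback(self.note_error)

    def _submit_next(self):
        if self._messages:
            self.submit_query(self._messages.popleft())

    def push_or_finish(self, _result):
        # Assumed behavior: count the success, then keep the pipeline full.
        self.completed += 1
        self._submit_next()

    def note_error(self, exc):
        # Assumed behavior: record the failure and continue with the next message.
        self.errors.append(exc)
        self._submit_next()
```

To try it, build a `Pusher` over a list of message dicts (each with `body`, `hash_key`, and `message_id`), call `start()`, then `drain()` on the fake session. Each completion triggers the next submission, so at most `in_flight` requests are outstanding at any time.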

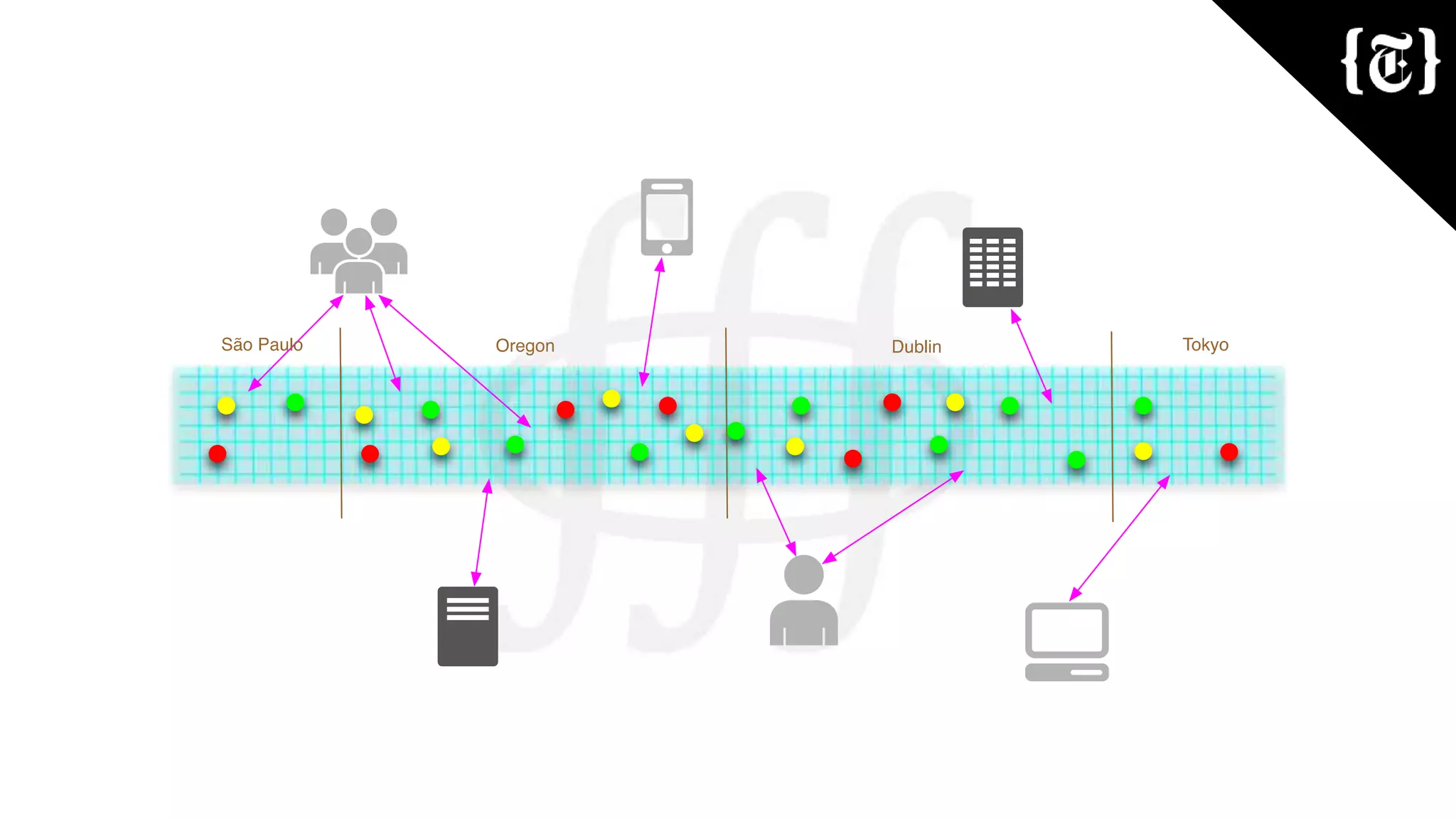

![Push some messages](https://image.slidesharecdn.com/michaellaingnytdevelopers1-140723180834-phpapp01/75/Cassandra-Day-NY-2014-Apache-Cassandra-Python-for-the-The-New-York-Times-a-rik-Platform-39-2048.jpg)

    usage: bm_push.py [-h] [-c [CQL_HOST [CQL_HOST ...]]] [-d LOCAL_DC]
                      [--remote-dc-hosts REMOTE_DC_HOSTS] [-p PREFETCH_COUNT]
                      [-w WORKER_COUNT] [-a] [-t]
                      [-n {ONE, TWO, THREE, QUORUM, ALL, LOCAL_QUORUM,
                           EACH_QUORUM, SERIAL, LOCAL_SERIAL, LOCAL_ONE}]
                      [-r] [-j]
                      [-l {CRITICAL,ERROR,WARNING,INFO,DEBUG,NOTSET}]

    Push messages from a RabbitMQ queue into a Cassandra table.
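As a rough illustration of how a command line like this could be declared, here is a minimal `argparse` sketch. It covers only the options whose meaning is evident from the usage text; the boolean flags `-a`, `-t`, `-r`, and `-j` are left out because the slide does not say what they do. The `dest` names and the integer types for the counts are assumptions.

```python
import argparse

CONSISTENCY_LEVELS = [
    "ONE", "TWO", "THREE", "QUORUM", "ALL", "LOCAL_QUORUM",
    "EACH_QUORUM", "SERIAL", "LOCAL_SERIAL", "LOCAL_ONE",
]
LOG_LEVELS = ["CRITICAL", "ERROR", "WARNING", "INFO", "DEBUG", "NOTSET"]


def build_parser():
    parser = argparse.ArgumentParser(
        prog="bm_push.py",
        description="Push messages from a RabbitMQ queue into a Cassandra table.",
    )
    # Zero or more contact points, matching "[-c [CQL_HOST [CQL_HOST ...]]]".
    parser.add_argument("-c", dest="cql_host", metavar="CQL_HOST", nargs="*")
    parser.add_argument("-d", dest="local_dc", metavar="LOCAL_DC")
    parser.add_argument("--remote-dc-hosts", metavar="REMOTE_DC_HOSTS")
    # Integer types are assumed; the usage text does not state them.
    parser.add_argument("-p", dest="prefetch_count", metavar="PREFETCH_COUNT", type=int)
    parser.add_argument("-w", dest="worker_count", metavar="WORKER_COUNT", type=int)
    parser.add_argument("-n", dest="consistency_level", choices=CONSISTENCY_LEVELS)
    parser.add_argument("-l", dest="log_level", choices=LOG_LEVELS)
    return parser
```

For example, `build_parser().parse_args(["-c", "10.0.0.1", "10.0.0.2", "-w", "4", "-n", "LOCAL_QUORUM"])` yields a namespace with a two-element host list, a worker count of 4, and the chosen consistency level as a string.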

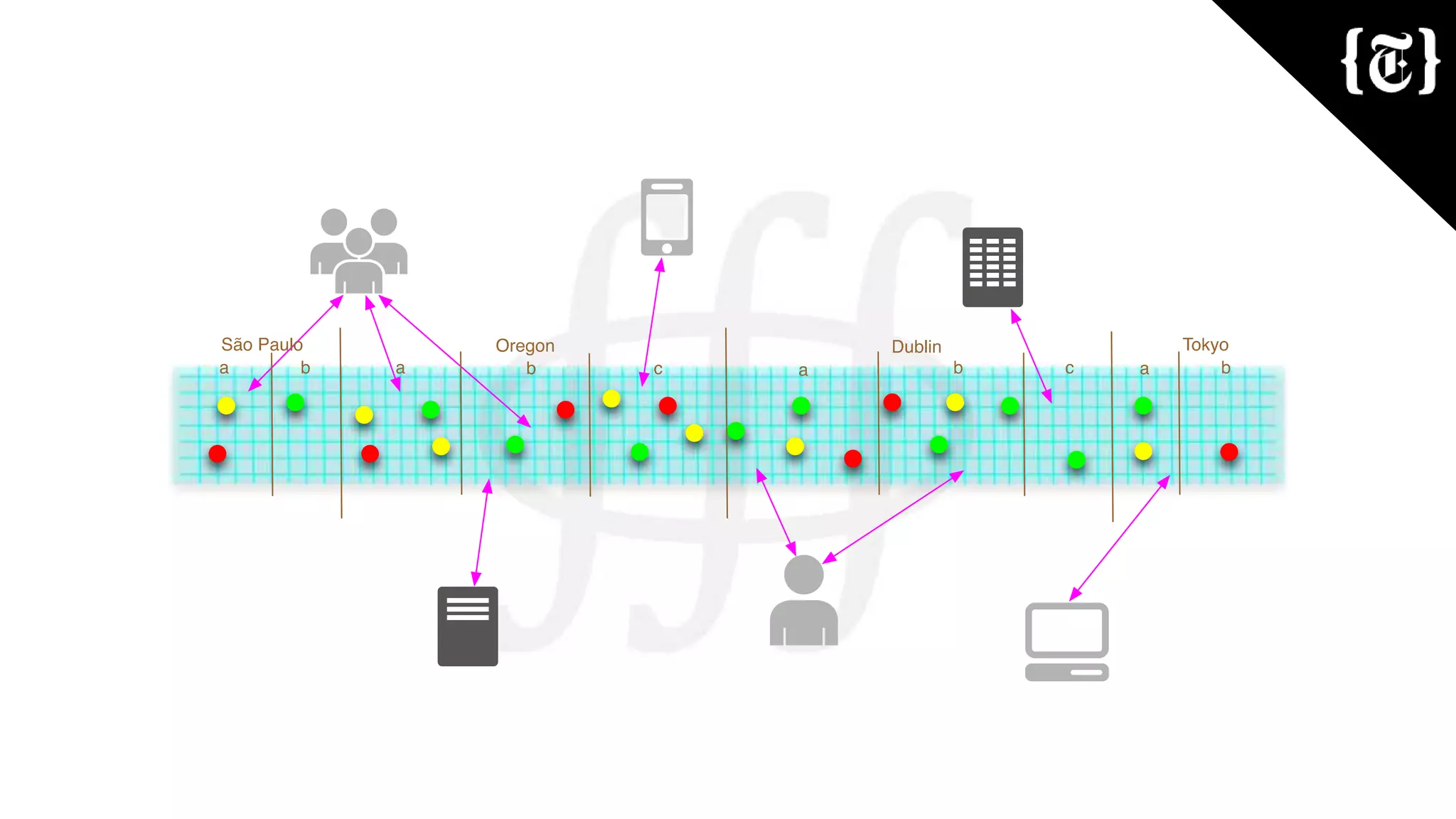

![Push messages many times](https://image.slidesharecdn.com/michaellaingnytdevelopers1-140723180834-phpapp01/75/Cassandra-Day-NY-2014-Apache-Cassandra-Python-for-the-The-New-York-Times-a-rik-Platform-40-2048.jpg)

    usage: run_push.py [-h] [-c [CQL_HOST [CQL_HOST ...]]] [-i ITERATIONS]
                       [-d LOCAL_DC] [-w [worker_count [worker_count ...]]]
                       [-p [prefetch_count [prefetch_count ...]]]
                       [-n [level [level ...]]] [-a] [-t] [-m MESSAGE_EXPONENT]
                       [-b BODY_EXPONENT]
                       [-l {CRITICAL,ERROR,WARNING,INFO,DEBUG,NOTSET}]

    Run multiple test cases based upon the product of worker_counts,
    prefetch_counts, and consistency_levels. Each test case may be run with up to
    4 variations reflecting the use or not of the dc_aware and token_aware
    policies. The results are output to stdout as a JSON object.
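The test matrix described in the help text can be sketched with `itertools.product`. This is an illustration under assumptions, not the script's actual code: the function names, the dictionary keys, and the idea that each policy is either varied over off/on or held off are all hypothetical. No timing results are included because the deck does not show the output schema.

```python
import itertools
import json


def policy_variations(vary_dc_aware, vary_token_aware):
    """Return (dc_aware, token_aware) pairs: 1, 2, or 4 combinations."""
    dc_options = (False, True) if vary_dc_aware else (False,)
    token_options = (False, True) if vary_token_aware else (False,)
    return list(itertools.product(dc_options, token_options))


def build_test_cases(worker_counts, prefetch_counts, consistency_levels,
                     vary_dc_aware=True, vary_token_aware=True):
    """Cross every worker/prefetch/consistency combination with the policy variations."""
    cases = []
    for workers, prefetch, level in itertools.product(
            worker_counts, prefetch_counts, consistency_levels):
        for dc_aware, token_aware in policy_variations(vary_dc_aware, vary_token_aware):
            cases.append({
                "worker_count": workers,
                "prefetch_count": prefetch,
                "consistency_level": level,
                "dc_aware": dc_aware,
                "token_aware": token_aware,
            })
    return cases


if __name__ == "__main__":
    cases = build_test_cases([1, 2], [10, 100], ["ONE", "LOCAL_QUORUM"])
    # Emit the matrix to stdout as a single JSON object (shape is hypothetical).
    print(json.dumps({"test_cases": cases}, indent=2))
```

With two worker counts, two prefetch counts, two consistency levels, and both policies varied, this produces 2 × 2 × 2 × 4 = 32 test cases, which shows how quickly the matrix grows as options are added.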