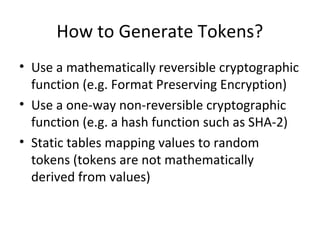

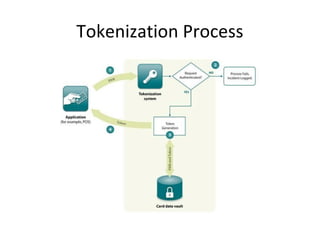

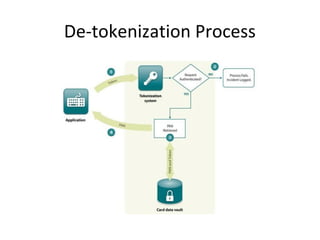

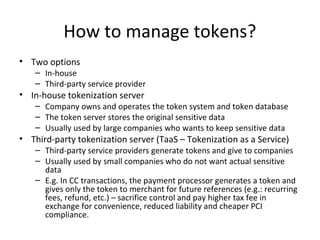

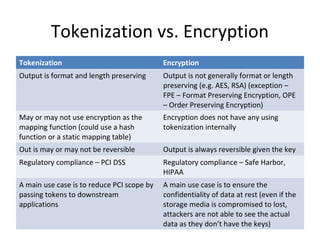

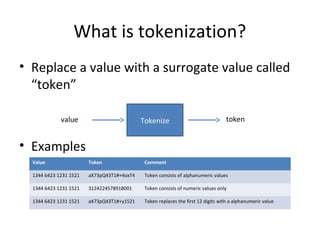

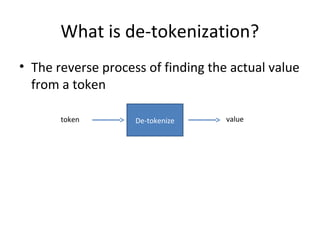

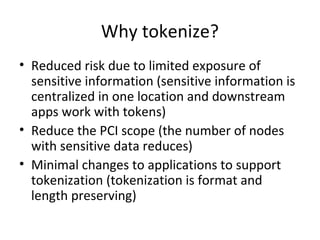

Tokenization replaces sensitive data with a surrogate value, or token, that preserves selected characteristics of the original (such as its format) without exposing the data itself. It reduces the risk of data exposure, narrows the scope of PCI compliance, and requires only minimal application changes. The document also covers the distinction between single-use and multi-use tokens, and compares tokenization with encryption in terms of format preservation and reversibility.
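To make the surrogate-value idea concrete, here is a minimal sketch of a vault-style tokenizer. Everything in it is an assumption for illustration, not something taken from the slides: the `TokenVault` class, the in-memory dictionaries, the choice to keep the last four digits, and the reading of "multi-use" as "the same input always maps to the same token" versus "single-use" as "a fresh token on every call".

```python
import secrets


class TokenVault:
    """Toy in-memory token vault (hypothetical, for illustration only)."""

    def __init__(self):
        self._token_to_value = {}  # token -> original value
        self._value_to_token = {}  # original value -> its multi-use token

    def _new_token(self, value: str) -> str:
        # Assumption: preserve the length and the last four digits,
        # and replace the leading digits with random ones.
        head_len = len(value) - 4
        while True:
            head = "".join(secrets.choice("0123456789") for _ in range(head_len))
            token = head + value[-4:]
            # Retry on the rare collision with the input or an issued token.
            if token != value and token not in self._token_to_value:
                return token

    def tokenize(self, value: str, multi_use: bool = True) -> str:
        if not (value.isdigit() and len(value) > 4):
            raise ValueError("expected a digit string longer than four characters")
        # Multi-use: reuse the token already issued for this value.
        if multi_use and value in self._value_to_token:
            return self._value_to_token[value]
        token = self._new_token(value)
        self._token_to_value[token] = value
        if multi_use:
            self._value_to_token[value] = token
        return token

    def detokenize(self, token: str) -> str:
        # Reversal is a lookup in the vault, not a computation on the token.
        return self._token_to_value[token]


vault = TokenVault()
pan = "4111111111111111"  # well-known test card number
multi = vault.tokenize(pan)
single = vault.tokenize(pan, multi_use=False)
print(multi, single, vault.detokenize(single) == pan)
```

The token here is random, so it can only be reversed by whoever holds the vault mapping. That is the contrast with encryption this sketch is meant to suggest: a ciphertext can be reversed by anyone who holds the key.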

![An Example – Tokenizing CC#s](https://image.slidesharecdn.com/tokenization-140828153043-phpapp02/85/Introduction-to-Tokenization-6-320.jpg)

An Example – Tokenizing CC#s. Components shown: Point of Sale Payment App, Tokenization System, Customer Data Warehouse, Order Processing App, and CRM App, with labels for the Internet and the merchant data center. Labeled steps: (1) Payment, CC; (2) Tokenize CC; (3) Tokenized CC; (4) Tokenized CC; (5) Tokenized CC.
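The flow in the diagram above can be sketched roughly as follows. The function names, the dictionary standing in for the tokenization system's storage, and the assumption that steps (4) and (5) fan the tokenized number out to every downstream system are all hypothetical; the slide text does not say exactly which arrow connects which components.

```python
import secrets


def tokenize_cc(cc: str, vault: dict) -> str:
    """Steps (2)-(3): the tokenization system returns a random same-length token."""
    while True:
        token = "".join(secrets.choice("0123456789") for _ in cc)
        if token != cc and token not in vault:
            vault[token] = cc  # only the tokenization system keeps the real number
            return token


def handle_payment(cc: str, vault: dict, downstream: dict) -> str:
    """Step (1): the point-of-sale app receives the payment and card number."""
    token = tokenize_cc(cc, vault)
    # Steps (4)-(5), as assumed here: downstream systems only see the token.
    for records in downstream.values():
        records.append(token)
    return token


vault = {}
downstream = {"customer_data_warehouse": [], "order_processing": [], "crm": []}
token = handle_payment("4111111111111111", vault, downstream)
print(token, downstream)
```

Under this reading, the real card number lives in one place only, so the warehouse, order processing, and CRM systems never store it.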