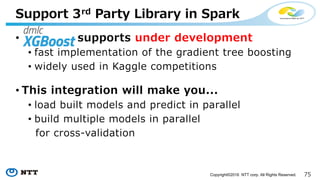

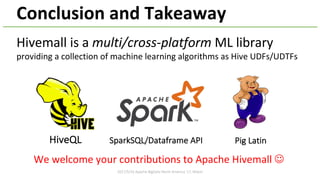

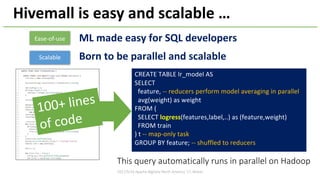

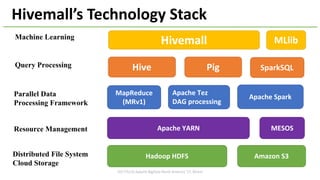

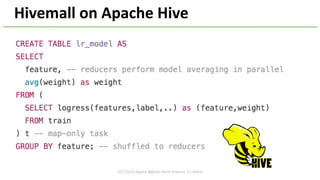

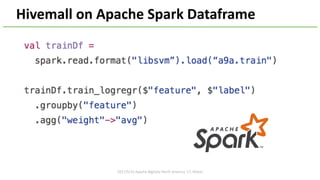

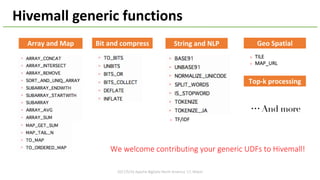

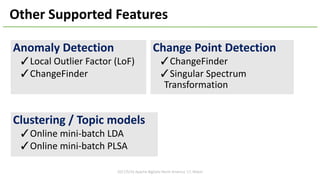

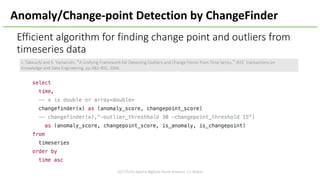

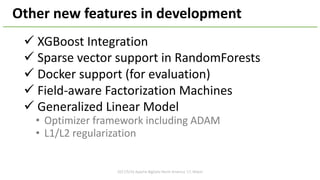

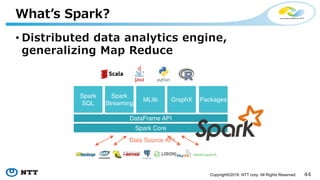

This document summarizes a presentation on Apache Hivemall, a scalable machine learning library for Apache Hive, Spark, and Pig. Hivemall provides easy-to-use machine learning functions that run in parallel on large datasets, and it supports a range of classification, regression, recommendation, and clustering algorithms. The presentation outlines Hivemall's capabilities, how it works with big data platforms such as Hive and Spark, and its ongoing development, including new features such as XGBoost integration and generalized linear models.

![67Copyright©2016 NTT corp. All Rights Reserved.

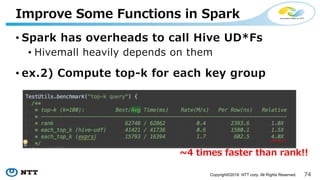

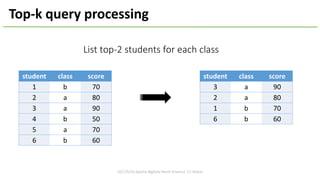

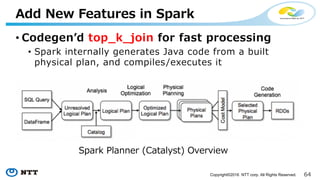

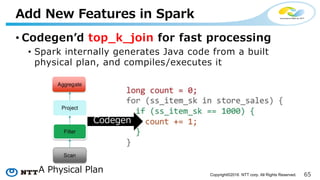

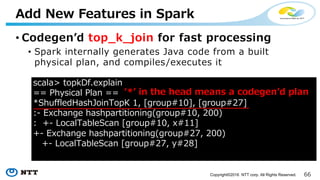

• Codegenʼd top_k_join for fast processing

• Spark internally generates Java code from a built

physical plan, and compiles/executes it

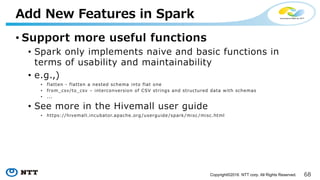

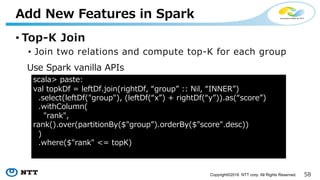

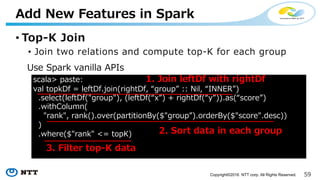

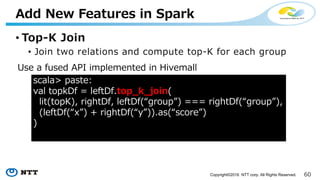

Add New Features in Spark

scala> topkDf.explain

== Physical Plan ==

*ShuffledHashJoinTopK 1, [group#10], [group#27]

:- Exchange hashpartitioning(group#10, 200)

: +- LocalTableScan [group#10, x#11]

+- Exchange hashpartitioning(group#27, 200)

+- LocalTableScan [group#27, y#28]

ʻ*ʼ in the head means a codegenʼd plan](https://image.slidesharecdn.com/apachebigdata2017-170520184648/85/Apache-Hivemall-Apache-BigData-17-Miami-67-320.jpg)
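To make the idea behind a top-k join more concrete, the following is a minimal plain-Scala sketch, not Hivemall's or Spark's actual implementation. It assumes that the operator keeps, for each left-hand row, the k highest-scoring matches among right-hand rows with the same join key. The names `LeftRow`, `RightRow`, `TopKJoinSketch`, and the `score` function are hypothetical and chosen only to mirror the `group`, `x`, and `y` columns in the plan above.

```scala
// Hypothetical row types mirroring the columns shown in the physical plan.
final case class LeftRow(group: Int, x: Double)
final case class RightRow(group: Int, y: Double)

object TopKJoinSketch {

  // For each left row, pair it with the right rows sharing its group and
  // keep only the k pairs with the highest score (an assumed semantics).
  def topKJoin(
      k: Int,
      left: Seq[LeftRow],
      right: Seq[RightRow],
      score: (LeftRow, RightRow) => Double
  ): Seq[(LeftRow, RightRow, Double)] = {
    // Build side: index the right rows by join key, as a hash join would.
    val rightByGroup: Map[Int, Seq[RightRow]] = right.groupBy(_.group)

    // Probe side: look up each left row's matches, score them, keep the top k.
    left.flatMap { l =>
      rightByGroup
        .getOrElse(l.group, Seq.empty)
        .map(r => (l, r, score(l, r)))
        .sortBy(t => -t._3) // highest score first
        .take(k)
    }
  }

  def main(args: Array[String]): Unit = {
    val left = Seq(LeftRow(1, 0.5), LeftRow(2, 0.1))
    val right = Seq(RightRow(1, 0.2), RightRow(1, 0.9), RightRow(2, 0.4))
    // k = 1, matching the "1" argument in the plan; the score is illustrative.
    topKJoin(1, left, right, (l, r) => l.x + r.y).foreach(println)
  }
}
```

The sketch sorts every group of matches for clarity; a real operator would more likely keep a bounded heap of size k per row so that it never materializes all joined pairs, which is presumably where a fused, codegen'd join-plus-top-k pays off.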