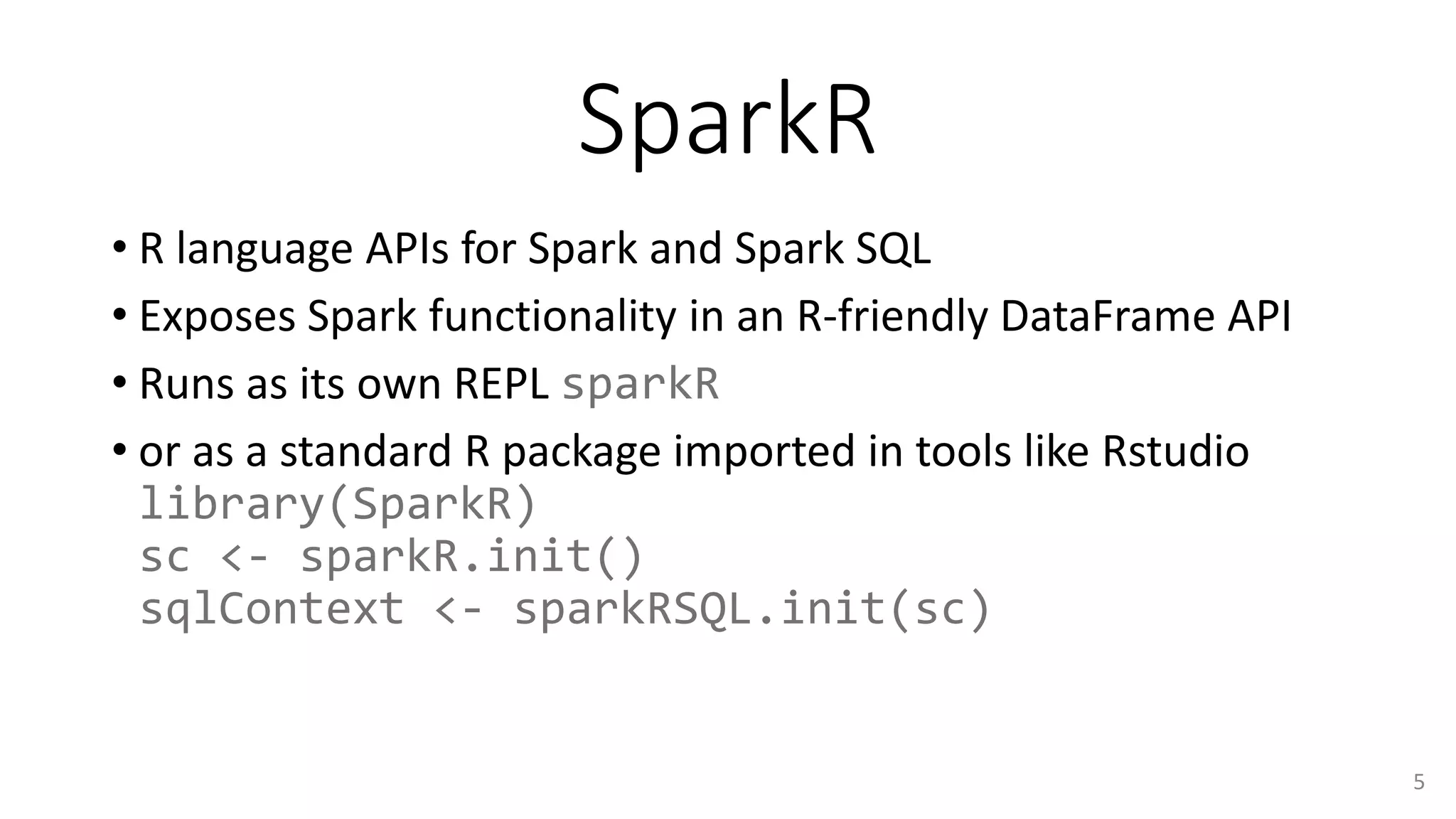

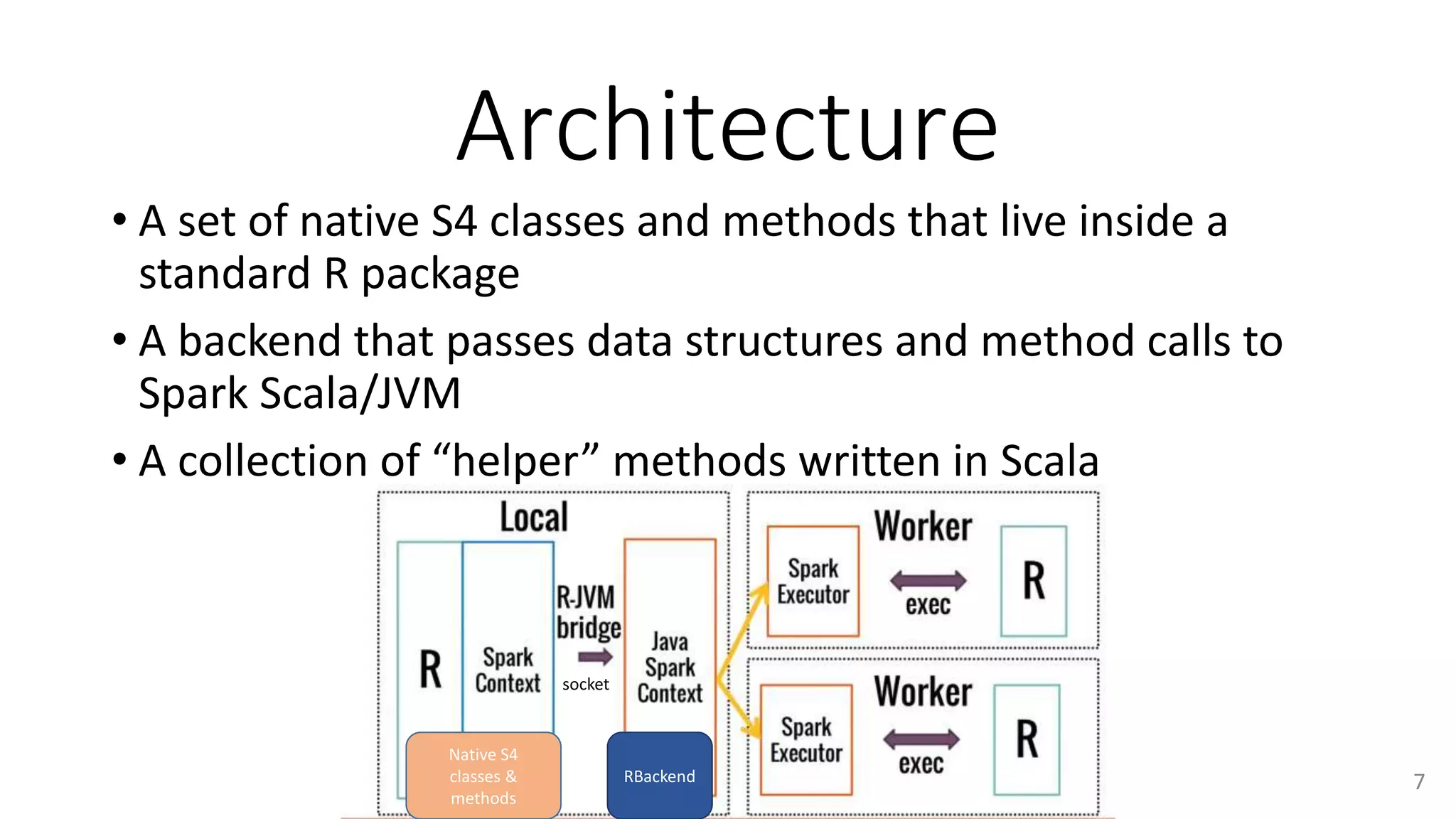

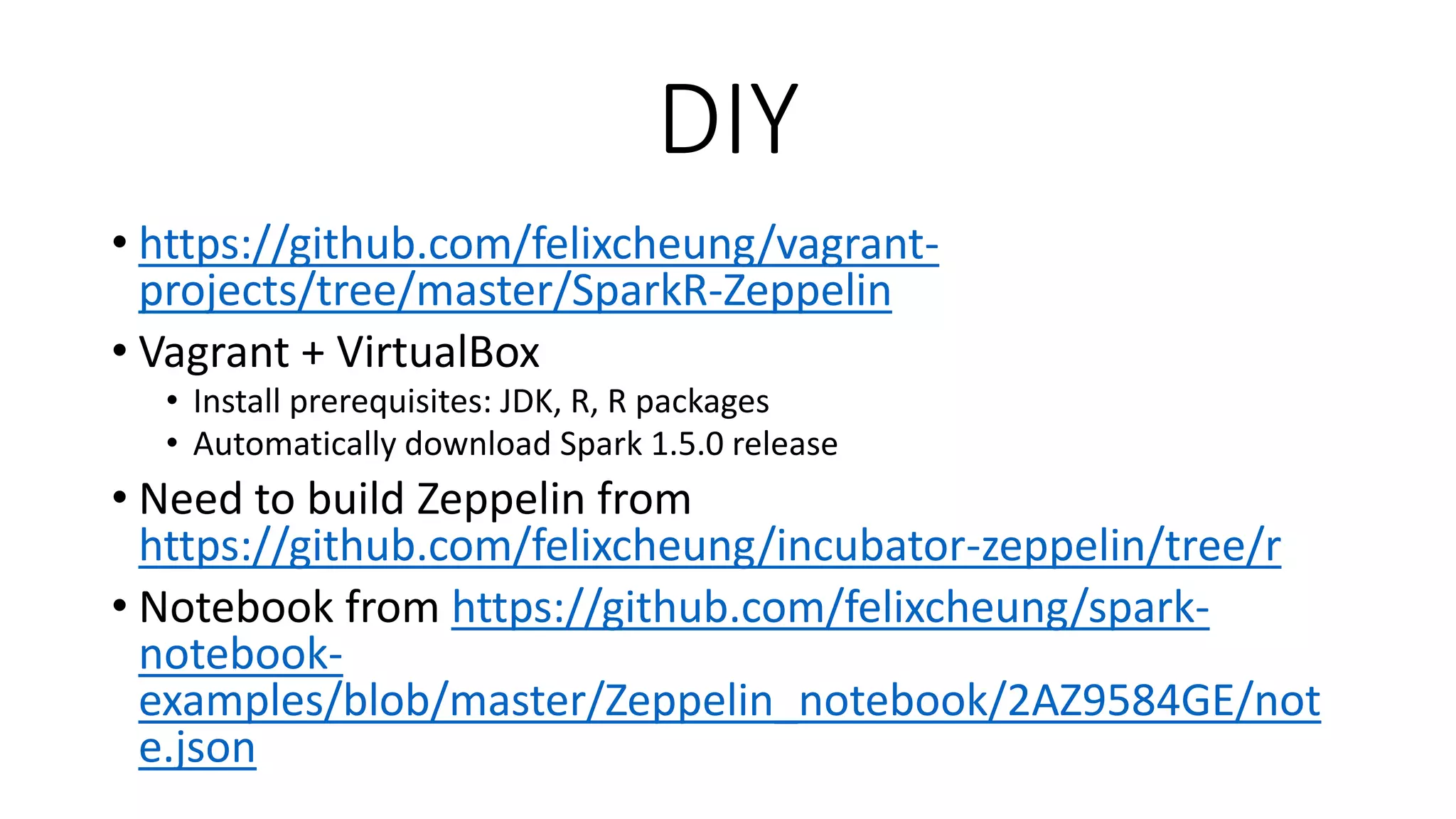

This document summarizes a presentation on using SparkR with Zeppelin. SparkR is an R language API for Spark that exposes Spark functionality through an R-friendly DataFrame API. SparkR DataFrames can be used in Zeppelin notebooks, which bridge native R code and Spark operations. The presentation demonstrates running SparkR code and DataFrame transformations in Zeppelin notebooks.

![SparkR in Spark 1.5.0
Get this today:
• R formula
• Machine learning like GLM
model <- glm(Sepal_Length ~ Sepal_Width + Species, data = df, family = "gaussian")
• More R-like
df[df$age %in% c(19, 30), 1:2]
transform(df, newCol = df$col1 / 5, newCol2 = df$col1 * 2)](https://image.slidesharecdn.com/sparkrzeppelin-150910222105-lva1-app6892/75/SparkR-Zeppelin-18-2048.jpg)
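The GLM call on this slide can be placed in context with a minimal sketch against the SparkR 1.5.x API. It is an illustration under stated assumptions, not the presenter's code: it assumes a local Spark 1.5 install reachable through `SPARK_HOME`, and the `master` and `appName` values are placeholders. In a Zeppelin notebook the Spark and SQL contexts are typically provided by the interpreter, so the two init calls would likely be unnecessary there.

```r
# Sketch for the SparkR 1.5.x API; assumes SPARK_HOME points at a Spark 1.5 install.
library(SparkR, lib.loc = file.path(Sys.getenv("SPARK_HOME"), "R", "lib"))

# Placeholder master and app name; adjust for a real cluster.
sc <- sparkR.init(master = "local[*]", appName = "sparkr-glm-sketch")
sqlContext <- sparkRSQL.init(sc)

# Convert the local iris data.frame to a SparkR DataFrame.
# SparkR replaces "." in column names with "_", hence Sepal_Length below.
df <- createDataFrame(sqlContext, iris)

# Fit a Gaussian GLM using the R formula interface shown on the slide.
model <- glm(Sepal_Length ~ Sepal_Width + Species, data = df, family = "gaussian")
summary(model)

# Score the training data; the result is a DataFrame with a "prediction" column.
predictions <- predict(model, newData = df)
head(select(predictions, "Sepal_Length", "prediction"))
```

Calling `sparkR.stop()` afterwards releases the local Spark context when the session is no longer needed.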

![subset
# Columns can be selected using `[[` and `[`
df[[2]] == df[["age"]]
df[,2] == df[,"age"]
df[,c("name", "age")]
# Or to filter rows
df[df$age > 20,]
# DataFrame can be subset on both rows and Columns
df[df$name == "Smith", c(1,2)]
df[df$age %in% c(19, 30), 1:2]
subset(df, df$age %in% c(19, 30), 1:2)
subset(df, df$age %in% c(19), select = c(1,2))](https://image.slidesharecdn.com/sparkrzeppelin-150910222105-lva1-app6892/75/SparkR-Zeppelin-22-2048.jpg)
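For comparison, the expressions on this slide follow base R's `data.frame` indexing. The sketch below runs the same patterns on a local `data.frame`, with no Spark required, to show the semantics that SparkR mirrors. The sample rows and the name `local_df` are invented for illustration. On a SparkR DataFrame the operations are evaluated by Spark rather than in local memory, and an expression like `df[[2]] == df[["age"]]` would be expected to produce a Column expression rather than a logical vector.

```r
# Hypothetical sample data; "age" is the second column, as on the slide.
local_df <- data.frame(
  name = c("Smith", "Jones", "Lee", "Chen"),
  age = c(19, 25, 30, 42),
  city = c("Austin", "Boston", "Seattle", "Denver"),
  stringsAsFactors = FALSE
)

# Select one column by position or by name; both return the same vector.
by_position <- local_df[[2]]
by_name <- local_df[["age"]]

# Select several columns by name.
name_and_age <- local_df[, c("name", "age")]

# Filter rows with a logical condition.
older <- local_df[local_df$age > 20, ]

# Subset rows and columns together, with `[` or with subset().
picked <- local_df[local_df$age %in% c(19, 30), 1:2]
same_with_subset <- subset(local_df, age %in% c(19, 30), select = c(1, 2))
```

With a SparkR 1.5 session available, `createDataFrame(sqlContext, local_df)` would give a distributed DataFrame on which to try the slide's versions of these calls.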