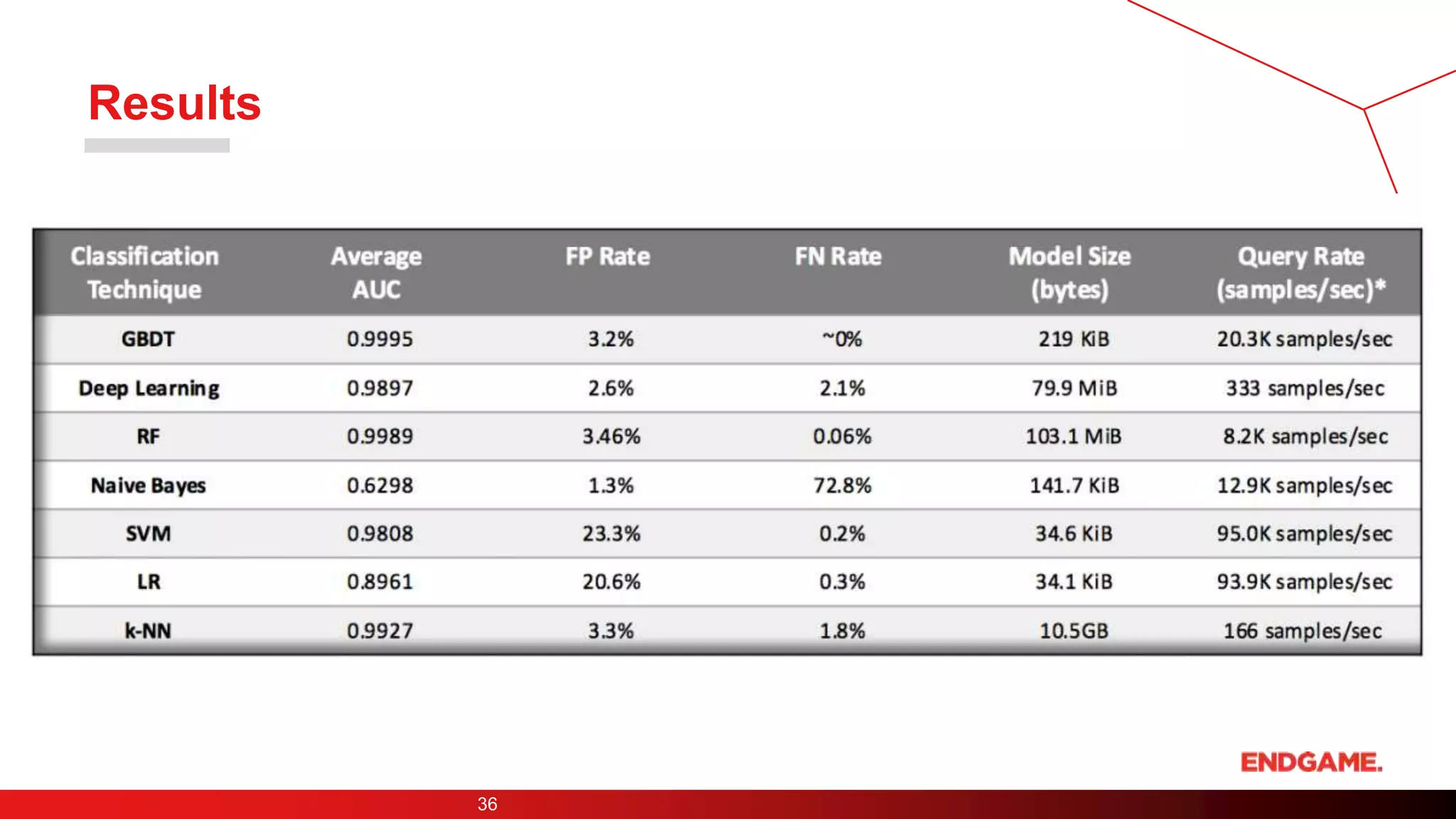

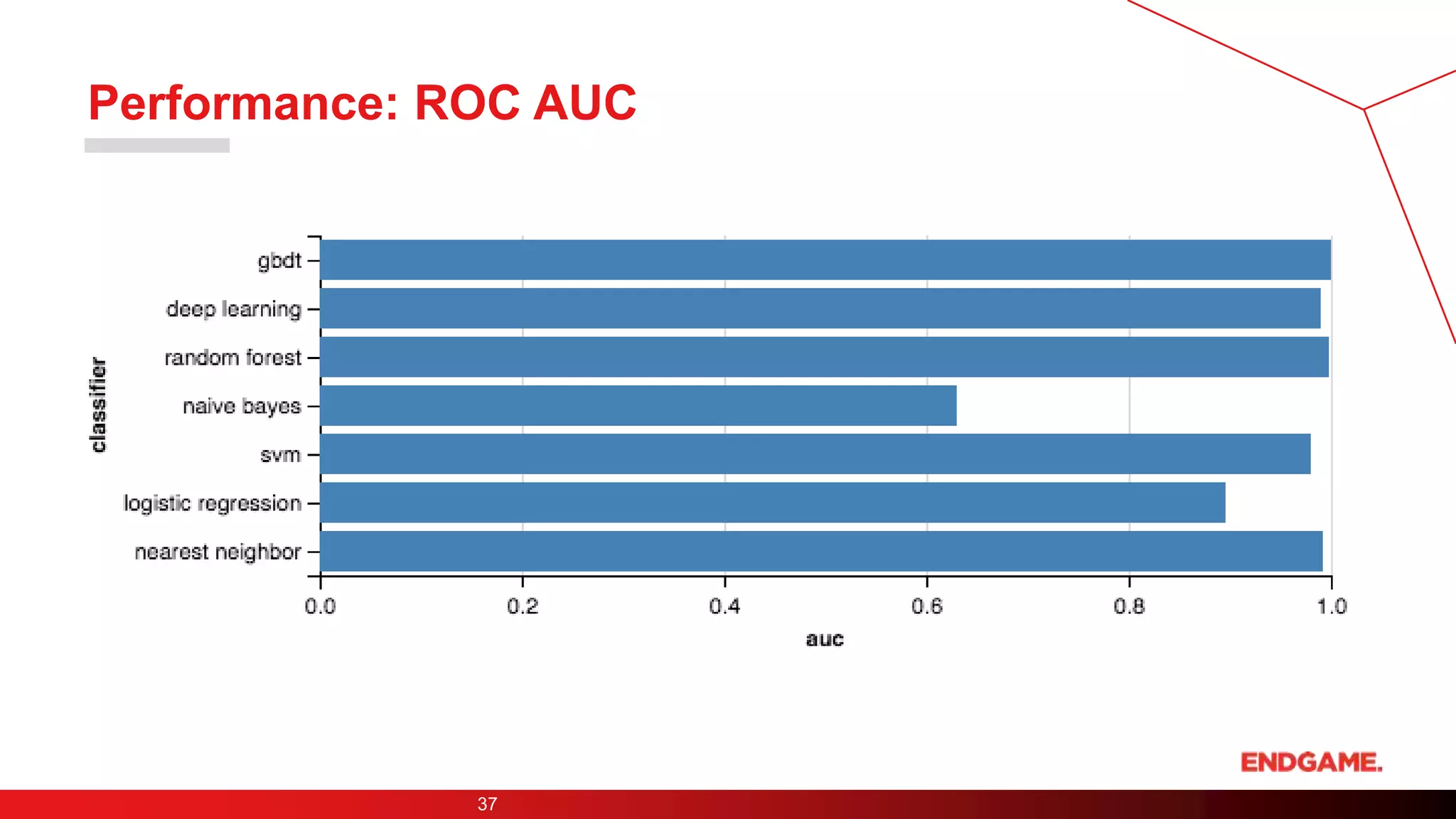

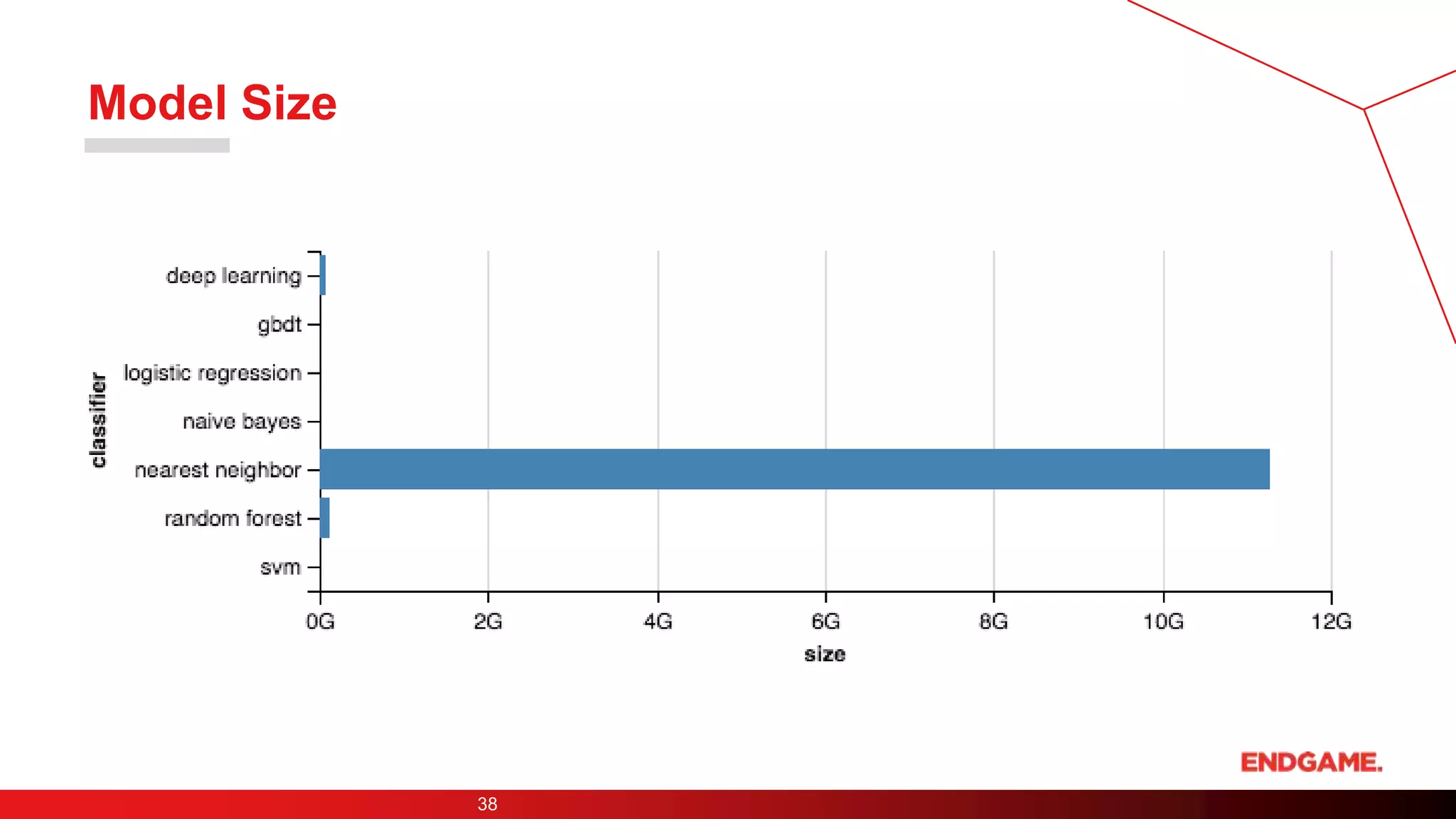

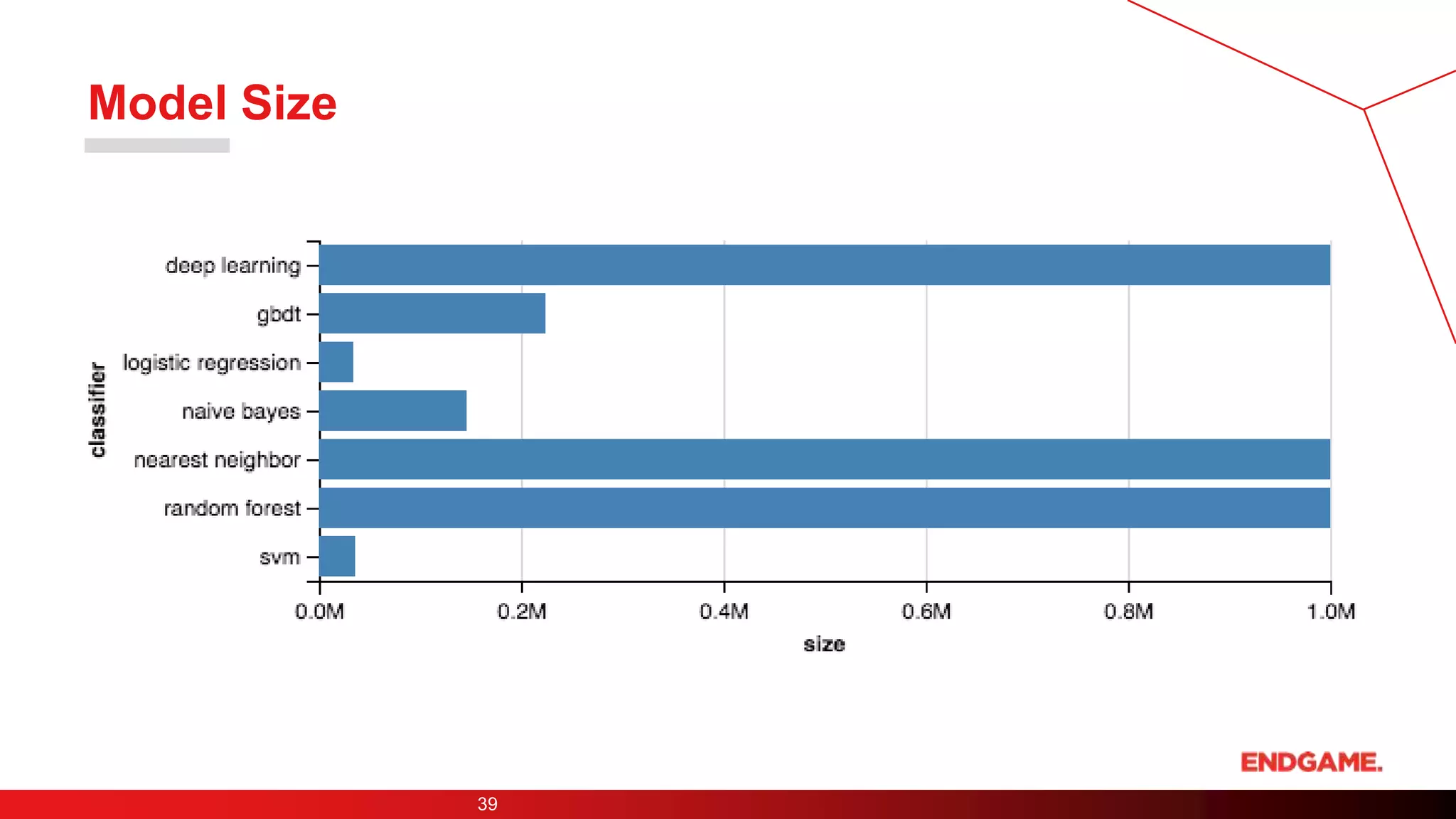

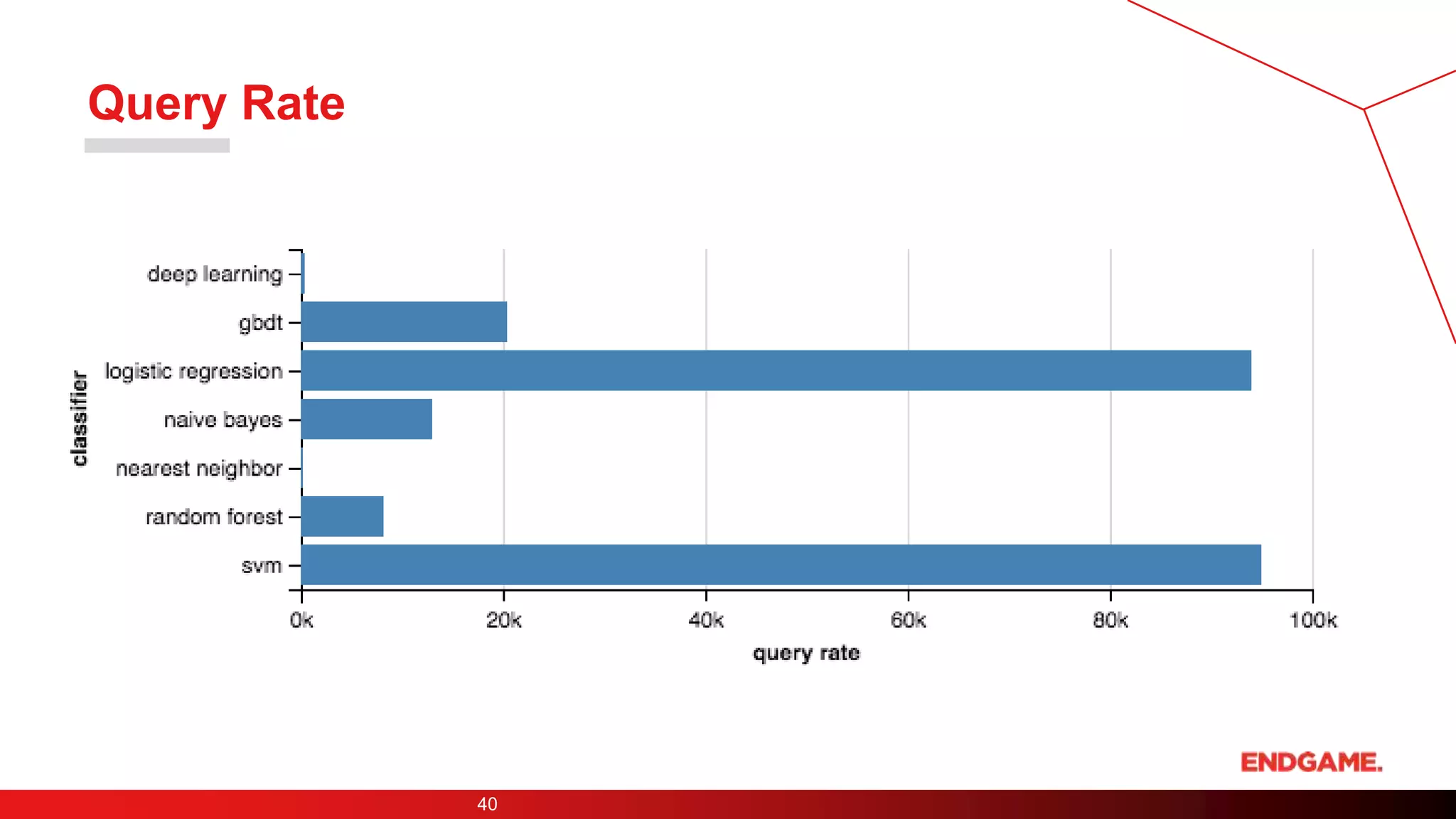

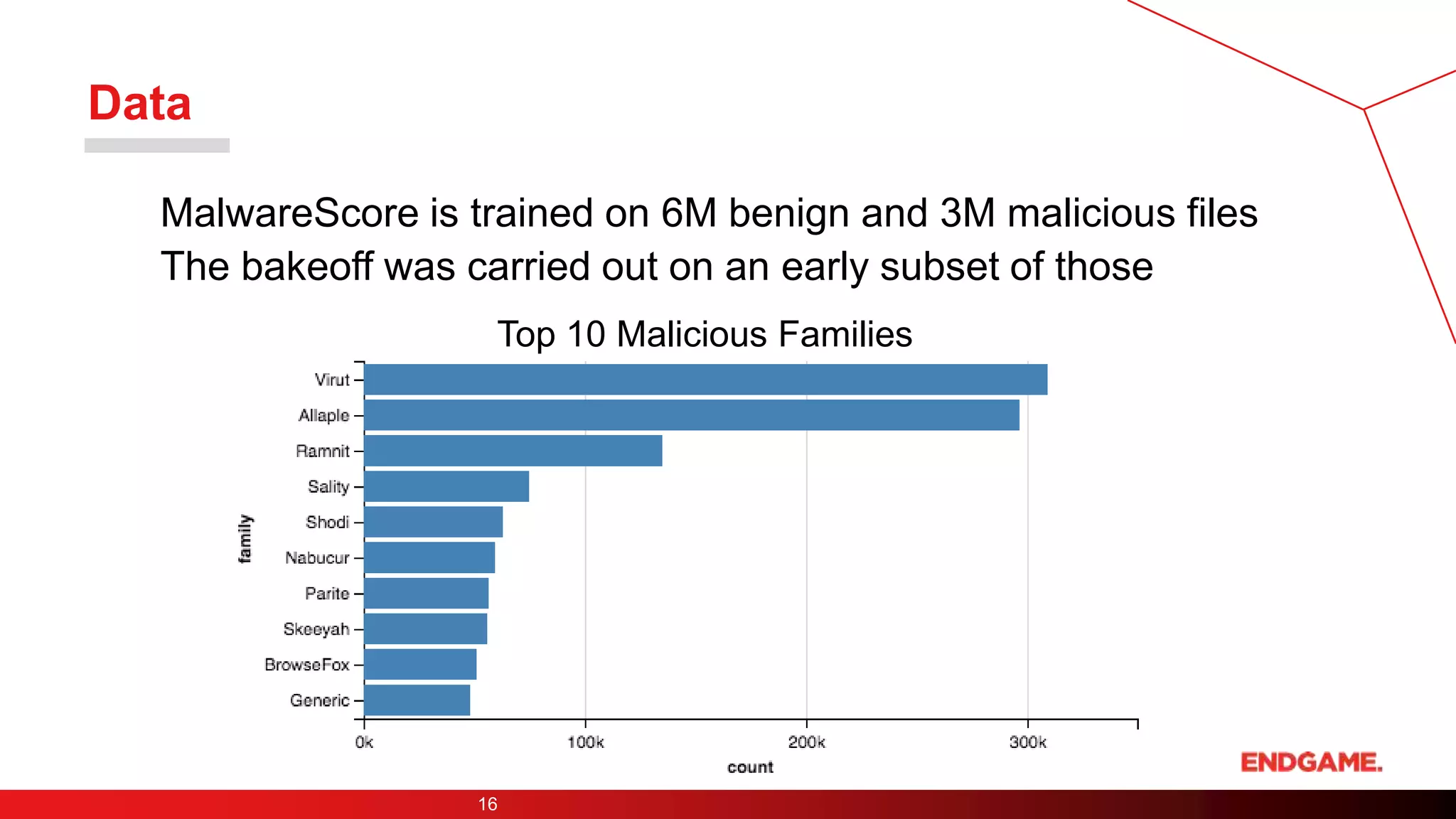

Phil Roth presented the results of a malware classification "bakeoff" that compared several machine learning algorithms: k-nearest neighbors, logistic regression, support vector machines, naive Bayes, random forests, gradient boosted decision trees, and deep learning. Across the three metrics (classification performance, model size, and query time), gradient boosted decision trees gave the best overall results. However, Roth noted that deep learning deserves more research because of its potential to learn directly from file content. He concluded that gradient boosted decision trees could be deployed on endpoints, while larger deep learning models could be used in the cloud after further development.

![Slide 6: Relevant (source: https://arstechnica.com/ [various])](https://image.slidesharecdn.com/dataintelligence-170625153147/75/Machine-Learning-Model-Bakeoff-6-2048.jpg)

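![Slide 27: Nearest Neighbor (cites Introduction to Statistical Learning, page 40)](https://image.slidesharecdn.com/dataintelligence-170625153147/75/Machine-Learning-Model-Bakeoff-27-2048.jpg)

```python
import pickle
from sklearn.neighbors import KNeighborsClassifier

tuned_parameters = {'n_neighbors': [1, 5, 11], 'weights': ['uniform', 'distance']}

# bake() is the presenter's tuning helper and is not shown on the slide.
# ix is also defined off-slide; it presumably selects a subset of the training rows.
model = bake(KNeighborsClassifier(algorithm='ball_tree'), tuned_parameters, X[ix], y[ix])

# pickle writes bytes, so the file must be opened in binary mode ('wb').
with open('Bakeoff_kNN.pkl', 'wb') as f:
    pickle.dump(model, f)
```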
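The slides call a `bake()` helper that is never shown. One plausible reading is an exhaustive cross-validated grid search that returns the best model refit on all the data, but that is an assumption. Below is a hypothetical version built on scikit-learn's `GridSearchCV`. The 3-fold split and the ROC AUC scoring are illustrative choices, and the data are synthetic.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV
from sklearn.neighbors import KNeighborsClassifier


def bake(estimator, tuned_parameters, X, y, cv=3):
    """Hypothetical stand-in for the presenter's bake(): grid-search the
    hyperparameters with k-fold cross-validation and return the best
    estimator, refit on all of X, y (GridSearchCV's default refit=True)."""
    search = GridSearchCV(estimator, tuned_parameters, cv=cv, scoring="roc_auc")
    search.fit(X, y)
    return search.best_estimator_


# Usage on synthetic data with the kNN grid from the slide.
X, y = make_classification(n_samples=600, n_features=20, random_state=0)
tuned_parameters = {'n_neighbors': [1, 5, 11], 'weights': ['uniform', 'distance']}
model = bake(KNeighborsClassifier(algorithm='ball_tree'), tuned_parameters, X, y)
print(model.n_neighbors, model.weights)
```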

![Slide 28: Logistic Regression (cites Introduction to Statistical Learning, page 131)](https://image.slidesharecdn.com/dataintelligence-170625153147/75/Machine-Learning-Model-Bakeoff-28-2048.jpg)

```python
import pickle
from sklearn.linear_model import SGDClassifier

tuned_parameters = {'alpha': [1e-5, 1e-4, 1e-3], 'l1_ratio': [0., 0.15, 0.85, 1.0]}

# SGD with log loss is logistic regression. scikit-learn >= 1.1 names this
# loss 'log_loss'; the older 'log' spelling was removed in 1.3.
model = bake(SGDClassifier(loss='log_loss', penalty='elasticnet'), tuned_parameters, X, y)

with open('Bakeoff_logisticRegression.pkl', 'wb') as f:
    pickle.dump(model, f)
```
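Both SGD slides tune `alpha` and `l1_ratio`. scikit-learn's SGD documentation describes `penalty='elasticnet'` as adding `alpha * R(w)` to the averaged loss, with R(w) = l1_ratio * sum(|w_j|) + (1 - l1_ratio) / 2 * sum(w_j^2). The grid therefore moves from pure L2 (l1_ratio = 0) to pure L1 (l1_ratio = 1). The pure-Python sketch below evaluates that penalty for a made-up weight vector. The function name `elasticnet_penalty` is illustrative.

```python
def elasticnet_penalty(w, alpha, l1_ratio):
    """alpha * R(w), where R(w) = l1_ratio * sum|w_j| + (1 - l1_ratio) / 2 * sum w_j**2."""
    l1 = sum(abs(wj) for wj in w)          # L1 norm: drives weights to exactly zero
    l2_sq = sum(wj * wj for wj in w)       # squared L2 norm: shrinks all weights smoothly
    return alpha * (l1_ratio * l1 + (1.0 - l1_ratio) / 2.0 * l2_sq)


w = [0.5, -2.0, 0.0, 1.5]  # hypothetical weights
for l1_ratio in [0.0, 0.15, 0.85, 1.0]:  # the values tuned on the slides
    print(l1_ratio, elasticnet_penalty(w, alpha=1e-4, l1_ratio=l1_ratio))
```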

![Slide 29: Support Vector Machine (cites Introduction to Statistical Learning, page 342)](https://image.slidesharecdn.com/dataintelligence-170625153147/75/Machine-Learning-Model-Bakeoff-29-2048.jpg)

```python
import pickle
from sklearn.linear_model import SGDClassifier

tuned_parameters = {'alpha': [1e-5, 1e-4, 1e-3], 'l1_ratio': [0., 0.15, 0.85, 1.0]}

# Same SGD learner as logistic regression; hinge loss makes it a linear SVM.
model = bake(SGDClassifier(loss='hinge', penalty='elasticnet'), tuned_parameters, X, y)

with open('Bakeoff_SVM.pkl', 'wb') as f:
    pickle.dump(model, f)
```
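The SVM and logistic regression slides differ only in `loss`. Take a label y in {-1, +1} and a raw linear score f(x) = w·x + b. Hinge loss is max(0, 1 - y·f(x)), and log loss is log(1 + exp(-y·f(x))). The pure-Python comparison below uses illustrative function names.

```python
import math


def hinge_loss(y, score):
    """Hinge loss for y in {-1, +1}: zero once the sample clears the margin (y * score >= 1)."""
    return max(0.0, 1.0 - y * score)


def log_loss(y, score):
    """Logistic loss for y in {-1, +1}: log(1 + exp(-y * score)), never exactly zero."""
    return math.log1p(math.exp(-y * score))


# Losses for a positive sample (y = +1) at several raw scores.
for score in (-1.0, 0.0, 0.5, 1.0, 2.0):
    print(f"score={score:+.1f}  hinge={hinge_loss(1, score):.3f}  log={log_loss(1, score):.3f}")
```

Hinge loss is exactly zero for samples beyond the margin, so those samples stop influencing the SGD updates. Log loss stays positive and models a probability, which is why scikit-learn's `SGDClassifier` offers `predict_proba` with `loss='log_loss'` but not with `loss='hinge'`.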

![Slide 31: Random Forest (cites Introduction to Statistical Learning, page 308)](https://image.slidesharecdn.com/dataintelligence-170625153147/75/Machine-Learning-Model-Bakeoff-31-2048.jpg)

```python
import pickle
from sklearn.ensemble import RandomForestClassifier

tuned_parameters = {'n_estimators': [20, 50, 100],
                    'min_samples_split': [2, 5, 10],
                    'max_features': ["sqrt", 0.1, 0.2]}

model = bake(RandomForestClassifier(oob_score=False), tuned_parameters, X, y)

with open('Bakeoff_randomforest.pkl', 'wb') as f:
    pickle.dump(model, f)
```
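The random forest grid mixes a string and two floats for `max_features`. According to scikit-learn's documentation, a float is a fraction of the features, so max(1, int(max_features * n_features)) features are tried at each split. The string "sqrt" means the square root of the feature count. The sketch below applies that rule to a hypothetical 1,000-feature input. The function name is illustrative.

```python
import math


def features_per_split(max_features, n_features):
    """Features considered at each split, following scikit-learn's documented
    rules for "sqrt", float (fraction), and int values of max_features."""
    if max_features == "sqrt":
        return max(1, int(math.sqrt(n_features)))
    if isinstance(max_features, float):
        return max(1, int(max_features * n_features))
    return max_features  # an int is used as-is


n_features = 1000  # hypothetical feature count, not from the talk
for mf in ["sqrt", 0.1, 0.2]:  # the values tuned on the slide
    print(mf, features_per_split(mf, n_features))
```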

![Slide 32: Gradient Boosted Decision Trees (cites http://scikit-learn.org/stable/auto_examples/index.html)](https://image.slidesharecdn.com/dataintelligence-170625153147/75/Machine-Learning-Model-Bakeoff-32-2048.jpg)

```python
import pickle
from xgboost import XGBClassifier

tuned_parameters = {'max_depth': [3, 4, 5],
                    'n_estimators': [20, 50, 100],
                    'colsample_bytree': [0.9, 1.0]}

model = bake(XGBClassifier(), tuned_parameters, X, y)

with open('Bakeoff_xgboost.pkl', 'wb') as f:
    pickle.dump(model, f)
```
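Each `tuned_parameters` dictionary defines a grid. If `bake()` searches it exhaustively, the number of candidate models is the product of the list lengths. That is an assumption, since the helper is not shown. The sketch below tallies the grids from these slides. The 3-fold cross-validation count is an illustrative assumption, because the slides do not state one.

```python
from itertools import product

# Grids copied from the slides.
grids = {
    "kNN": {'n_neighbors': [1, 5, 11], 'weights': ['uniform', 'distance']},
    "logistic regression": {'alpha': [1e-5, 1e-4, 1e-3], 'l1_ratio': [0., 0.15, 0.85, 1.0]},
    "SVM": {'alpha': [1e-5, 1e-4, 1e-3], 'l1_ratio': [0., 0.15, 0.85, 1.0]},
    "random forest": {'n_estimators': [20, 50, 100], 'min_samples_split': [2, 5, 10],
                      'max_features': ["sqrt", 0.1, 0.2]},
    "xgboost": {'max_depth': [3, 4, 5], 'n_estimators': [20, 50, 100],
                'colsample_bytree': [0.9, 1.0]},
}

N_FOLDS = 3  # assumption: the fold count is not given on the slides


def n_candidates(grid):
    """Number of hyperparameter combinations in an exhaustive grid."""
    return sum(1 for _ in product(*grid.values()))


counts = {name: n_candidates(grid) for name, grid in grids.items()}
for name, c in counts.items():
    print(f"{name}: {c} candidates, {c * N_FOLDS} fits with {N_FOLDS}-fold CV")
```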

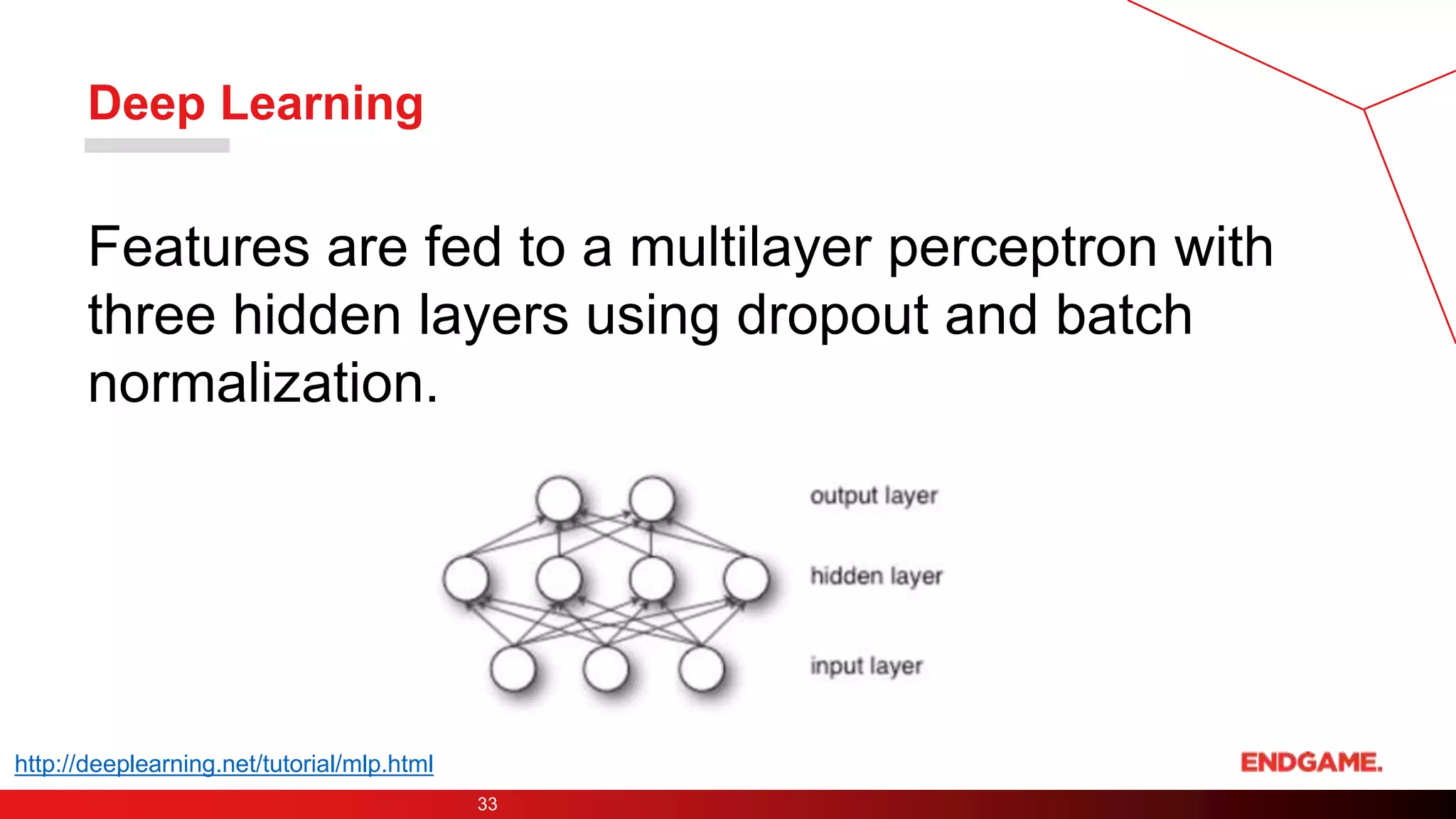

![Slide 34: deep learning model in Keras](https://image.slidesharecdn.com/dataintelligence-170625153147/75/Machine-Learning-Model-Bakeoff-34-2048.jpg)

```python
from keras import Input
from keras.models import Sequential
from keras.layers import BatchNormalization, Dense, Dropout, PReLU

# The slide does not show these hyperparameter values; they are illustrative only.
n_units = 256          # input width and hidden-layer width
input_dropout = 0.2
hidden_dropout = 0.5

model = Sequential()
model.add(Input(shape=(n_units,)))
model.add(Dropout(input_dropout))
model.add(BatchNormalization())

# Three identical hidden blocks: Dense -> PReLU -> Dropout -> BatchNormalization
for _ in range(3):
    model.add(Dense(n_units))
    model.add(PReLU())
    model.add(Dropout(hidden_dropout))
    model.add(BatchNormalization())

model.add(Dense(1, activation='sigmoid'))  # probability of the positive class
model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])
```
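Model size was one of the bakeoff's metrics, and this network grows quadratically with `n_units`. The parameter count below assumes Keras defaults:

- `Dense` includes biases.
- `PReLU` learns one slope per unit.
- Each `BatchNormalization` holds four per-unit vectors: two trainable (gamma, beta) and two moving statistics.

Under those assumptions the network has 3·n² + 23·n + 1 parameters. The pure-Python sketch below uses illustrative function names.

```python
def dense_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out


def slide_network_params(n_units):
    """Total parameters (trainable + BatchNorm moving statistics) of the slide's network."""
    bn = 4 * n_units      # gamma, beta, moving mean, moving variance
    prelu = n_units       # one learned slope per unit (default shared_axes=None)
    total = bn            # BatchNormalization after the input Dropout (Dropout has no weights)
    for _ in range(3):    # three Dense -> PReLU -> Dropout -> BatchNorm blocks
        total += dense_params(n_units, n_units) + prelu + bn
    total += dense_params(n_units, 1)  # sigmoid output unit
    return total


def slide_network_trainable(n_units):
    """Trainable parameters: drop the 2 * n_units moving statistics in each of the 4 BatchNorm layers."""
    return slide_network_params(n_units) - 4 * 2 * n_units


for n in (64, 256, 1024):
    total = slide_network_params(n)
    print(n, total, slide_network_trainable(n), f"~{total * 4 / 1e6:.2f} MB as float32")
```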