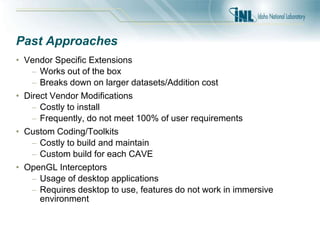

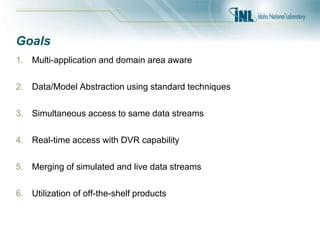

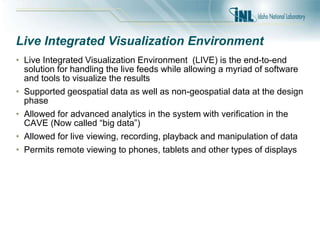

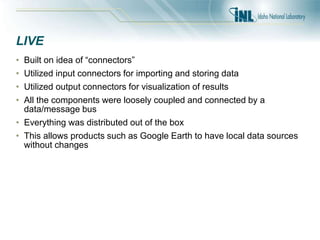

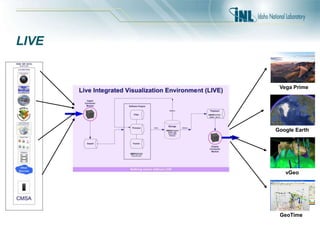

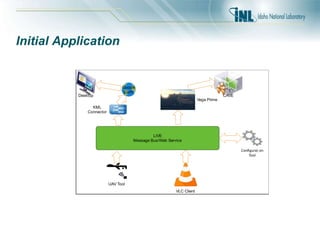

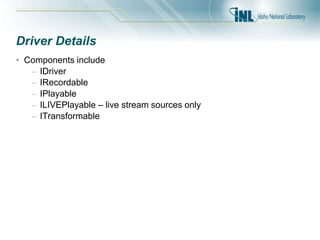

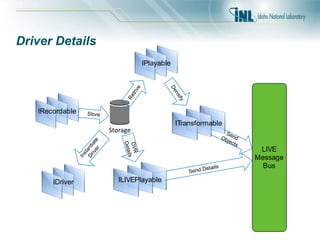

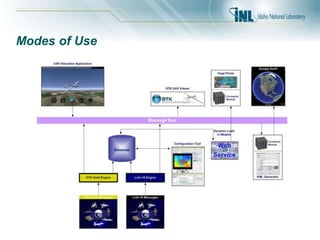

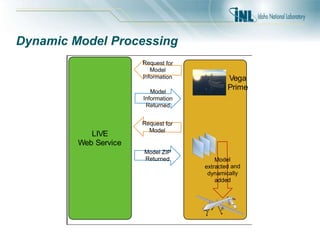

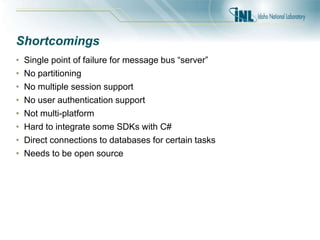

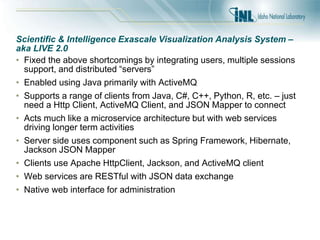

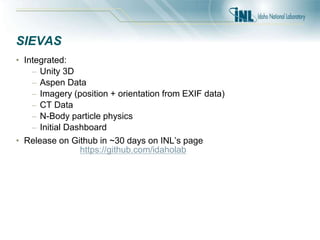

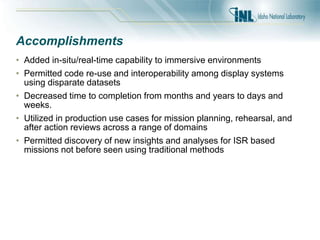

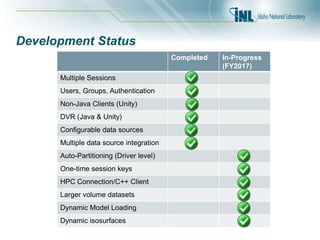

The document discusses the development of the Live Integrated Visualization Environment (LIVE), which aims to enhance real-time data visualization for the Department of Defense. It outlines earlier visualization approaches and their shortcomings, which led to a more effective framework that supports a variety of data sources and analytical requirements. It also highlights accomplishments such as improved interoperability, reduced completion times, and the integration of advanced technologies in immersive environments.