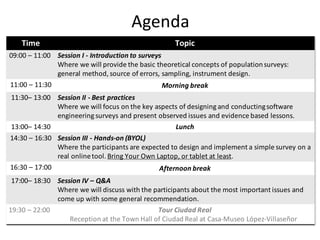

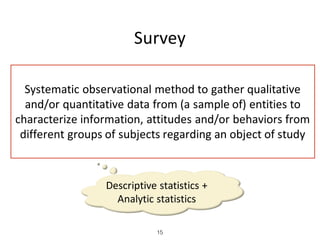

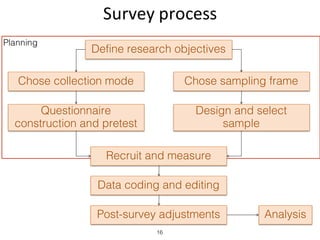

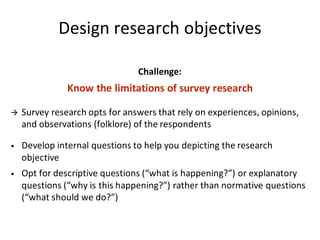

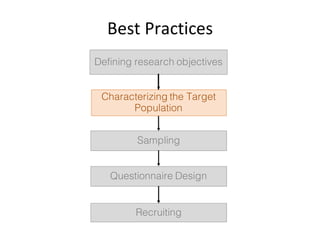

This document outlines an agenda for a workshop on surveys in software engineering. The workshop will cover four sessions: an introduction to surveys, best practices for designing and conducting software engineering surveys, a hands-on session for designing a survey using an online tool, and a question and answer session. The introduction will cover basic concepts of survey research including research objectives, sampling, instrument design, and analysis. The best practices session will focus on key aspects of software engineering surveys and lessons learned. Participants are encouraged to actively engage by asking questions and sharing their experiences with survey research.

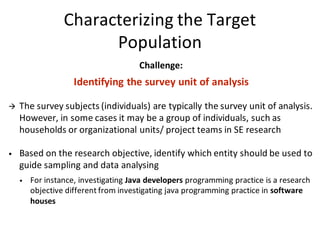

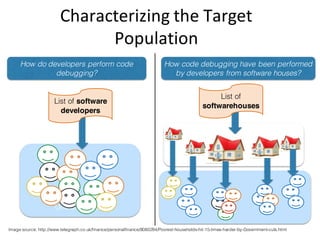

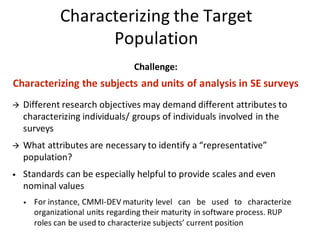

![Characterizing the Target Population](https://image.slidesharecdn.com/iasese-2016-surveysinsenobg-180116153632/85/Surveys-in-Software-Engineering-64-320.jpg)

Challenge: Characterizing the subjects and units of analysis in SE surveys

• Individuals can be characterized through attributes such as experience in the research context, experience in SE, current professional role, location, and highest academic degree.

• Organizations can be characterized through attributes such as size (scale typically based on the number of employees), industry segment (software factory, avionics, finance, health, telecommunications, etc.), location, and organization type (government, private company, university, etc.).

• Project teams can be characterized through attributes such as project size, team size, client/product domain (avionics, finance, health, telecommunications, etc.), and physical distribution.