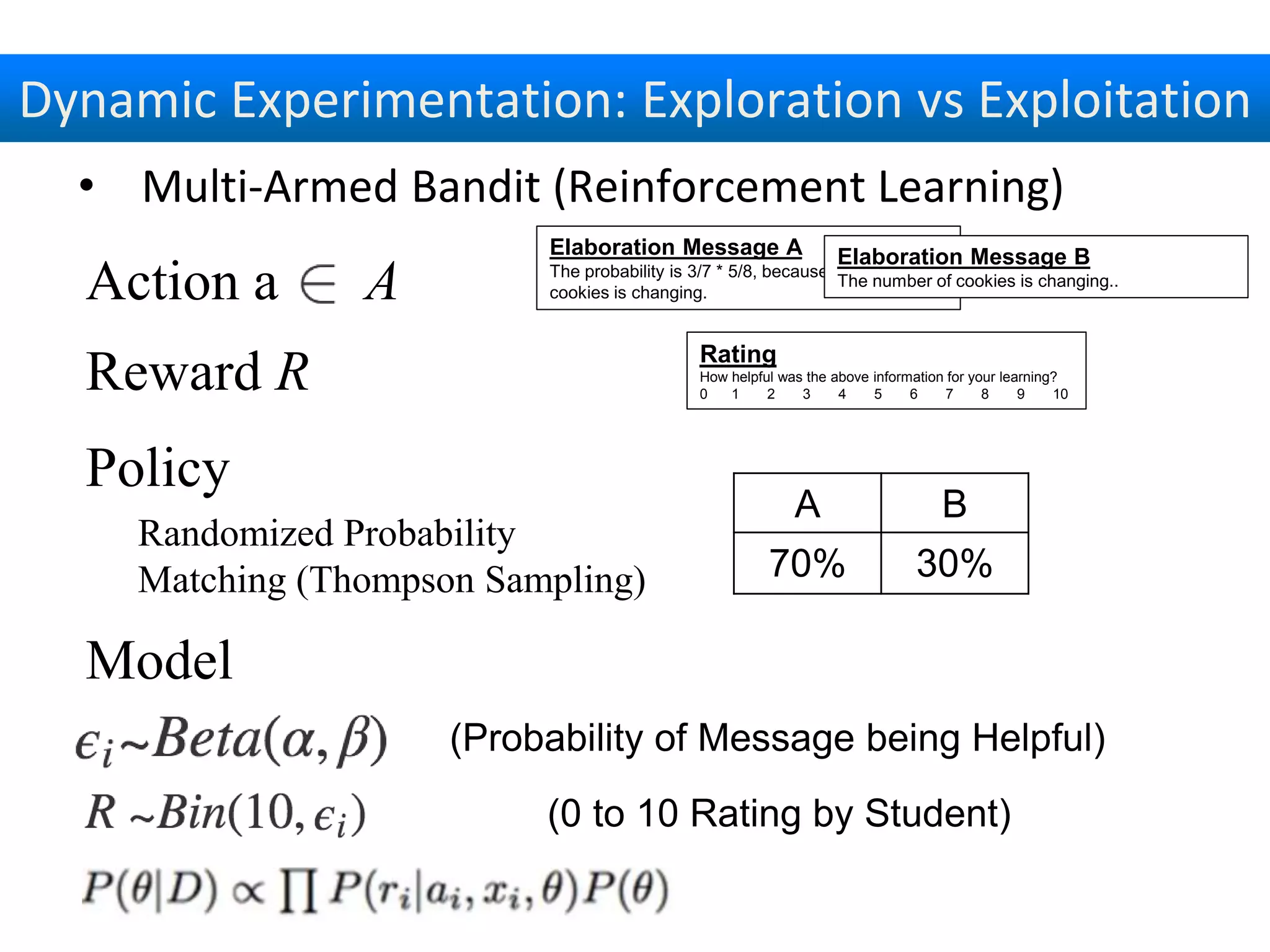

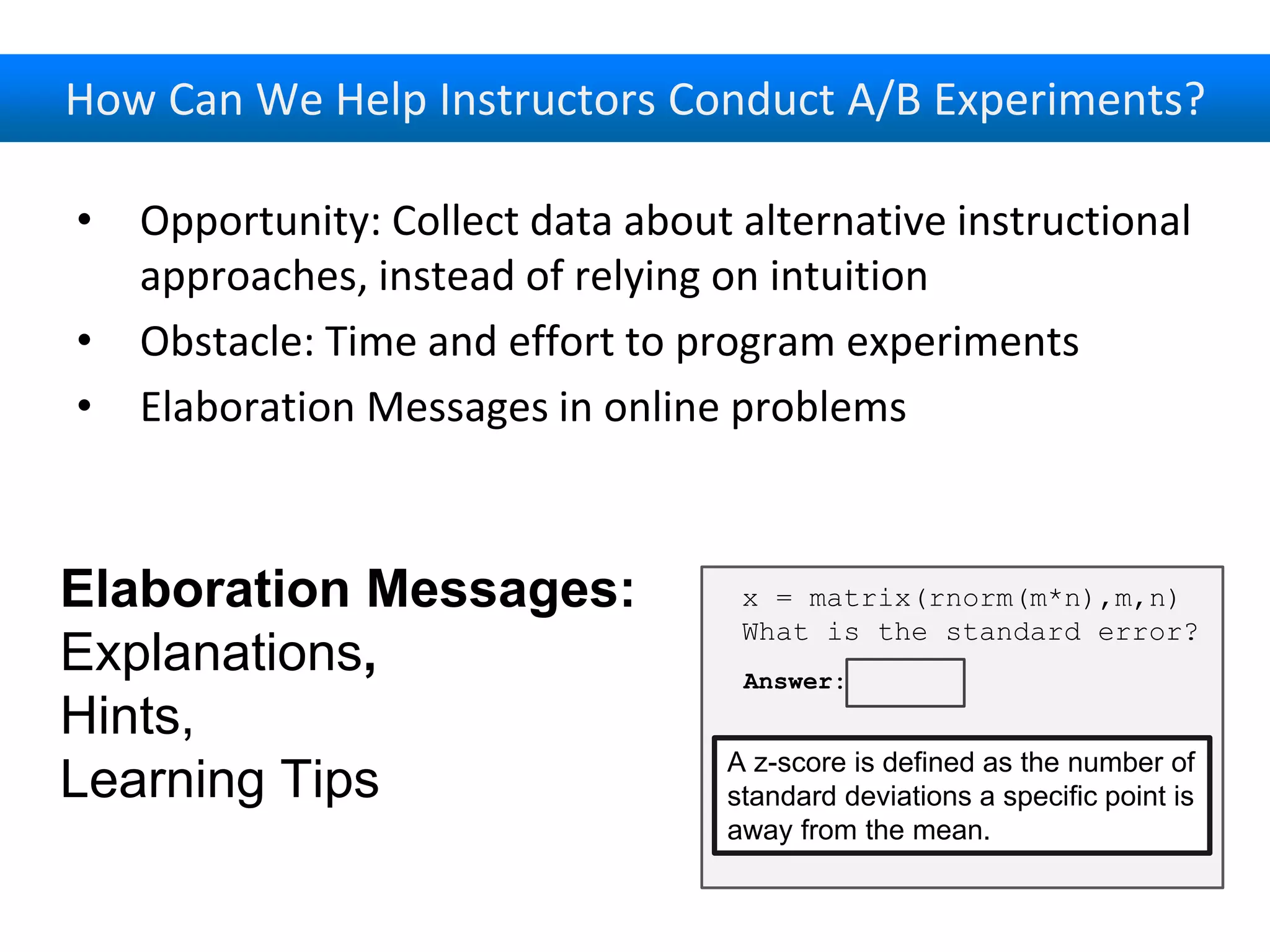

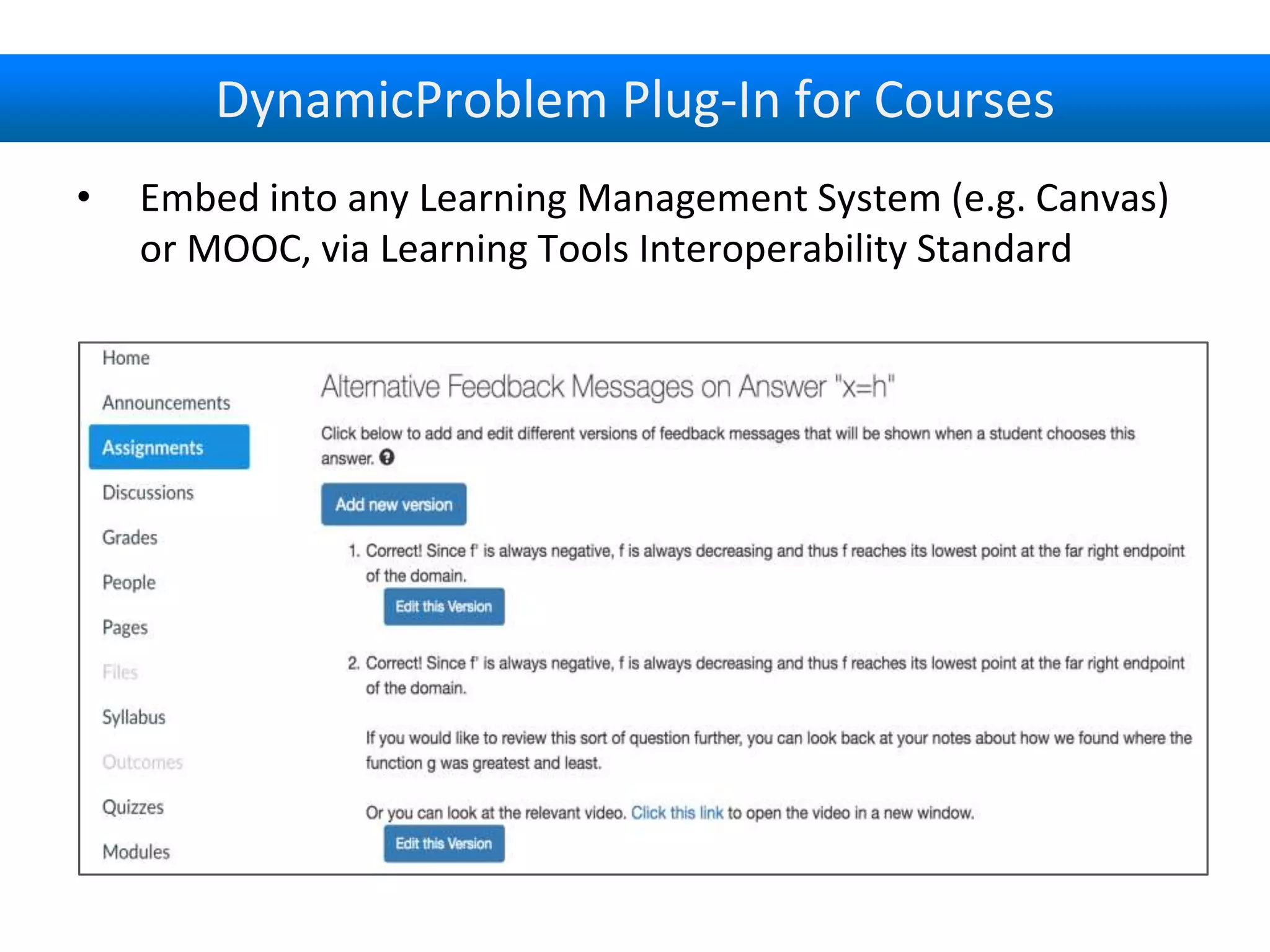

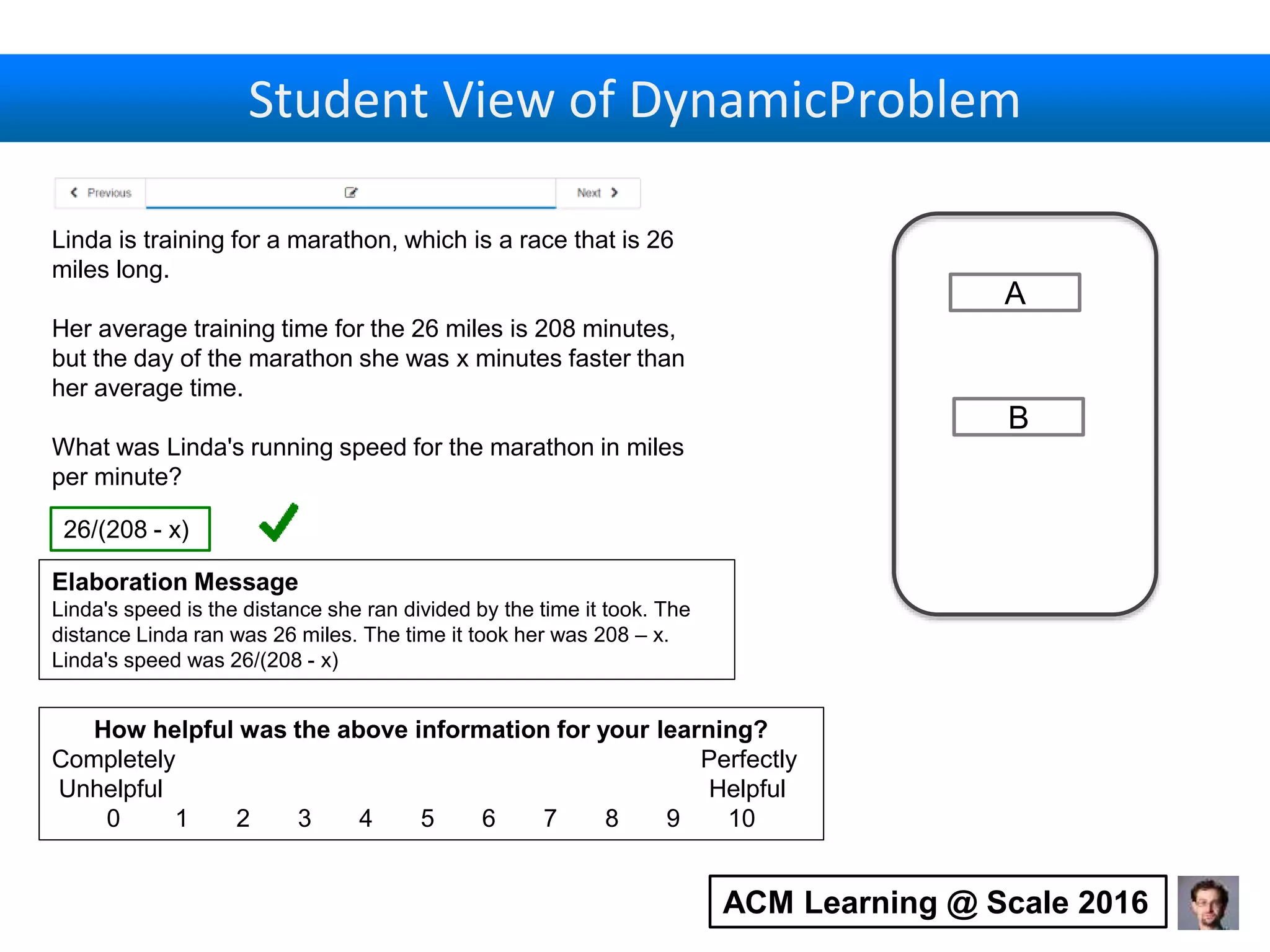

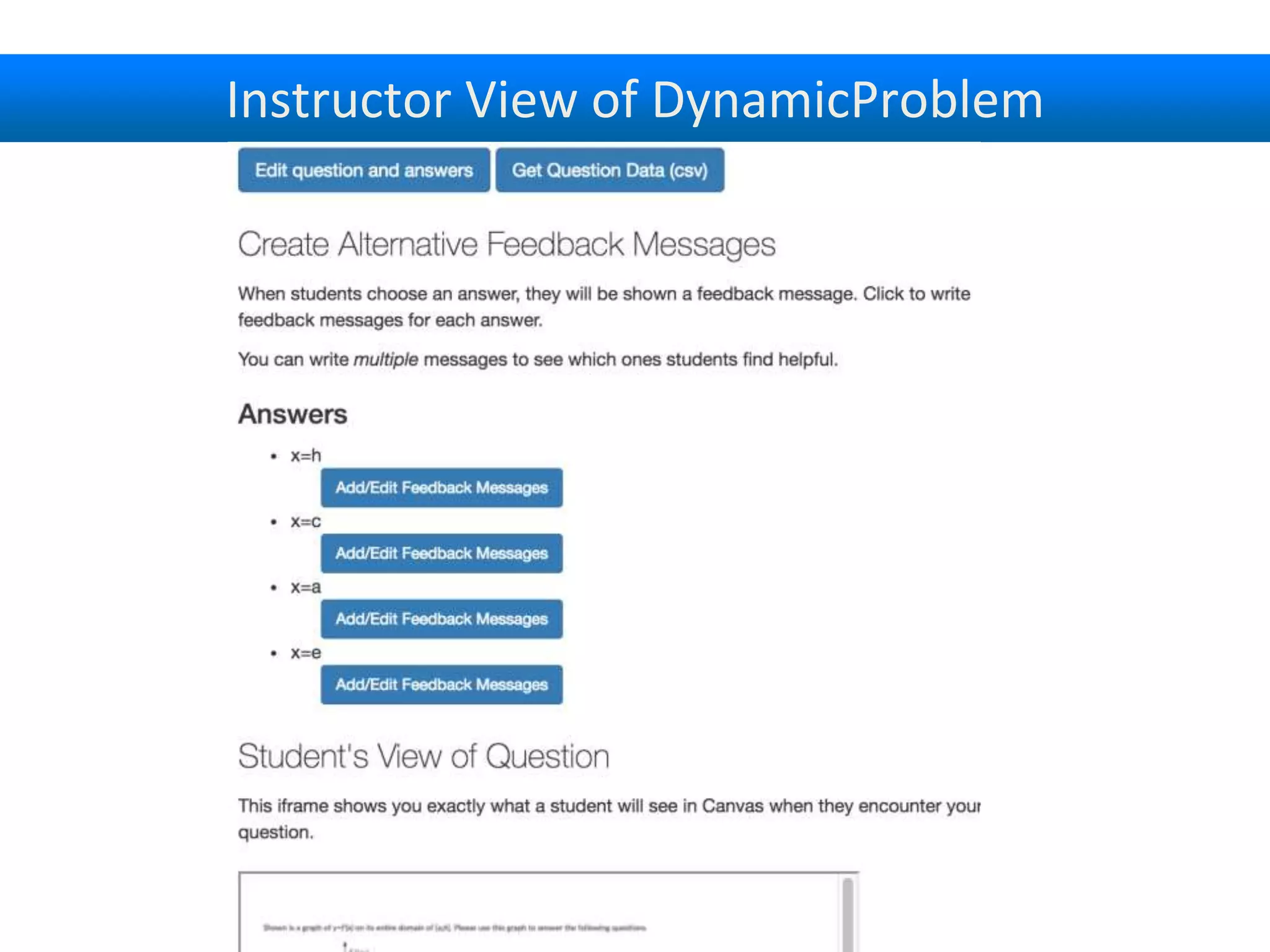

This talk presents the development and deployment of DynamicProblem, an instructor-centered tool for running A/B experiments in educational settings. The tool reduces the programming burden on instructors, letting them collect data on instructional approaches while showing students detailed messages that aid learning. Observations from deploying the tool with three instructors suggest it can improve student experiences and make research practical within an instructor's own course.

![Enhancing Online Problems Through Instructor-Centered Tools for Randomized Experiments
Joseph Jay Williams
University of Toronto Computer Science (Nat. U. of Singapore)
www.josephjaywilliams.com/papers, tiny.cc/icepdf
Anna Rafferty, Andrew Ang, Dustin Tingley, Walter Lasecki, Juho Kim
[I’m originally from the Caribbean, Trinidad and Tobago]
Postdoc at U of T (www.josephjaywilliams.com/postdoc)
Computer Science PhD positions to do Education Research
CHI 2019 subcommittee on “Learning/Families” (Amy Ogan & I are SCs)](https://image.slidesharecdn.com/chi2019enhancingonlineproblemsthroughinstructor-centeredtoolsforrandomizedexperiments-180424161903/75/CHI-Computer-Human-Interaction-2019-enhancing-online-problems-through-instructor-centered-tools-for-randomized-experiments-1-2048.jpg)

![Observations from Deployment with 3 Instructors
• Lowered Barriers: “not aware of any tools that do this sort of thing”, “even if I found one, wouldn’t have the technical expertise to incorporate it in my course”
• Reflection on pedagogy: “I never really seriously considered [testing] multiple versions as we are now doing. So even if we don't get any significant data, that will have been a benefit in my mind”
• Making research practical: “a valuable tool. Putting in the hands of the teacher to understand how their students learn. Not just in broad terms, but specifically in their course”….](https://image.slidesharecdn.com/chi2019enhancingonlineproblemsthroughinstructor-centeredtoolsforrandomizedexperiments-180424161903/75/CHI-Computer-Human-Interaction-2019-enhancing-online-problems-through-instructor-centered-tools-for-randomized-experiments-13-2048.jpg)