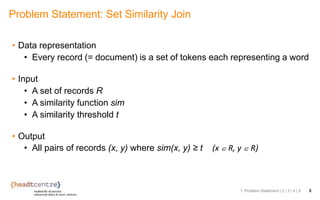

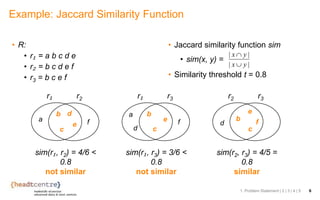

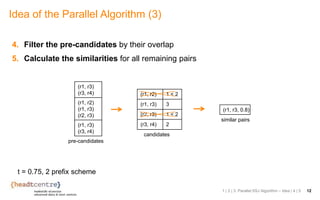

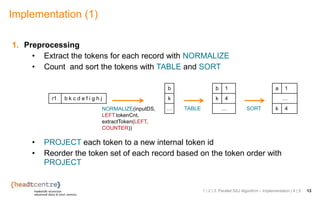

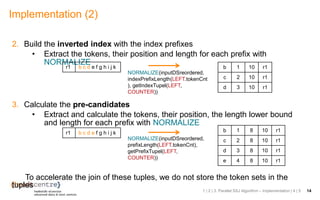

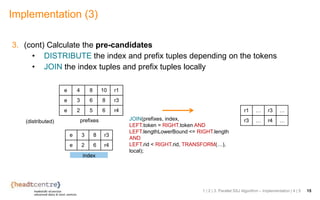

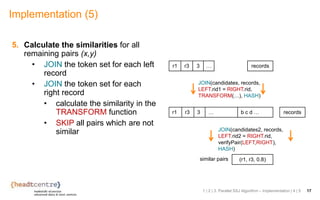

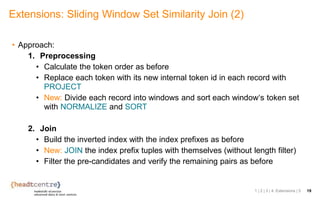

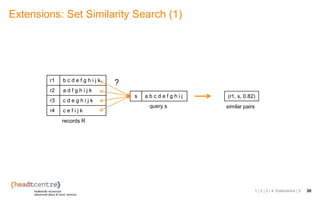

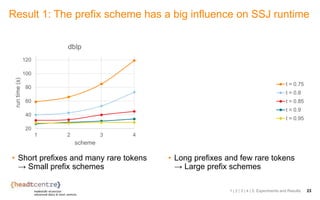

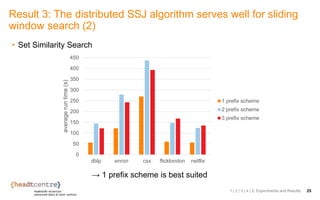

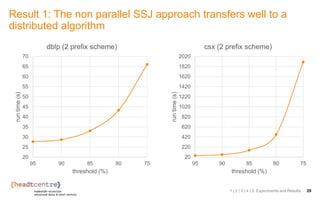

The document addresses the problem of identifying all pairs of similar documents in large datasets, focusing on parallel set similarity join (SSJ) algorithms. It presents a filter-verification framework that avoids comparing every pair of documents: filters such as prefix filtering and length filtering cheaply discard pairs that cannot reach the similarity threshold, and only the surviving candidate pairs are verified with an exact similarity computation. The document also reports experiments comparing the performance of different algorithms on several datasets, which indicate that the proposed methods are effective.
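The filter-verification idea can be illustrated with a small single-machine sketch. It is not the parallel algorithm from the slides. It assumes Jaccard similarity with a threshold `t`, a global token order by ascending frequency, and the standard prefix length `|r| - ceil(t * |r|) + 1`. The names `ssj_pairs` and `jaccard` are hypothetical and chosen only for this example.

```python
import math
from collections import Counter, defaultdict


def jaccard(a, b):
    """Exact Jaccard similarity of two non-empty sets."""
    inter = len(a & b)
    return inter / (len(a) + len(b) - inter)


def ssj_pairs(records, t):
    """Return sorted (i, j) pairs, i < j, with Jaccard(records[i], records[j]) >= t.

    Sketch of a filter-verification self-join (assumes 0 < t <= 1).
    """
    eps = 1e-9  # guards ceil() and comparisons against float rounding
    sets = [set(r) for r in records]

    # Global order: rare tokens first, ties broken by the token itself.
    freq = Counter(tok for s in sets for tok in s)
    ordered = [sorted(s, key=lambda tok: (freq[tok], tok)) for s in sets]

    index = defaultdict(list)  # prefix token -> ids of records already indexed
    results = []
    for rid, toks in enumerate(ordered):
        n = len(toks)
        if n == 0:
            continue
        # Prefix filter: similar records must share a token in their prefixes.
        prefix_len = n - math.ceil(t * n - eps) + 1
        candidates = set()
        for tok in toks[:prefix_len]:
            for other in index[tok]:
                m = len(ordered[other])
                # Length filter: Jaccard >= t requires min size >= t * max size.
                if min(n, m) >= t * max(n, m) - eps:
                    candidates.add(other)
            index[tok].append(rid)
        # Verification: compute the exact similarity for surviving candidates.
        for other in candidates:
            if jaccard(sets[other], sets[rid]) >= t - eps:
                results.append((other, rid))
    return sorted(results)
```

Calling `ssj_pairs(records, 0.6)` on a list of token sets returns the index pairs whose Jaccard similarity is at least 0.6. In this sketch the filters only reduce how many pairs reach `jaccard`; they do not change the result, which matches a brute-force comparison of all pairs.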