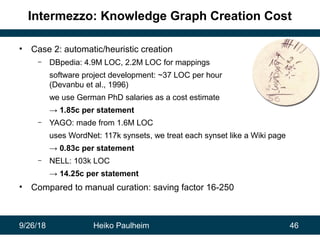

The document discusses knowledge graphs, their definitions, and creation methods, emphasizing the differences in their structures and the trade-offs between accuracy and user involvement. It also examines the utility of knowledge graphs in machine learning and the overlap between different knowledge graphs. The findings highlight the strengths and weaknesses of various public knowledge graphs such as DBpedia, Wikidata, and YAGO, alongside cost considerations for their creation.

![9/26/18 Heiko Paulheim 50

WebDataCommons Microdata Corpus

• Structured markup on the Web can be extracted to RDF

– See W3C Interest Group Note: Microdata to RDF [1]

[1] http://www.w3.org/TR/microdata-rdf/

<div itemscope

itemtype="http://schema.org/PostalAddress">

<span itemprop="name">Data and Web Science Group</span>

<span itemprop="addressLocality">Mannheim</span>,

<span itemprop="postalCode">68131</span>

<span itemprop="addressCountry">Germany</span>

</div>

_:1 a <http://schema.org/PostalAddress> .

_:1 <http://schema.org/name> "Data and Web Science Group" .

_:1 <http://schema.org/addressLocality> "Mannheim" .

_:1 <http://schema.org/postalCode> "68131" .

_:1 <http://schema.org/adressCounty> "Germany" .](https://image.slidesharecdn.com/rwsummerschool2018-180926072544/85/Machine-Learning-with-and-for-Semantic-Web-Knowledge-Graphs-50-320.jpg)

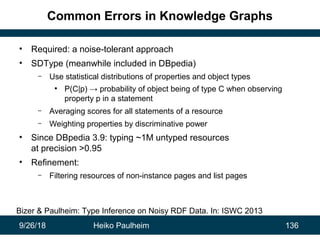

![9/26/18 Heiko Paulheim 159

Common Errors in Knowledge Graphs

• So far, we have looked at relation assertions

• Numerical values can also be problematic…

– Recap: Wikipedia is made for human consumption

• The following are all valid representations of the same height value

(and perfectly understandable by humans)

– 6 ft 6 in, 6ft 6in, 6'6'', 6'6”, 6´6´´, …

– 1.98m, 1,98m, 1m 98, 1m 98cm, 198cm, 198 cm, …

– 6 ft 6 in (198 cm), 6ft 6in (1.98m), 6'6'' (1.98 m), …

– 6 ft 6 in[1]

, 6 ft 6 in [citation needed]

, …

– ...

Wienand & Paulheim: Detecting Incorrect Numerical Data in DBpedia. ESWC 2014

Fleischhacker et al.: Detecting Errors in Numerical Linked Data Using Cross-Checked

Outlier Detection. ISWC 2014](https://image.slidesharecdn.com/rwsummerschool2018-180926072544/85/Machine-Learning-with-and-for-Semantic-Web-Knowledge-Graphs-159-320.jpg)