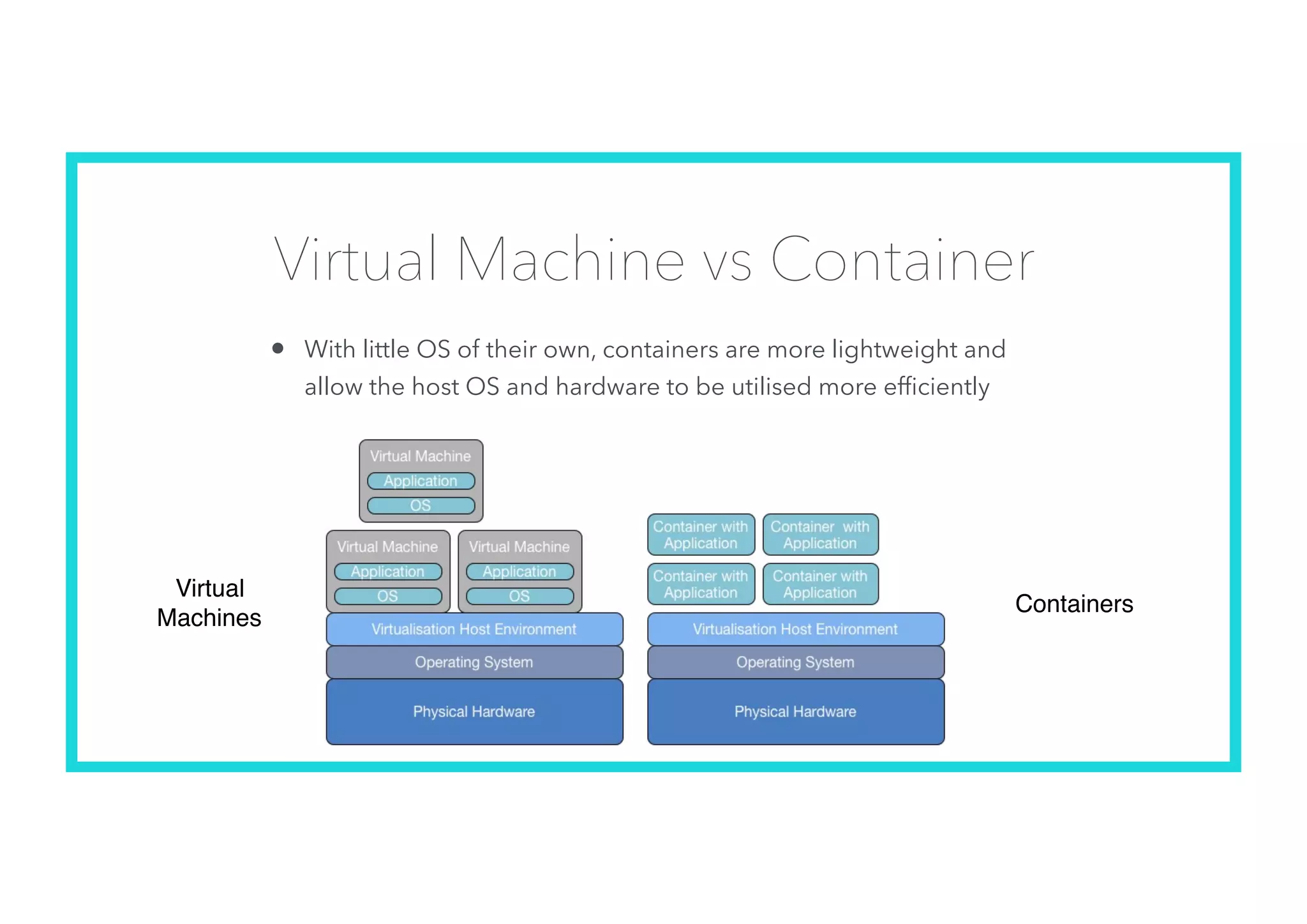

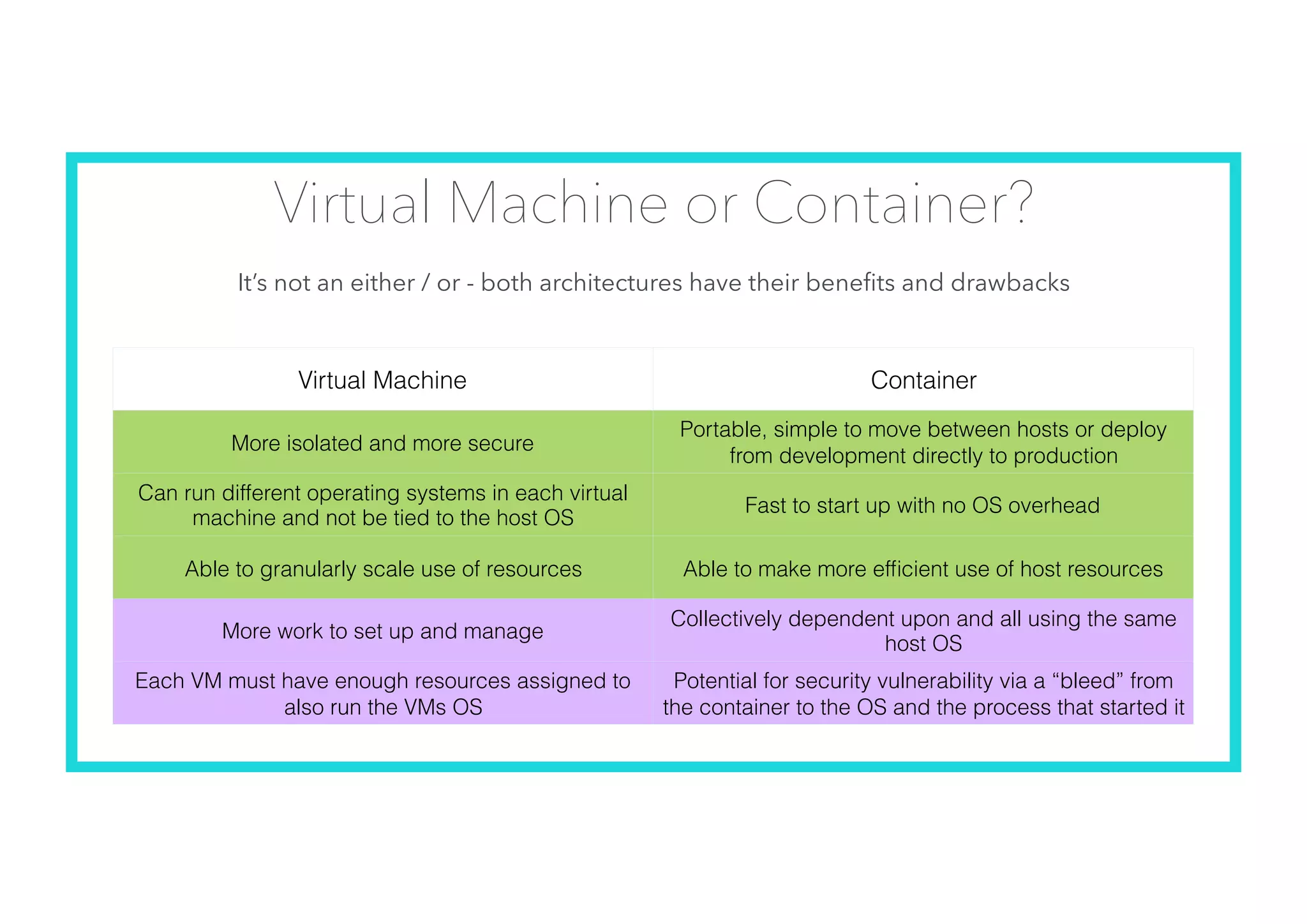

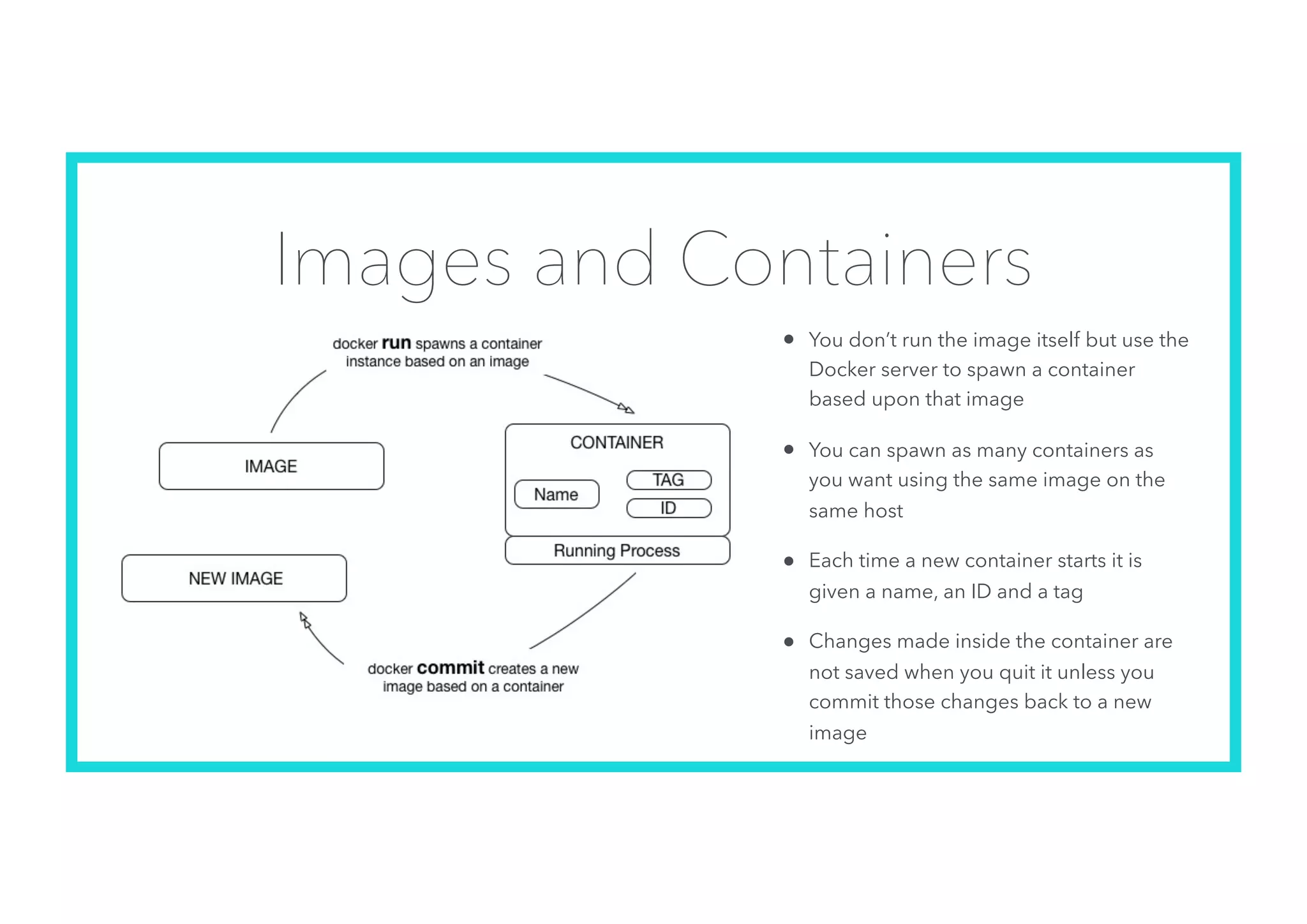

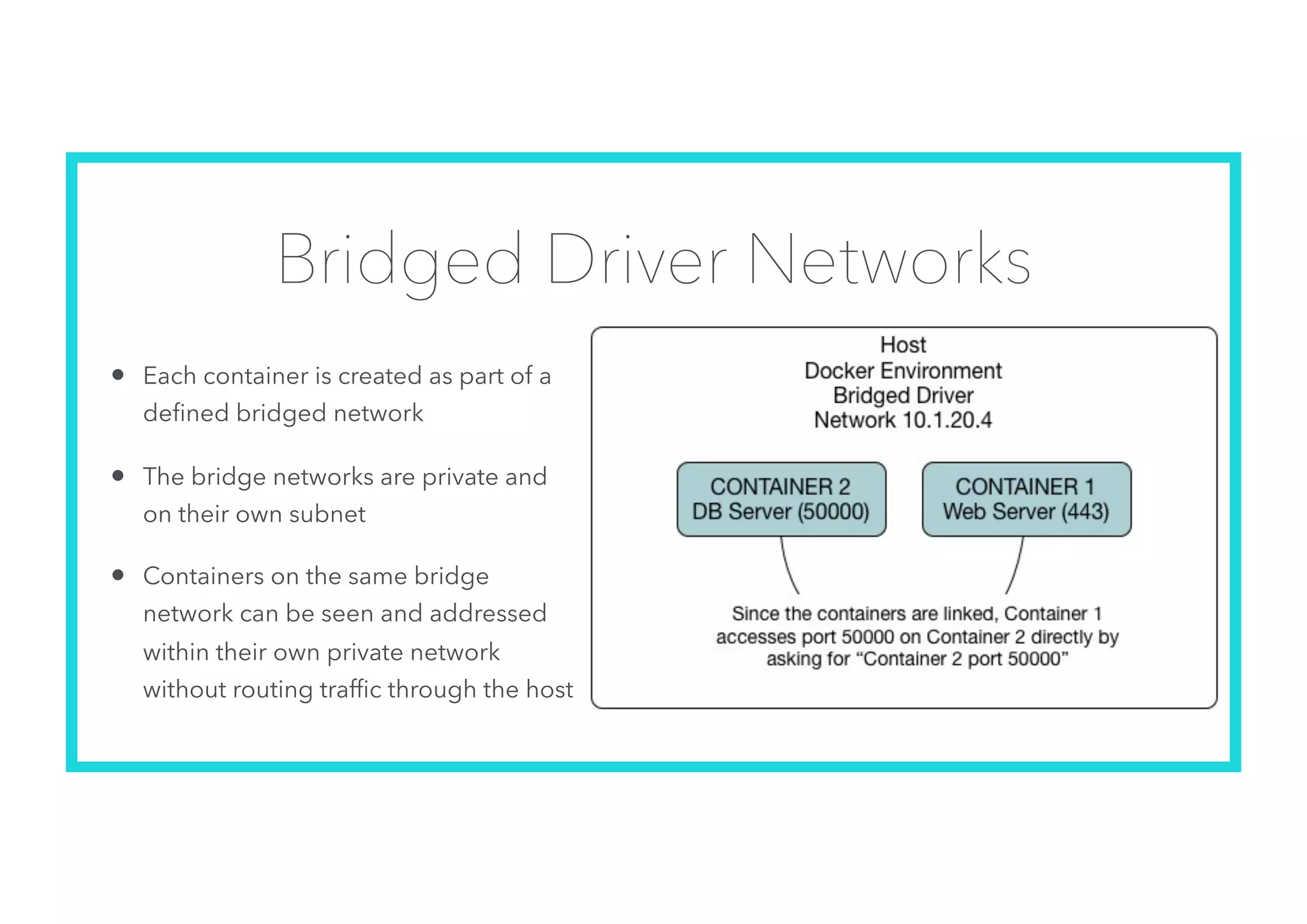

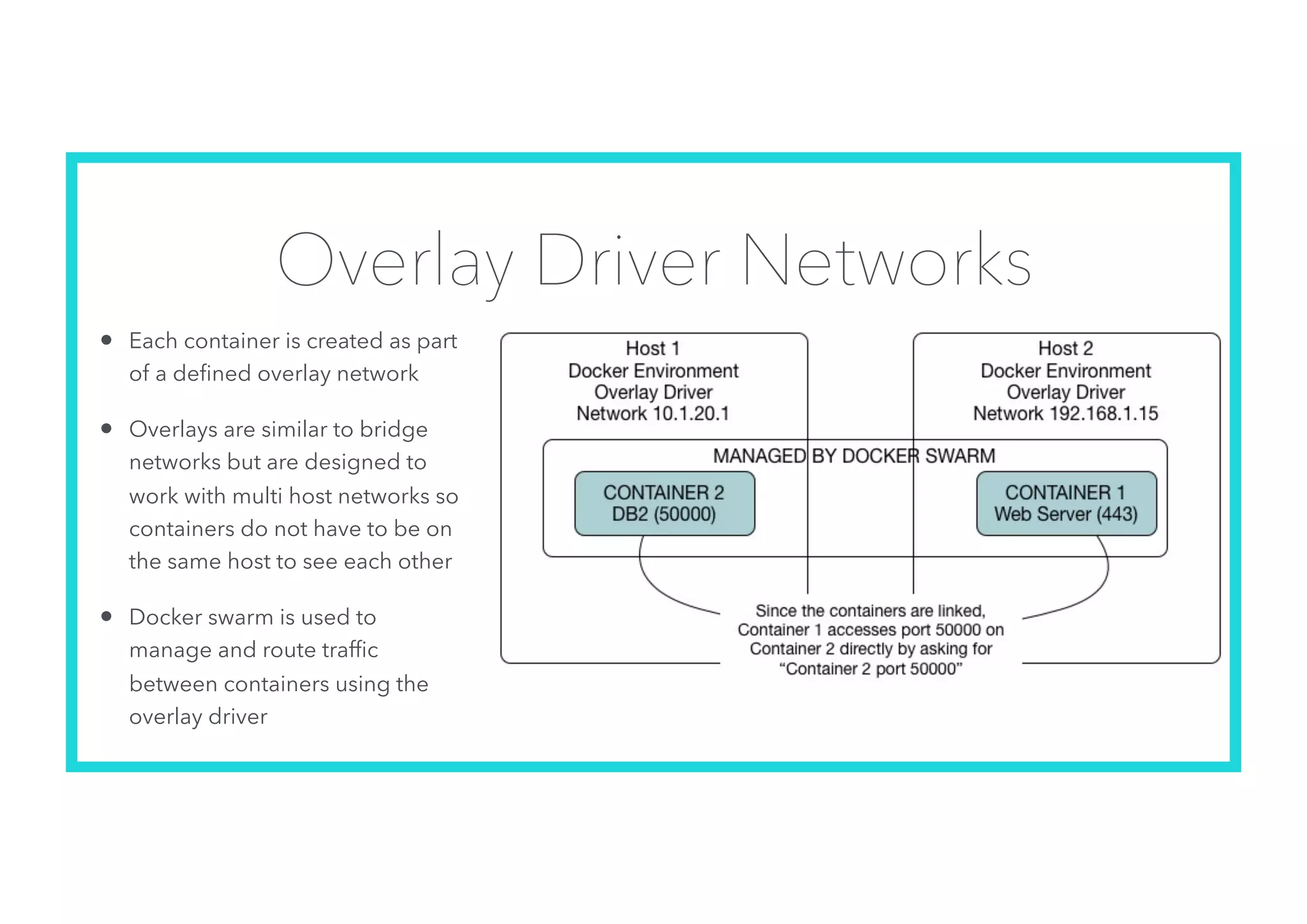

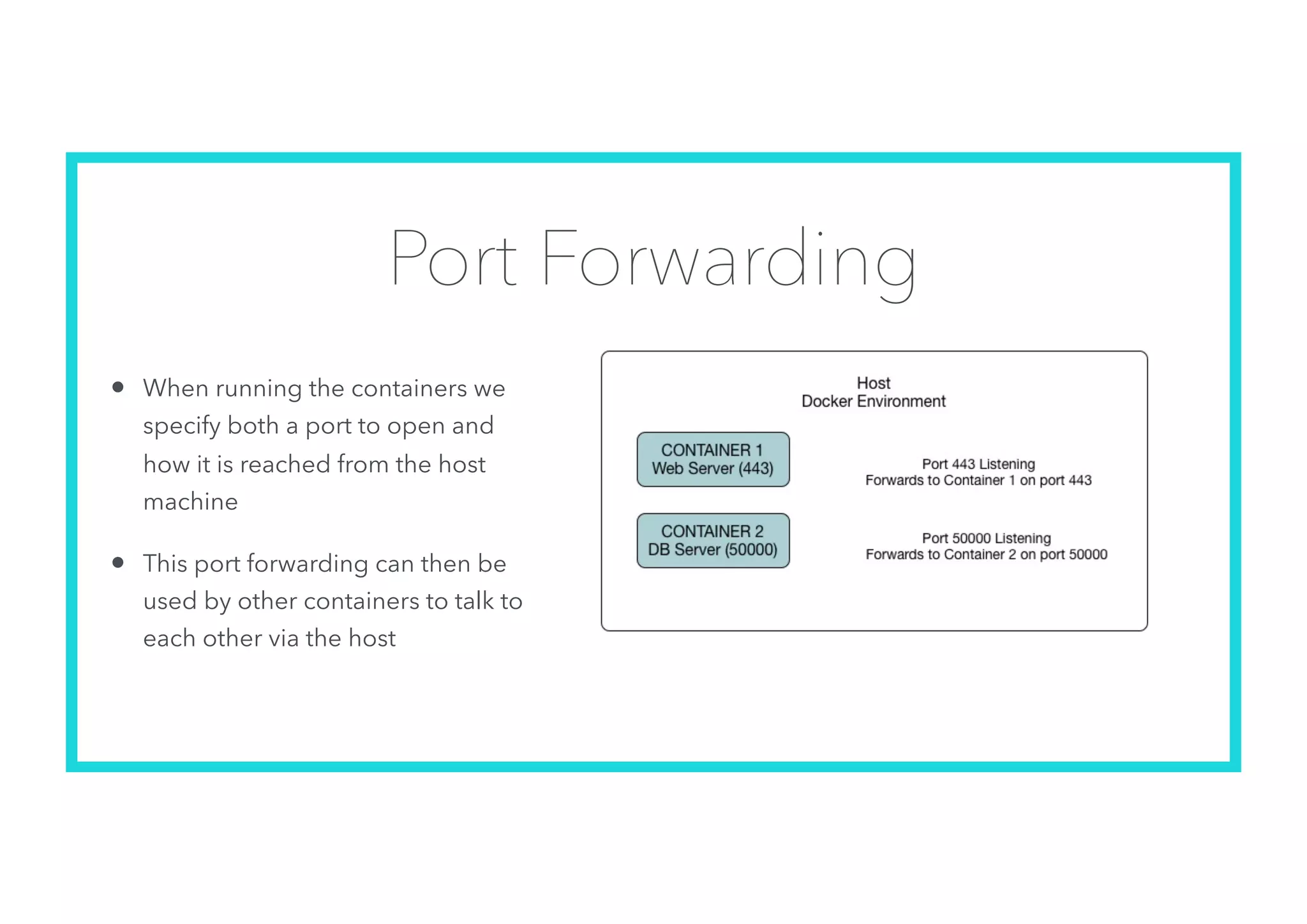

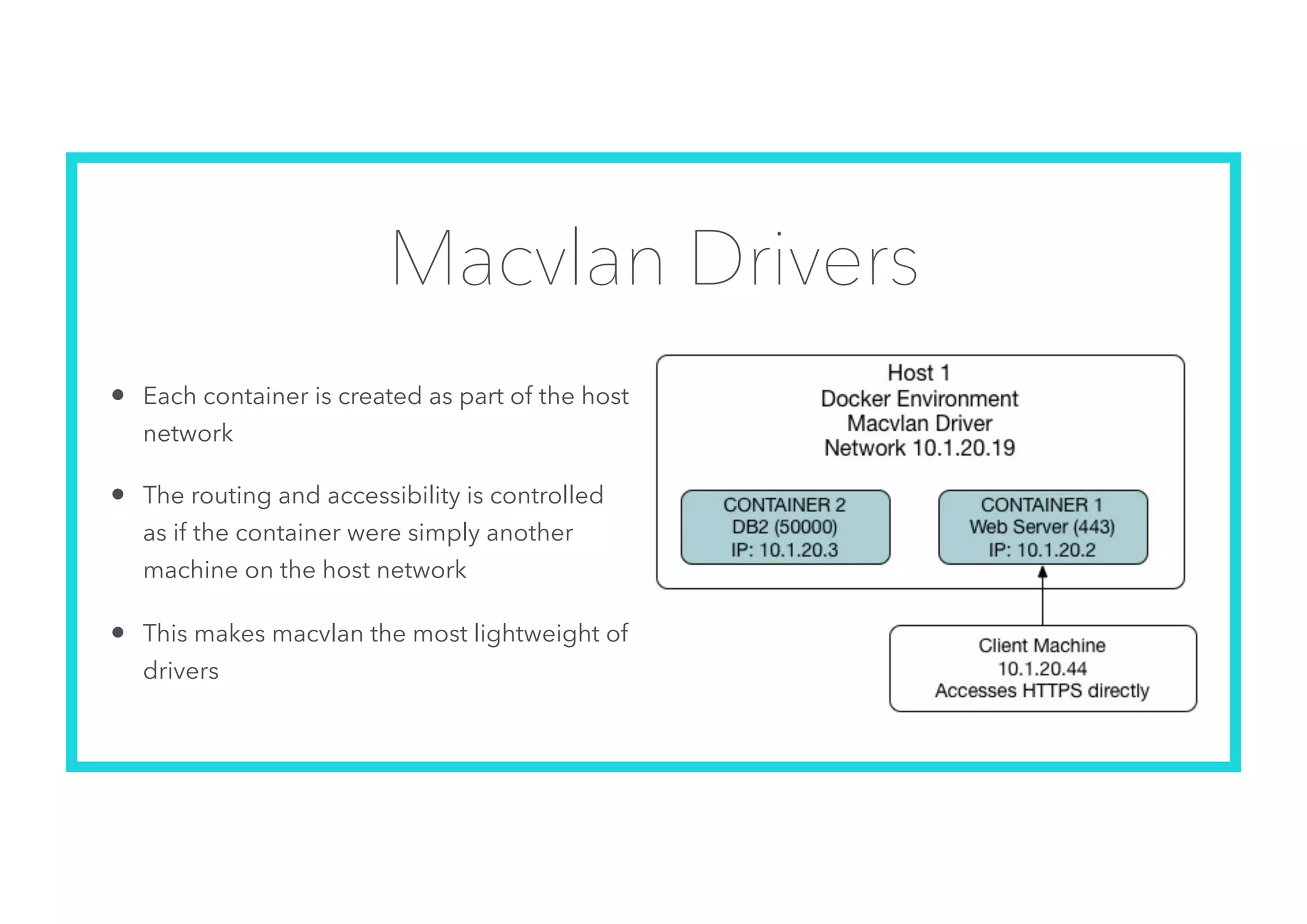

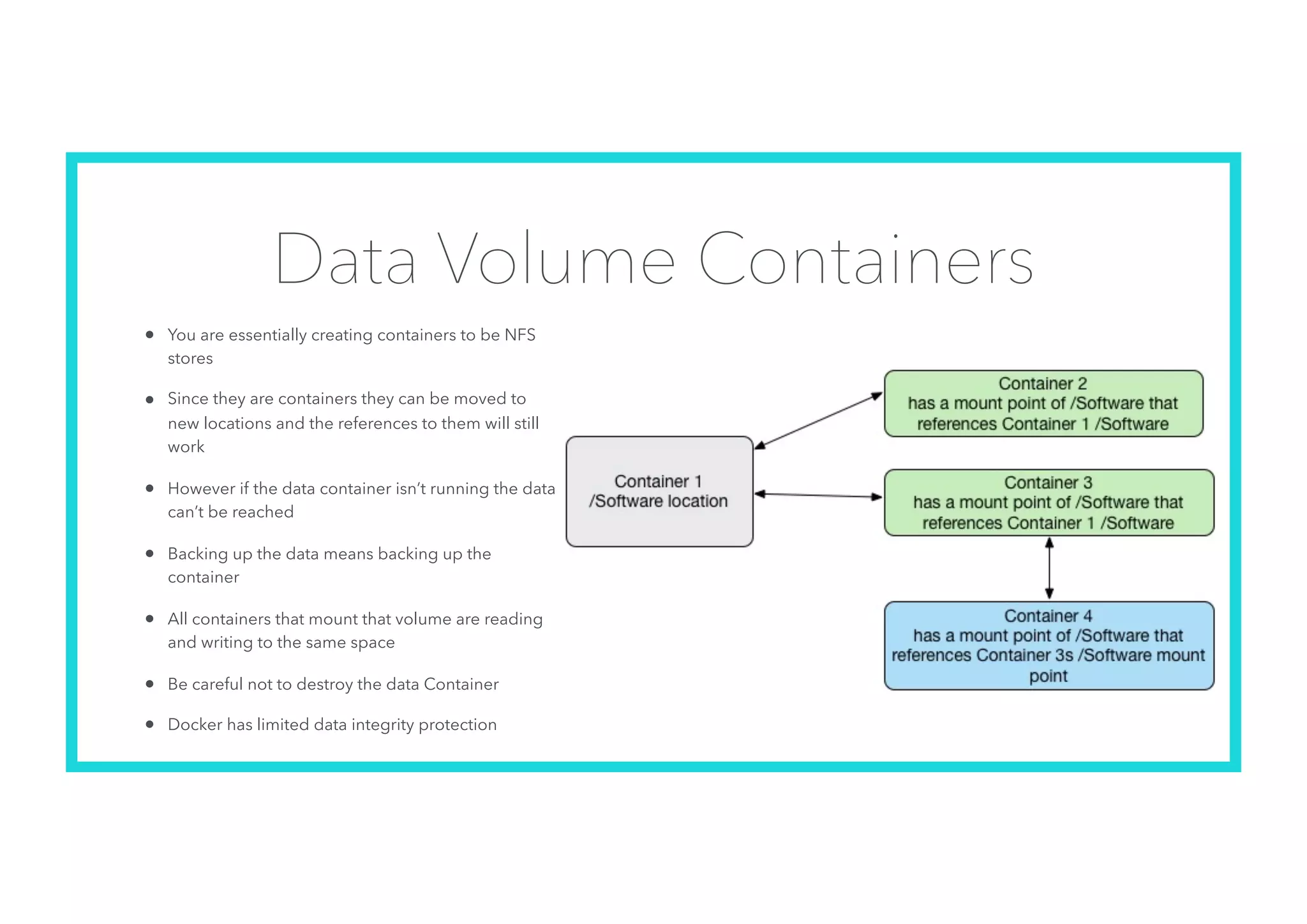

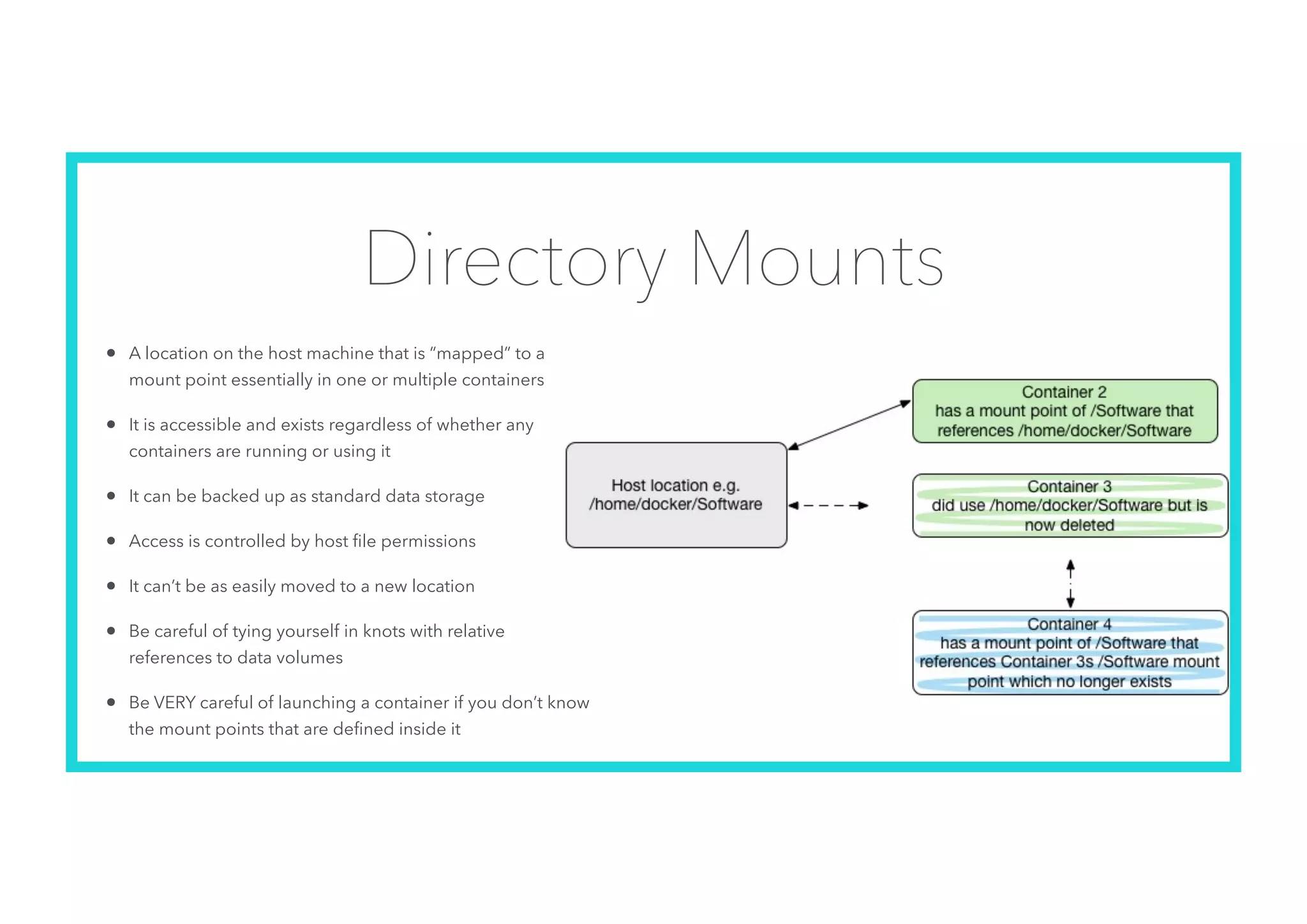

This document introduces Docker and containerization and explains why they matter in modern production architecture and DevOps practice. It contrasts traditional virtual machines with containers, highlighting containers' efficiency, portability, and simpler updates when applications are split into microservices. It also covers Docker's architecture, images, networking, storage options, and the essential commands for managing containers.
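
As a rough illustration of the container-management commands mentioned above, the following sketch builds the command lines for a typical container lifecycle (pull, run, list, inspect logs, stop, remove). It only assembles and prints the commands; it does not run Docker. The image `nginx:latest`, the container name `web`, the port mapping `8080:80`, and the helper names `docker_run` and `lifecycle` are assumptions chosen for the example and do not come from the slides.

```python
import shlex


def docker_run(image, name=None, ports=None, volumes=None, detach=True):
    """Build the argument list for `docker run` without executing it."""
    argv = ["docker", "run"]
    if detach:
        argv.append("-d")  # run the container in the background
    if name:
        argv += ["--name", name]  # give the container a readable name
    for host_port, container_port in (ports or {}).items():
        argv += ["-p", f"{host_port}:{container_port}"]  # publish a port
    for source, target in (volumes or {}).items():
        argv += ["-v", f"{source}:{target}"]  # mount a volume or host path
    argv.append(image)
    return argv


def lifecycle(image, name):
    """Return the commands for one hypothetical container lifecycle, in order."""
    return [
        ["docker", "pull", image],                       # download the image
        docker_run(image, name=name, ports={8080: 80}),  # start a container
        ["docker", "ps"],                                # list running containers
        ["docker", "logs", name],                        # show container output
        ["docker", "stop", name],                        # stop the container
        ["docker", "rm", name],                          # remove the stopped container
    ]


if __name__ == "__main__":
    # Example values only; substitute your own image and container name.
    for argv in lifecycle("nginx:latest", "web"):
        print(shlex.join(argv))
```

Keeping each command as a list of arguments rather than a single string means it could later be passed to `subprocess.run` without shell quoting issues, should you want to execute the commands on a machine with Docker installed.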