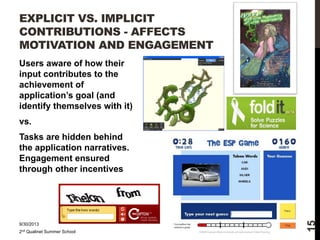

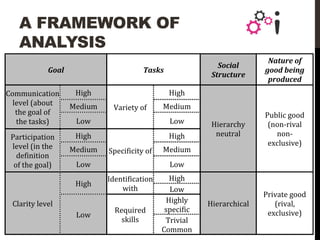

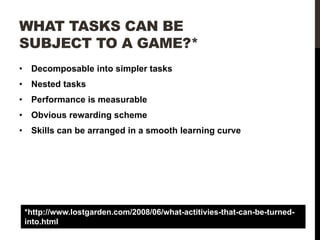

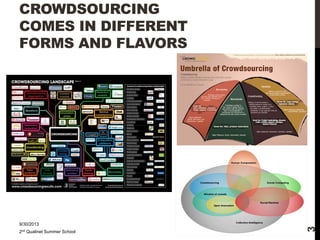

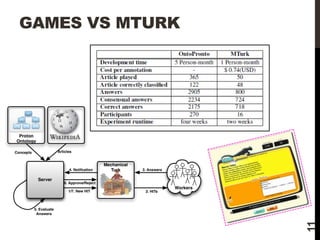

This document discusses crowdsourcing and incentives-driven technology design. It defines crowdsourcing as outsourcing a function once performed by employees to a large, undefined network of people through an open call. Crowdsourcing takes several forms, including human computation, volunteer projects, and challenges. Its success depends on understanding how participants behave and on aligning incentives with their motivations, which may be intrinsic or extrinsic. Games are discussed as a form of crowdsourcing that frames tasks as gameplay. Designing games with a purpose requires decomposing tasks, measuring performance, and configuring rewards and challenges.

![CROWDSOURCING: PROBLEM SOLVING VIA OPEN CALLS. “Simply defined, crowdsourcing represents the act of a company or institution taking a function once performed by employees and outsourcing it to an undefined (and generally large) network of people in the form of an open call. This can take the form of peer-production (when the job is performed collaboratively), but is also often undertaken by sole individuals. The crucial prerequisite is the use of the open call format and the large network of potential laborers.” [Howe, 2006] 2nd Qualinet Summer School, 9/30/2013](https://image.slidesharecdn.com/qualinetsummerschool2013final-131119075650-phpapp02/85/Incentives-driven-technology-design-2-320.jpg)

![INTRINSIC/EXTRINSIC MOTIVATIONS ARE WELL-STUDIED IN THE LITERATURE [Kaufman, Schulze, Veit]](https://image.slidesharecdn.com/qualinetsummerschool2013final-131119075650-phpapp02/85/Incentives-driven-technology-design-13-320.jpg)