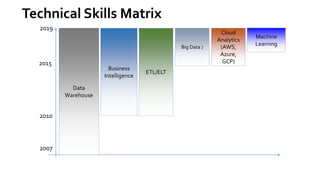

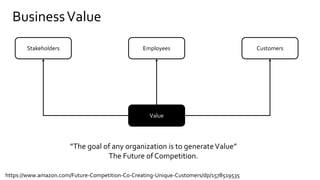

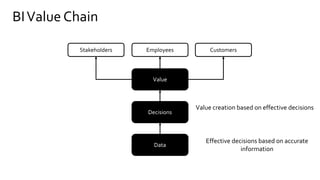

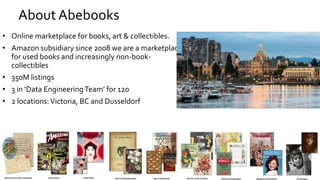

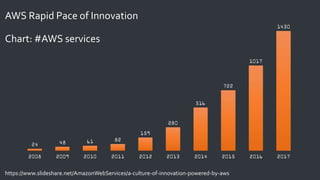

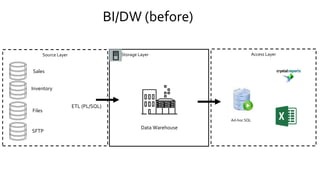

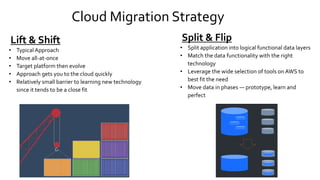

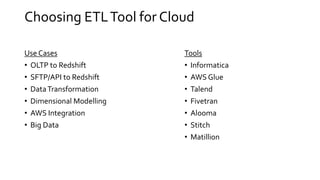

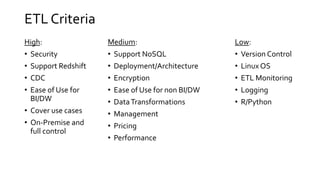

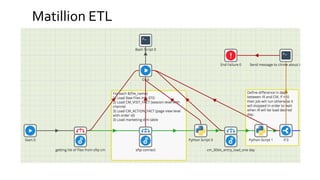

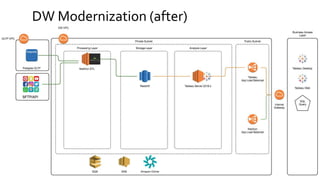

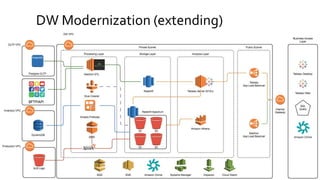

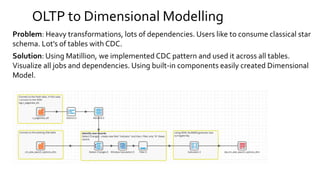

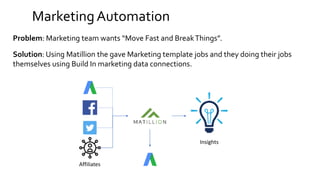

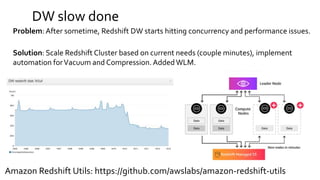

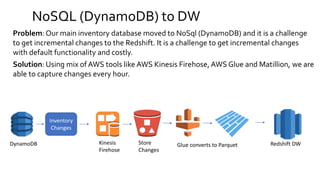

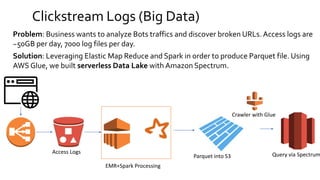

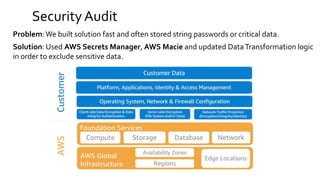

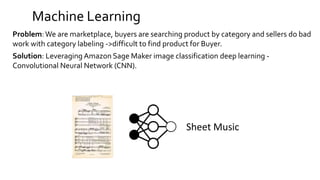

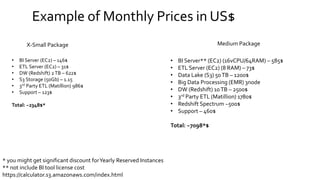

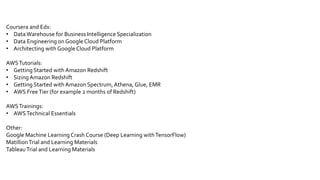

This document outlines a presentation on analytics solutions powered by AWS. It introduces the presenter and their background in business intelligence, then covers the role of analytics, an overview of AbeBooks, innovation and data, data warehouse (DW) modernization at AbeBooks using Matillion ETL and Amazon Redshift, use cases and challenges, example pricing models, and free learning resources. The goal is to give an overview of analytics solutions on AWS, grounded in the presenter's own experience implementing them.