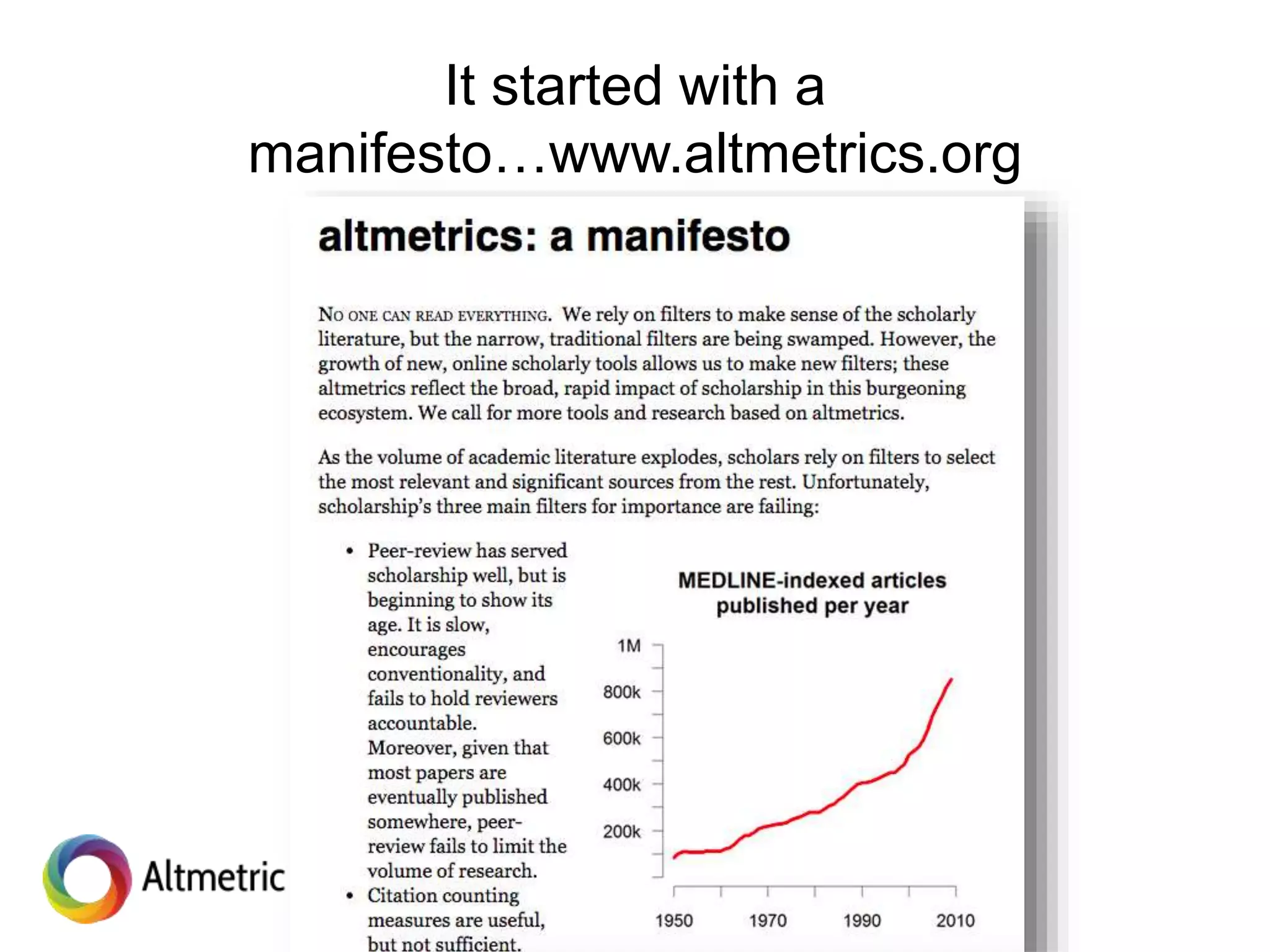

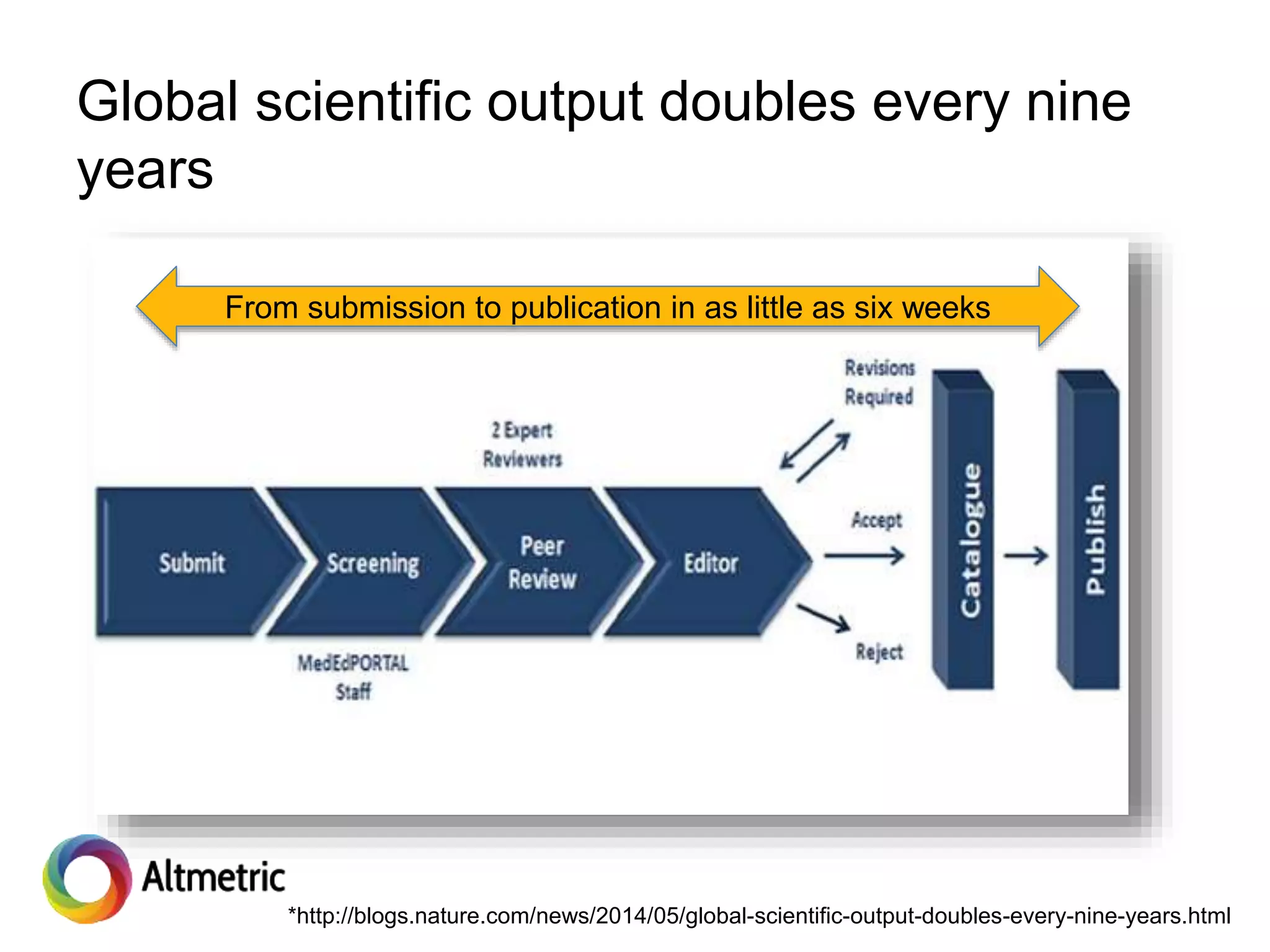

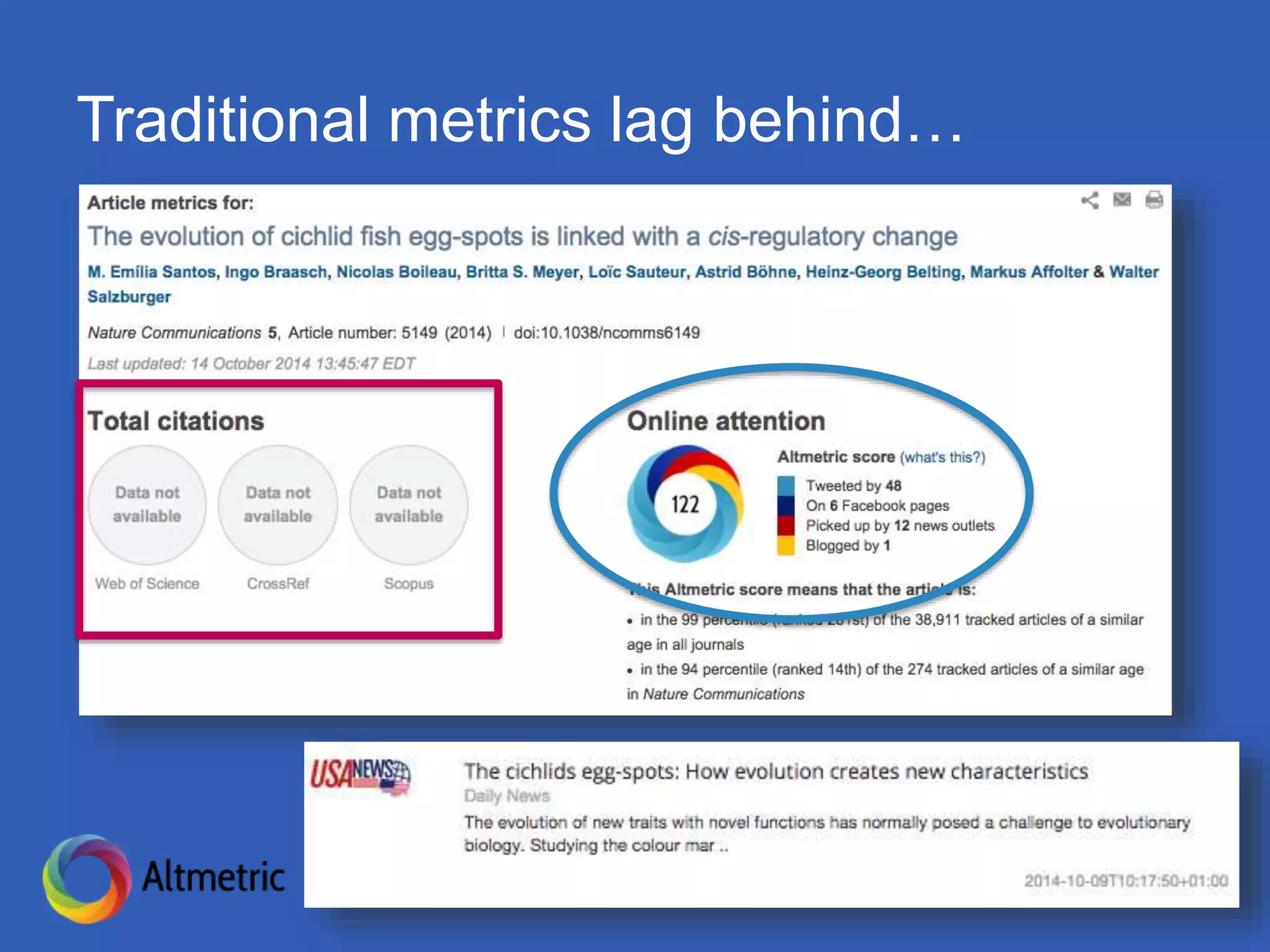

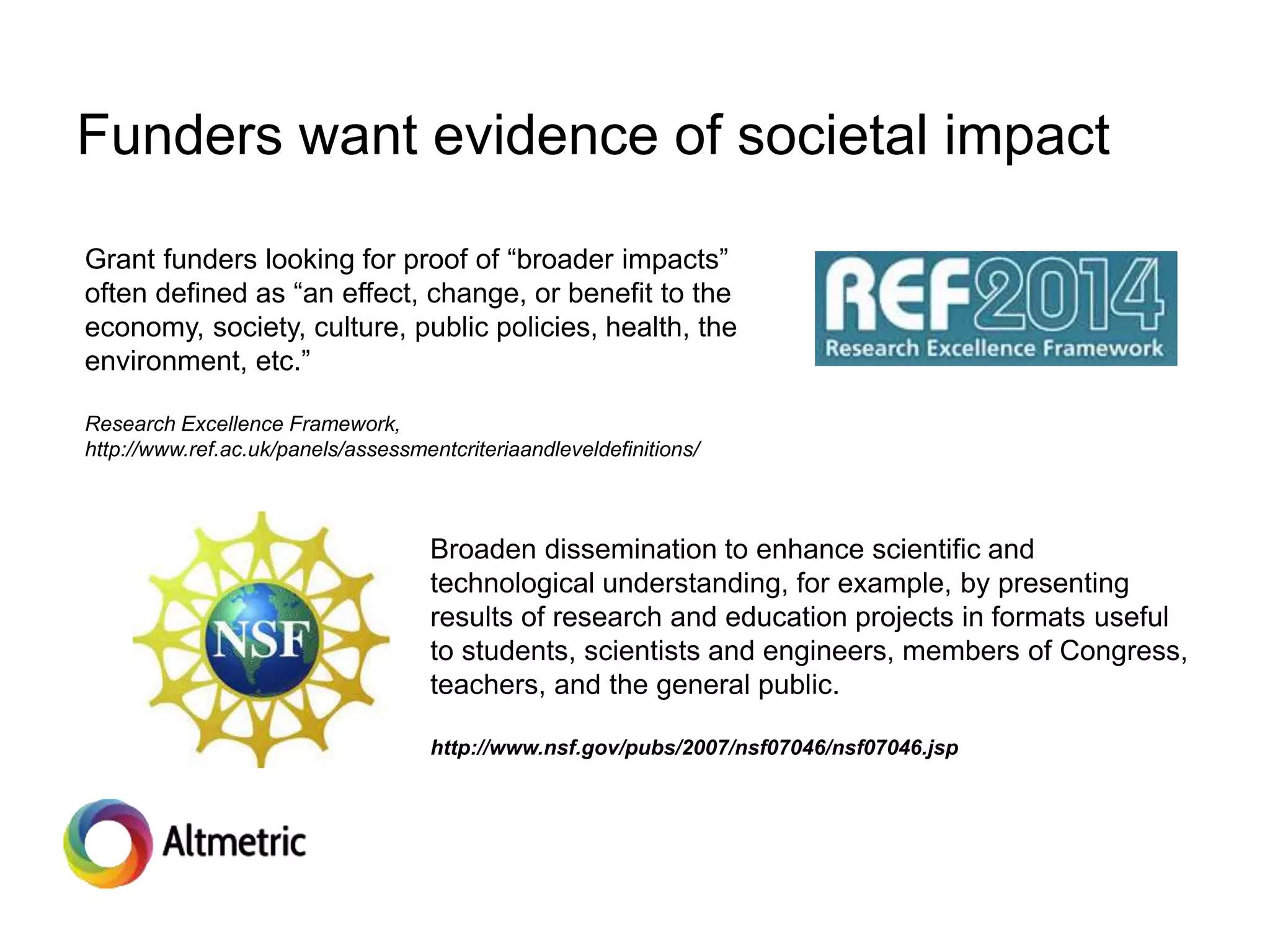

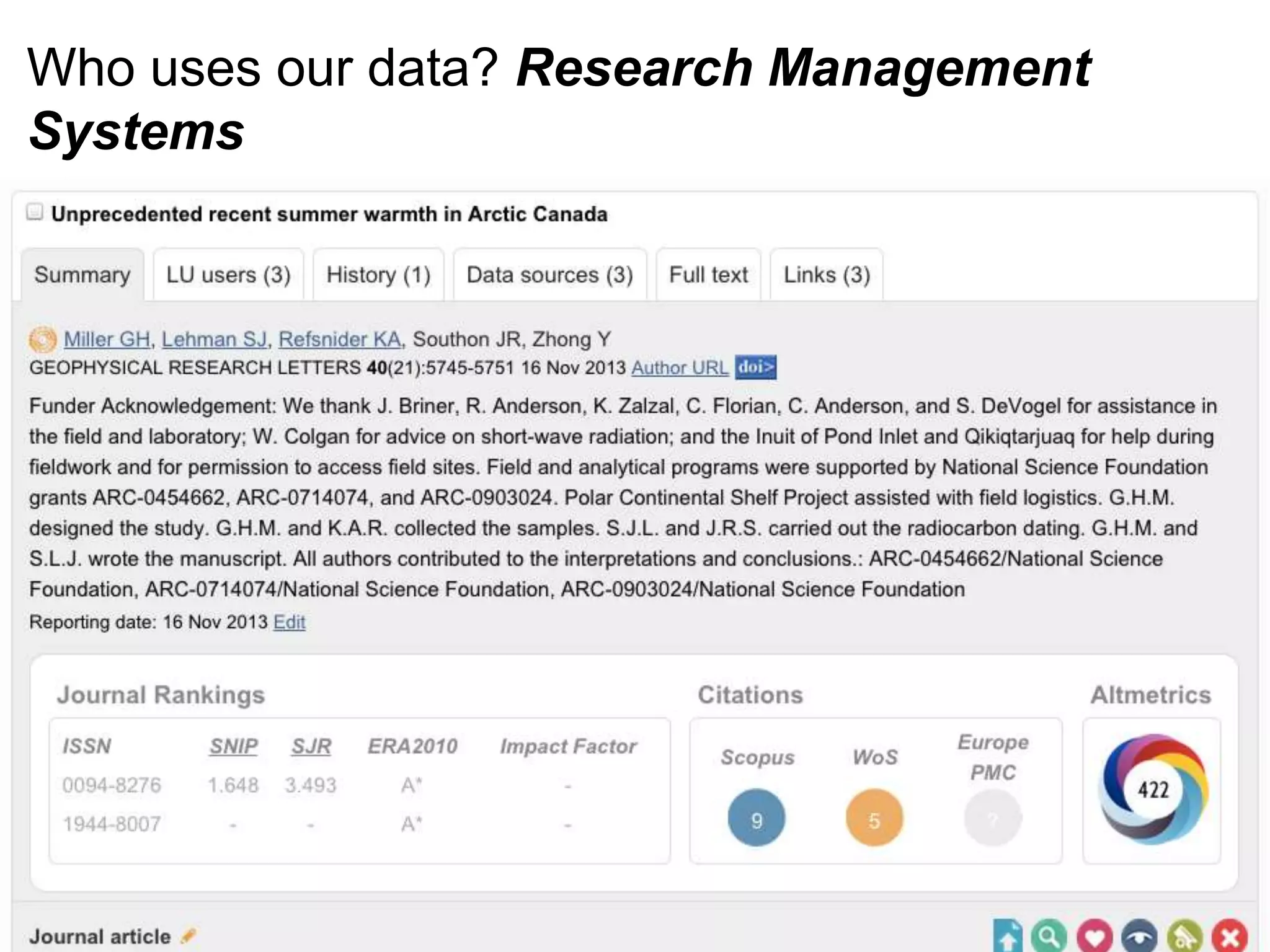

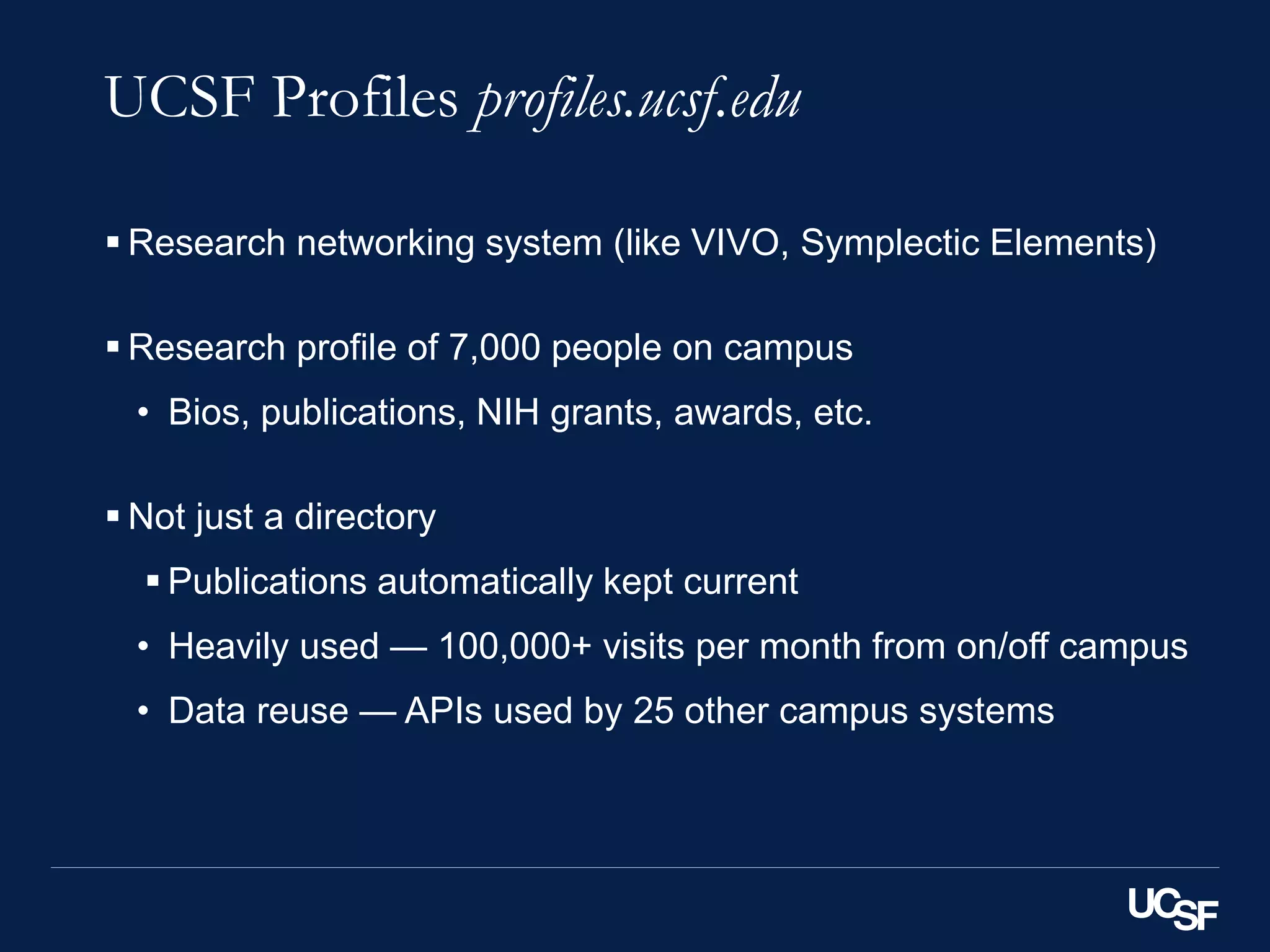

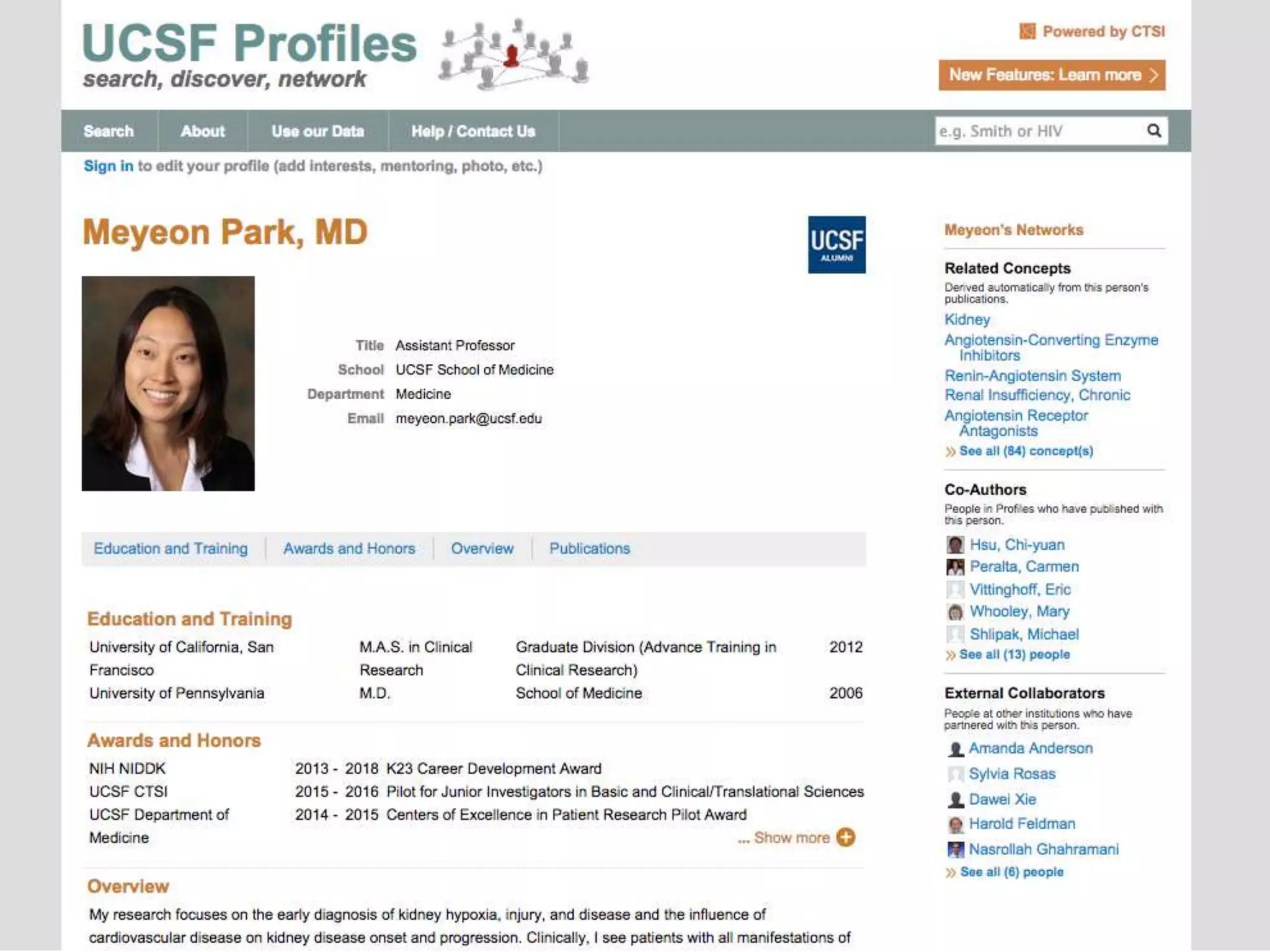

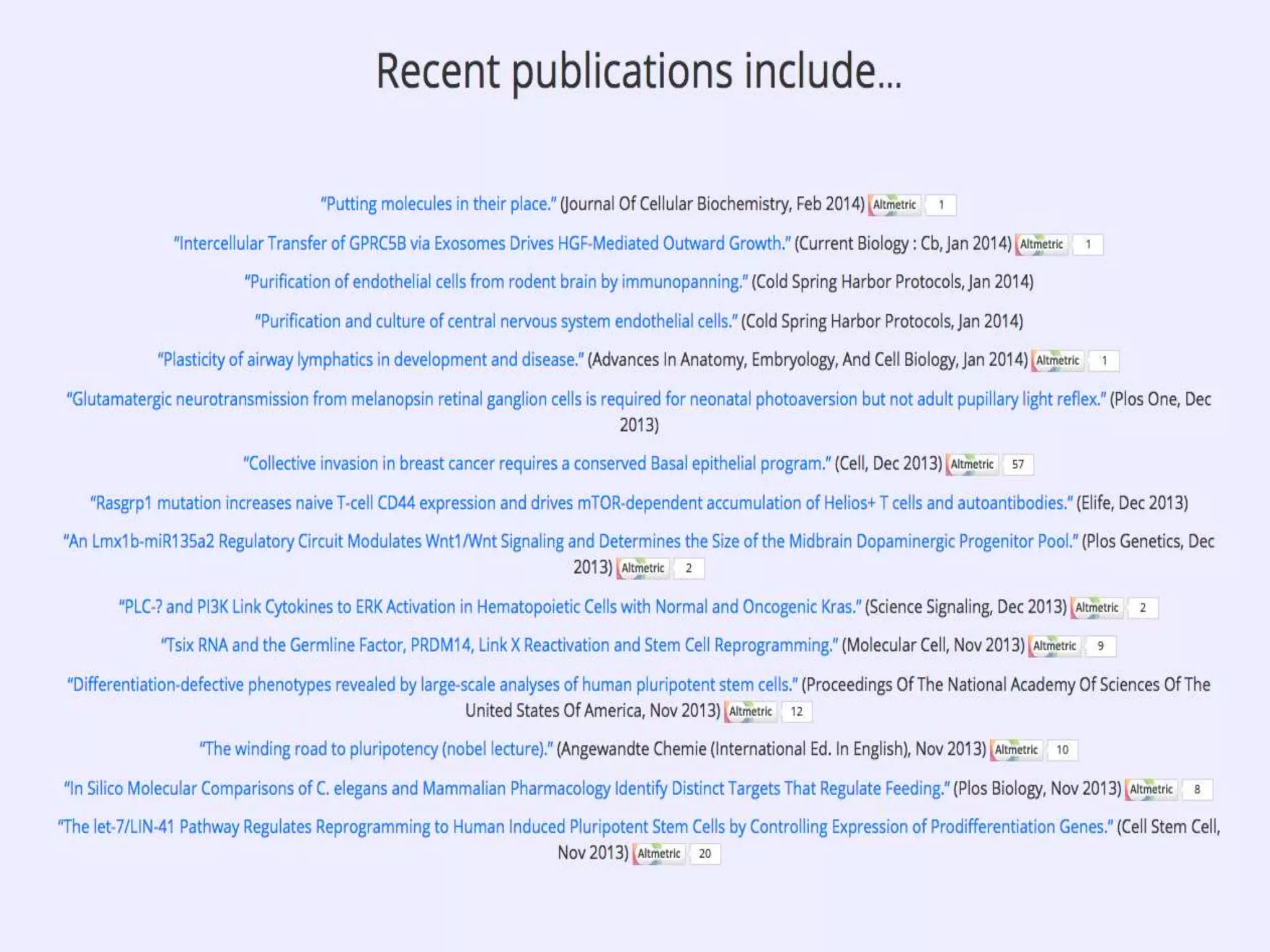

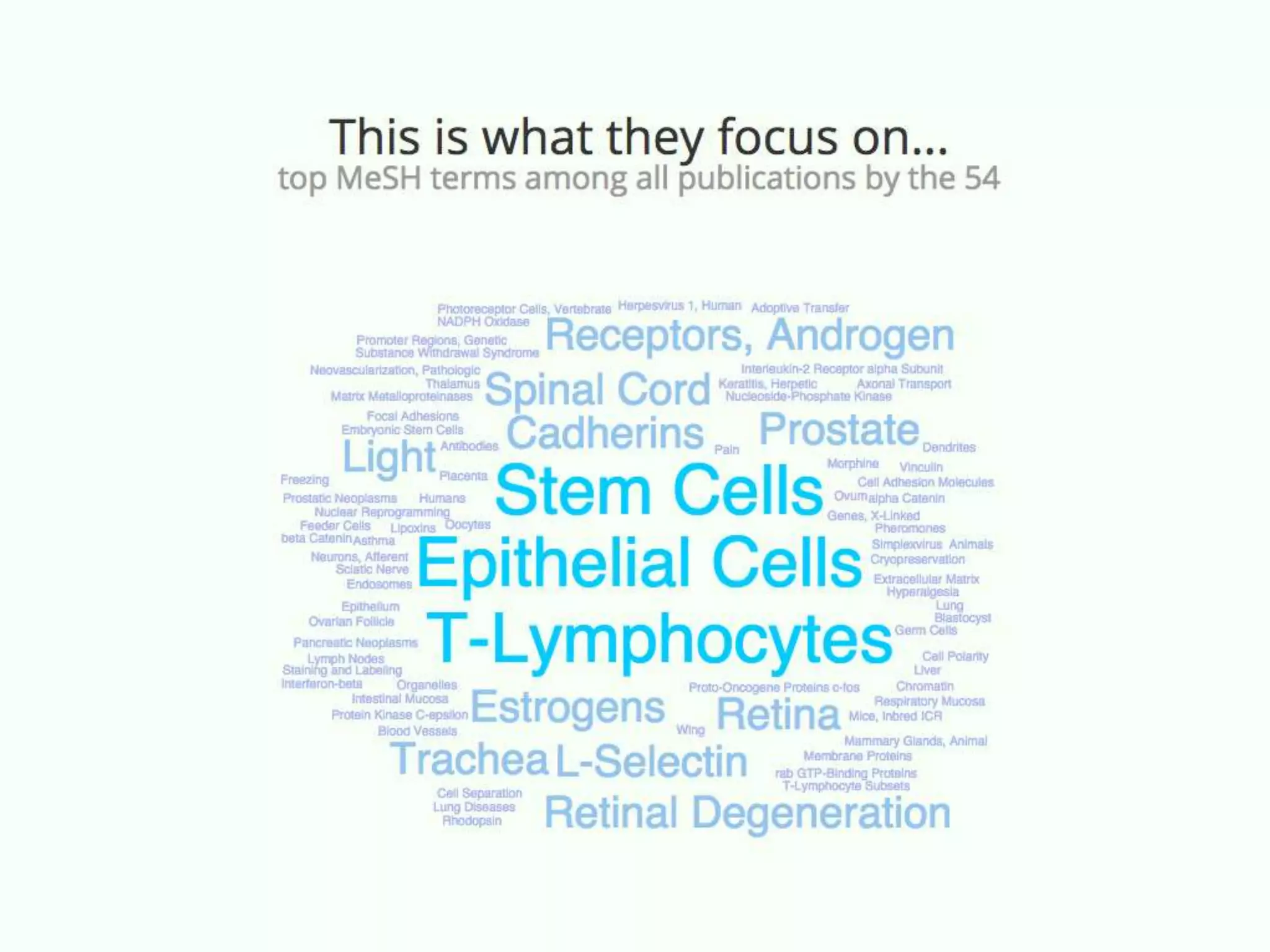

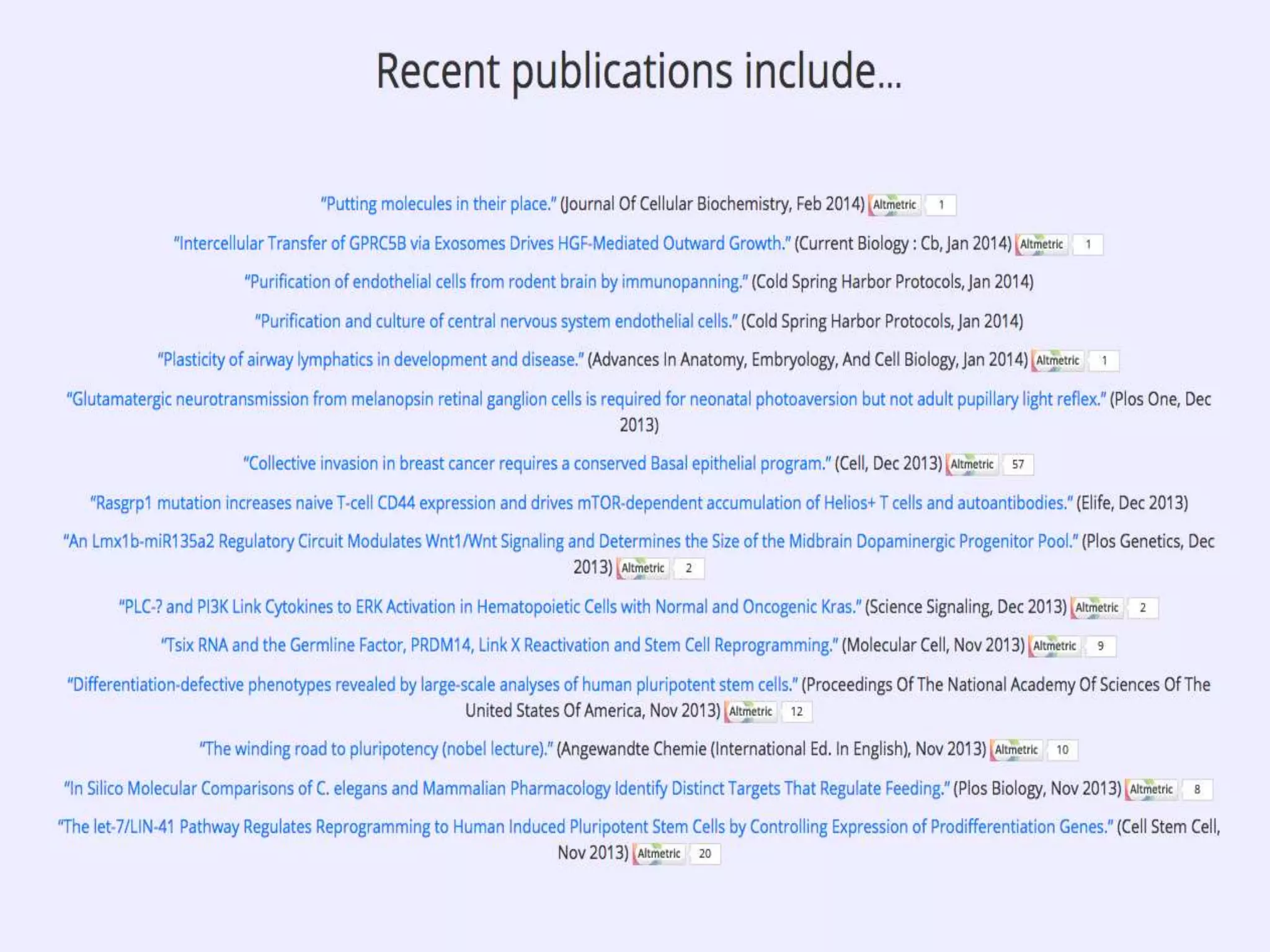

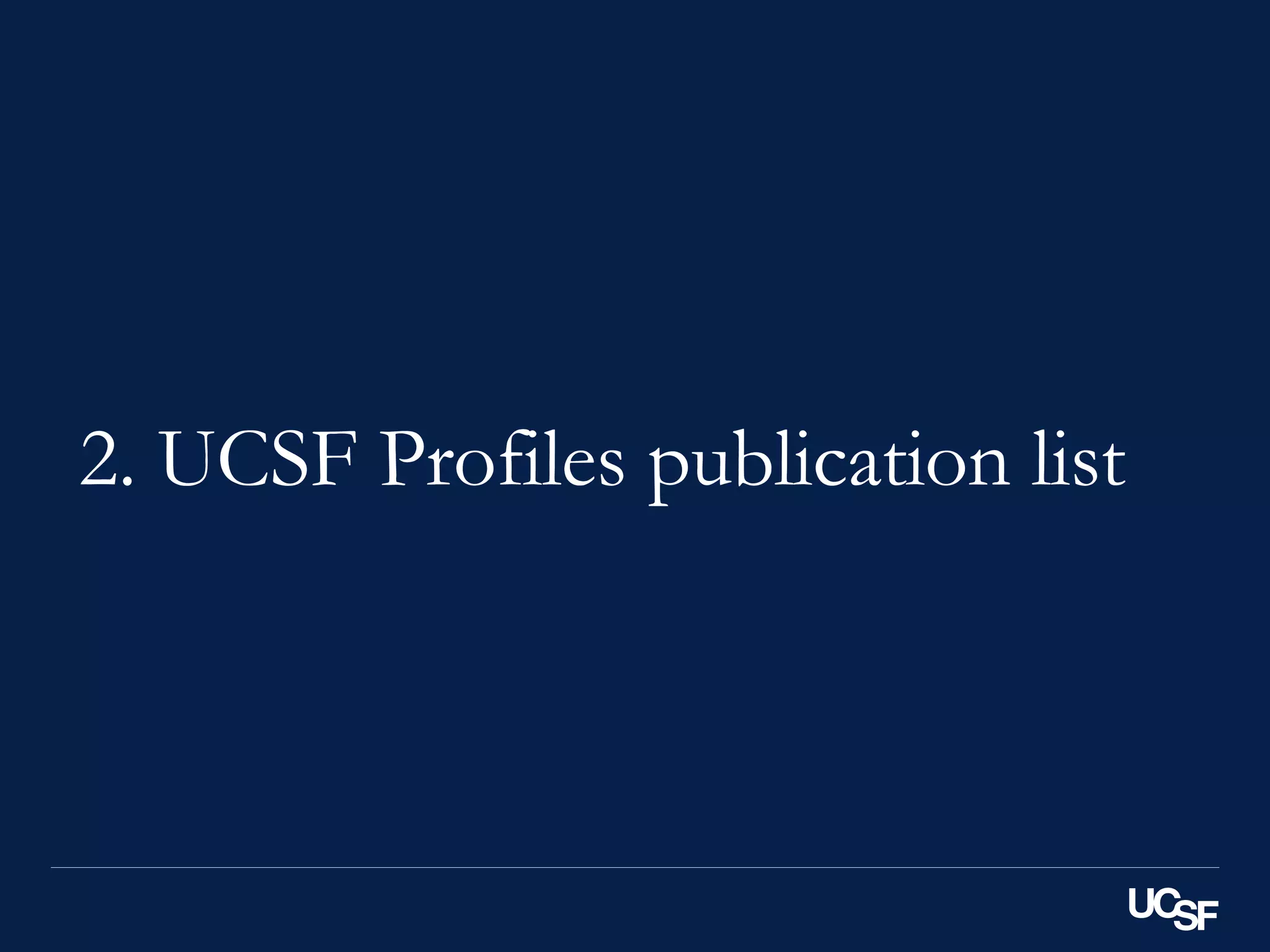

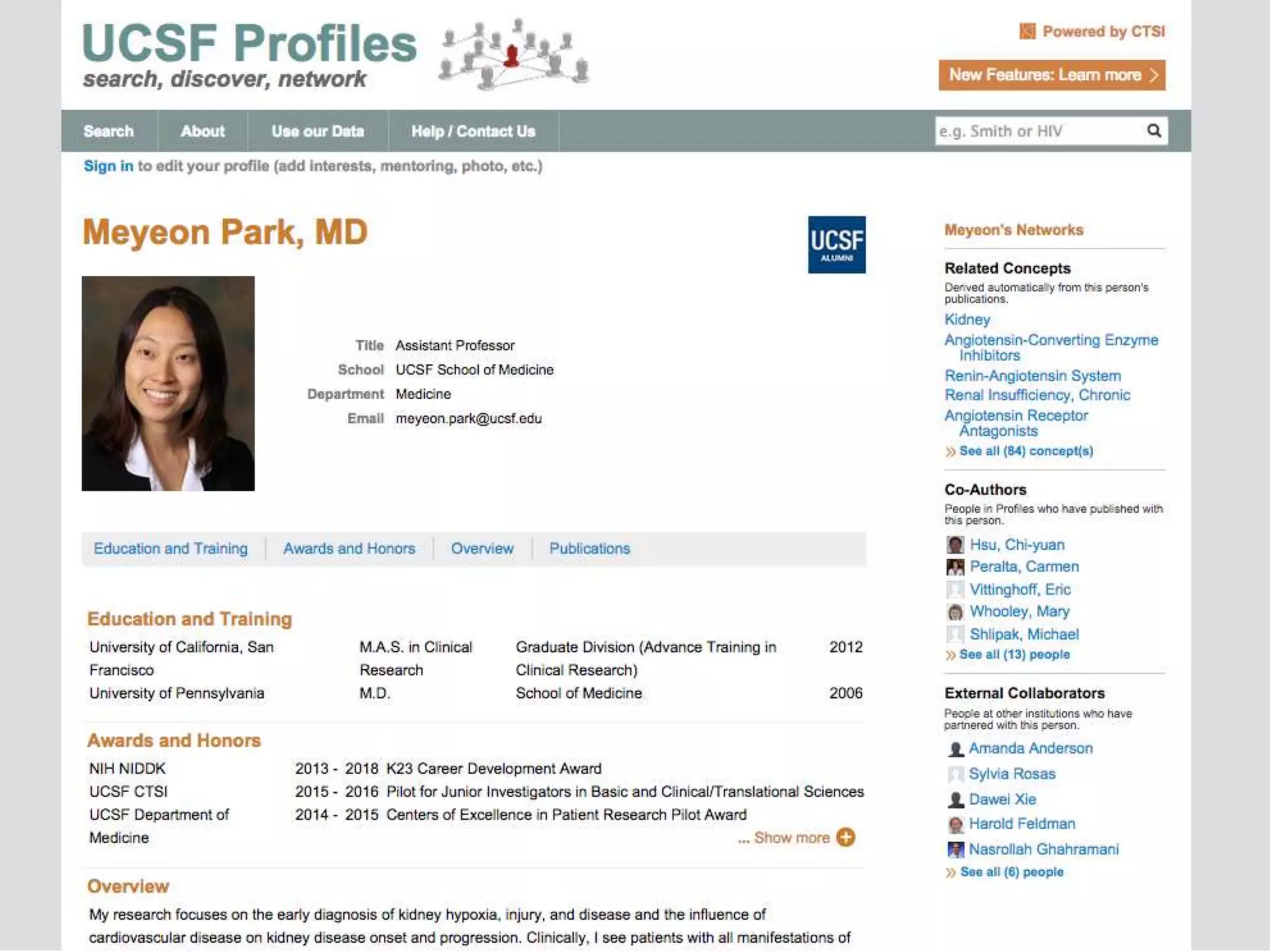

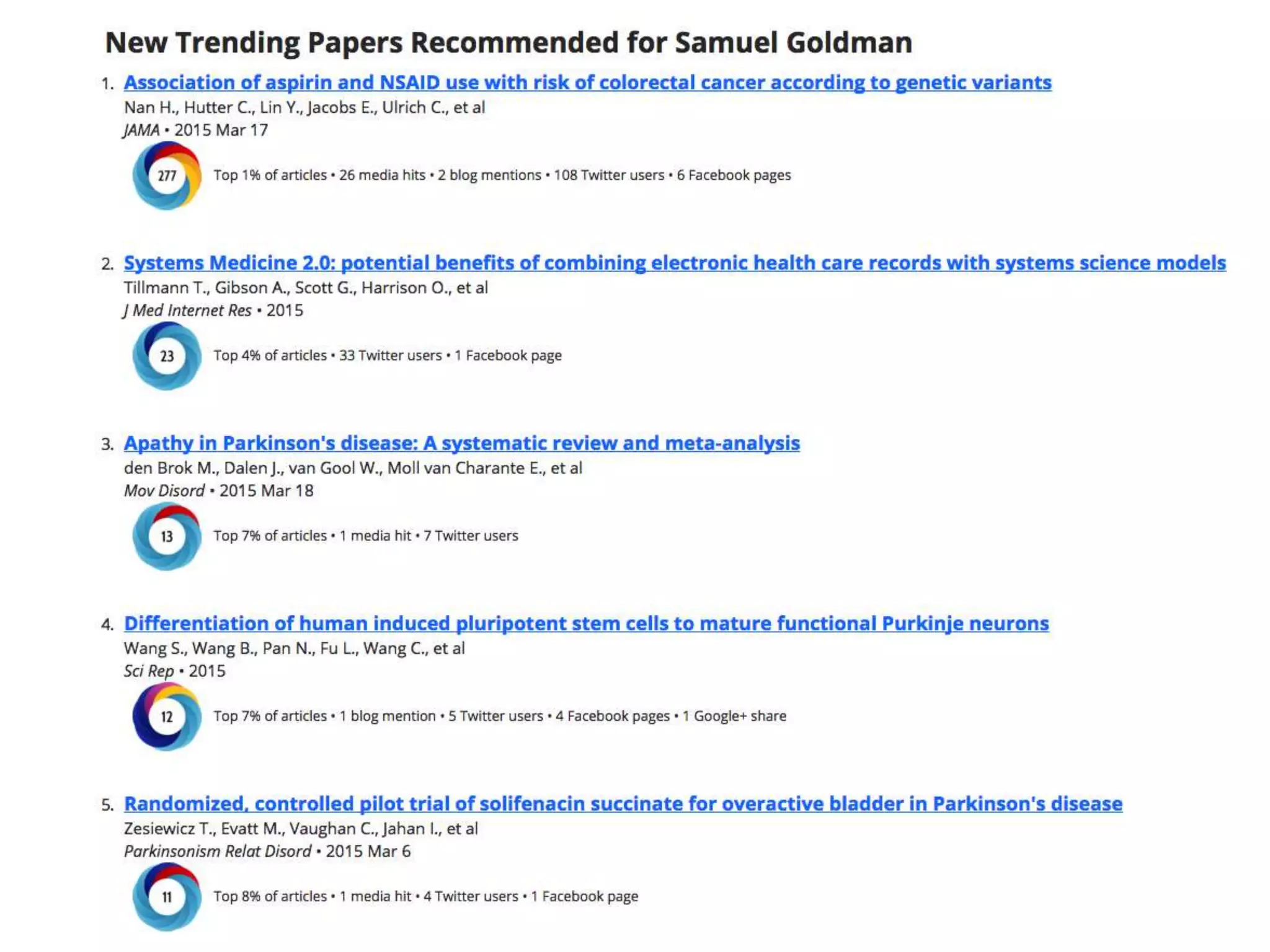

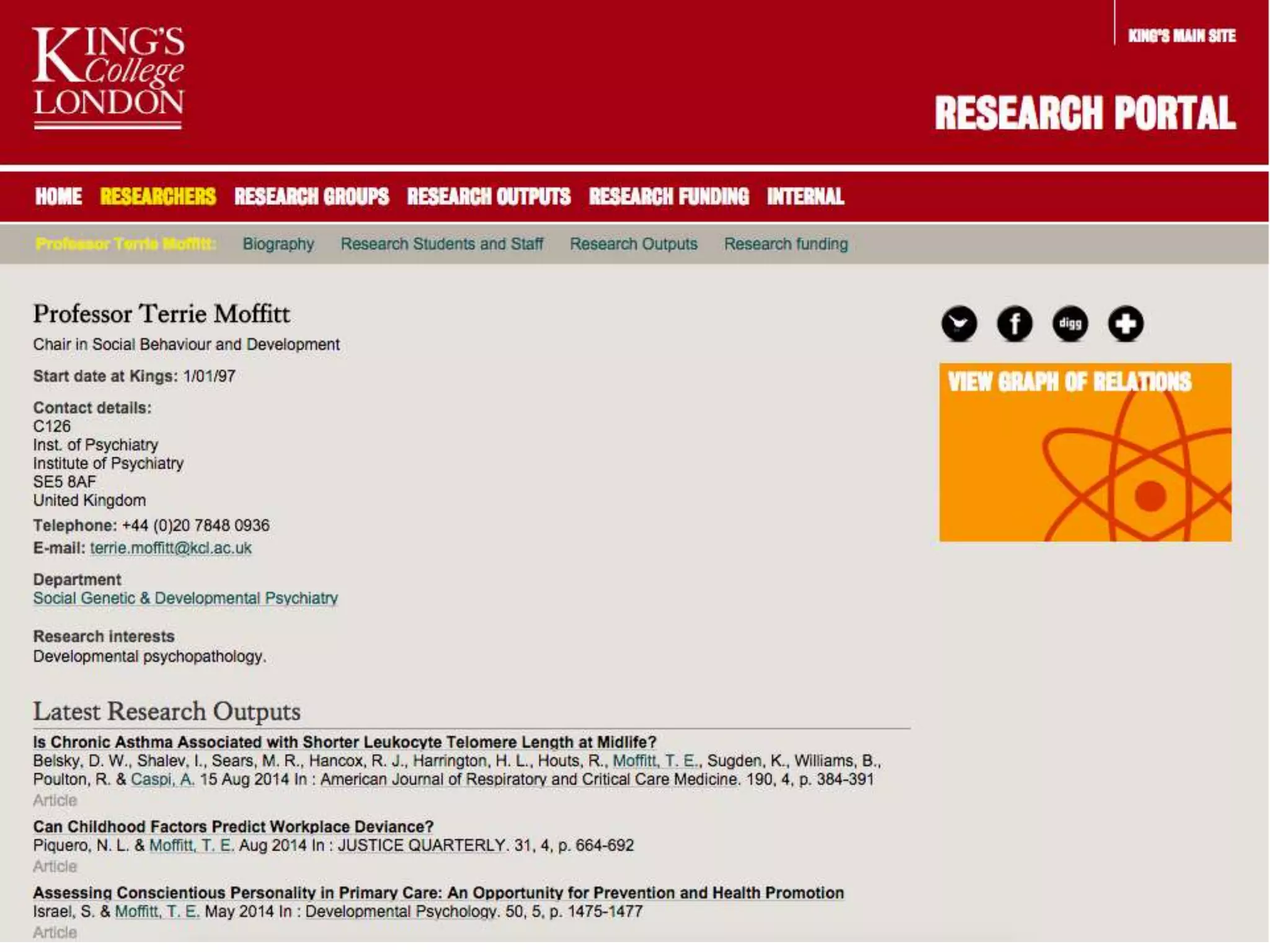

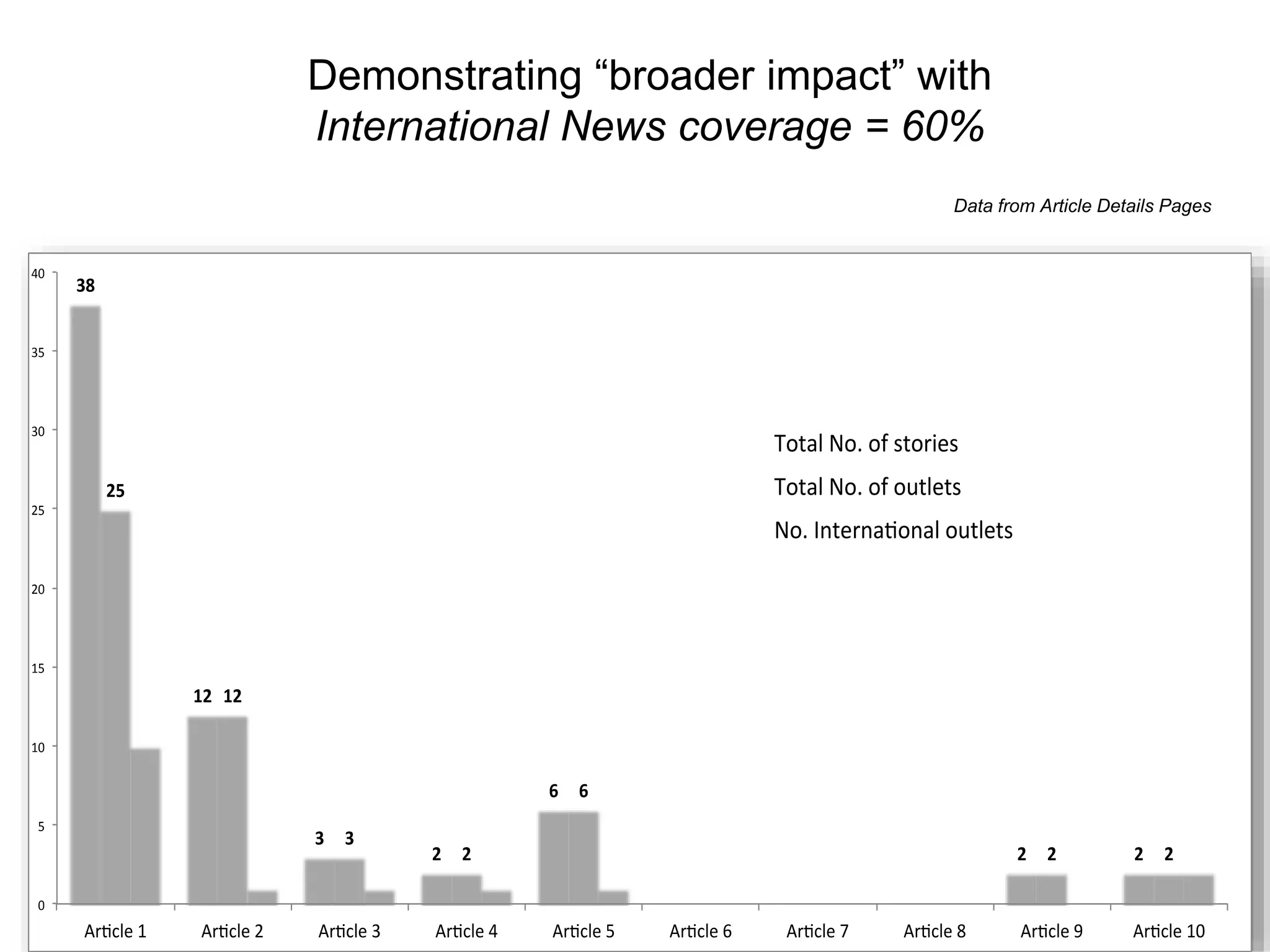

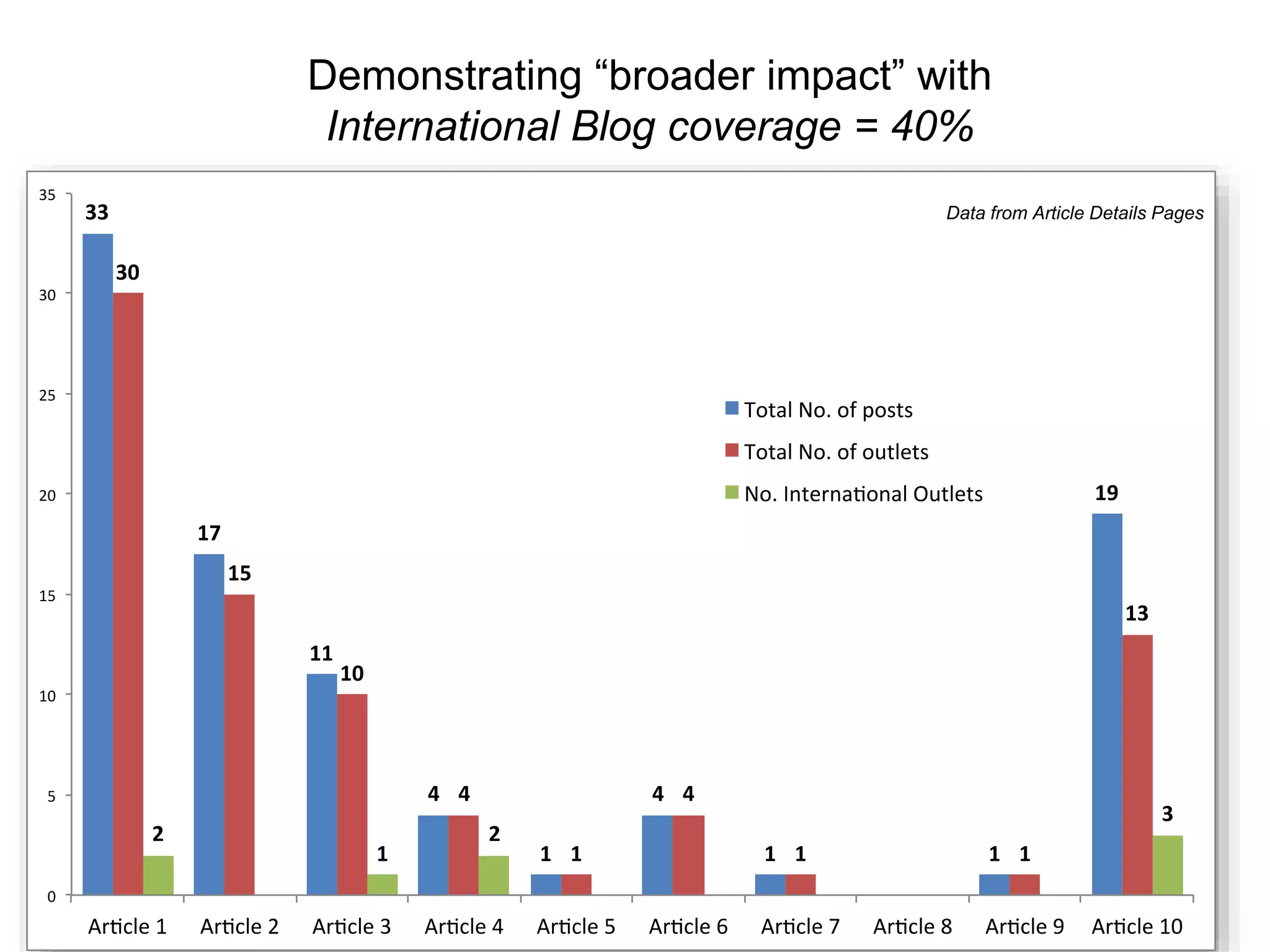

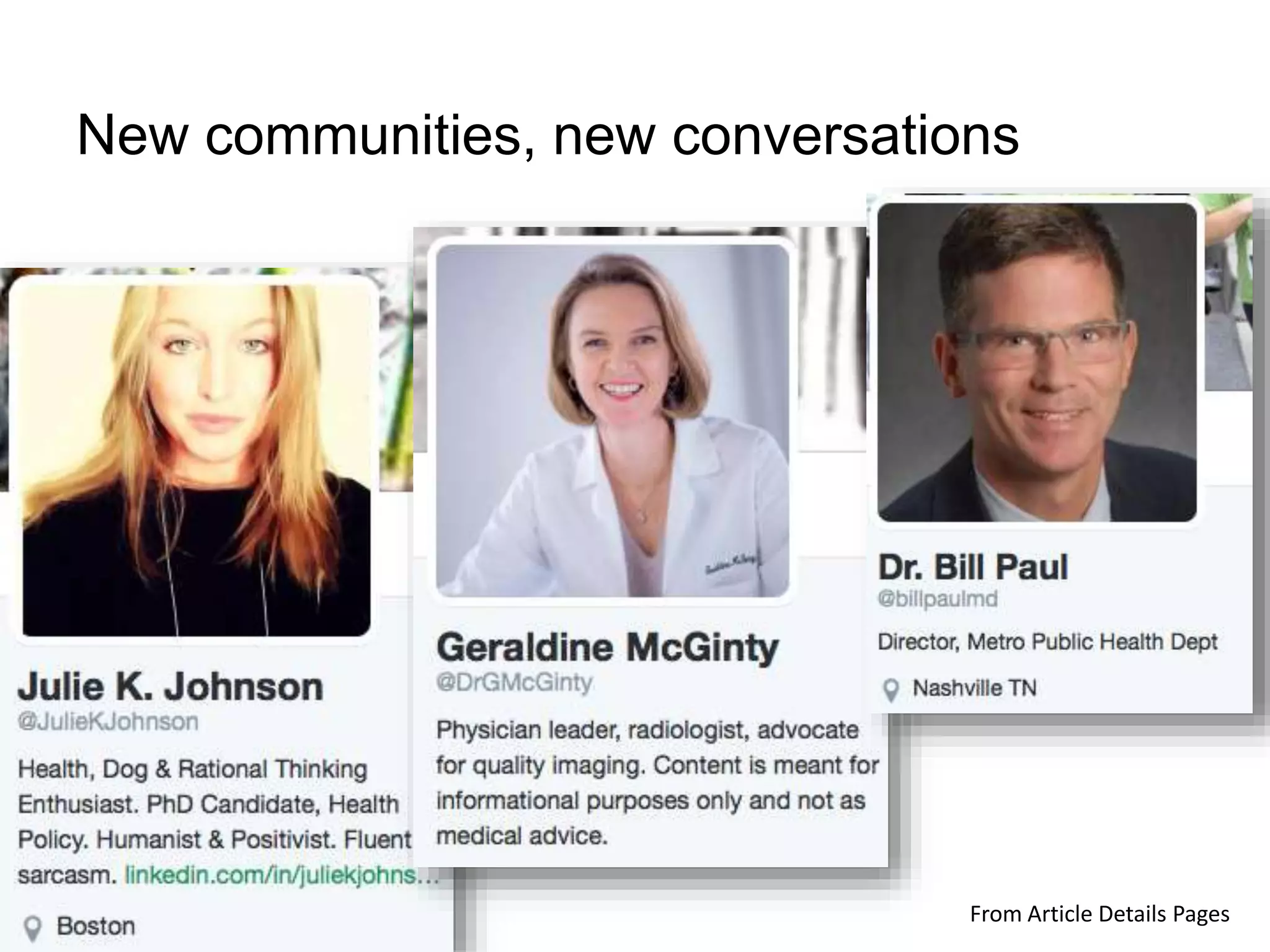

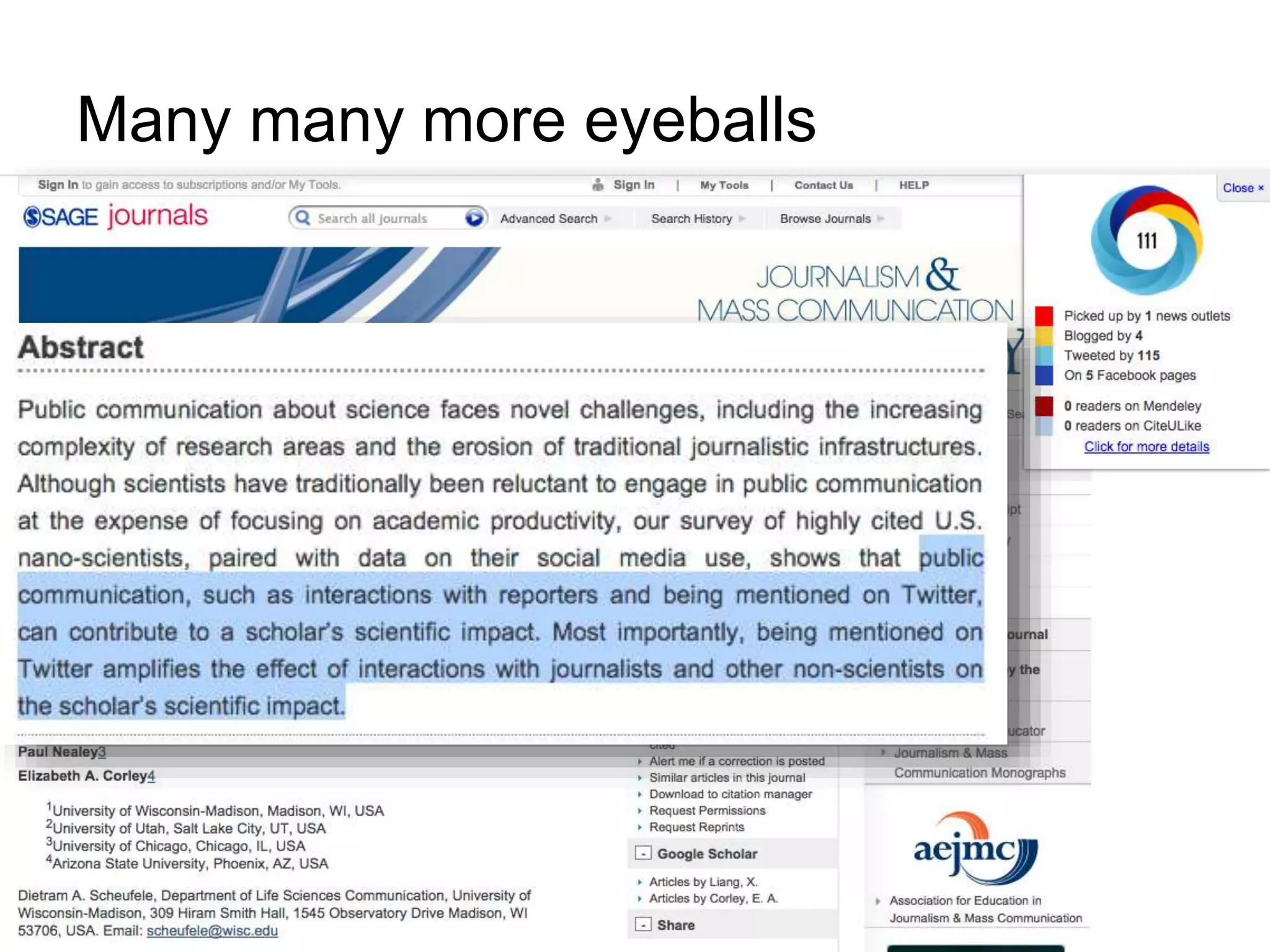

The document discusses the evolution and practical applications of altmetrics, which offer immediate measures of research impact beyond traditional metrics such as citations and journal impact factors. It emphasizes the role of these alternative metrics in meeting grant requirements and demonstrating societal impact, with examples from institutions such as UCSF and Duke University. The authors present several experiments and data integration efforts that show how altmetrics can increase the visibility of, and engagement with, research outputs.

![Where will you see our data? Author Tools

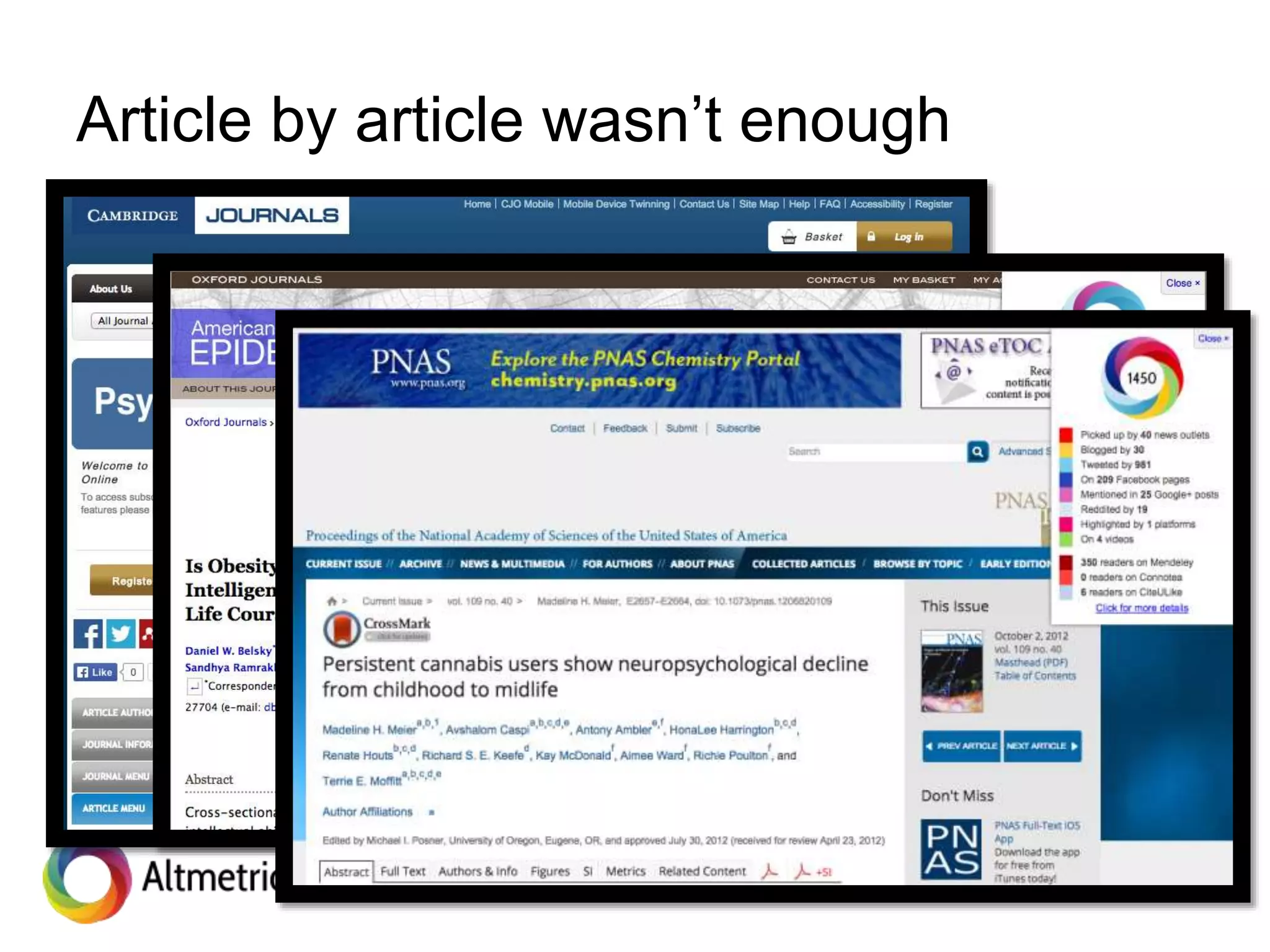

“A CV that documents alternative metrics […]

offers a much more compelling argument to a

tenure committee of their research impact

than a traditional publication list.”

- Donald Samulack, Editage](https://image.slidesharecdn.com/practicalapplicationsforaltmetricsinachangingmetricslandscape-150518093210-lva1-app6891/75/Practical-applications-for-altmetrics-in-a-changing-metrics-landscape-25-2048.jpg)

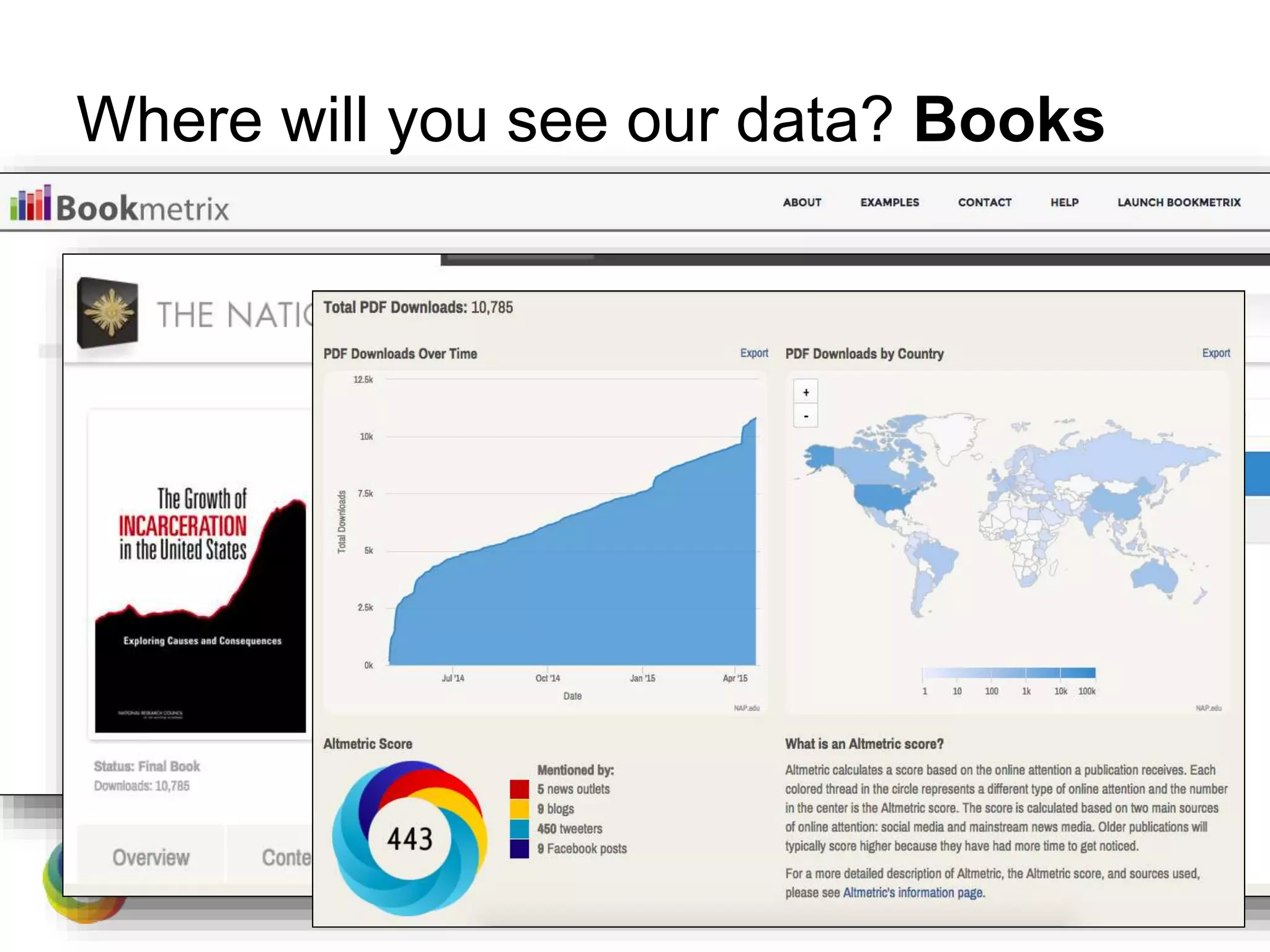

![NSF Broader Impacts Criterion: To what extent will [the research] enhance the infrastructure for research and education, such as facilities, instrumentation, networks, and partnerships? Will the results be disseminated broadly to enhance scientific and technological understanding? What may be the benefits of the proposed activity to society? http://www.nsf.gov/pubs/2007/nsf07046/nsf07046.jsp](https://image.slidesharecdn.com/practicalapplicationsforaltmetricsinachangingmetricslandscape-150518093210-lva1-app6891/75/Practical-applications-for-altmetrics-in-a-changing-metrics-landscape-61-2048.jpg)

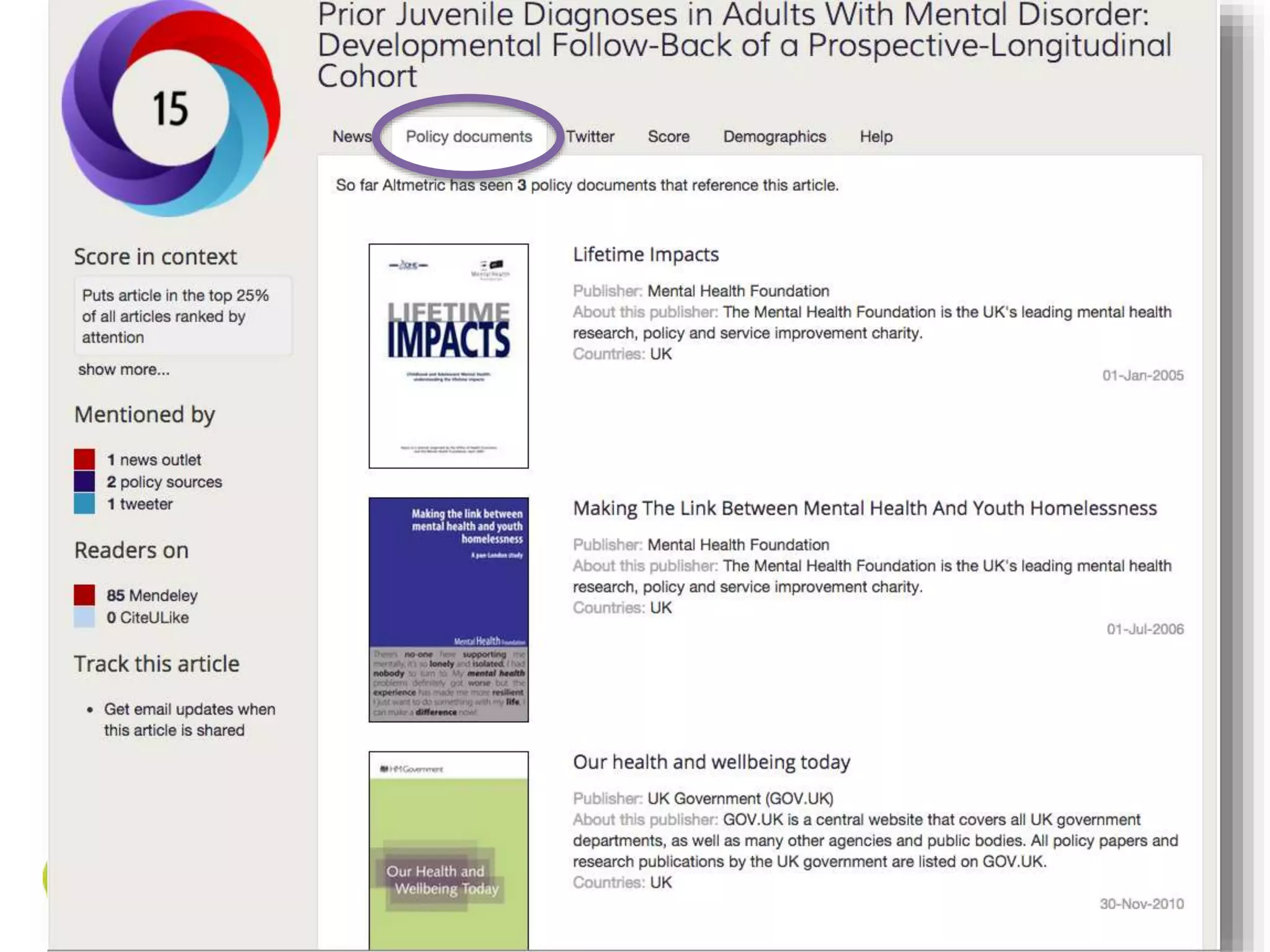

![Saved Terrie time; saved her program manager time… NIH Program Manager: “[This Altmetric data is] fantastic information for [our] budget report.”](https://image.slidesharecdn.com/practicalapplicationsforaltmetricsinachangingmetricslandscape-150518093210-lva1-app6891/75/Practical-applications-for-altmetrics-in-a-changing-metrics-landscape-71-2048.jpg)