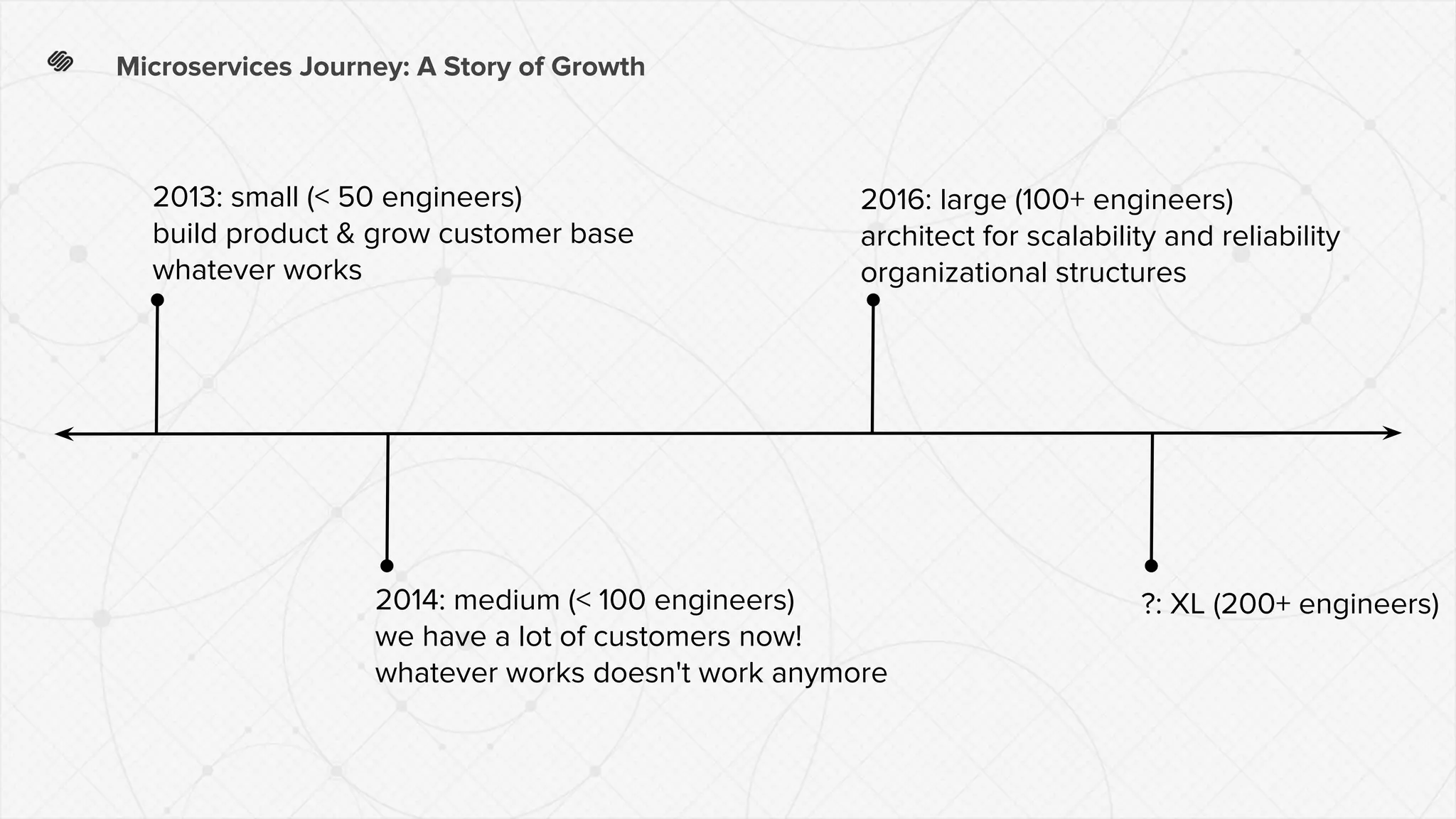

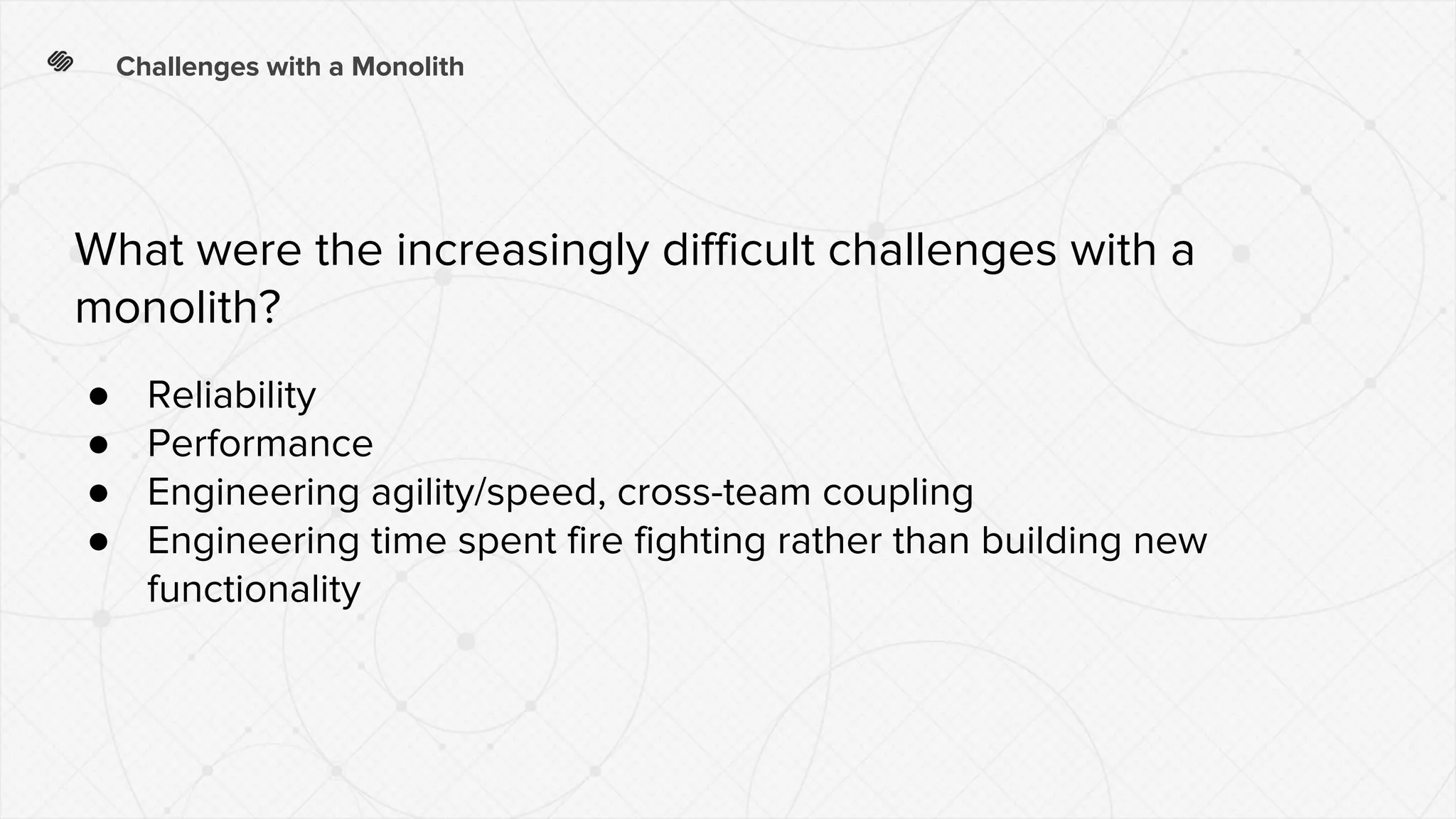

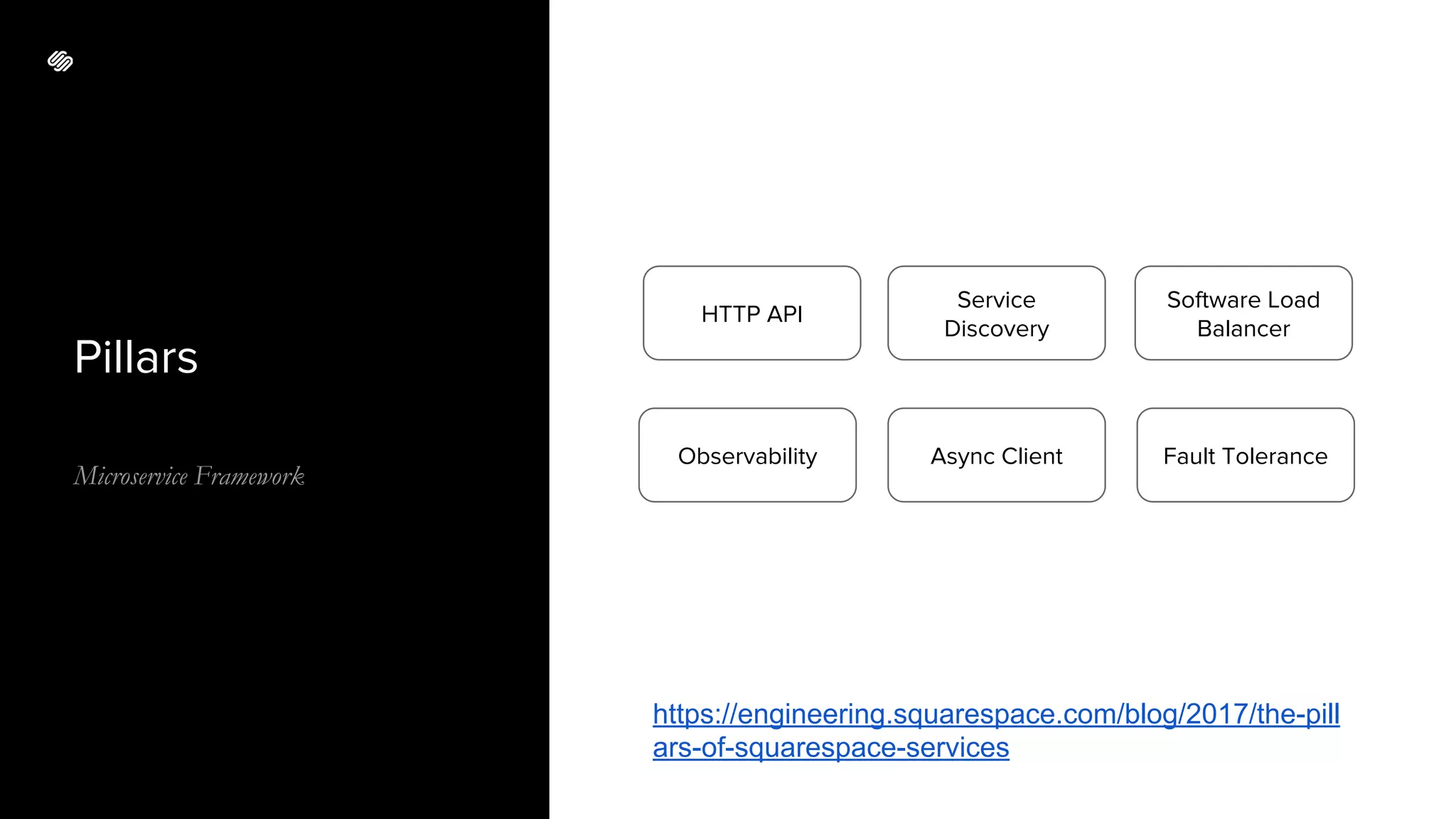

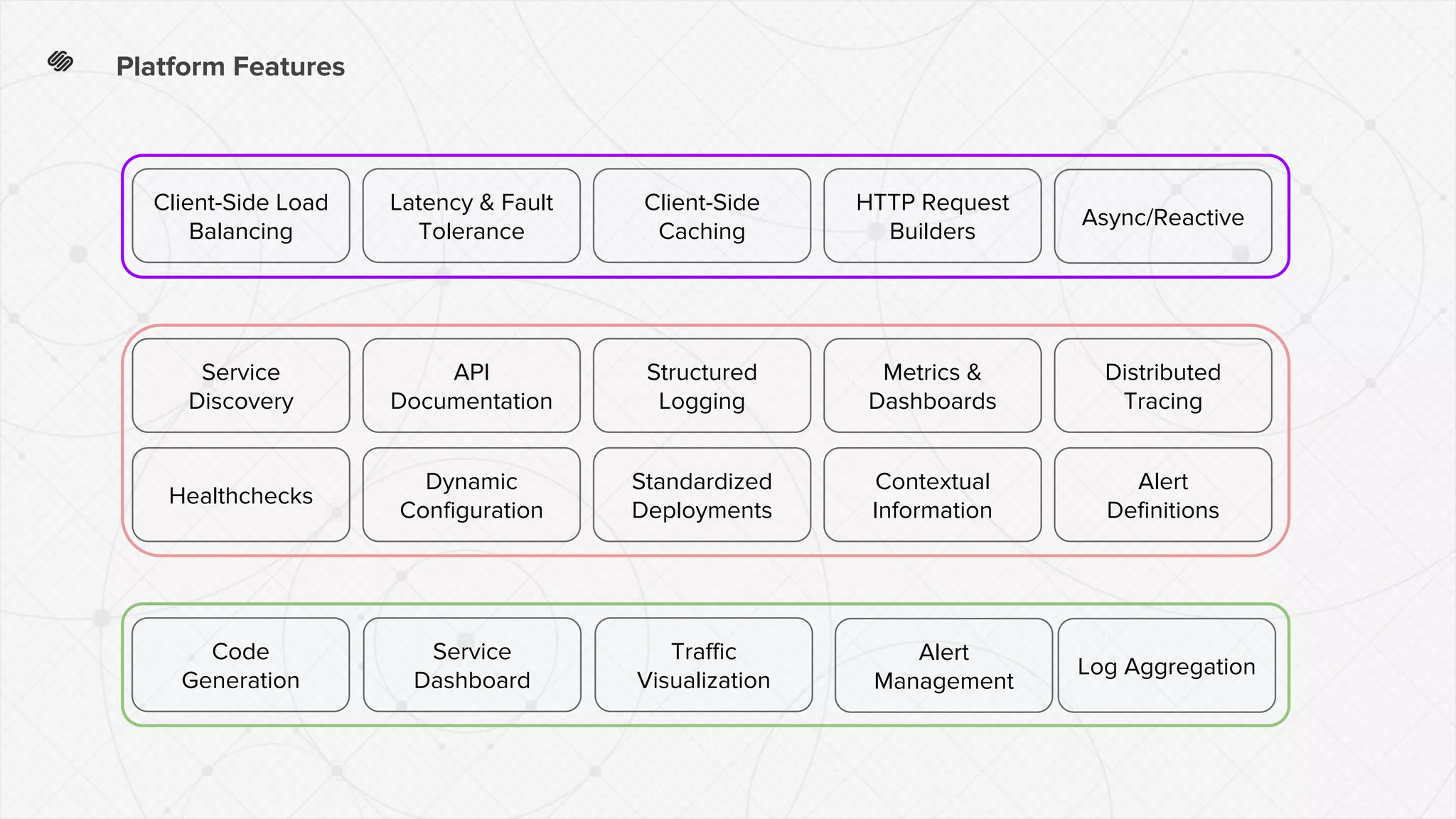

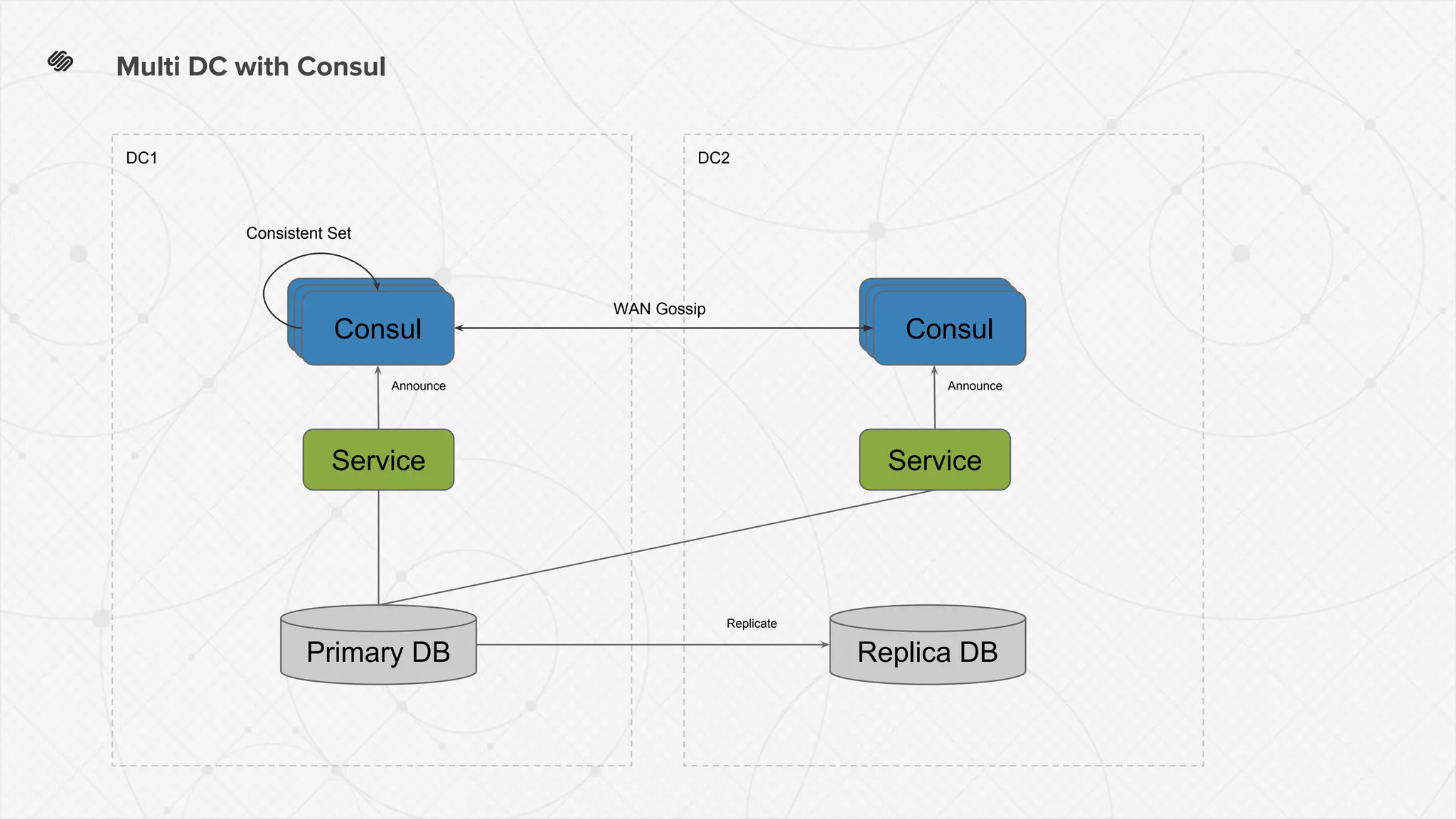

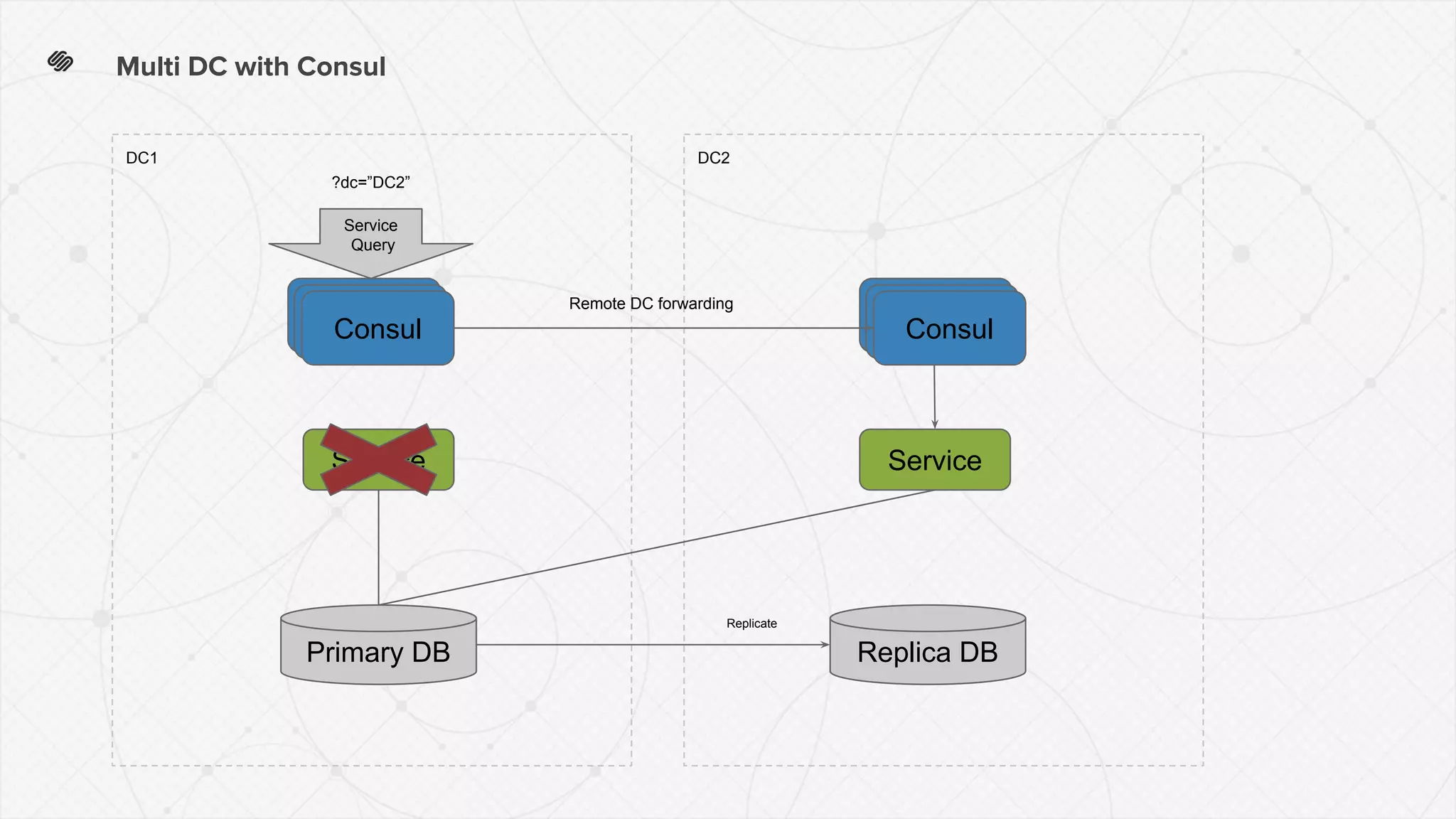

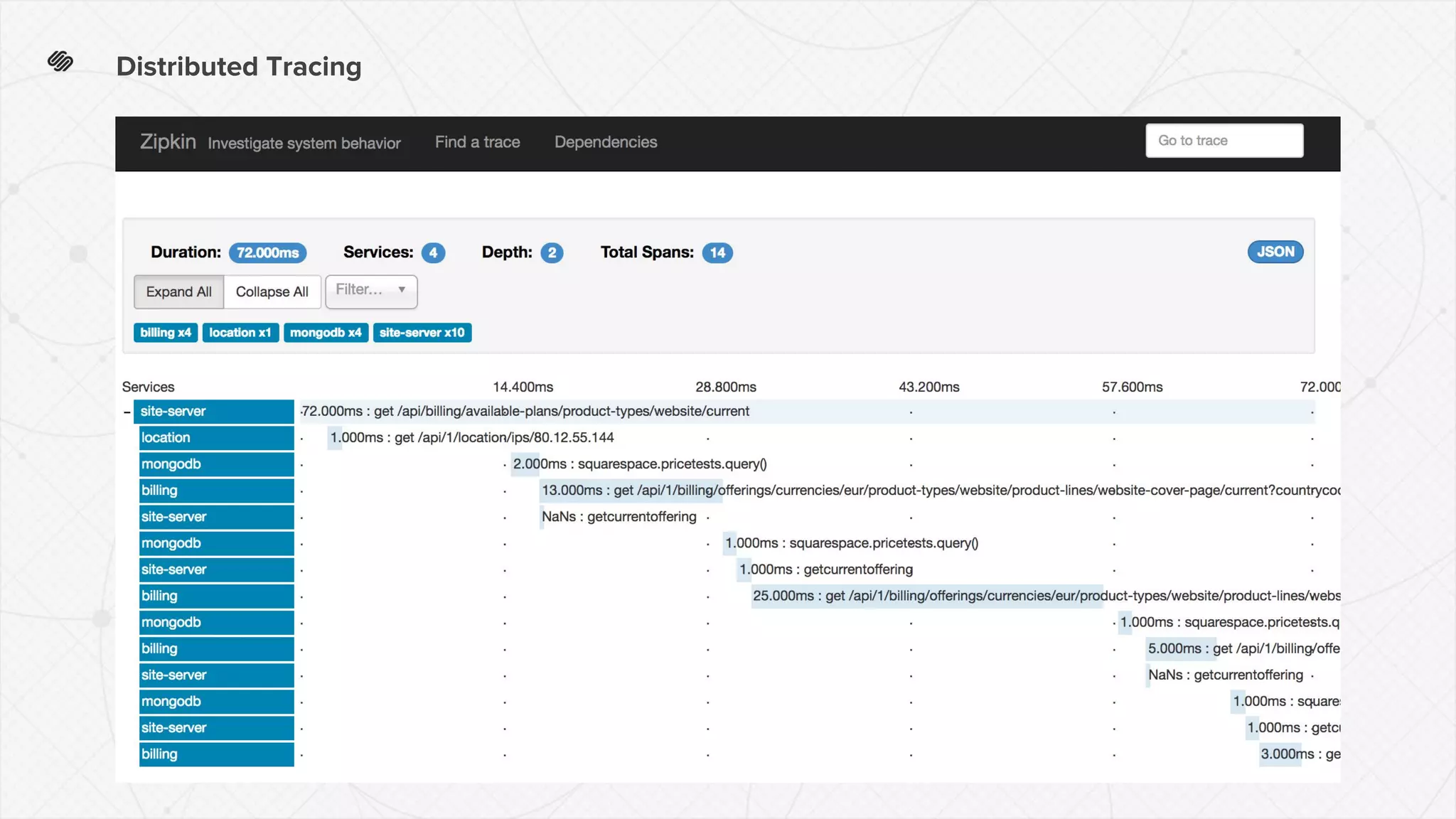

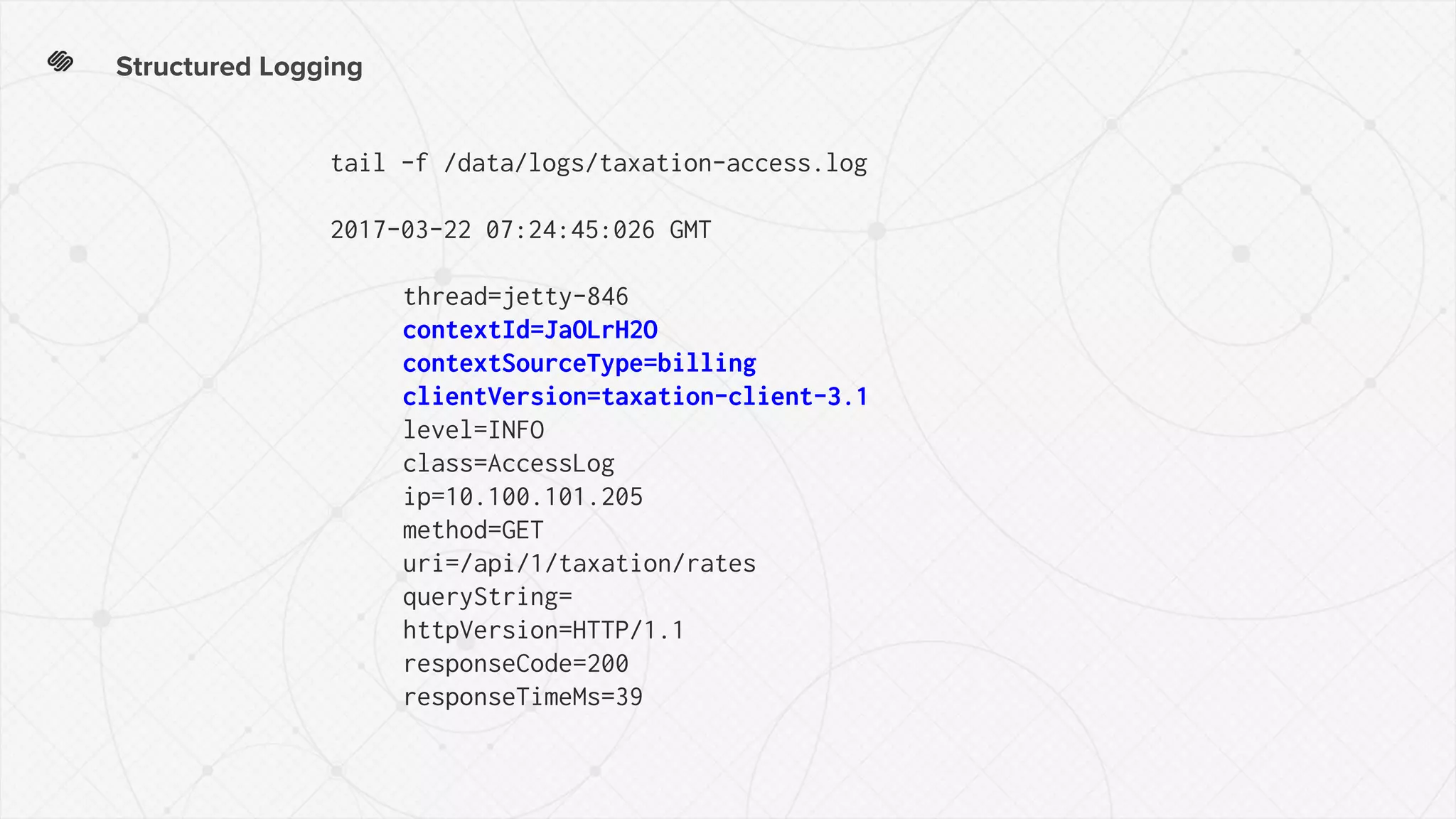

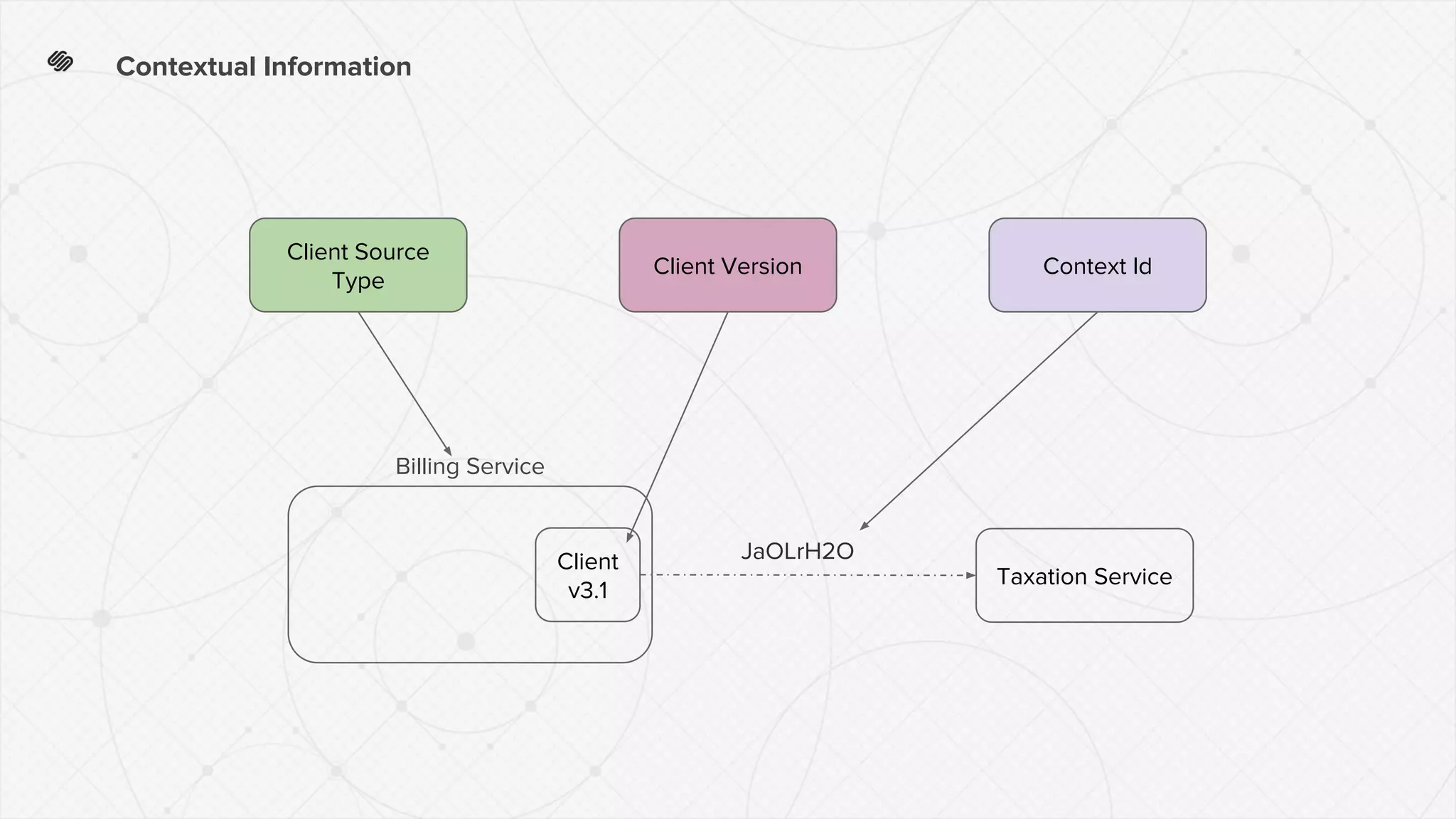

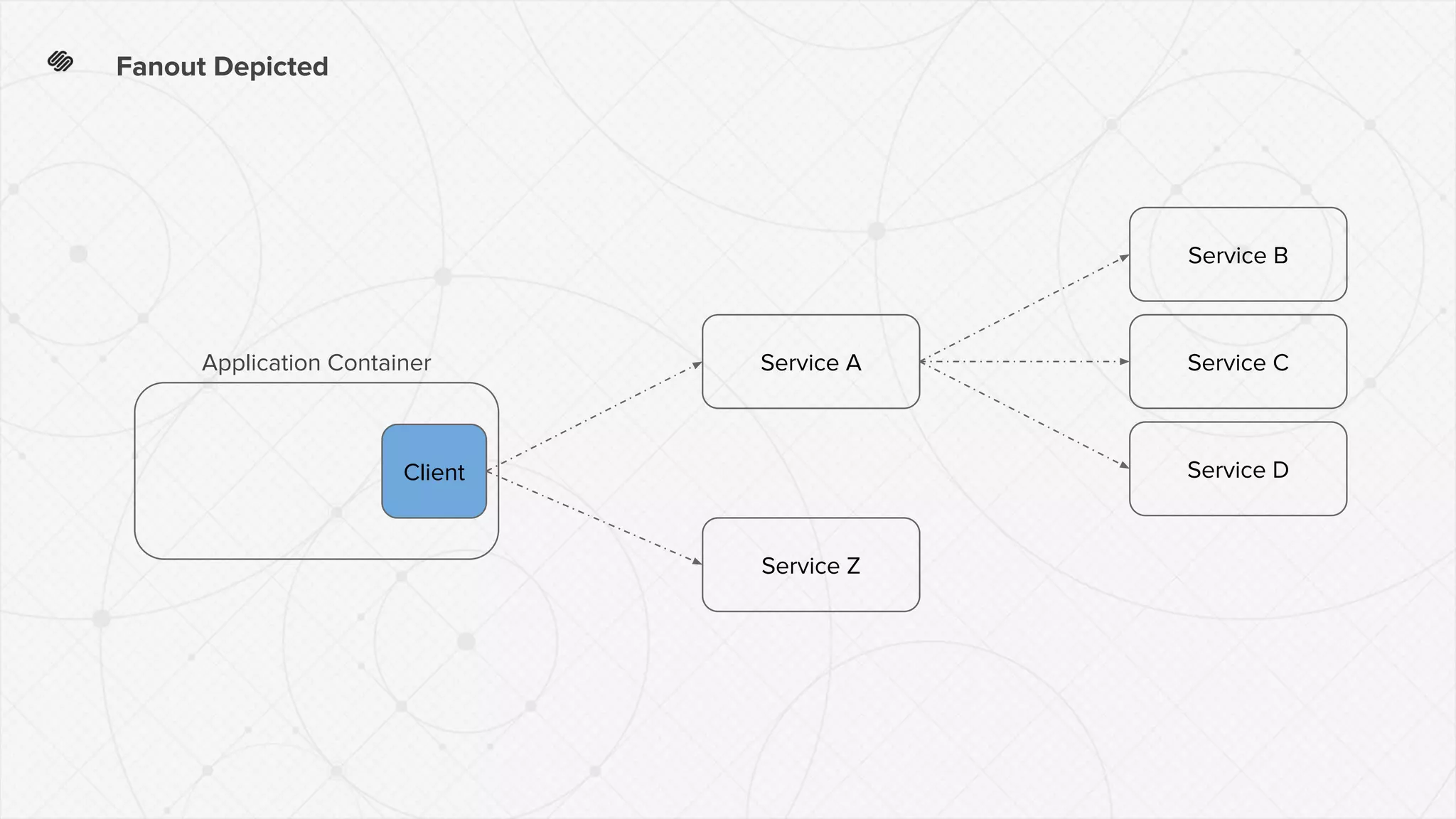

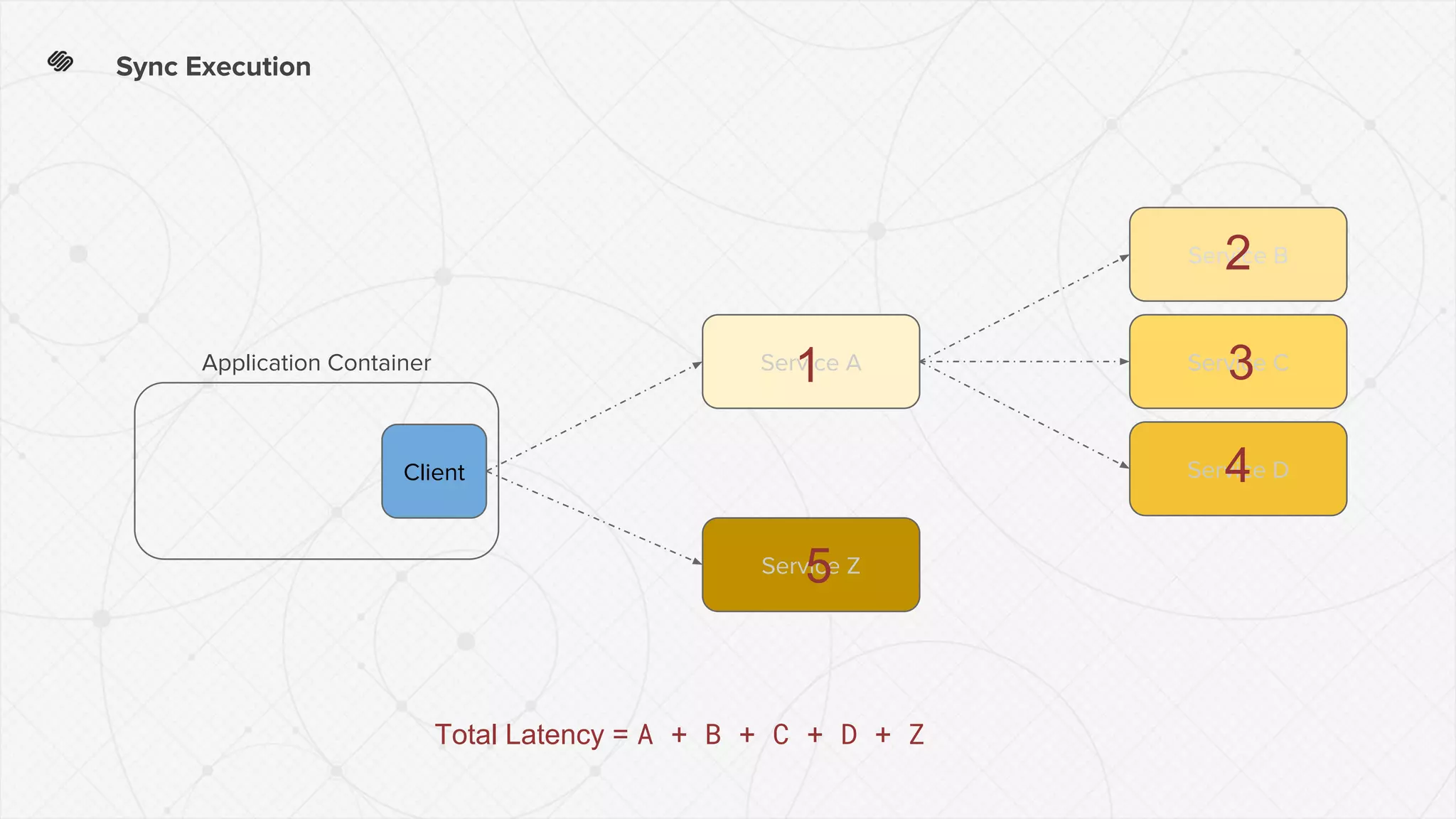

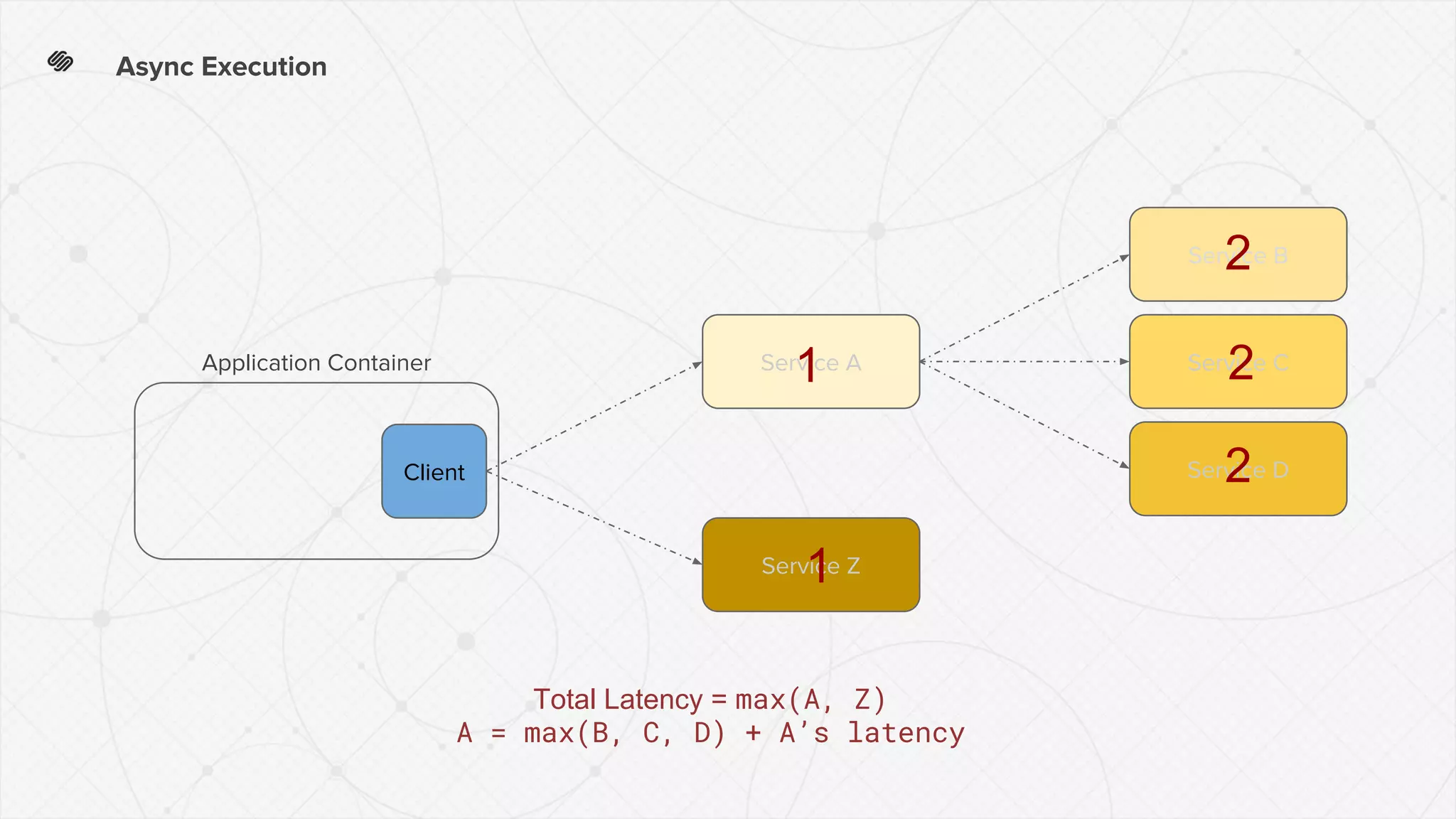

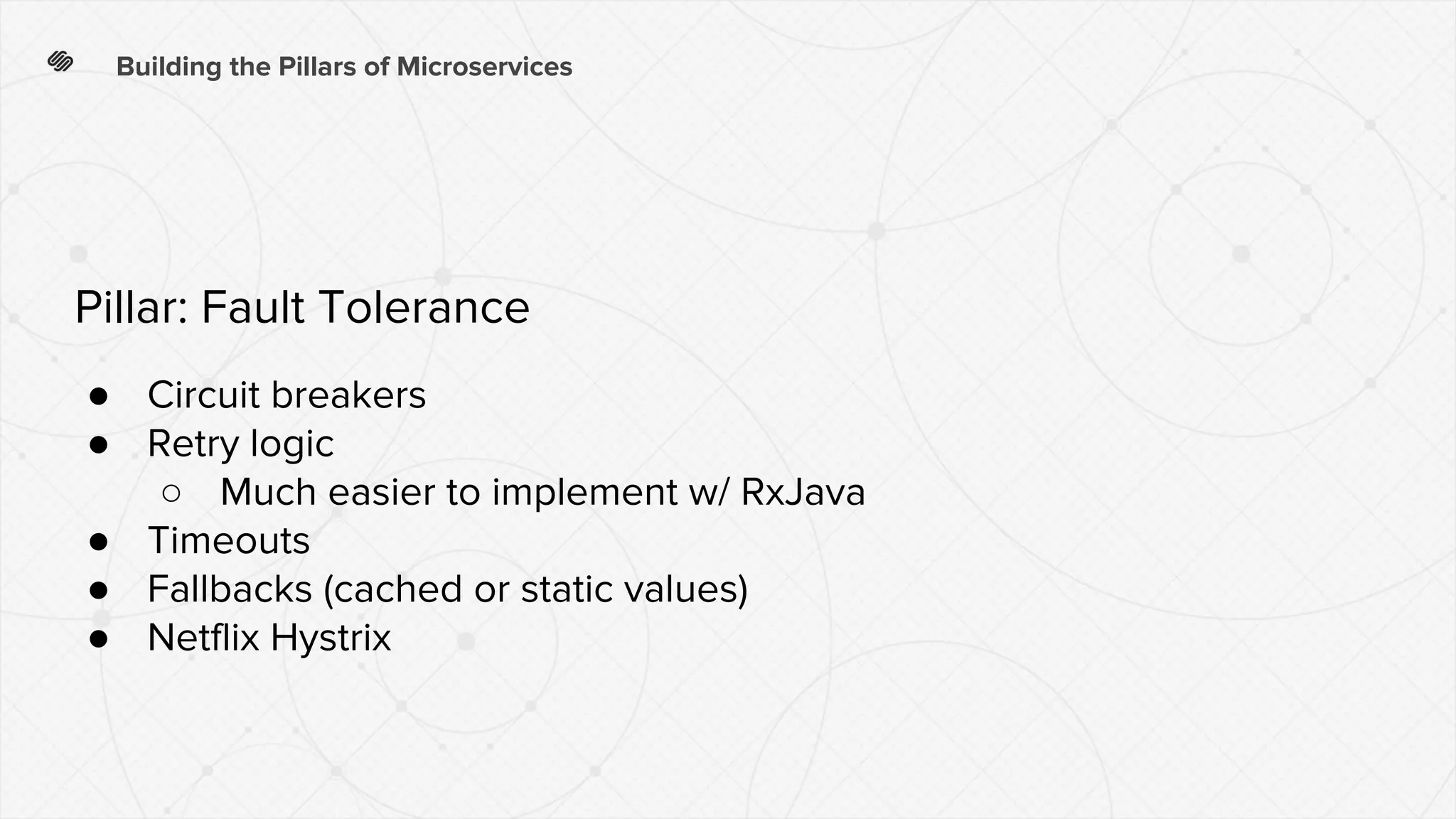

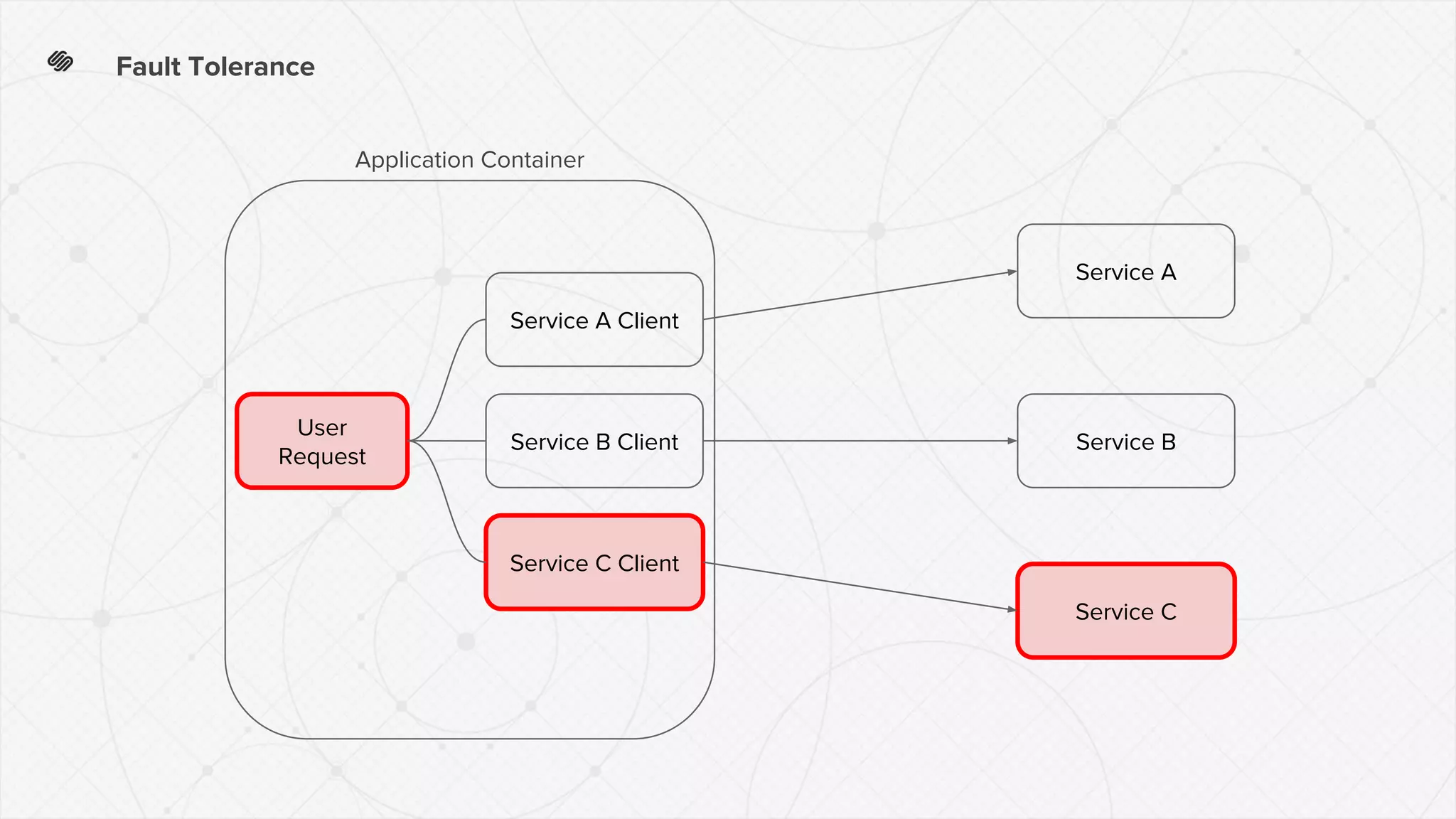

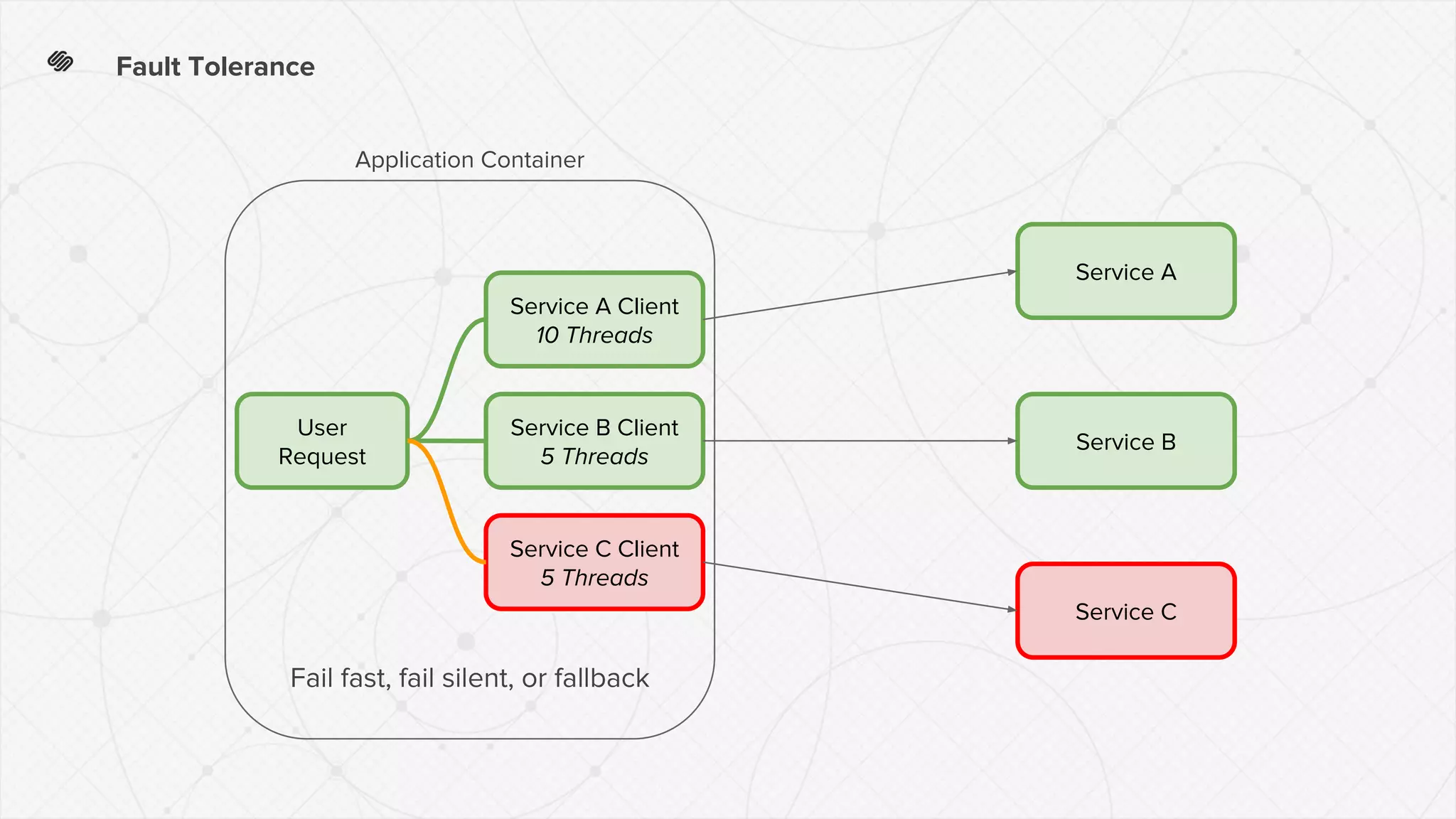

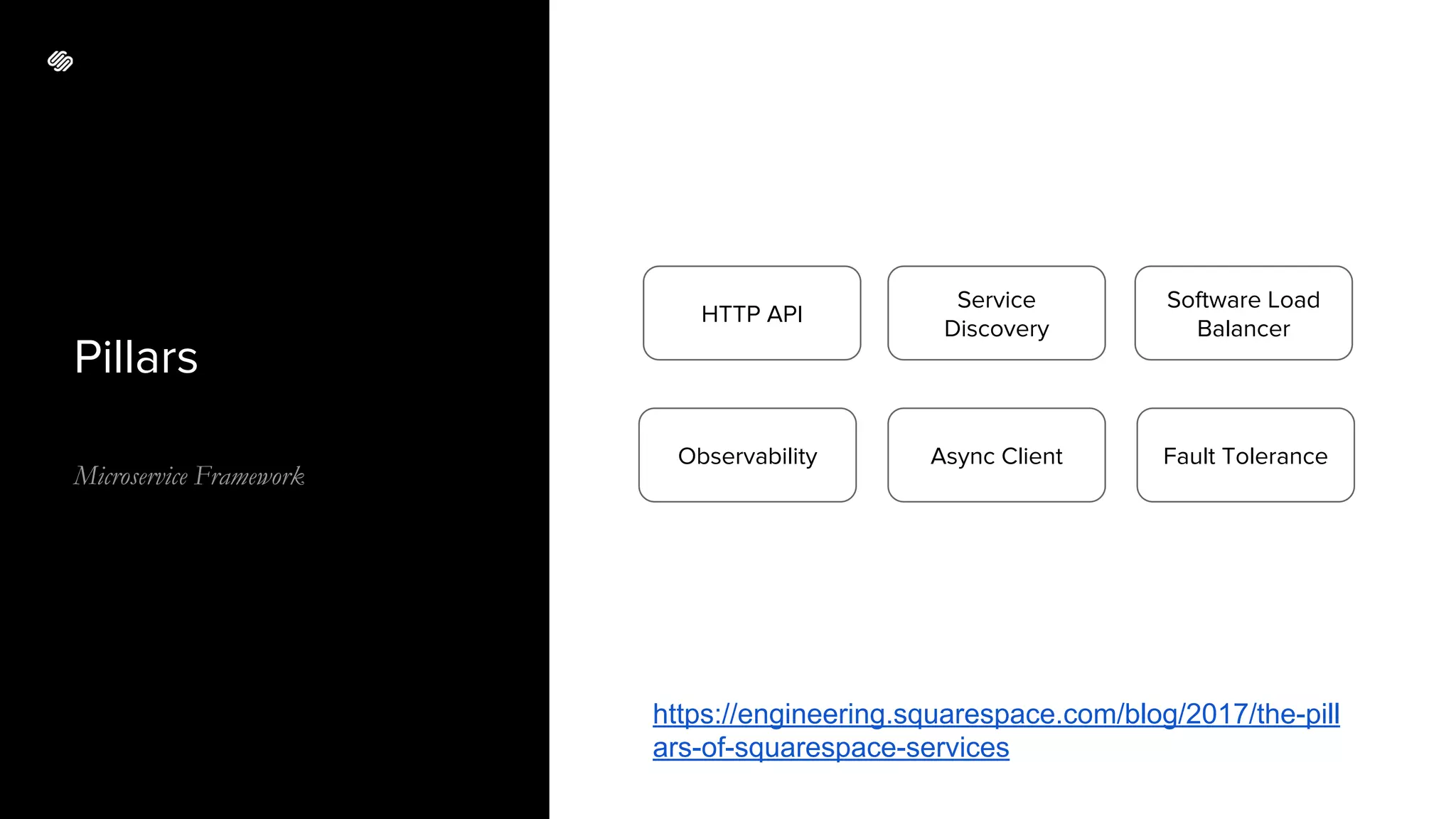

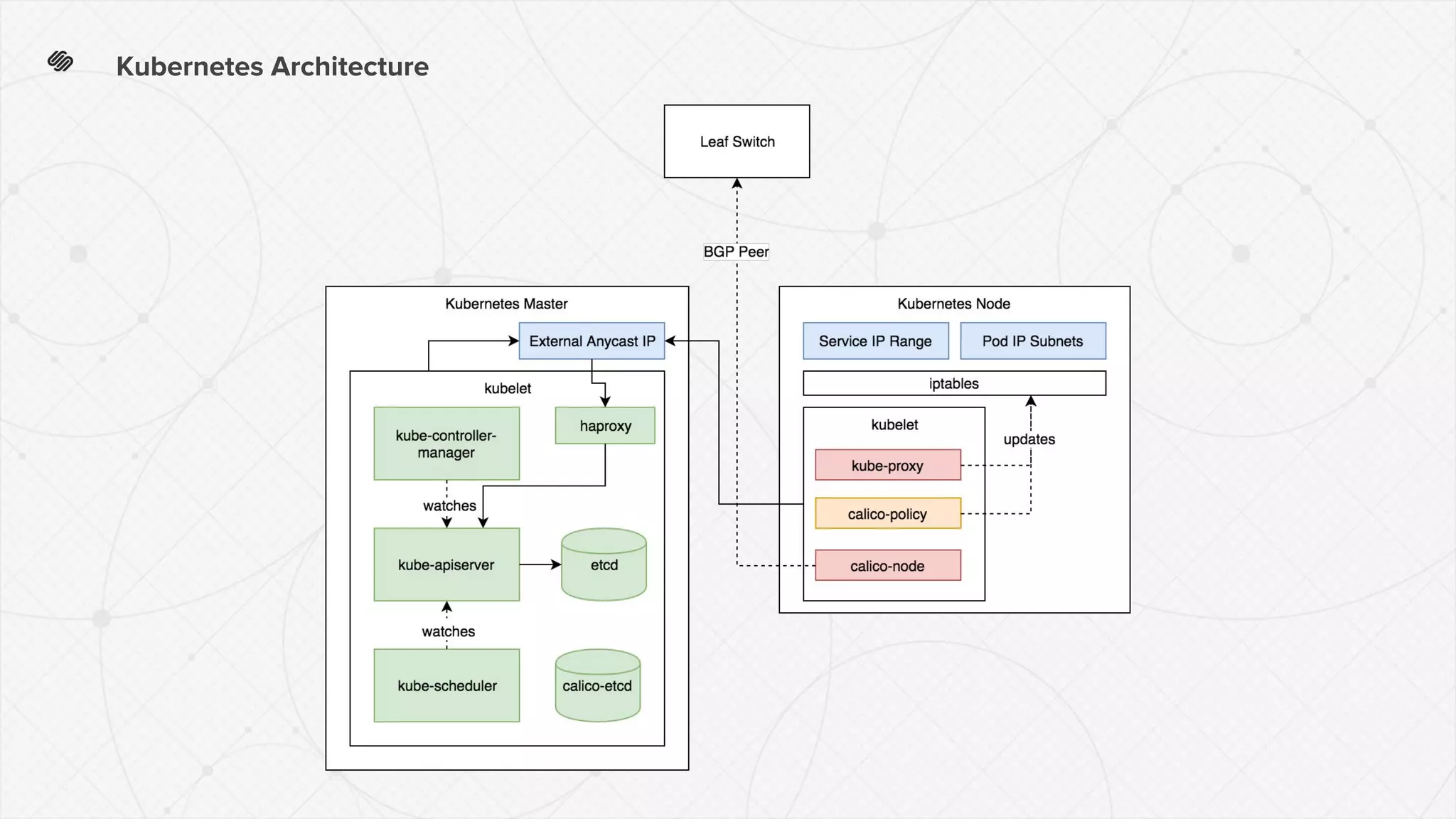

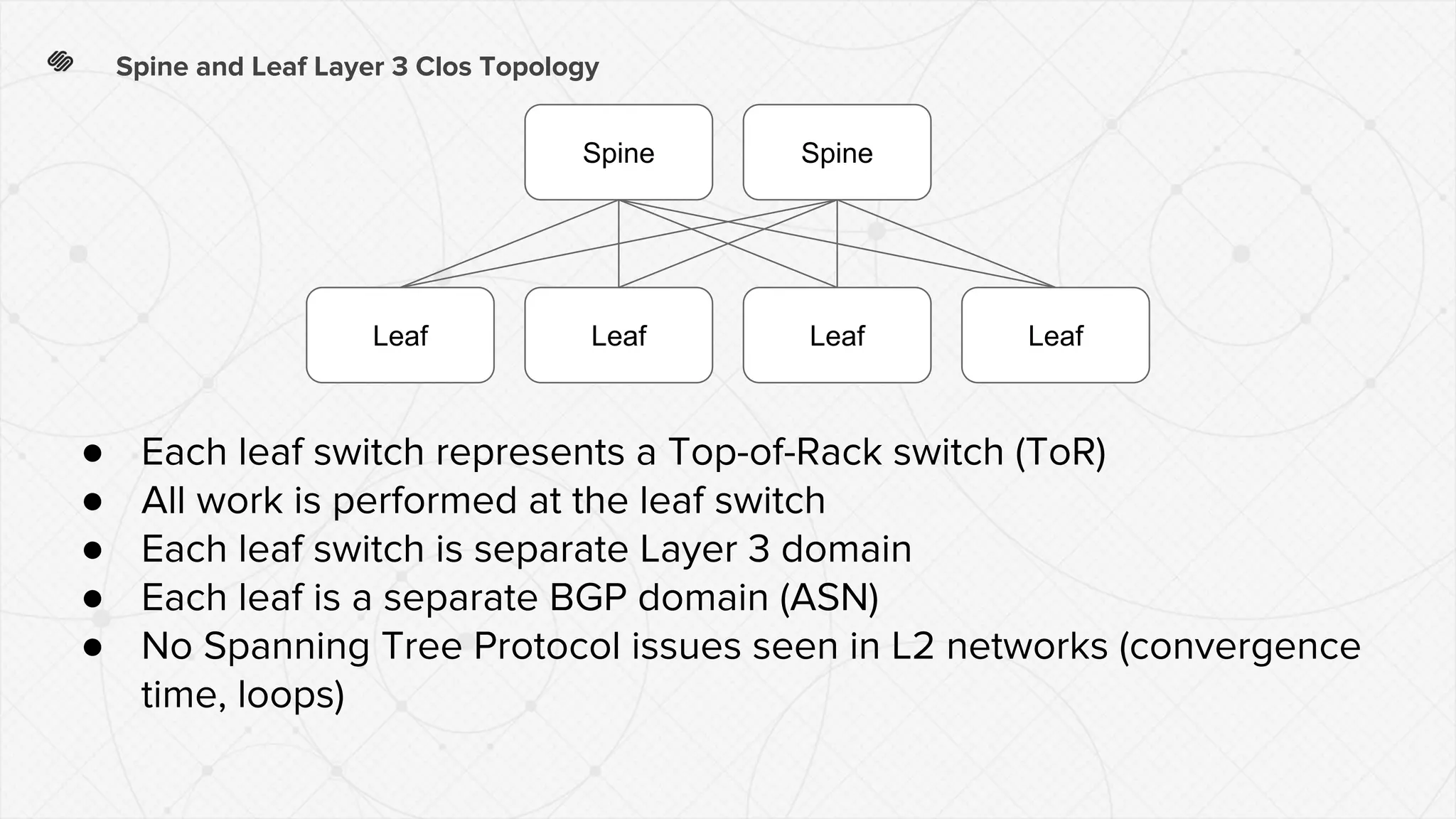

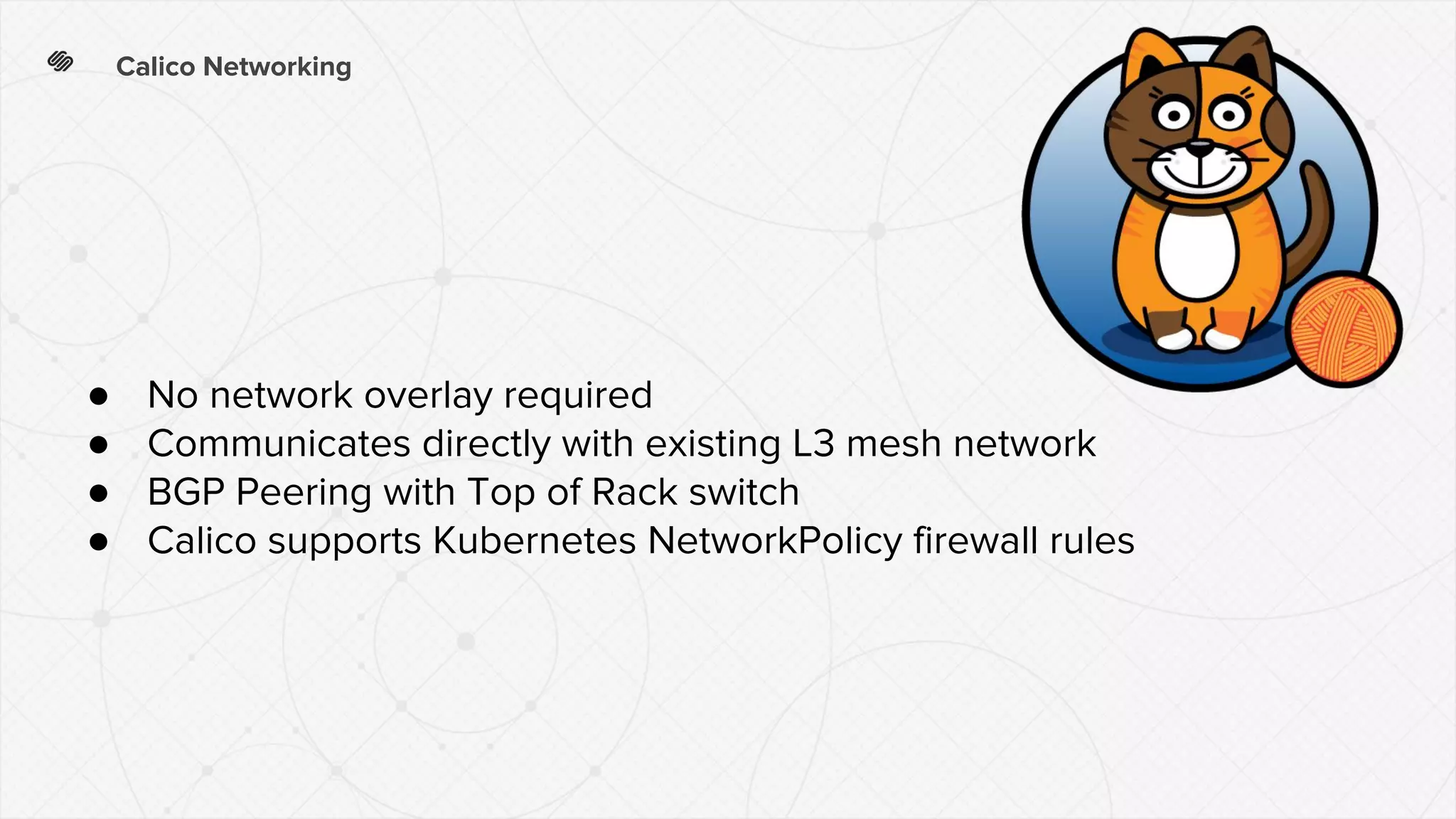

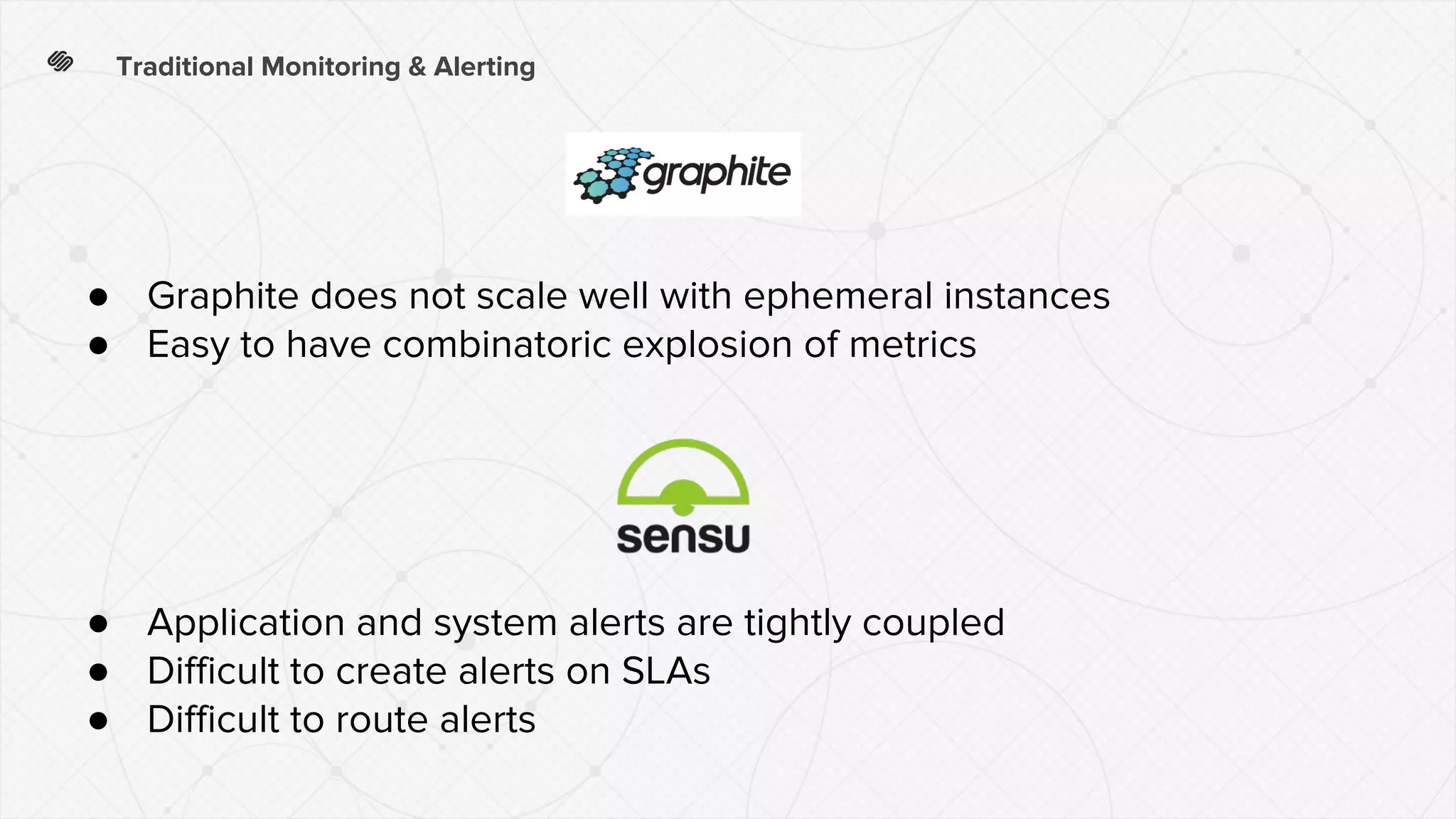

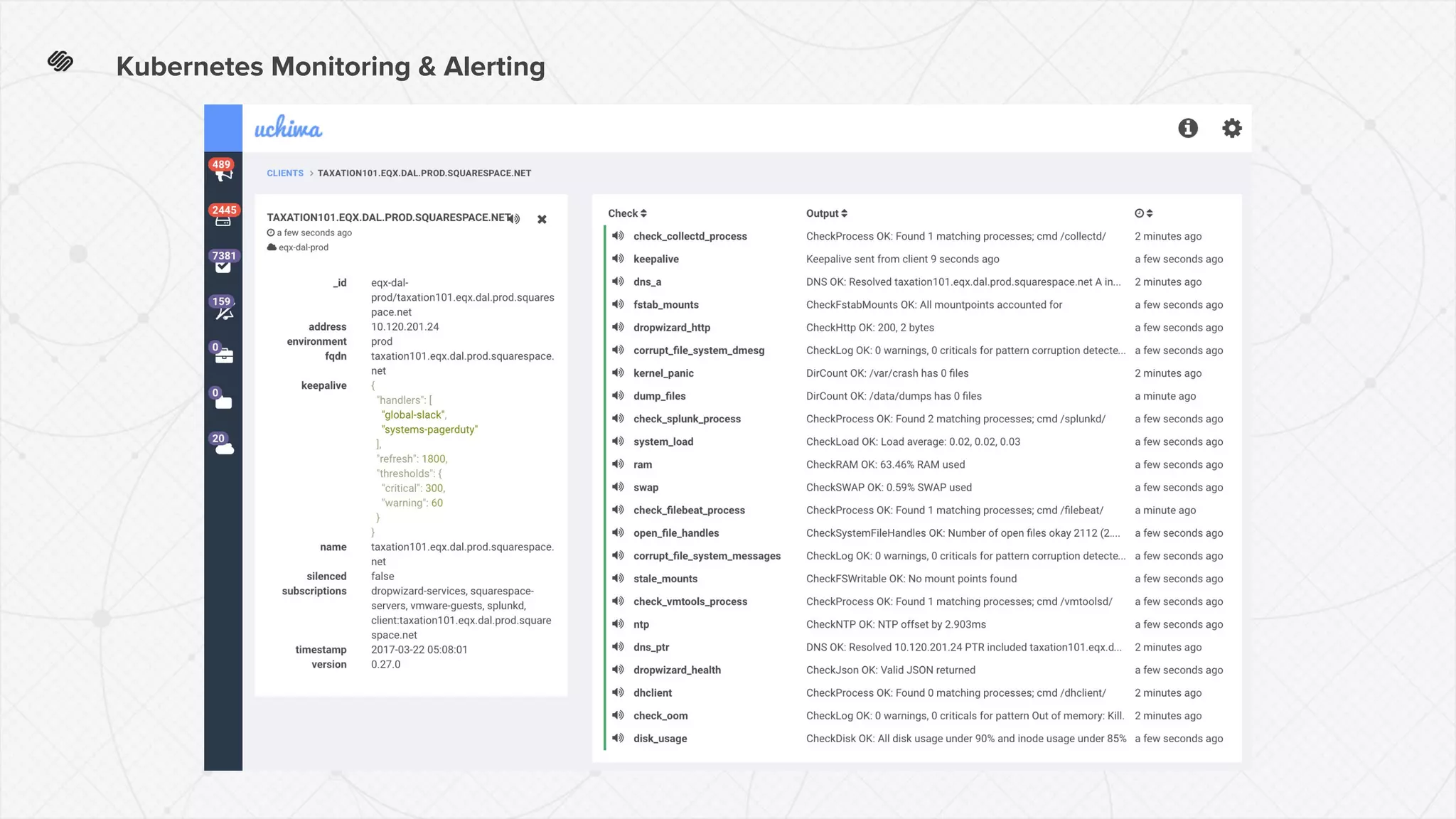

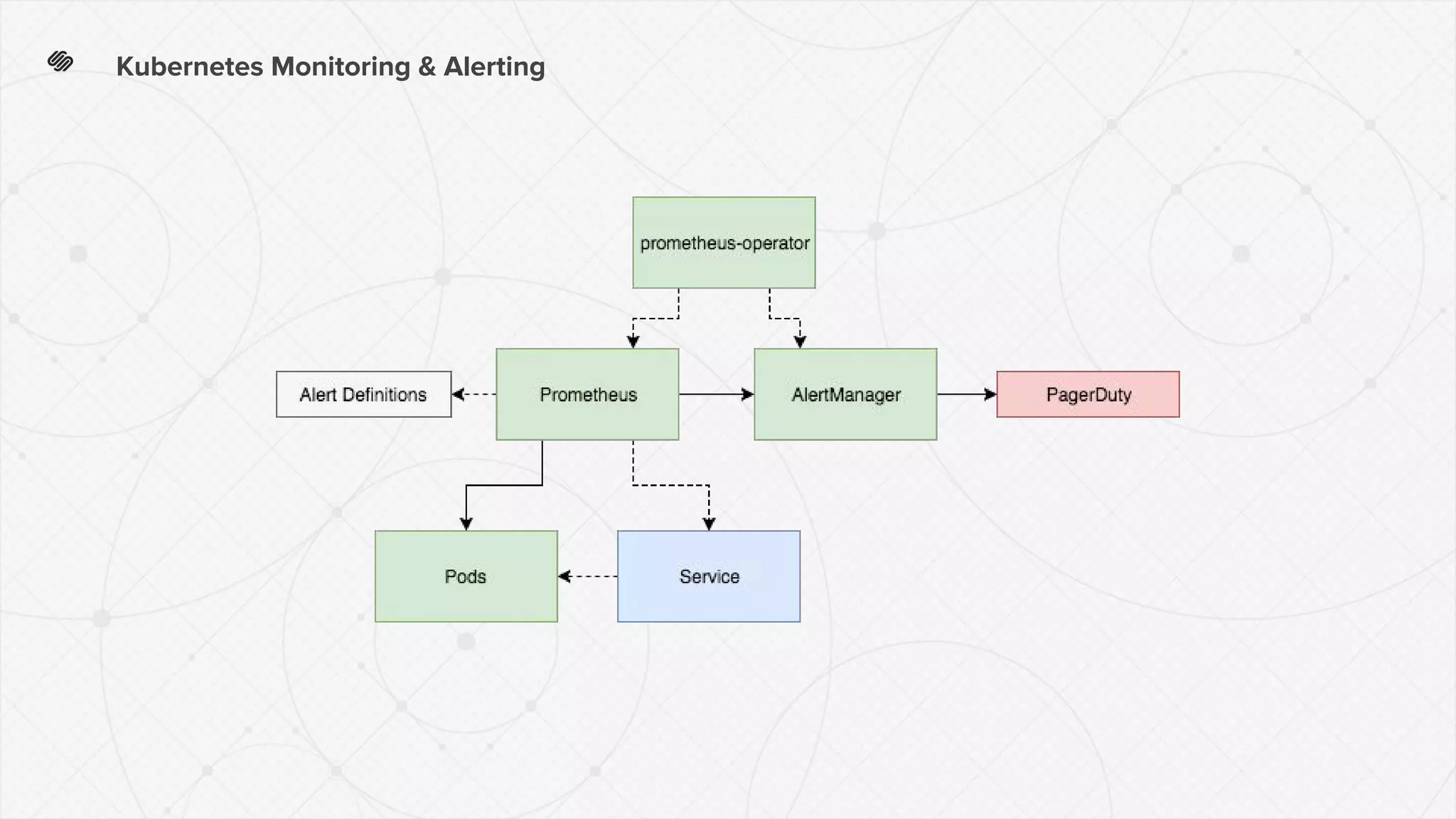

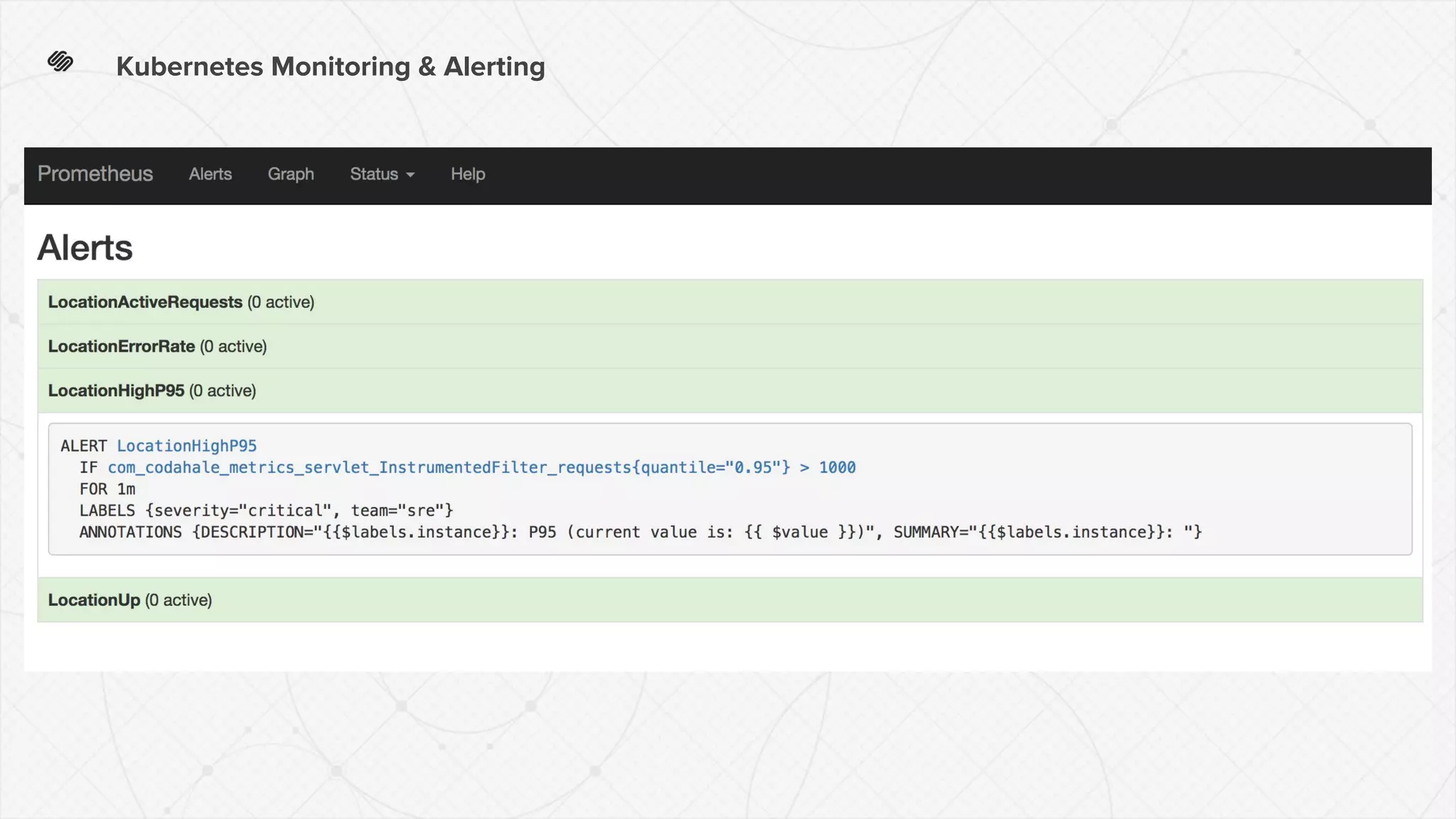

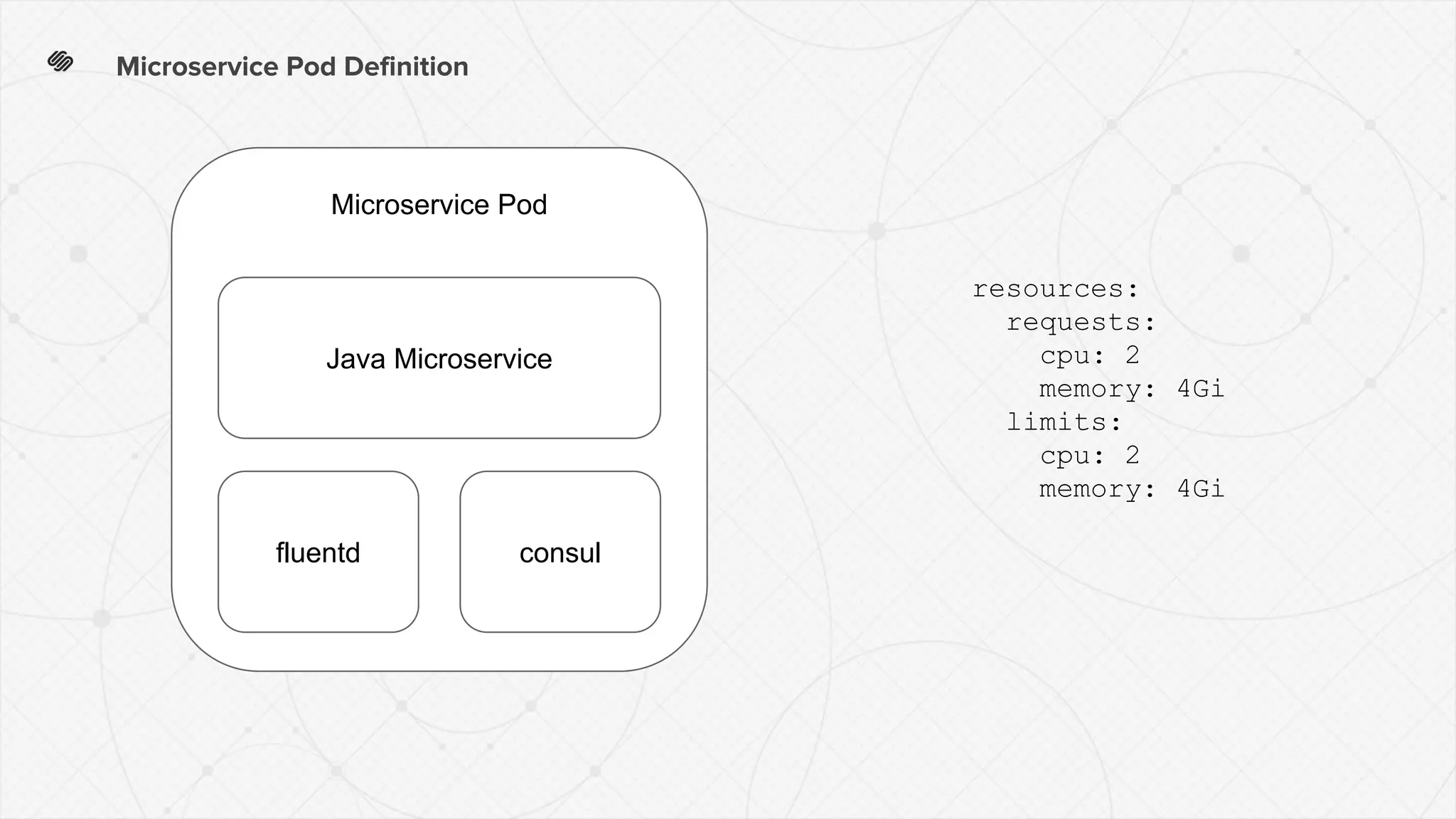

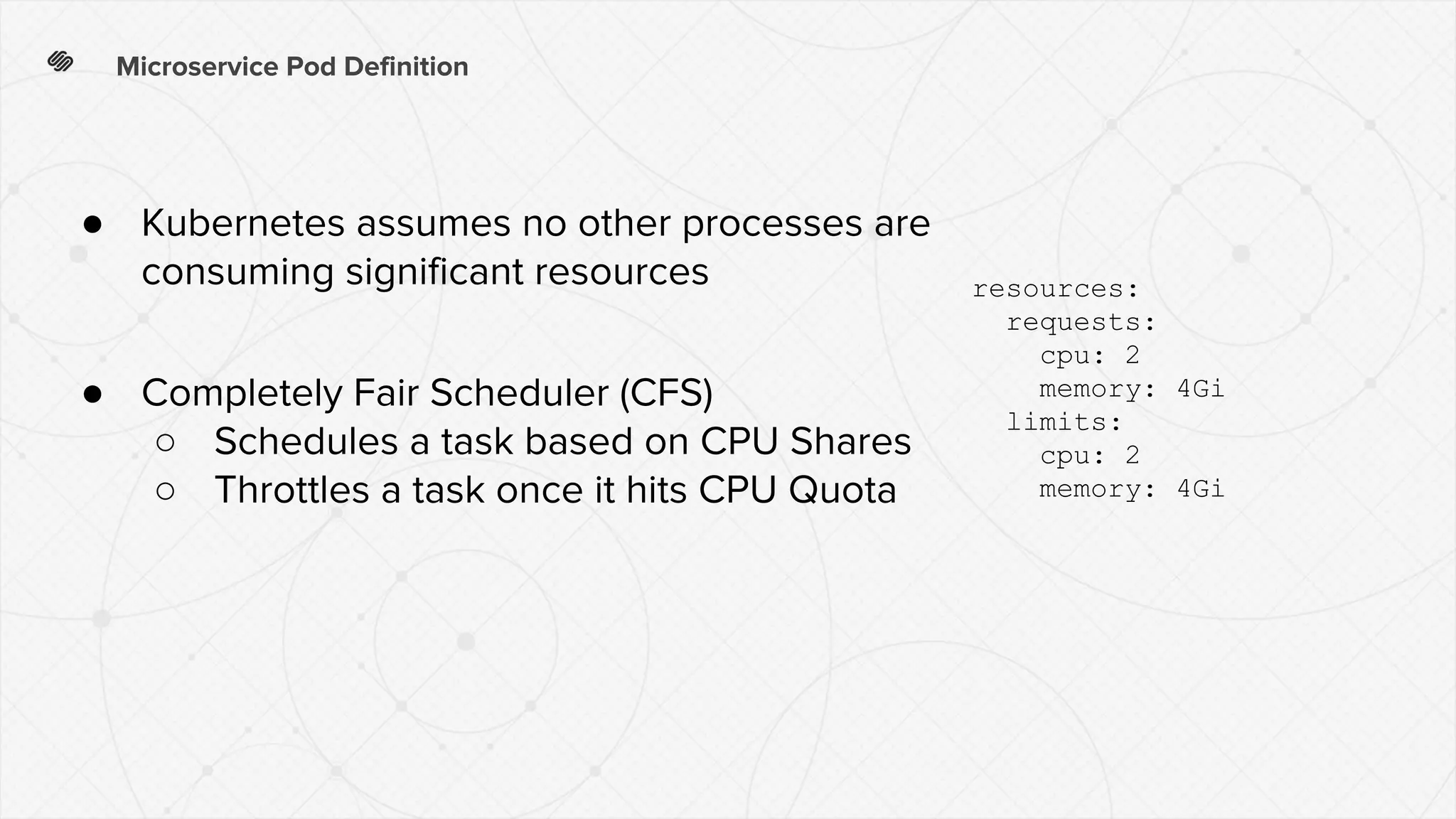

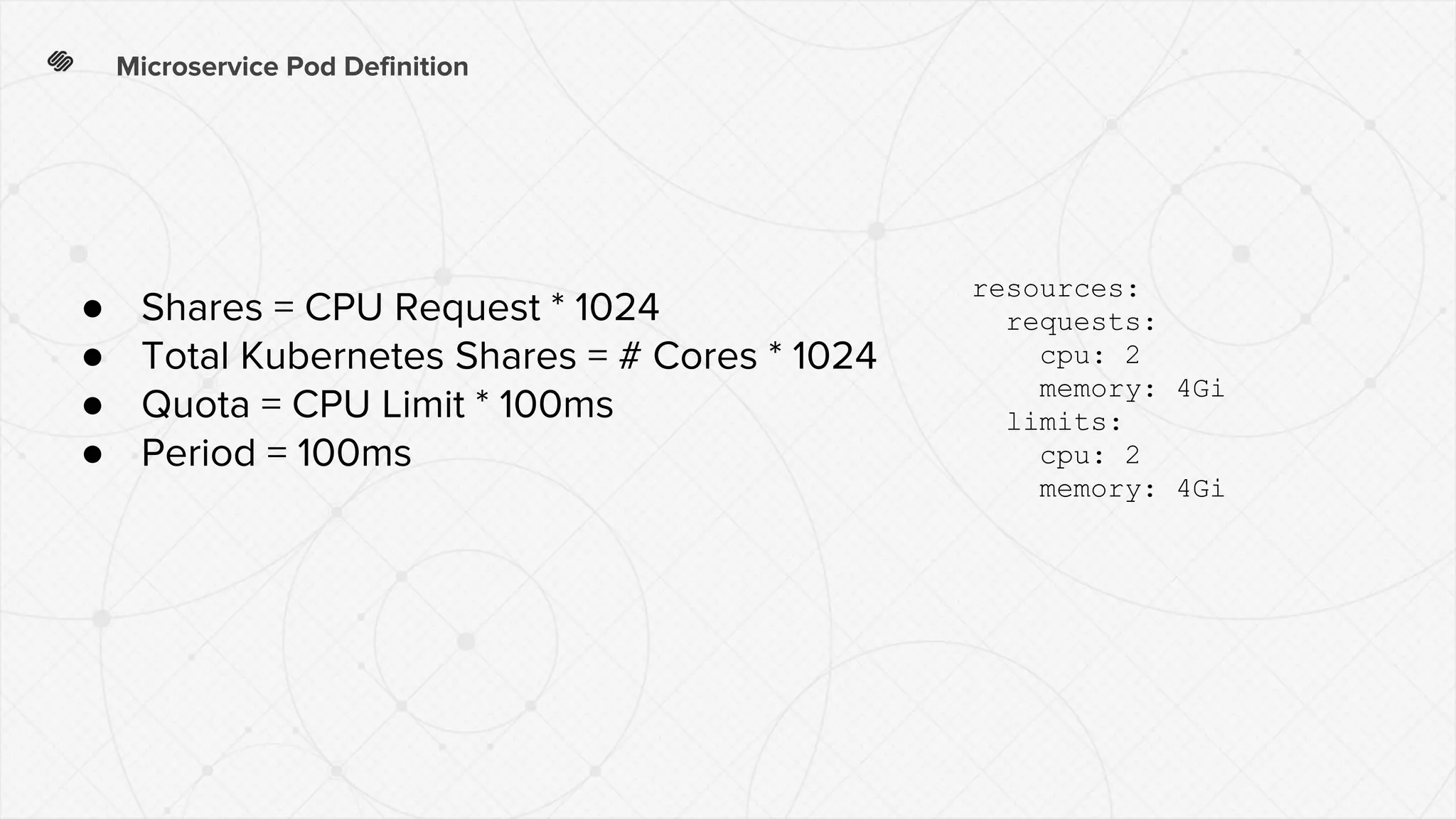

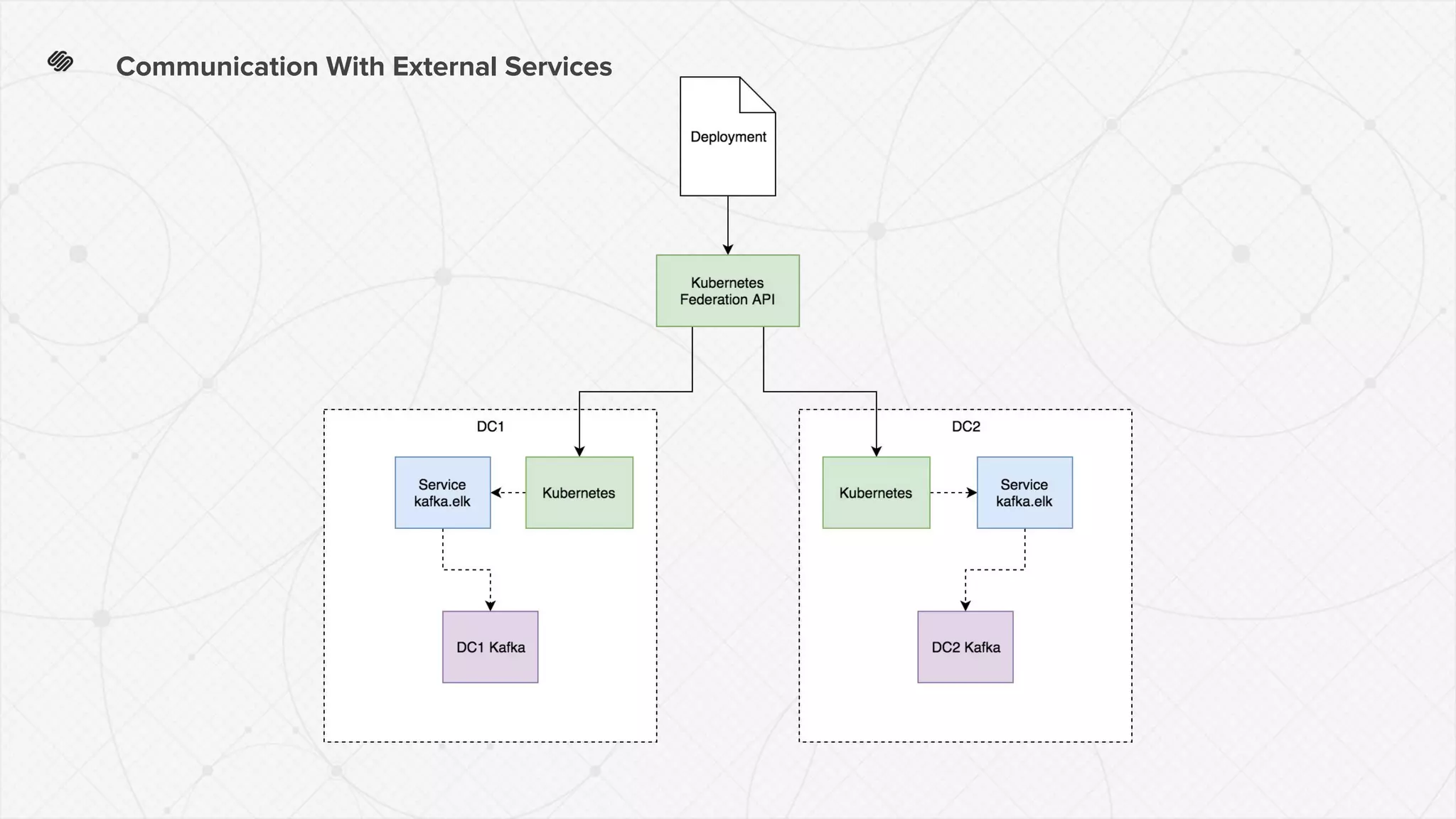

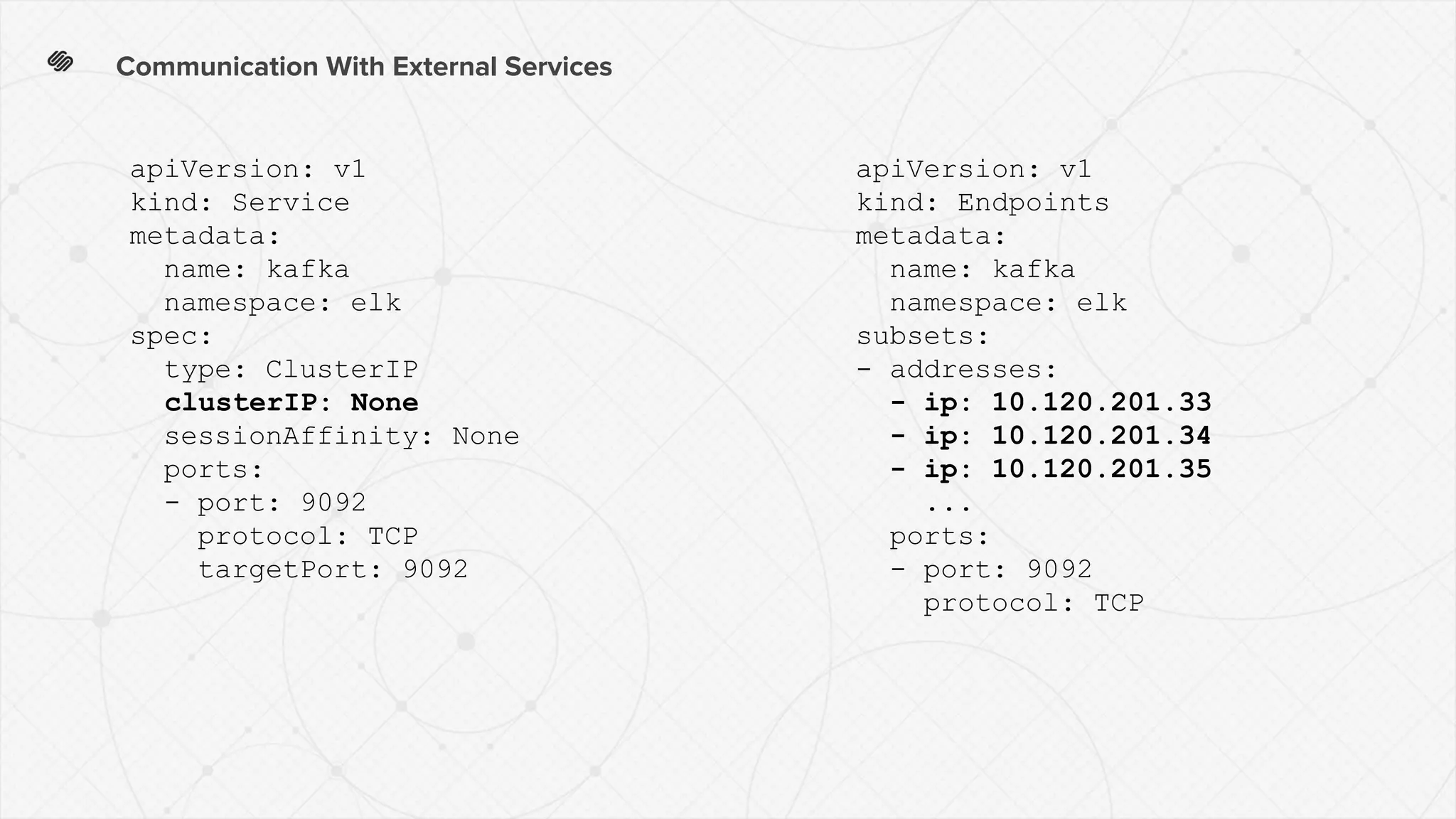

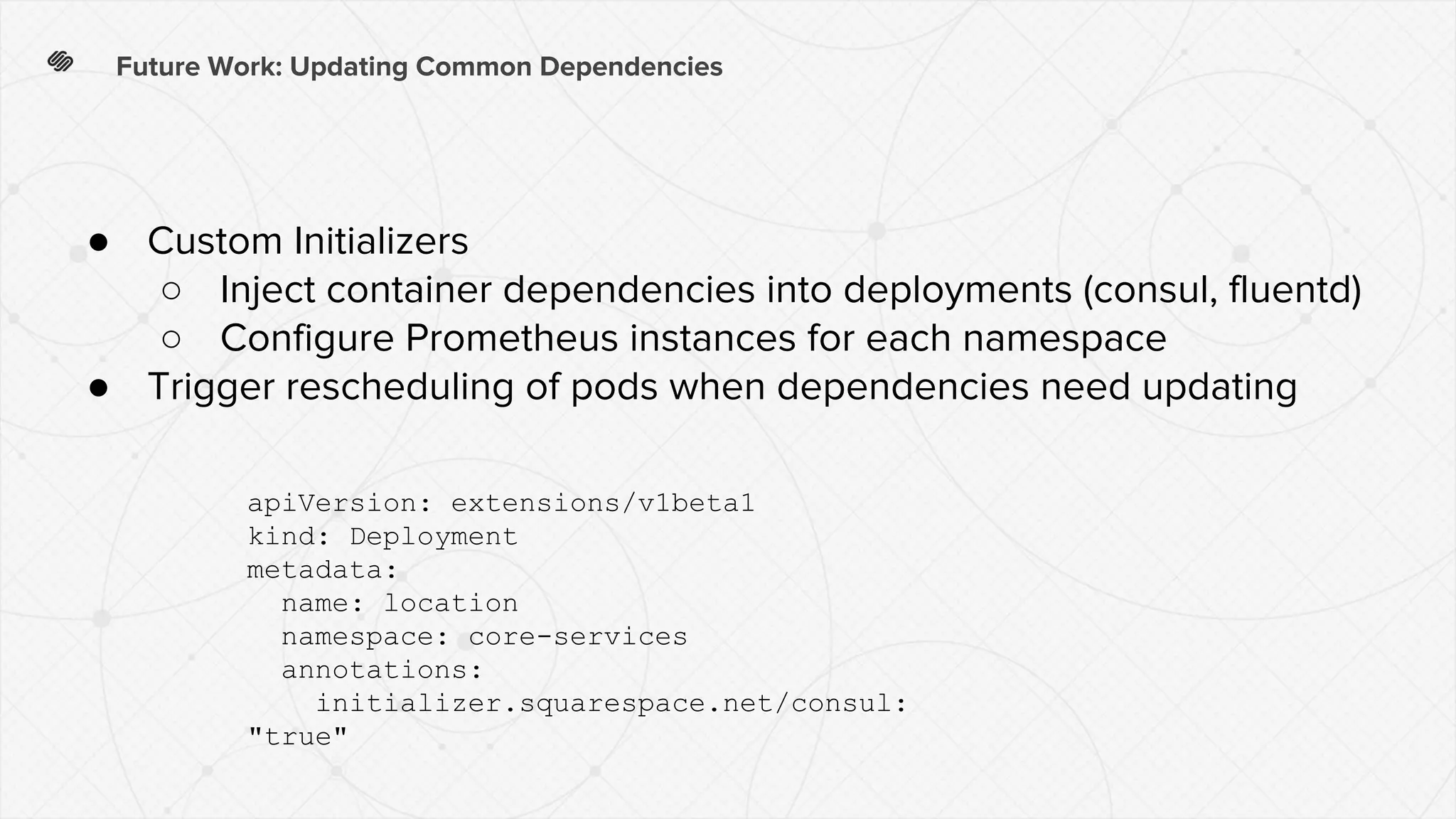

The document outlines the evolution and architecture of microservices at Squarespace, detailing the transition from monolithic systems to microservices for scalability and reliability. It discusses key pillars such as service discovery, observability, and fault tolerance, along with the need for efficient monitoring and for orchestration with Kubernetes. It also highlights the challenges of static infrastructure and emphasizes containerization as a way to improve resource management and deployment.