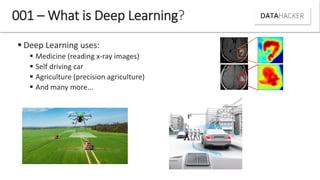

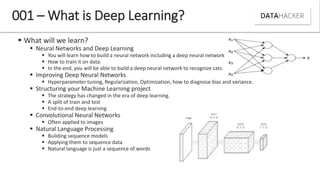

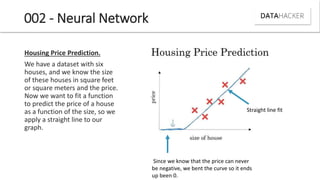

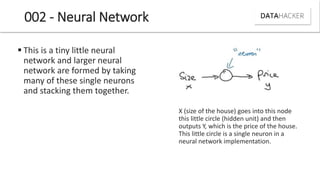

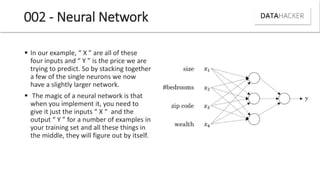

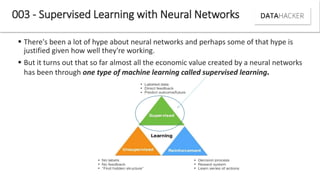

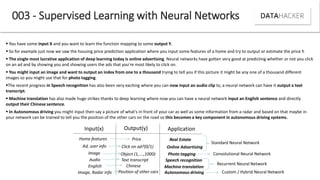

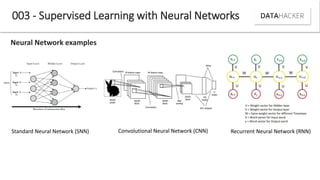

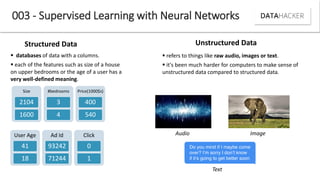

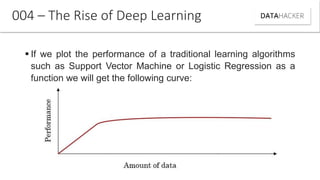

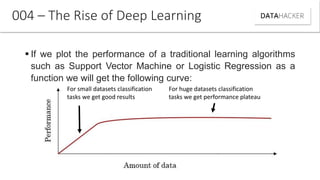

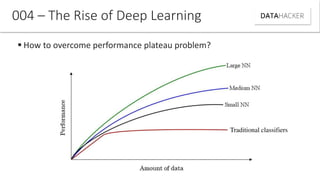

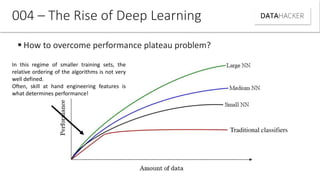

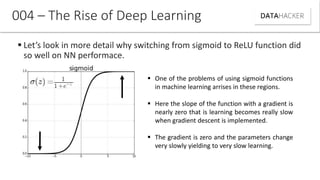

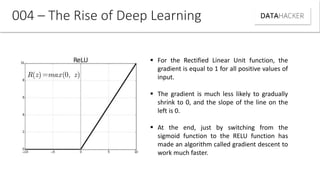

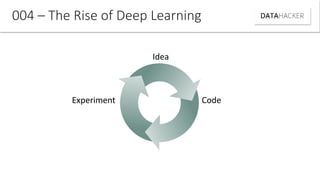

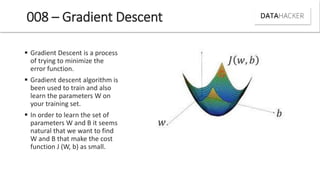

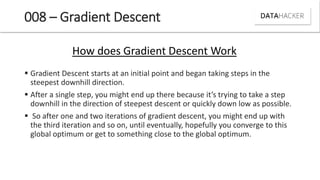

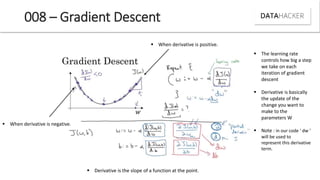

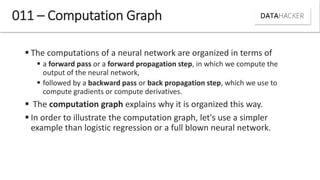

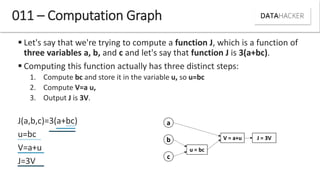

Deep learning uses neural networks, which are loosely inspired by the human brain, to process data and learn patterns from it. It has transformed industries such as web search and advertising and has enabled tasks such as image recognition. This document discusses neural networks, deep learning, and their applications. It also explains how recent advances in algorithms and the growing availability of data have driven the rise of deep learning: neural networks can now train on larger datasets and move past earlier performance plateaus.