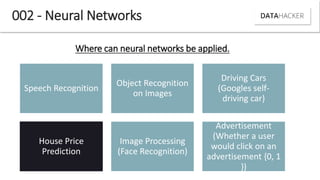

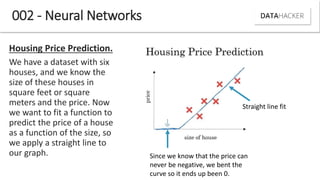

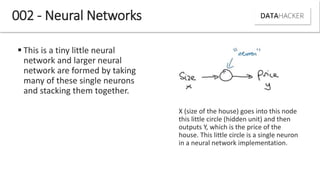

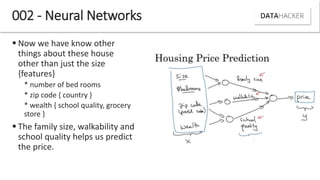

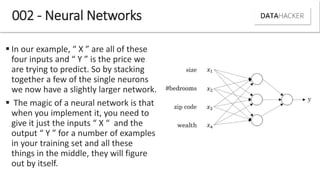

Neural networks are loosely modeled on the human brain and are well suited to problems involving pattern recognition and classification. Input data passes through multiple layers of simulated neurons, each of which activates to a degree determined by its inputs. Neural networks have achieved strong results in applications such as speech recognition, image recognition, autonomous driving, house price prediction, and online ad targeting. Larger networks are formed by connecting many single-neuron units together. For example, a simple neural network could take a house's size, number of bedrooms, zip code, and school quality as inputs and predict the house's price.
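The house-price example can be sketched in a few lines of plain Python. This is a minimal illustration, not a trained model: the function names (`relu`, `neuron`, `predict_price`), the ReLU activation, the three-neuron hidden layer, and every weight and feature value are assumptions chosen by hand so the arithmetic is easy to follow. The zip code is represented by a hypothetical numeric score, since a raw zip code is a category rather than a quantity.

```python
def relu(x):
    """Rectified linear unit: passes positive values, clips negatives to zero."""
    return max(0.0, x)


def neuron(inputs, weights, bias):
    """One simulated neuron: weighted sum of inputs plus bias, then ReLU."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return relu(total)


def predict_price(features, hidden_layer, output_weights, output_bias):
    """Forward pass: features -> hidden neurons -> single price output."""
    hidden = [neuron(features, w, b) for w, b in hidden_layer]
    # The output unit is left linear so the price is not clipped by an activation.
    return sum(w * h for w, h in zip(output_weights, hidden)) + output_bias


# Hypothetical, hand-picked values (not learned from data). Features are
# [size in 1000 sq ft, bedrooms, zip code score, school quality score].
features = [2.0, 3.0, 0.5, 0.8]
hidden_layer = [
    ([1.0, 0.5, 0.0, 0.0], 0.0),   # responds to size and bedrooms
    ([0.0, 0.0, 1.0, 0.0], 0.0),   # responds to the zip code score
    ([0.0, 0.0, 0.5, 1.0], -0.5),  # responds to zip code and school quality
]
output_weights = [100.0, 50.0, 80.0]
output_bias = 20.0

# Price in arbitrary units (for example, thousands of dollars).
price = predict_price(features, hidden_layer, output_weights, output_bias)
print(round(price, 2))
```

With these made-up numbers the three hidden neurons output 3.5, 0.5, and 0.55, and the network predicts a price of about 439 in its arbitrary units. In a real network the weights and biases would be learned from example houses rather than set by hand.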