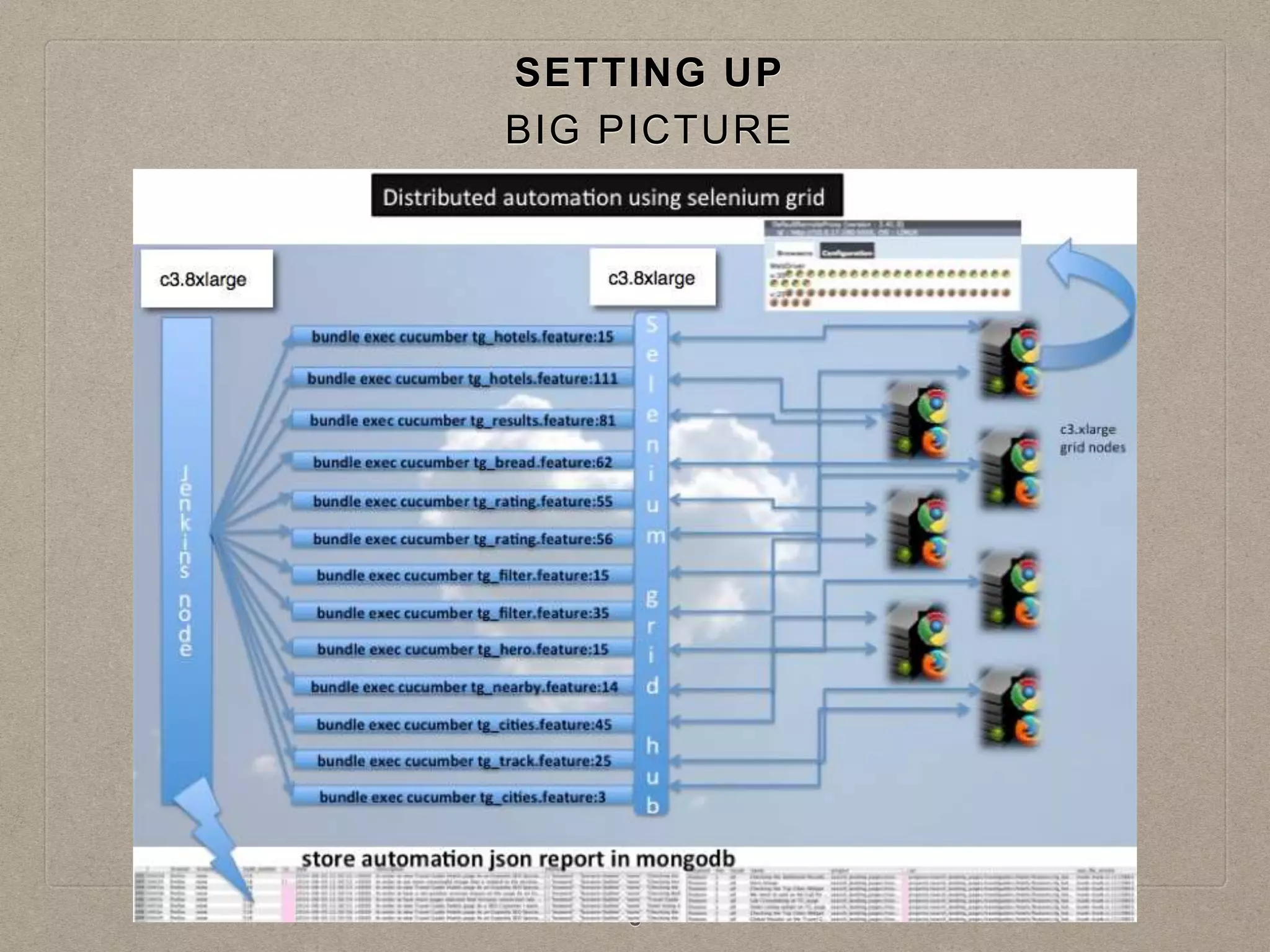

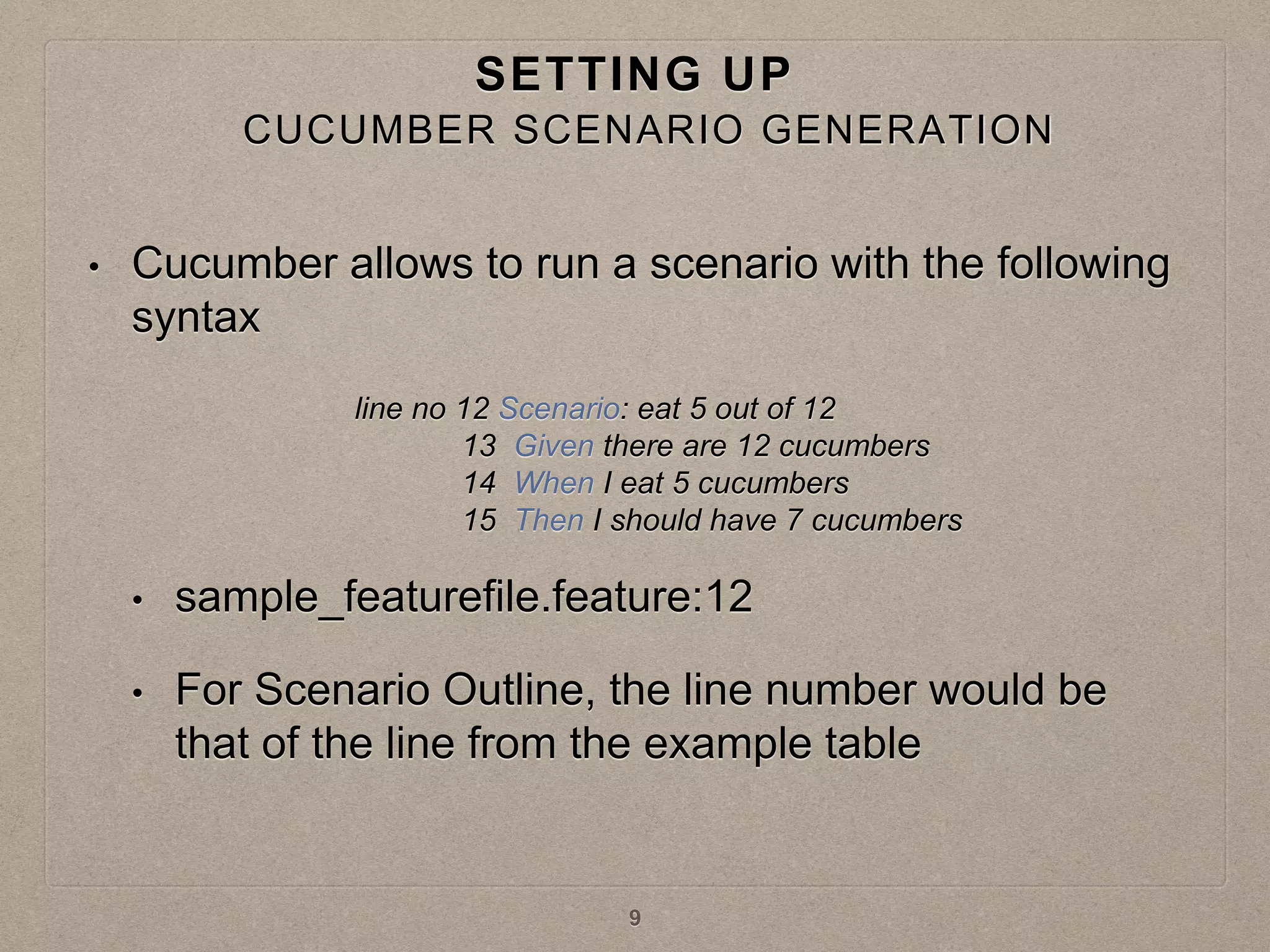

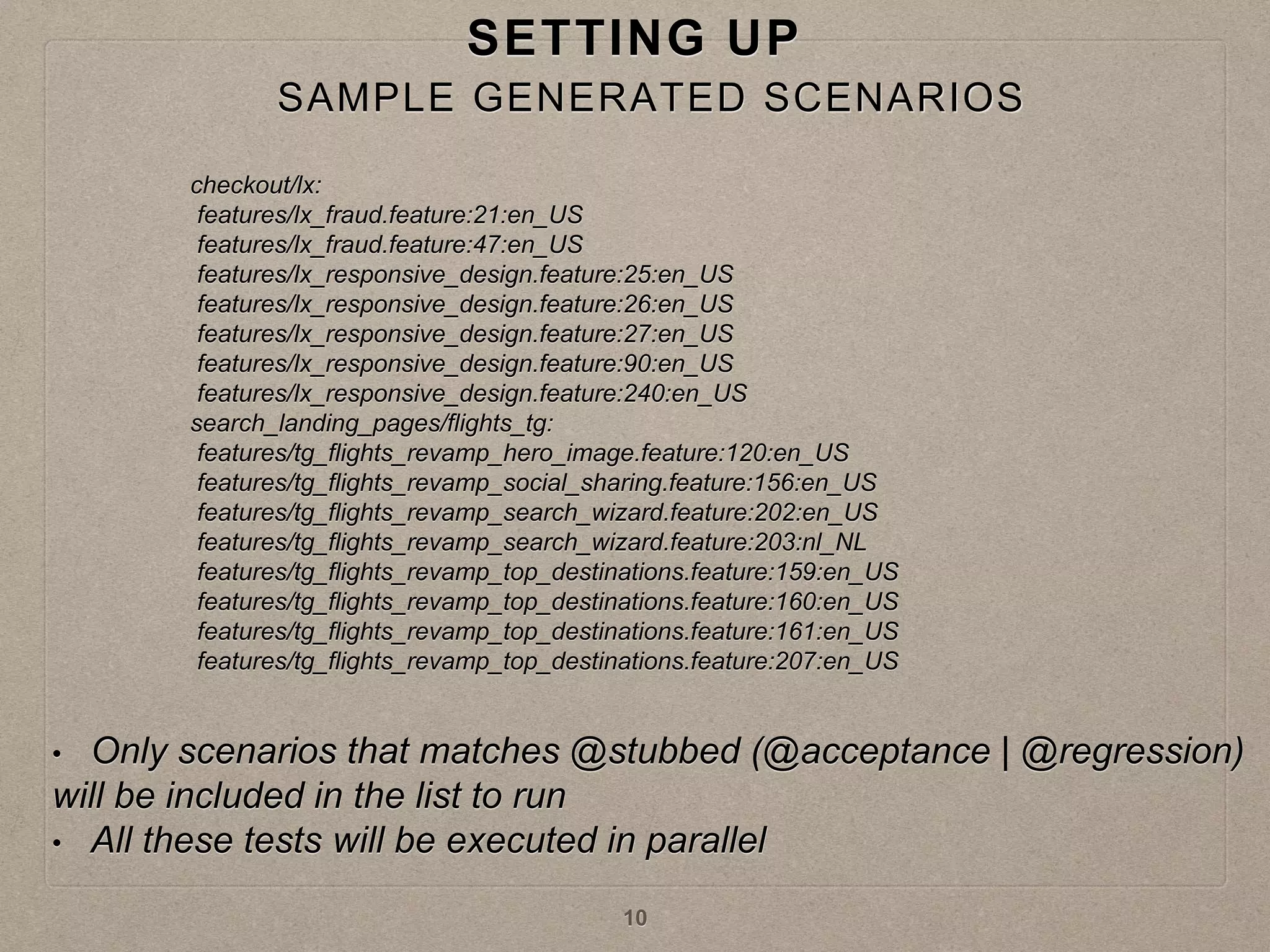

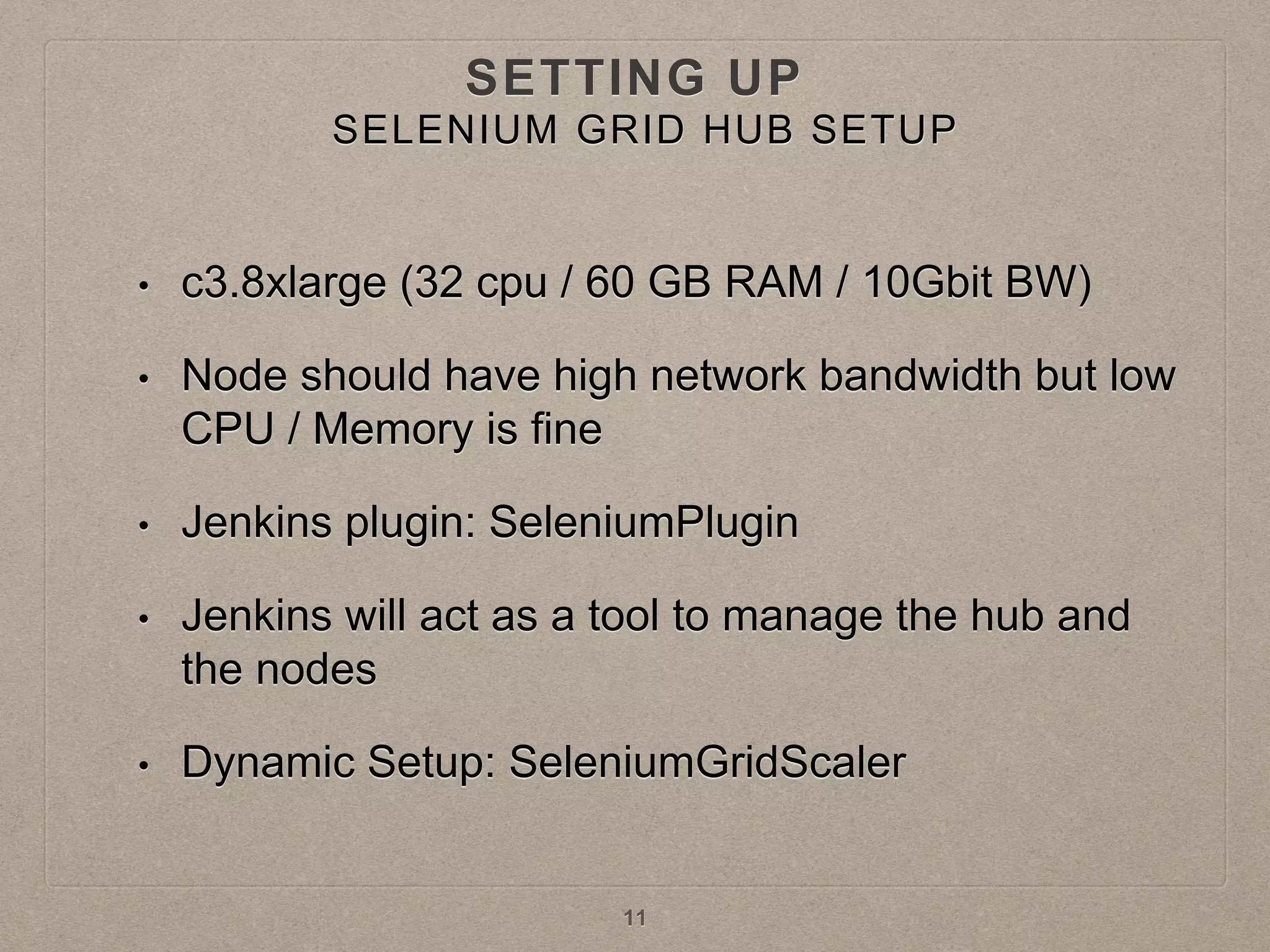

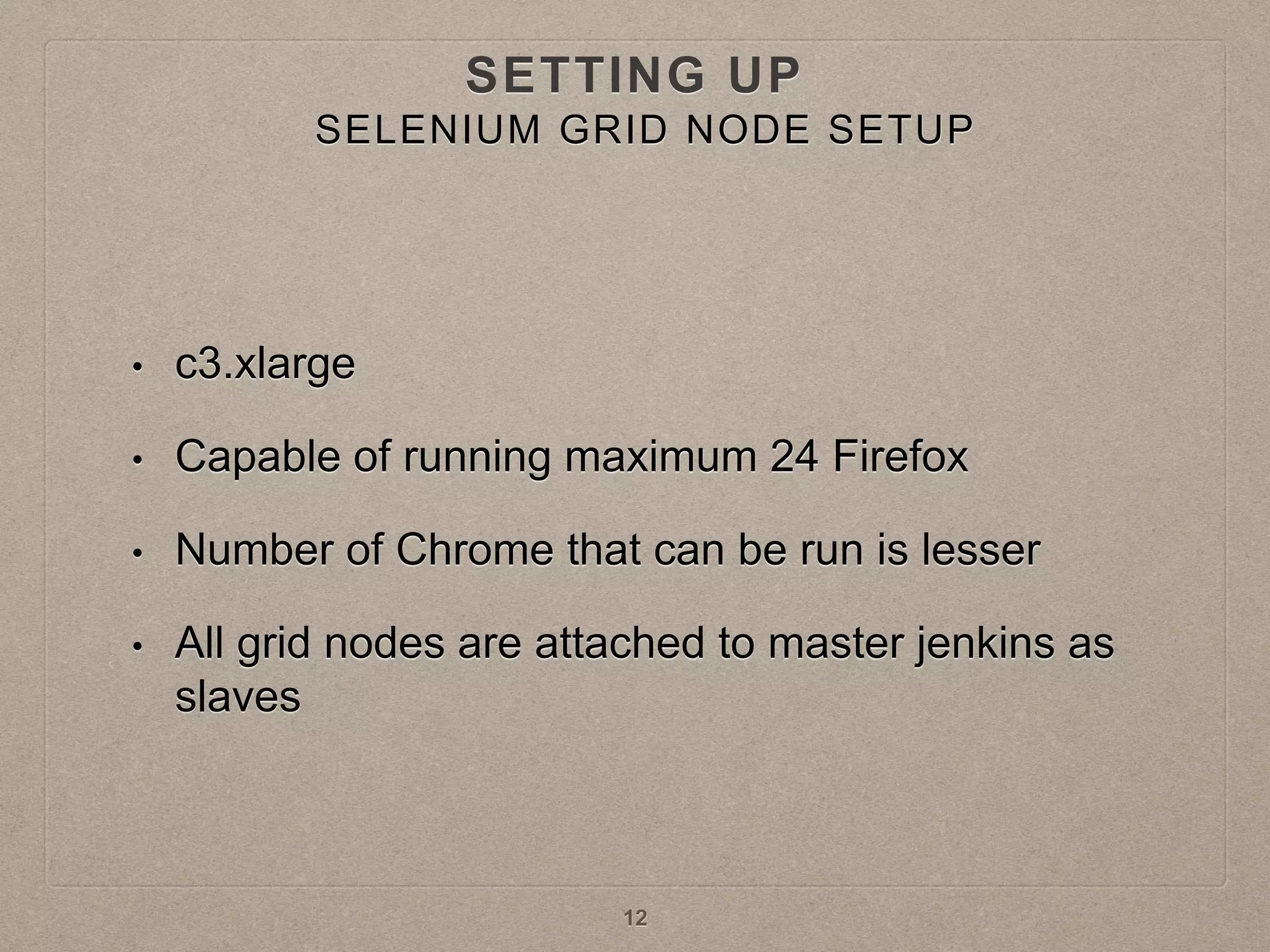

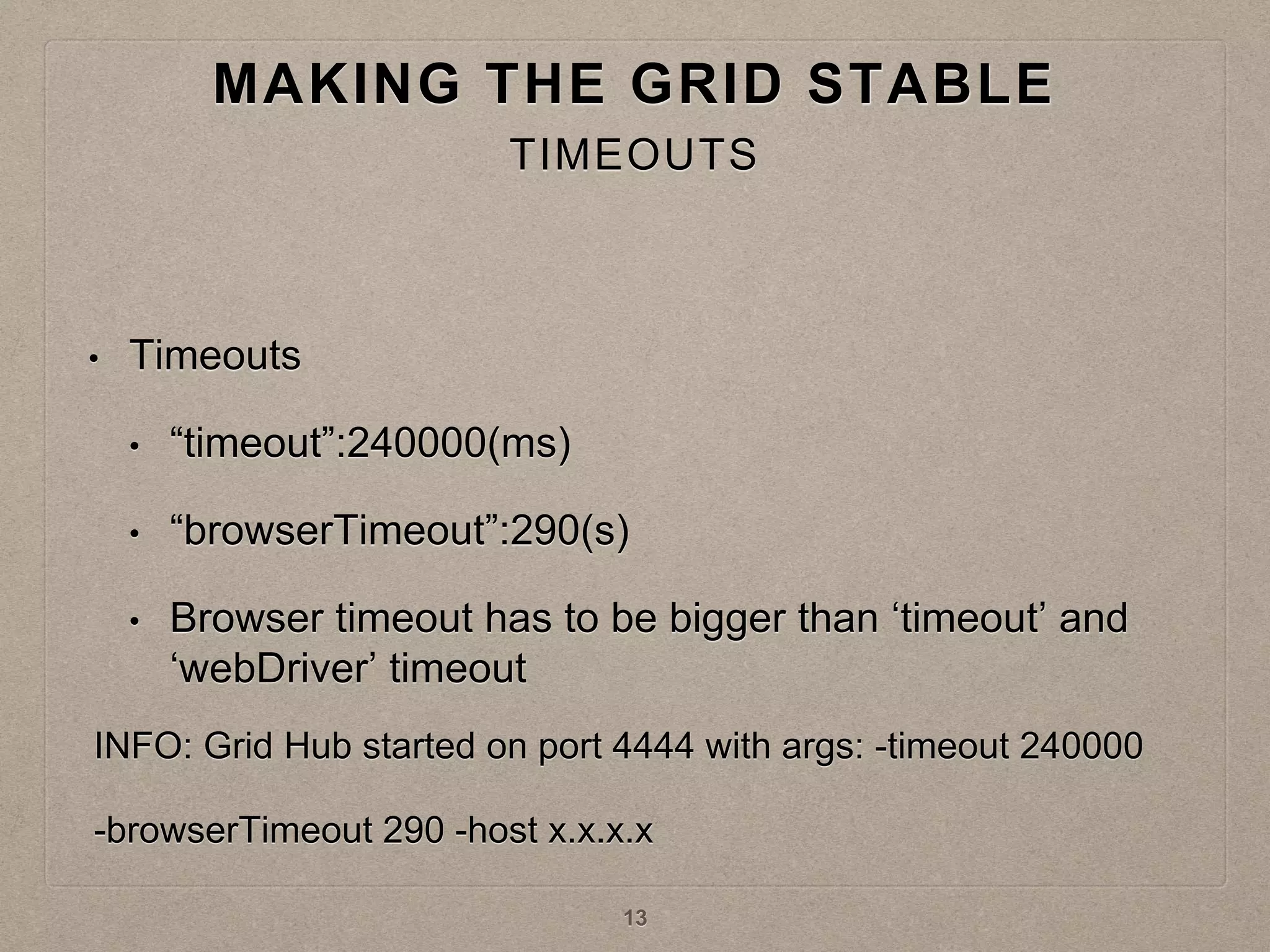

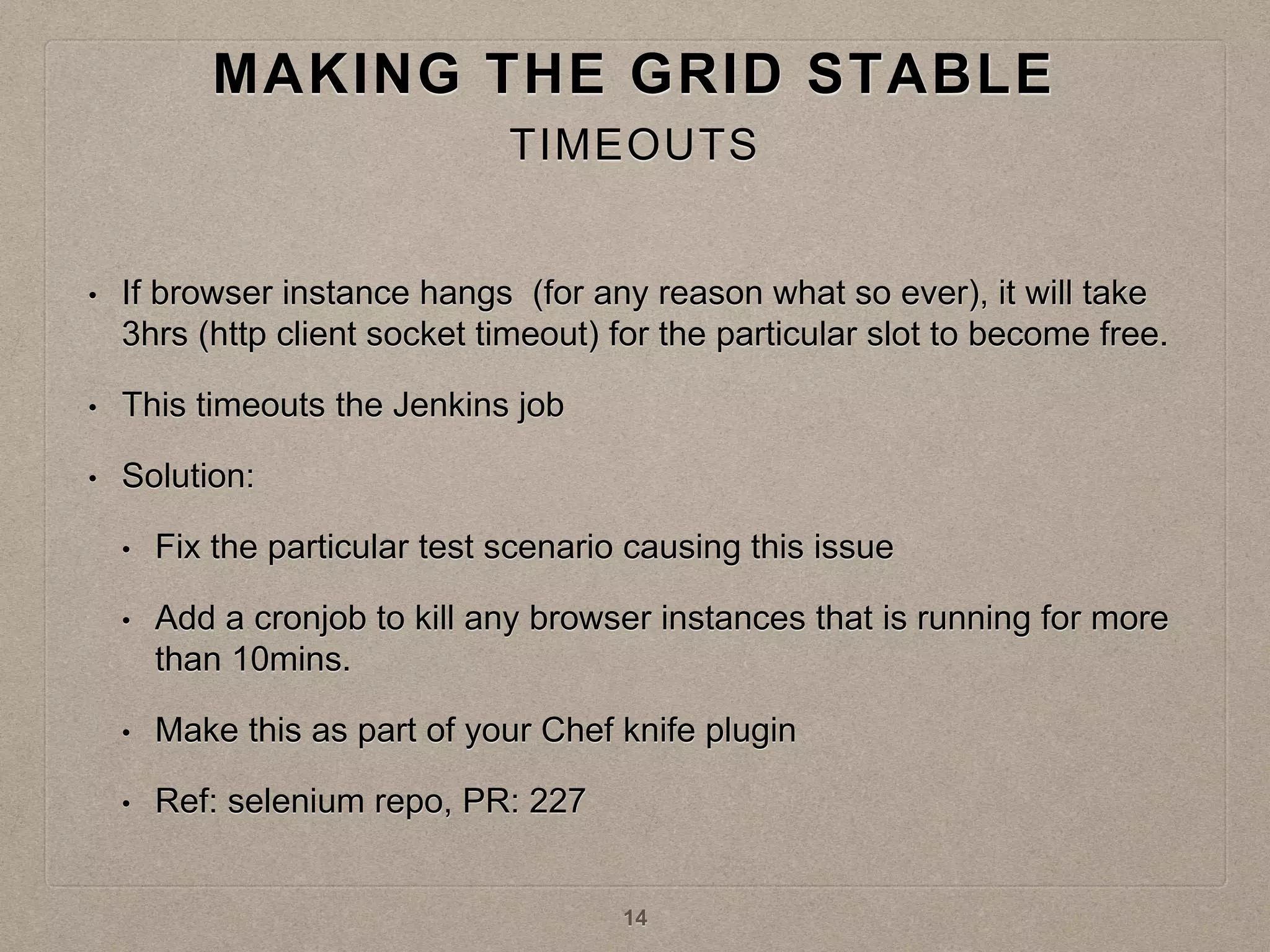

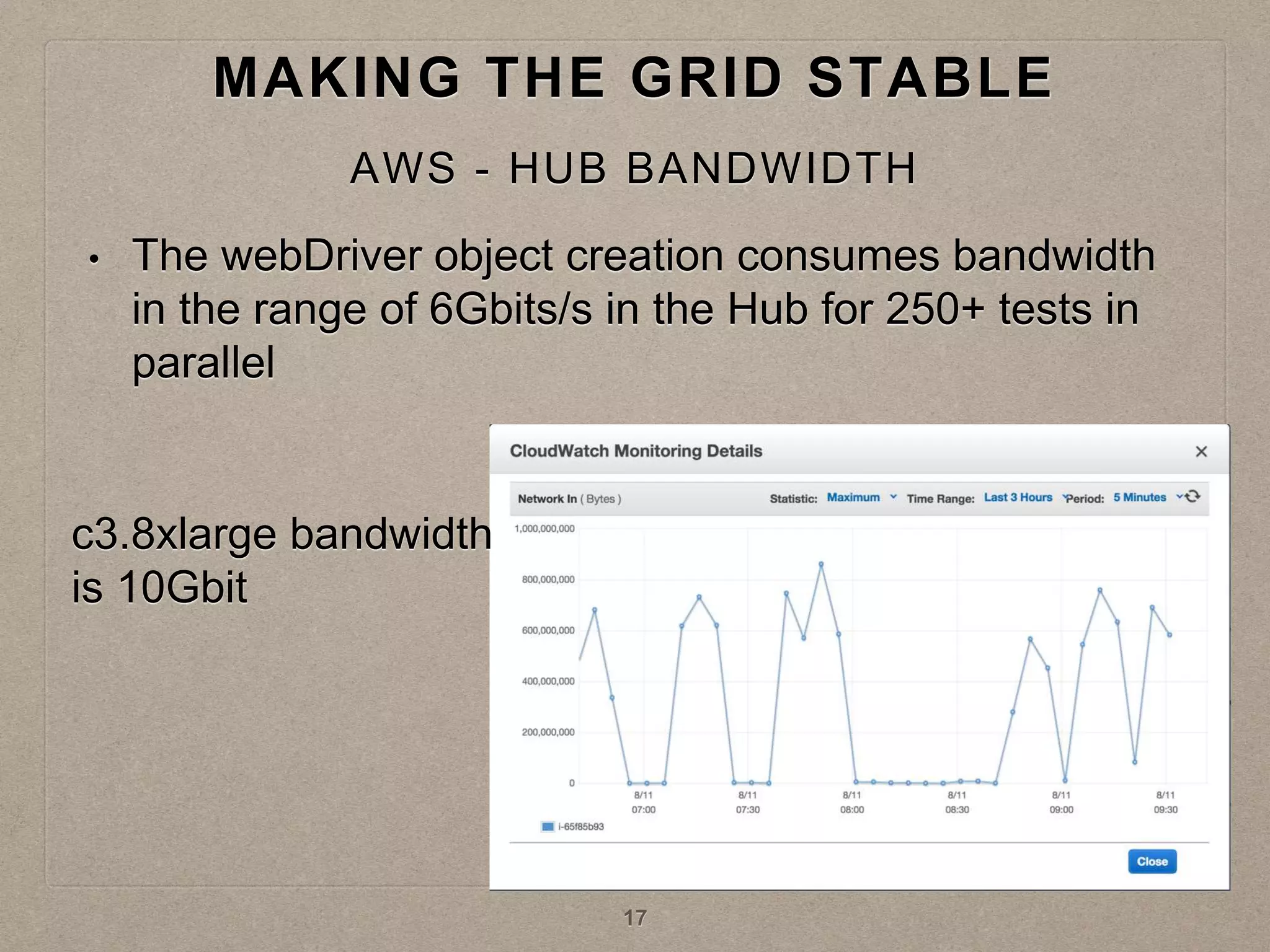

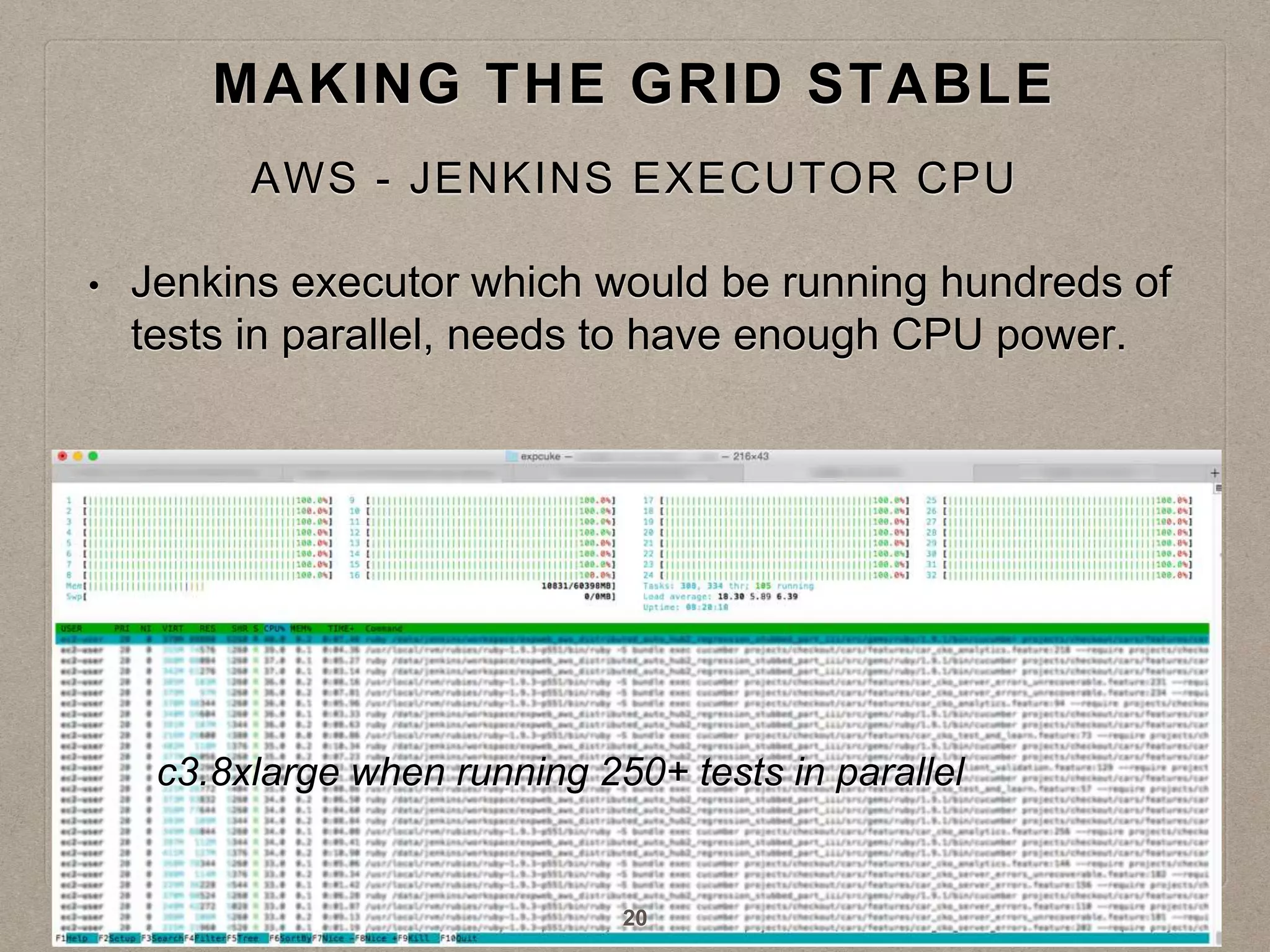

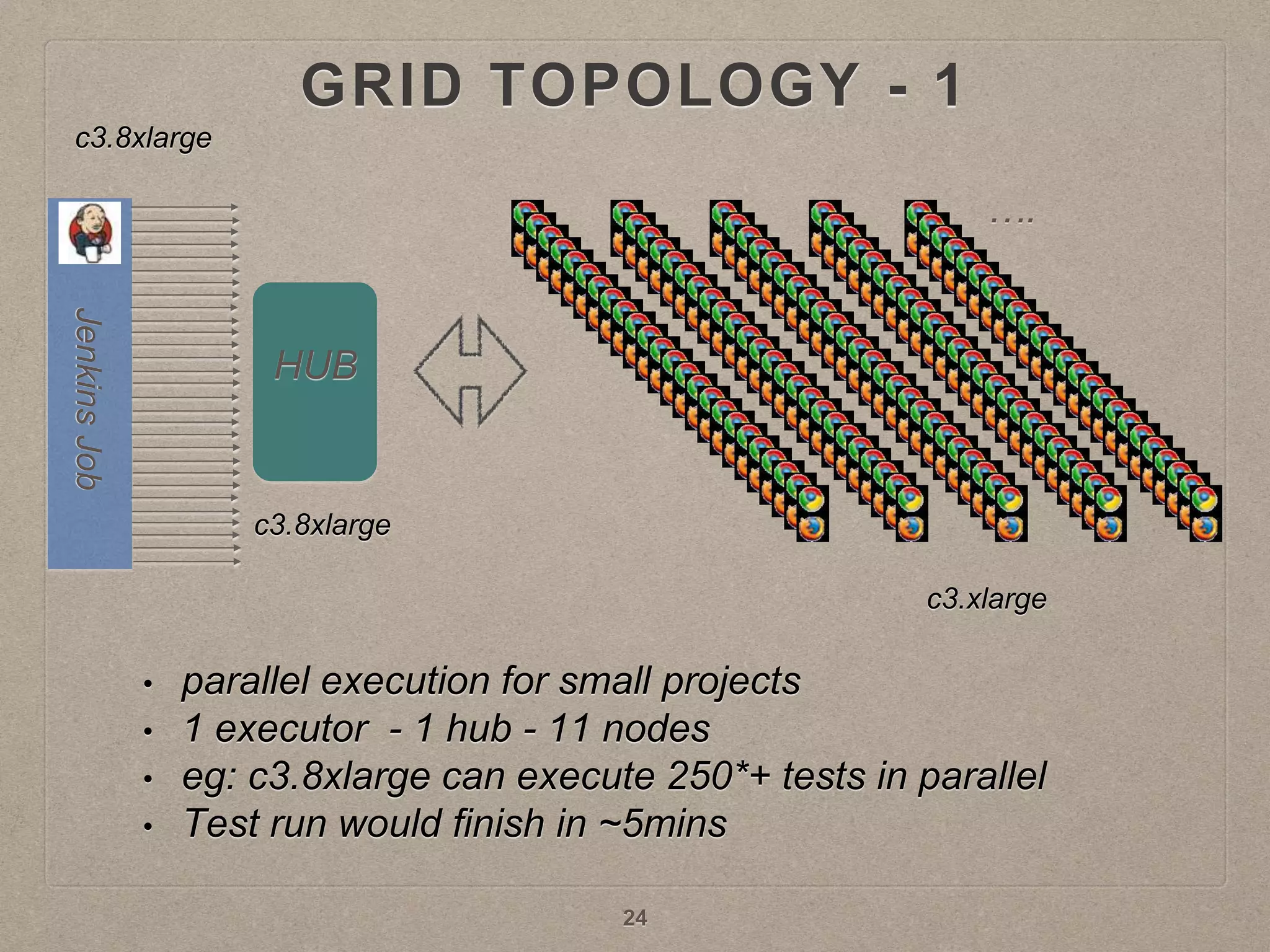

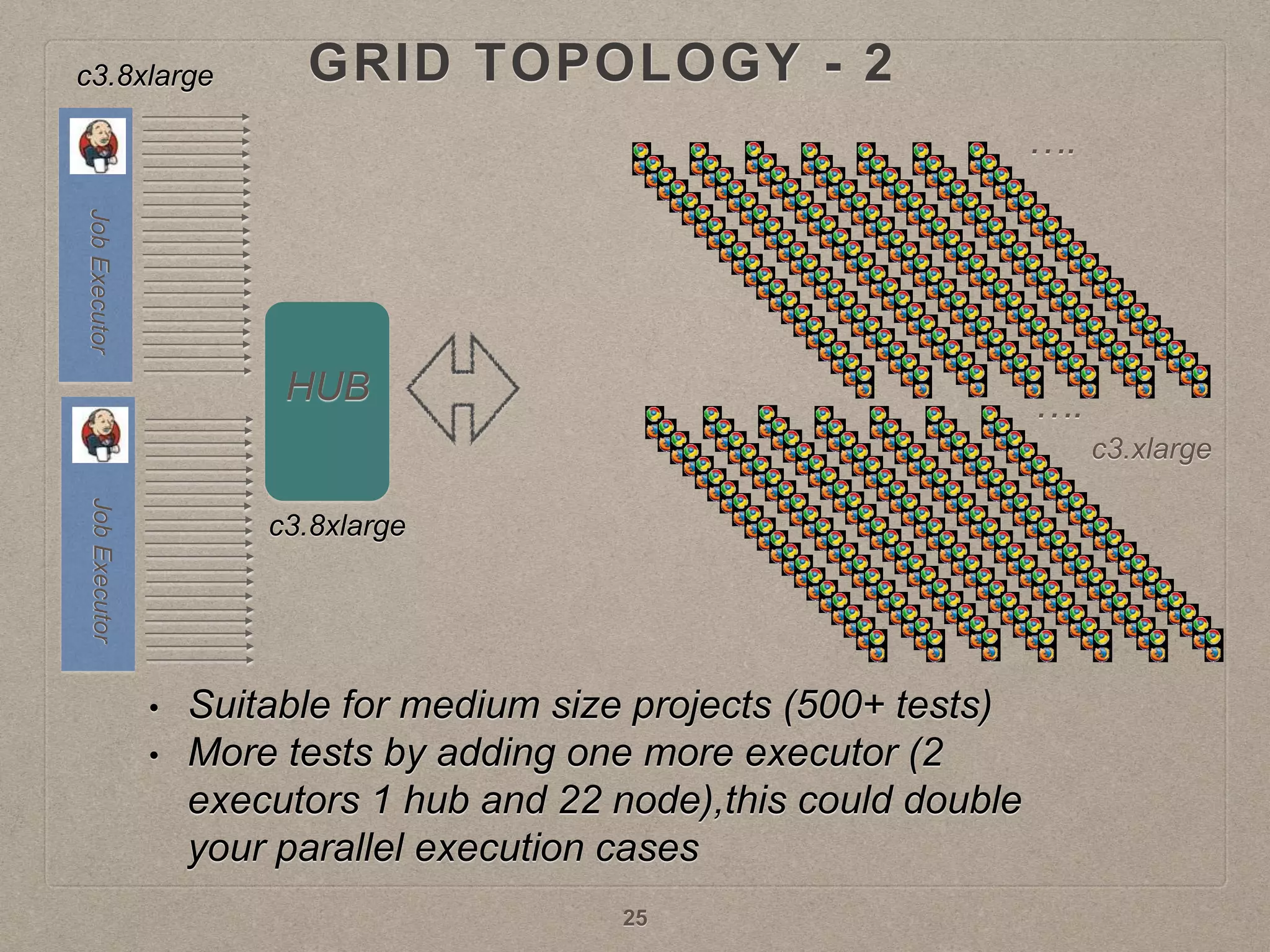

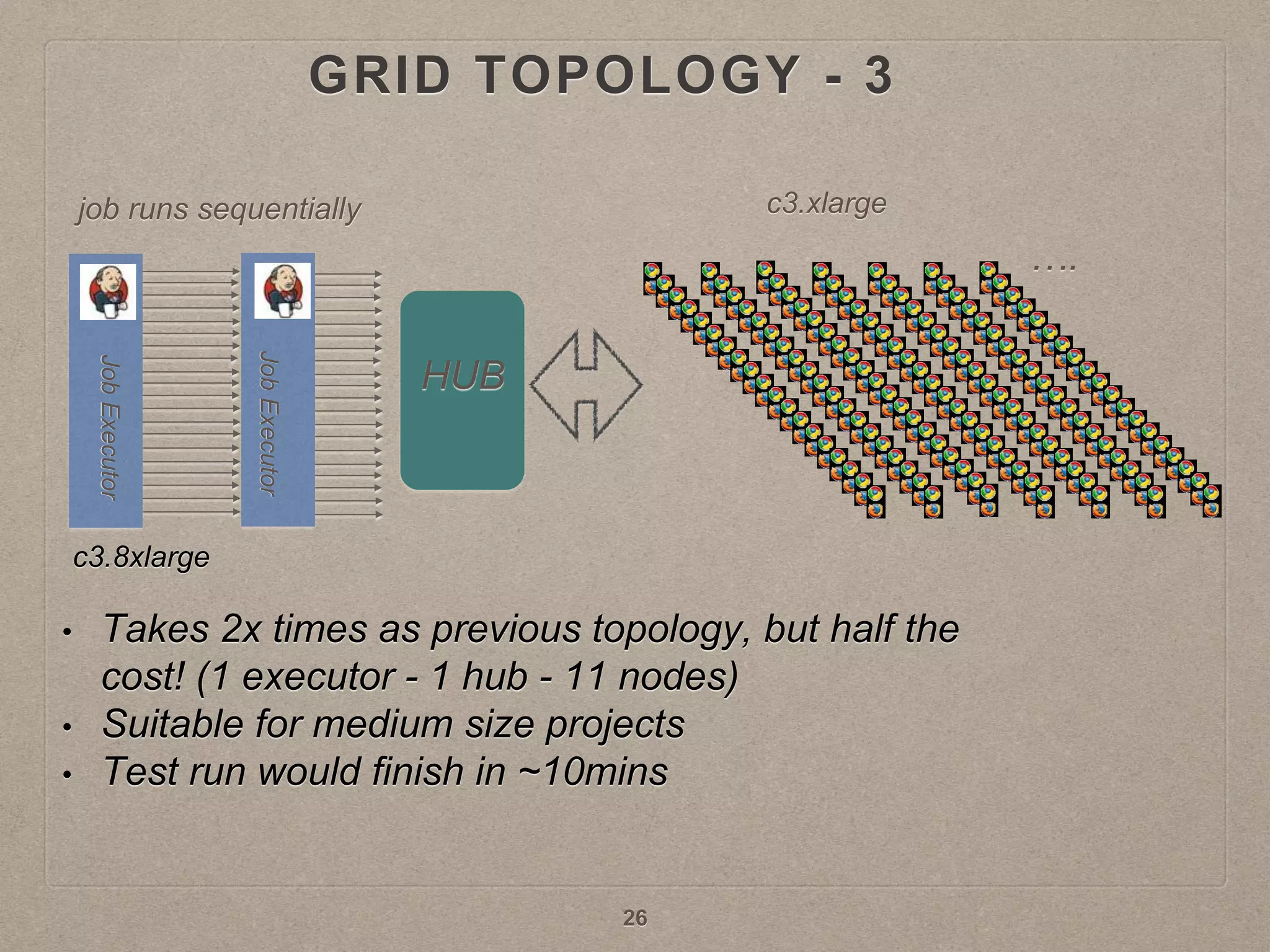

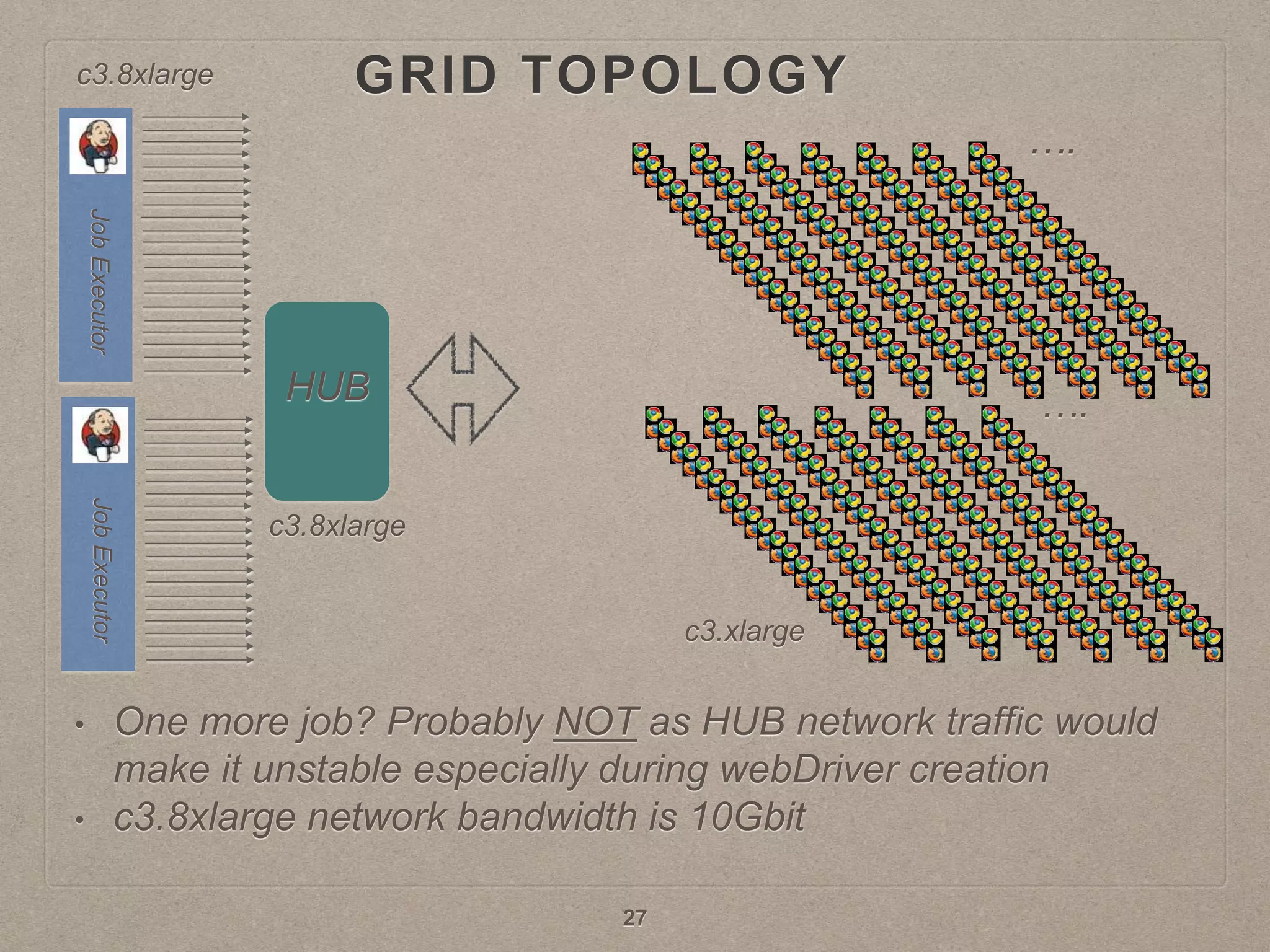

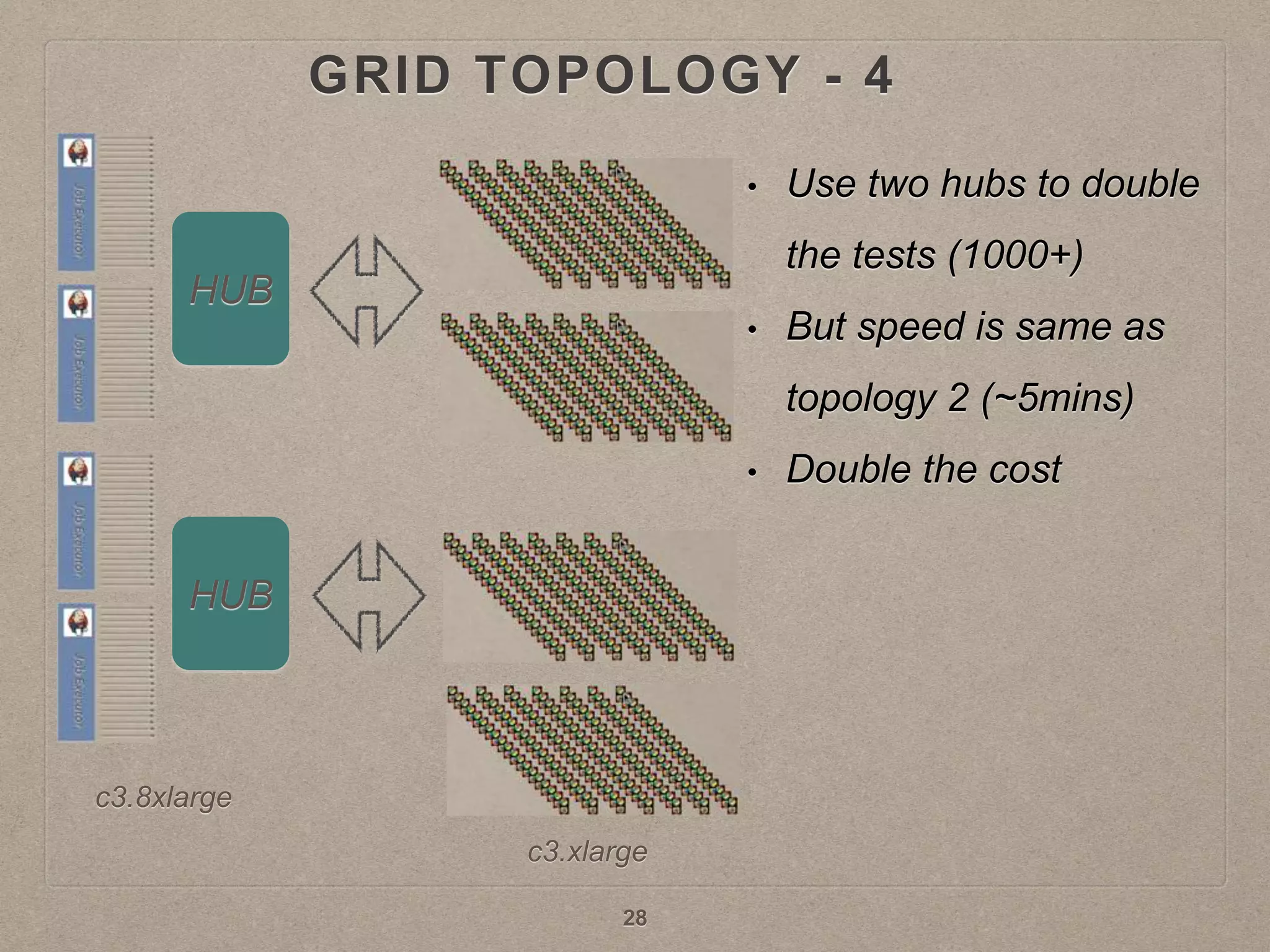

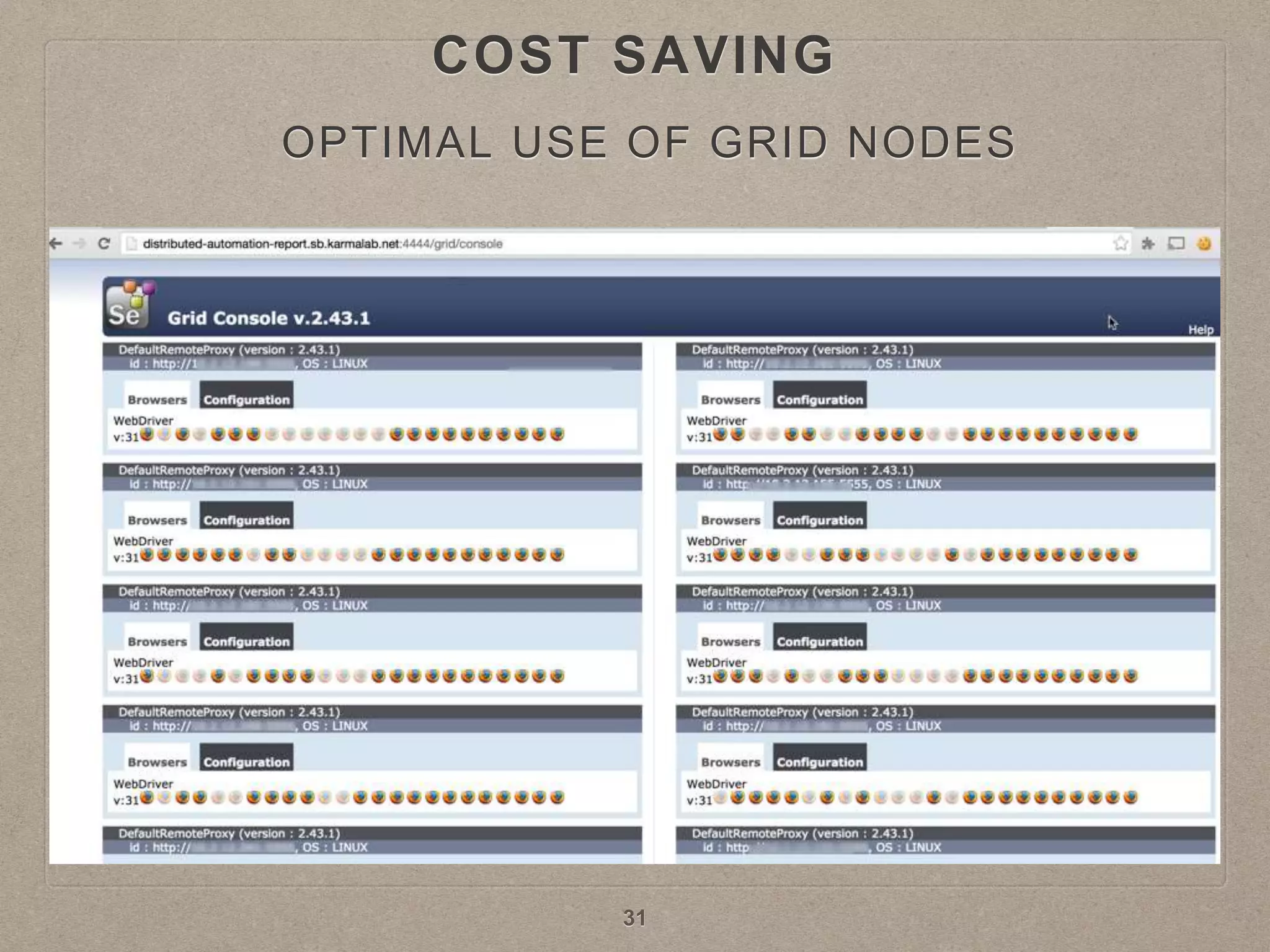

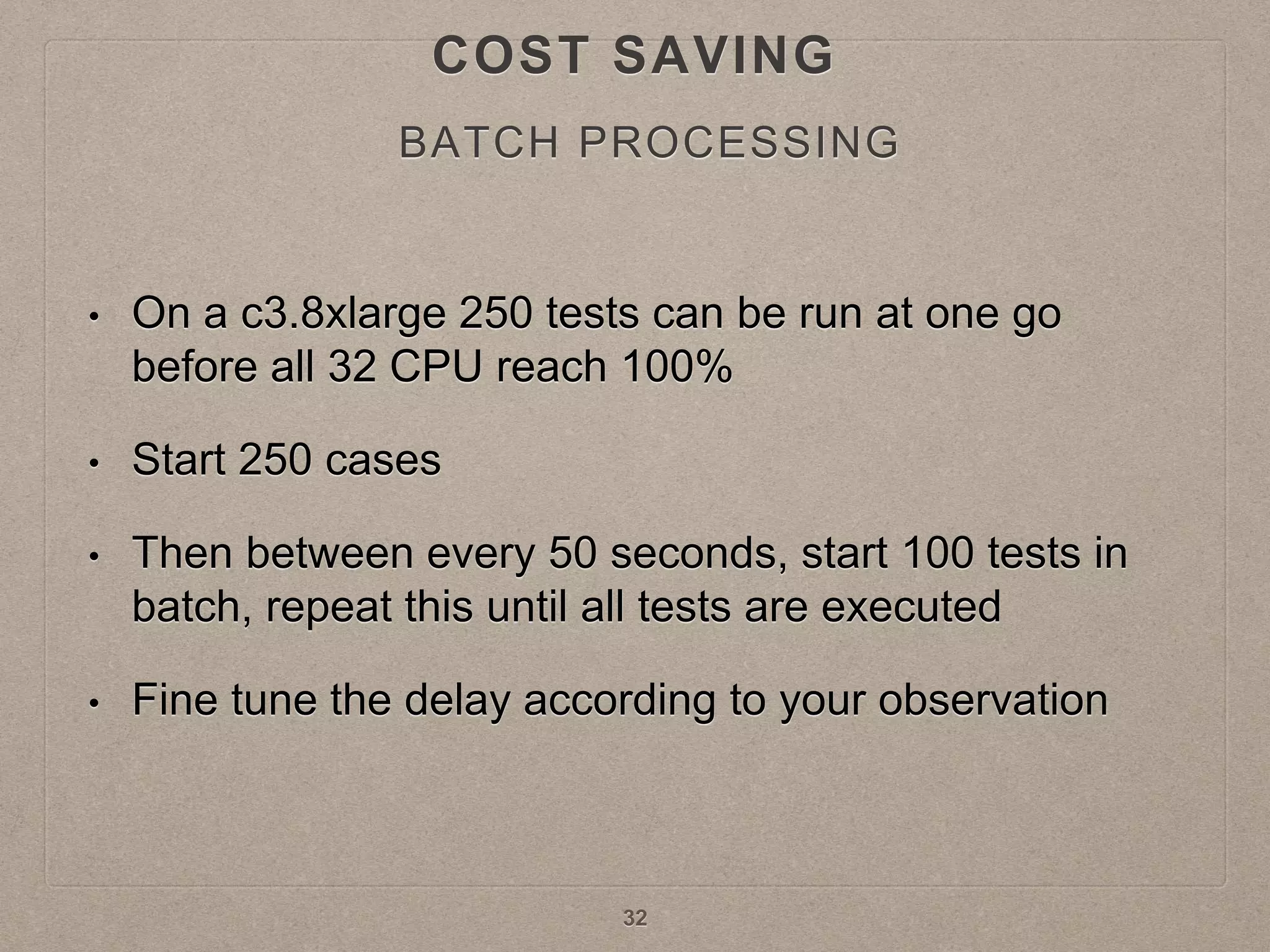

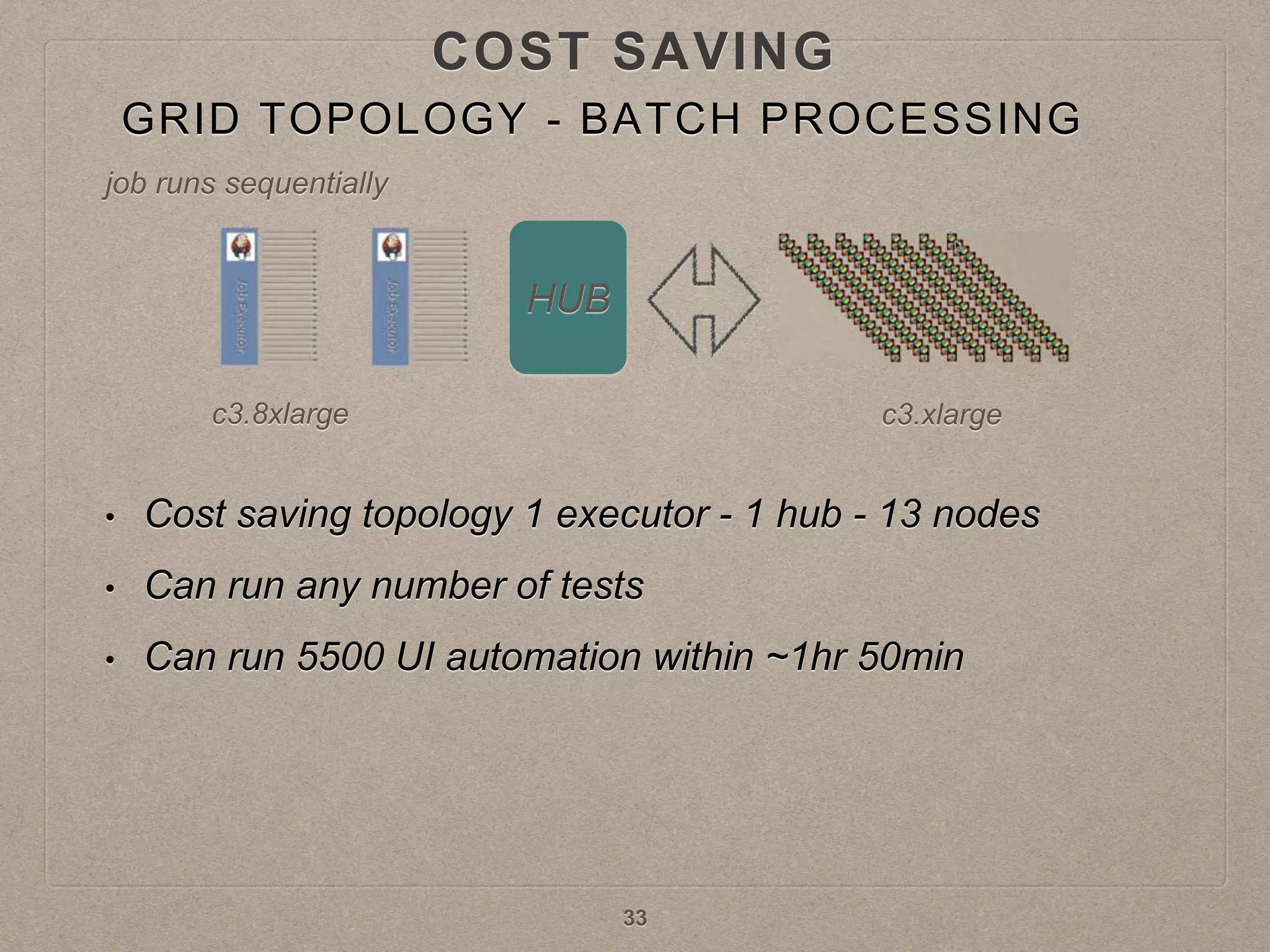

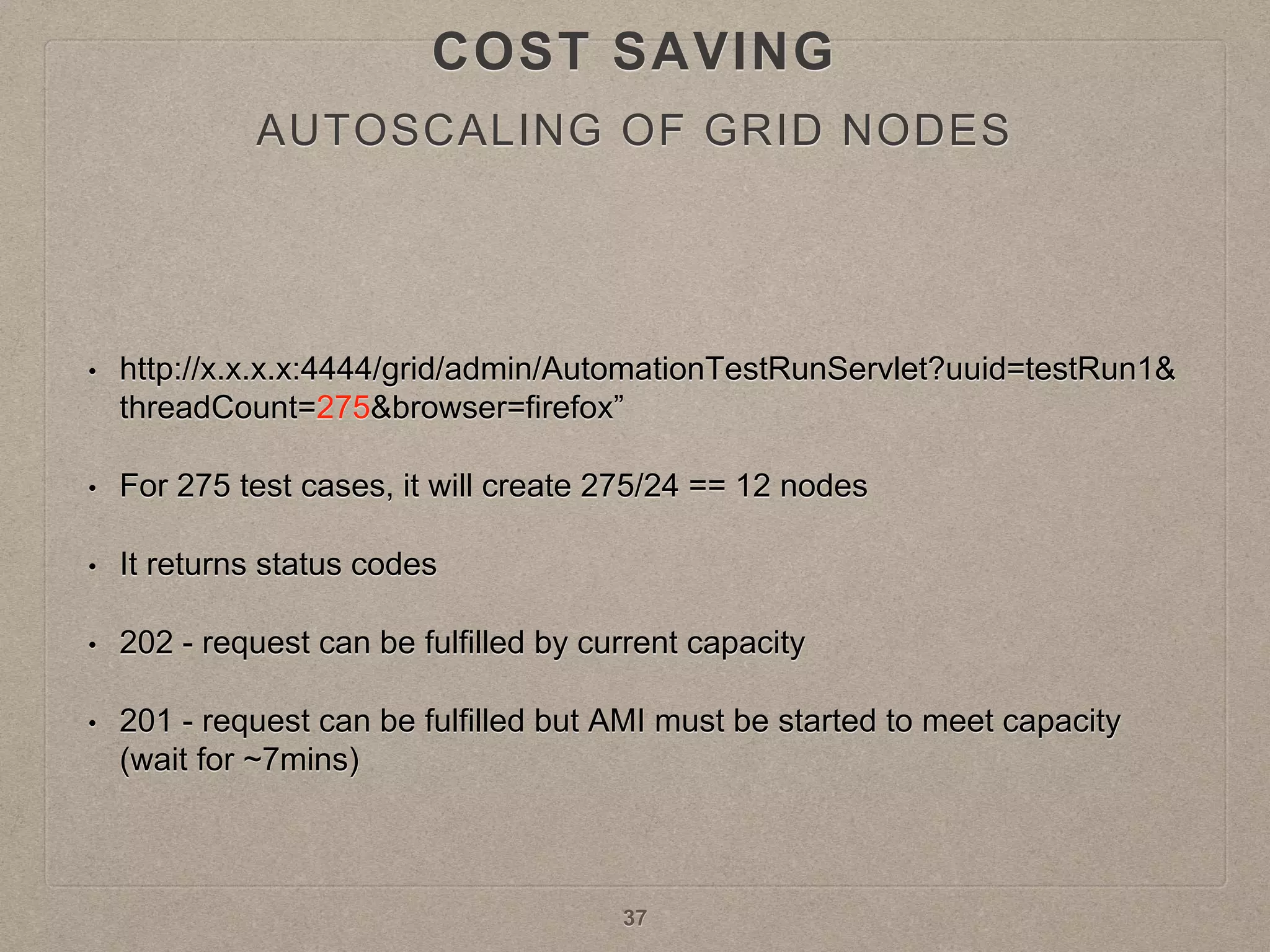

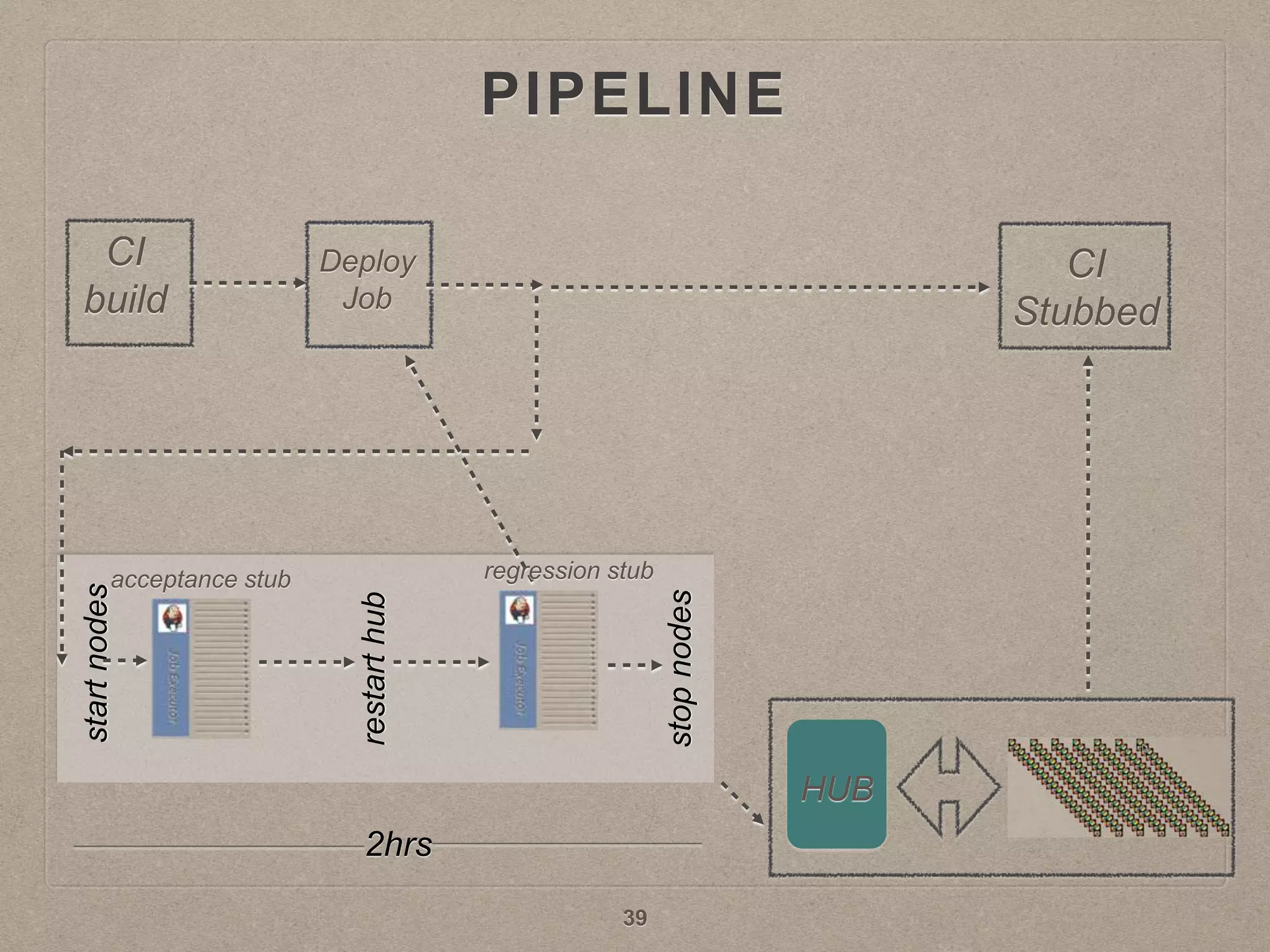

The document describes how to distribute UI automation across Selenium Grid on AWS with autoscaling, which shortens suite runtime and speeds up feedback cycles. It outlines strategies for setting up a stable grid environment and managing resources, along with cost-saving measures such as optimizing node usage and autoscaling the grid. It also covers best practices for running tests in parallel, and for reporting and monitoring automation results.
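The autoscaling idea mentioned above can be illustrated with a minimal sketch. It is not the approach from the slides. It only shows one plausible way to turn grid demand into a target node count. The function name `desired_node_count`, its parameters, and the default bounds are all hypothetical and chosen for illustration; a real setup would read the queue and session figures from the grid and pass the result to whatever scaling mechanism is in use.

```python
import math


def desired_node_count(queued_sessions, active_sessions, sessions_per_node,
                       min_nodes=1, max_nodes=10):
    """Return how many grid nodes to run for the current demand.

    All names and defaults here are assumptions for illustration:
    - queued_sessions: test sessions waiting for a free browser slot
    - active_sessions: test sessions currently running
    - sessions_per_node: browser slots one node can serve in parallel
    - min_nodes / max_nodes: bounds that keep the grid warm and cap cost
    """
    if sessions_per_node <= 0:
        raise ValueError("sessions_per_node must be positive")
    if min_nodes > max_nodes:
        raise ValueError("min_nodes must not exceed max_nodes")

    # Total demand is everything running plus everything waiting.
    demand = queued_sessions + active_sessions

    # Round up so a partially filled node is still provisioned.
    needed = math.ceil(demand / sessions_per_node)

    # Clamp to the configured bounds.
    return max(min_nodes, min(max_nodes, needed))
```

With these assumed defaults, an idle grid stays at the minimum node count, and a large backlog is capped at `max_nodes`, which is where the cost saving comes from: nodes are only kept running while there is demand for them.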