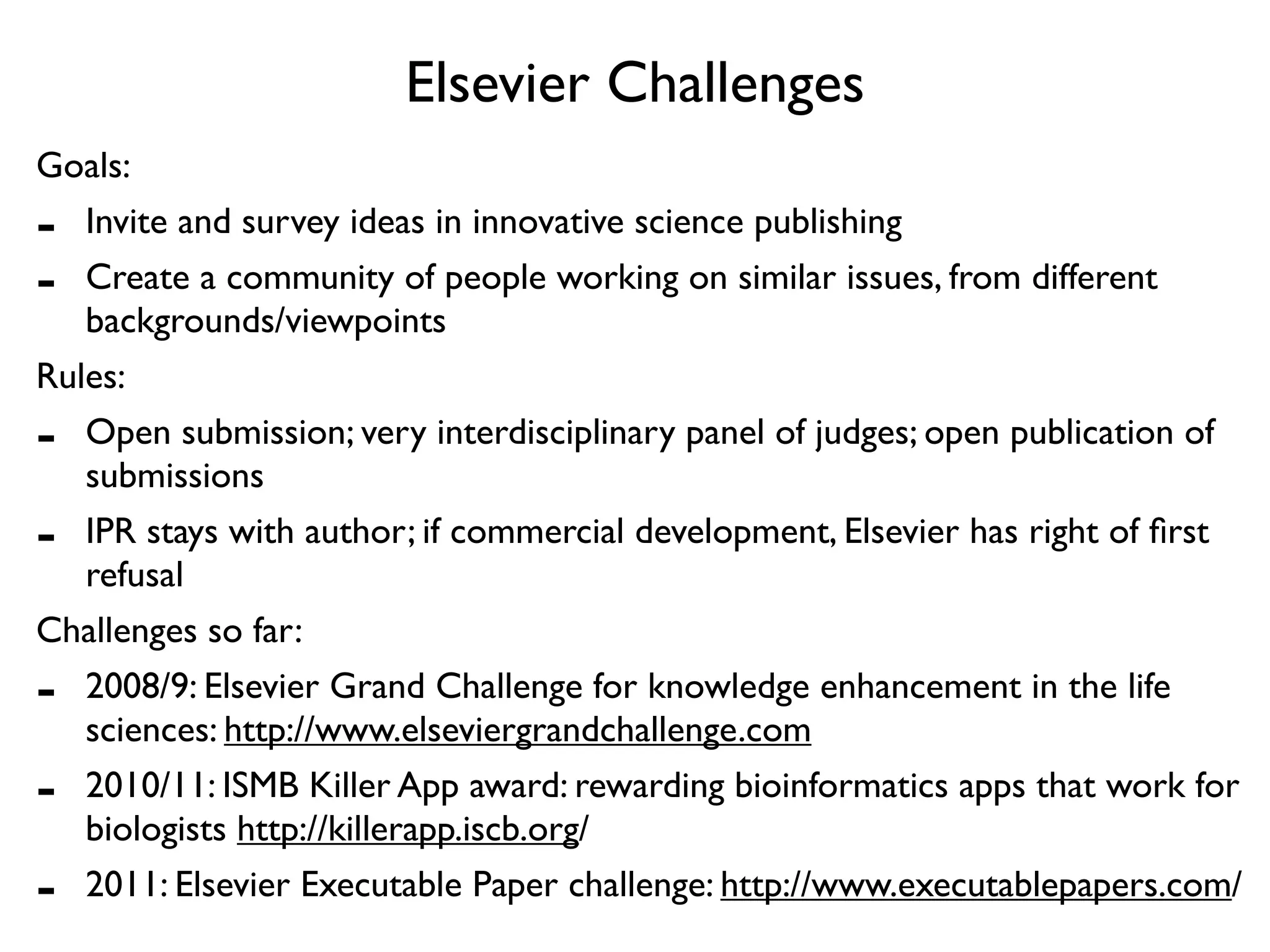

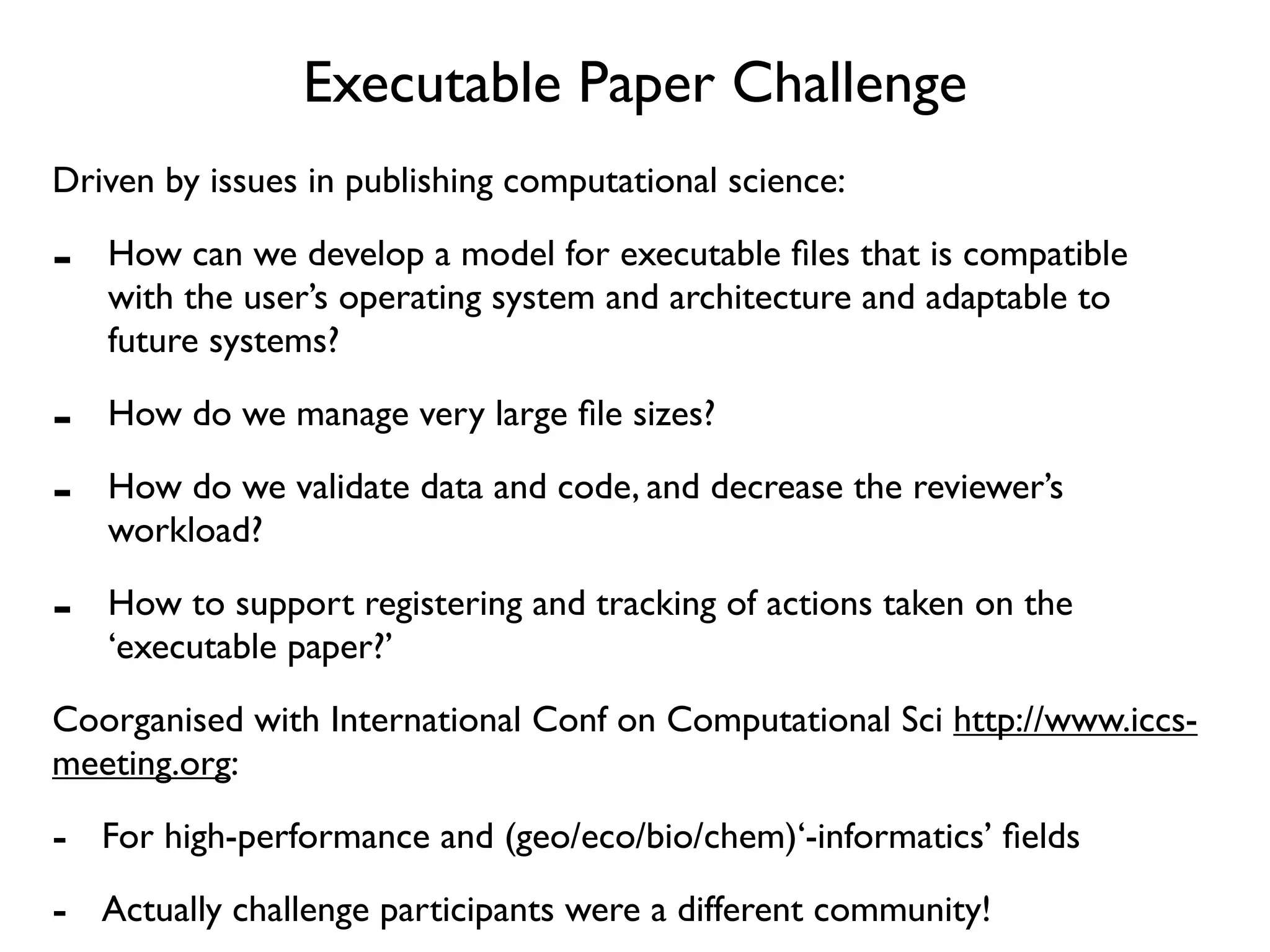

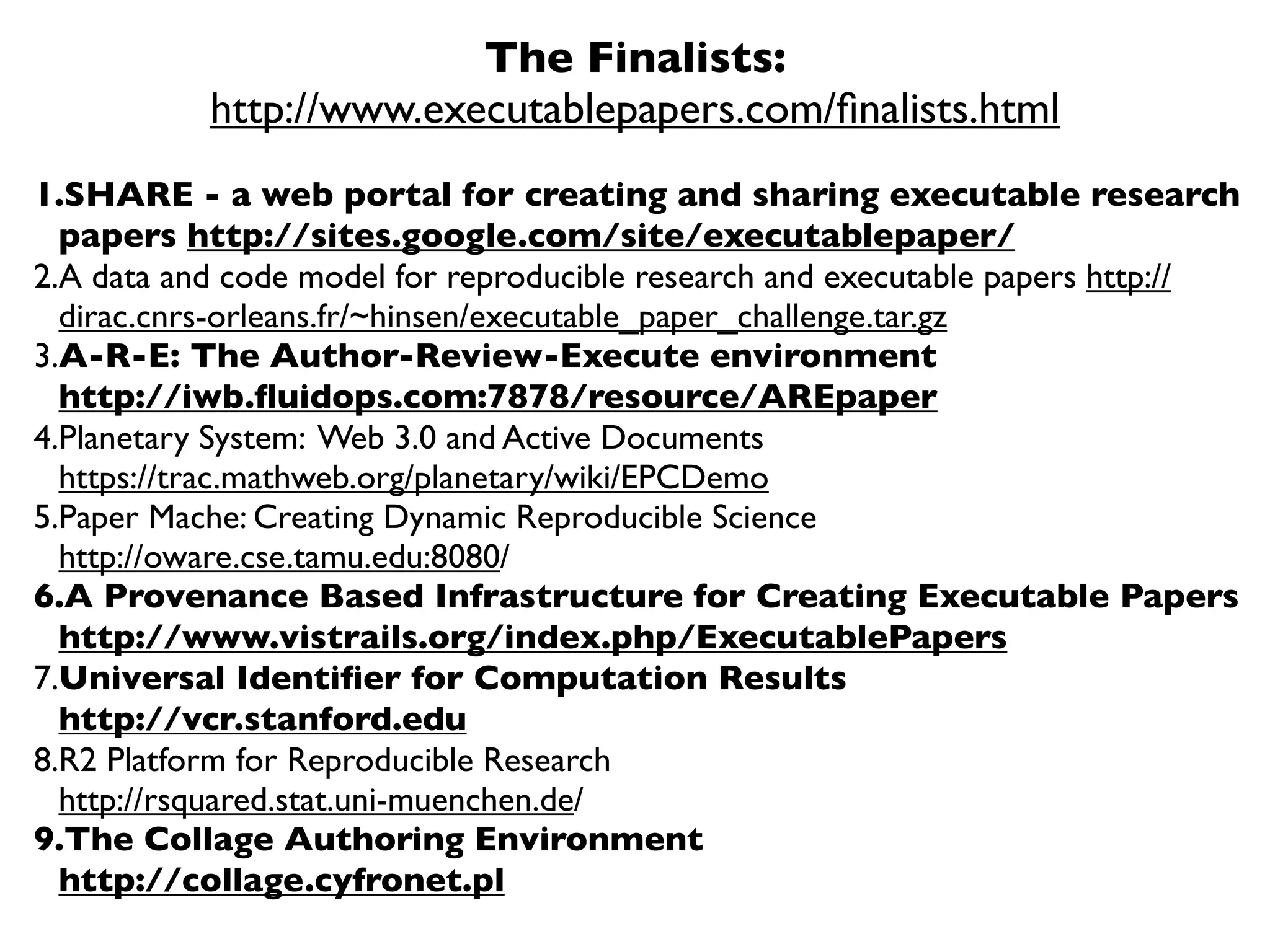

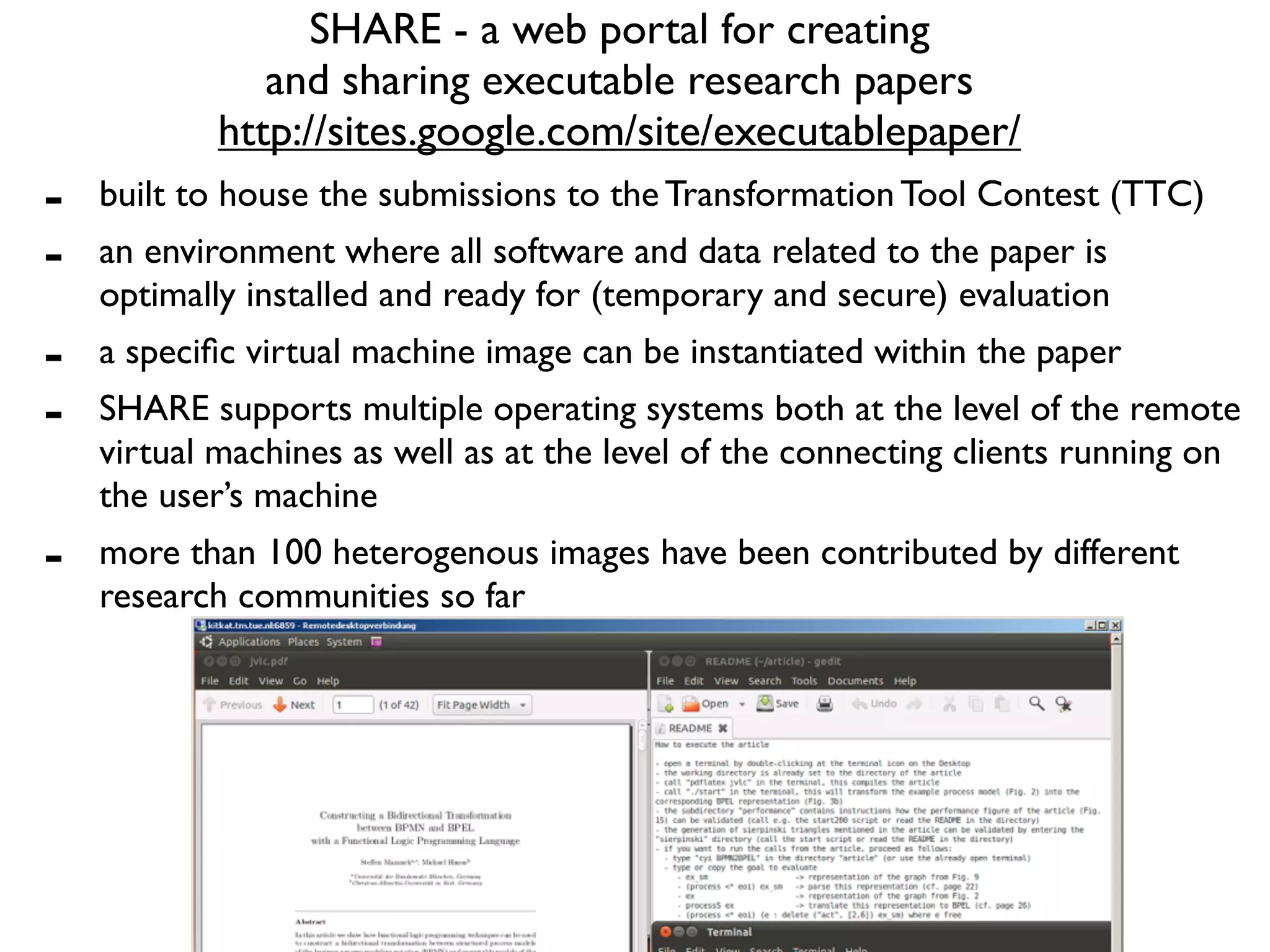

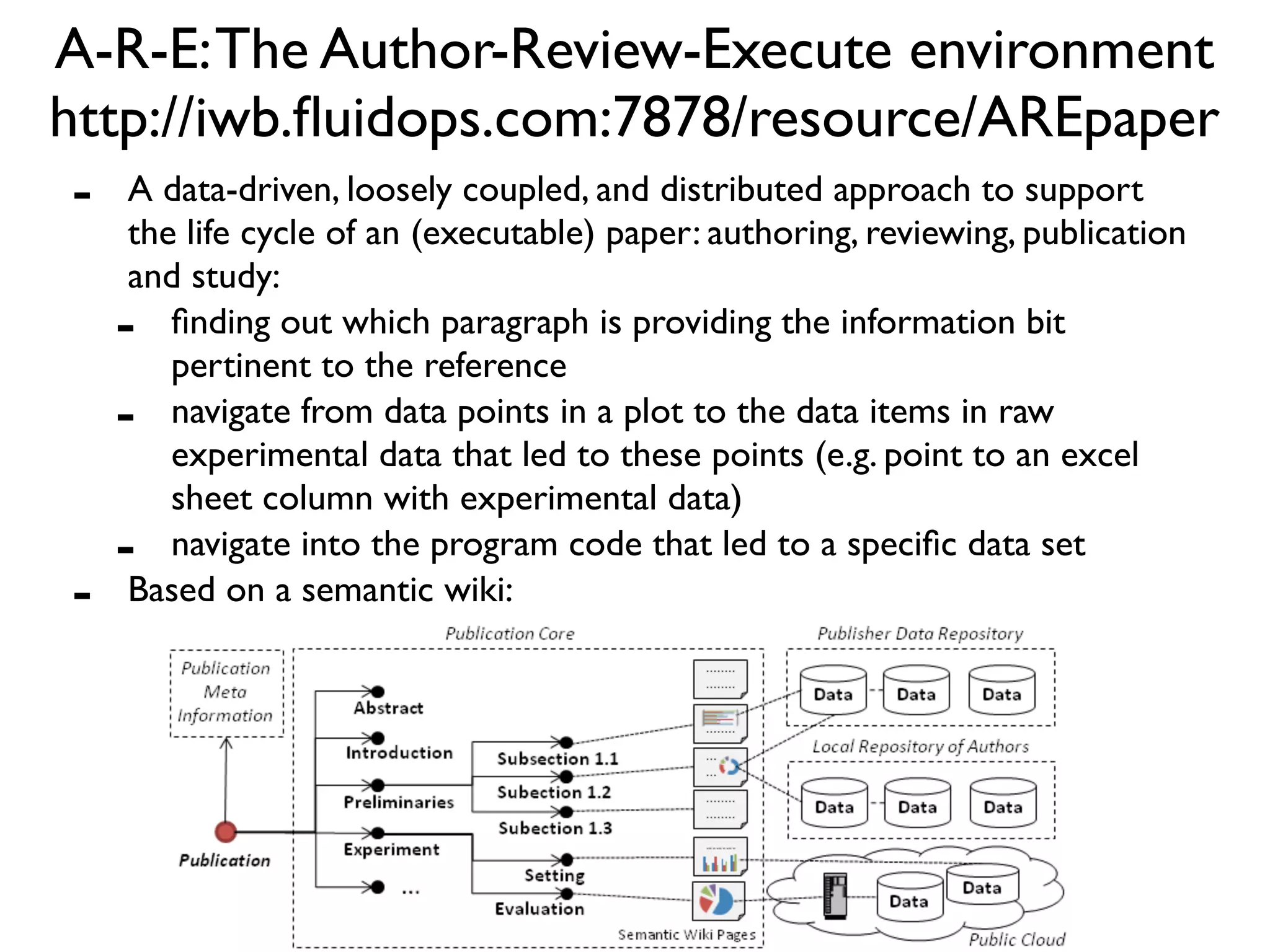

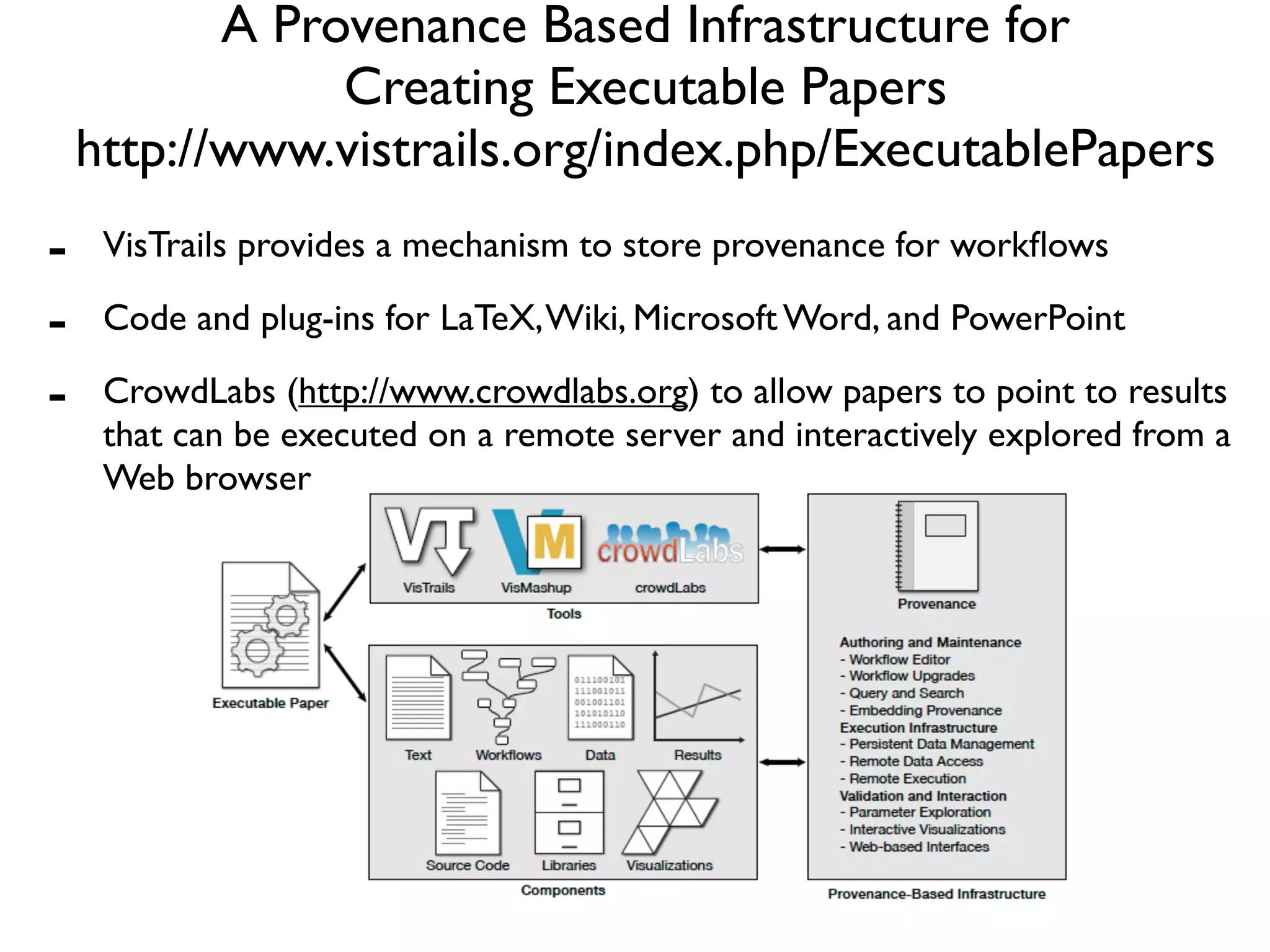

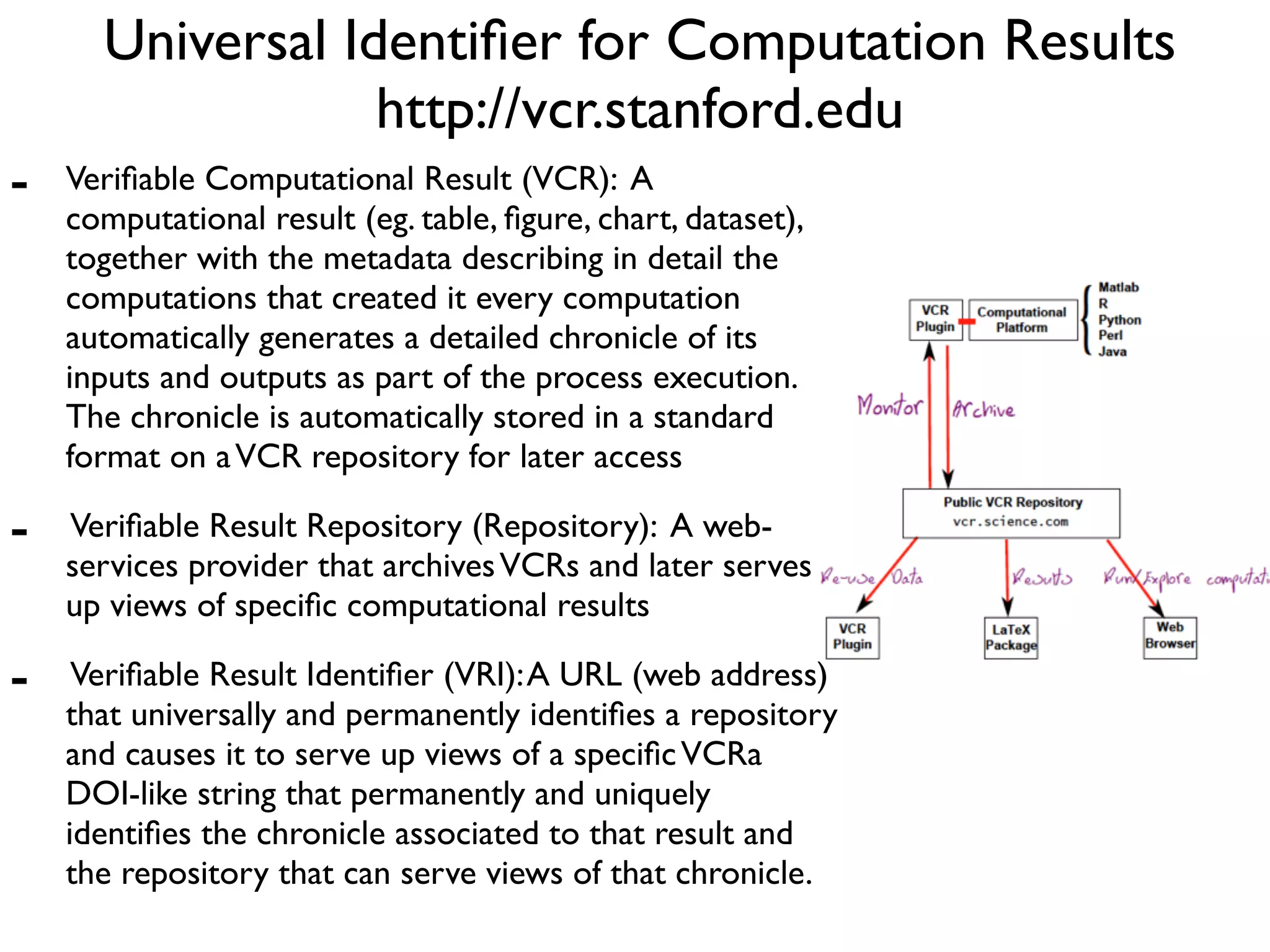

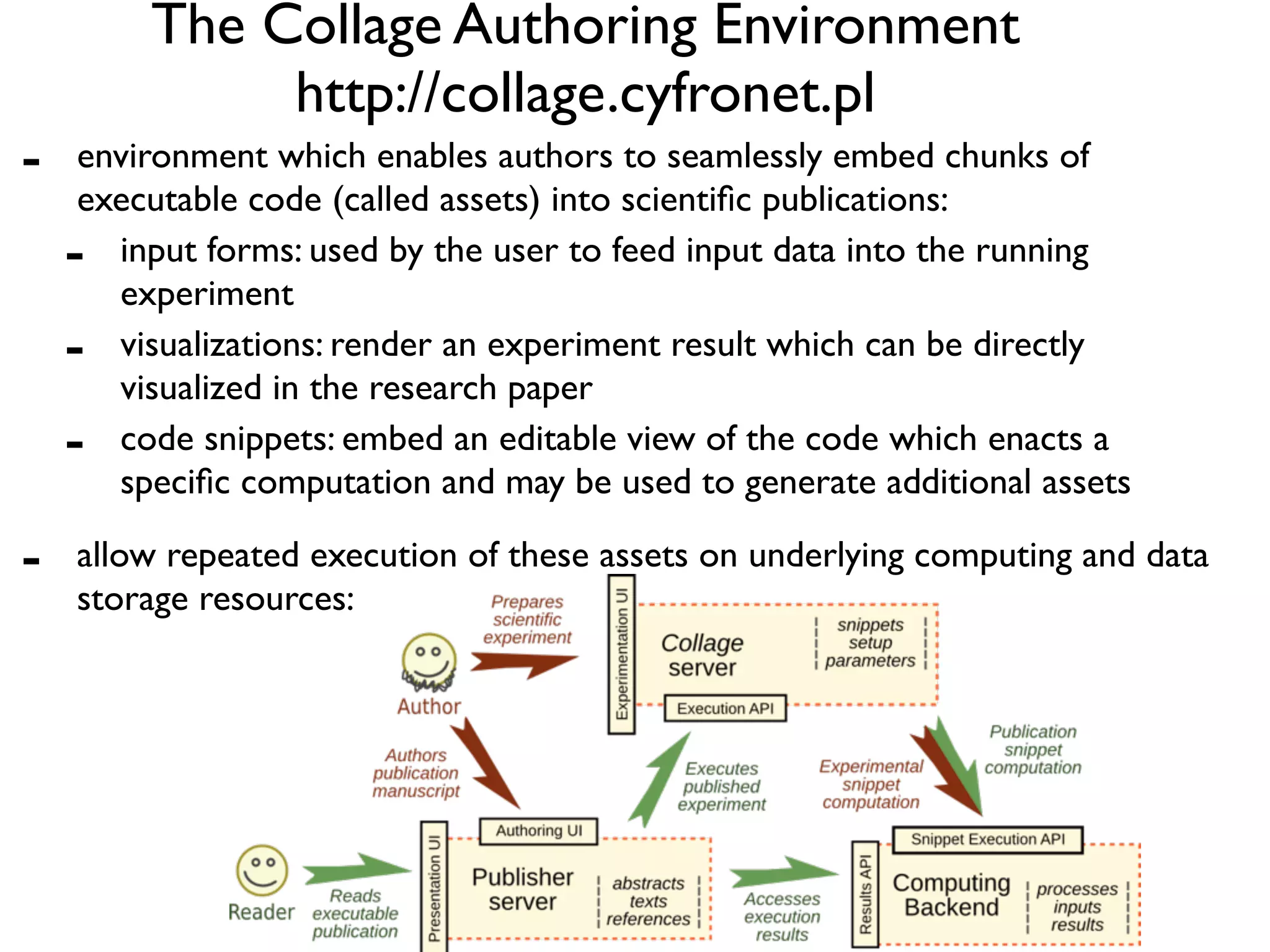

The document discusses the Elsevier Executable Papers Challenge, which aims to develop models for publishing computational science papers that can be executed. It gives an overview of several finalist submissions that built platforms and environments for creating executable papers. These include SHARE, which hosts virtual machines for paper submissions, and A-R-E, which supports the full paper lifecycle from authoring to publication. The document advocates executable journals, in which submitted papers include working code that runs on a shared platform and remains available for later papers to build on. This communicates methods clearly and reduces duplicated work.

![Dave De Roure quote on executable papers](https://image.slidesharecdn.com/executablepapers-110617160838-phpapp01/75/Executable-papers-11-2048.jpg)

In other words:

> “I like the idea of [...] a research object corresponding to a PhD thesis sitting on the (digital) library shelf and then being re-executed as new data comes along. So the thesis sits there and new results (or papers, or research objects) pop out. I like this example because it involves tying down the method and letting the data flow, instead of the widely held view that the data sits there and methods are applied to it.
>
> [...]
>
> These papers then become a way of distributing data and methods in a highly usable and user-centric way [...]. So scientists don't need to download and install tools and learn user interfaces. They just interact with the published executable papers...”
>
> Dave De Roure, email to Wf4ever group