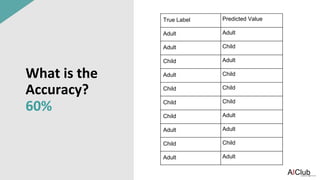

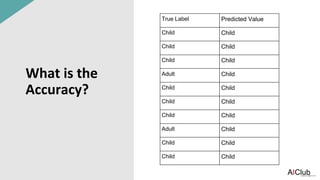

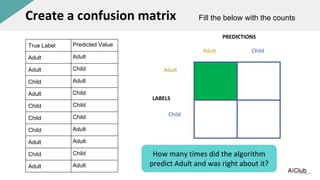

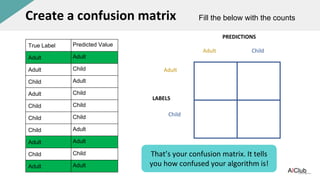

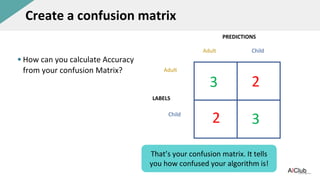

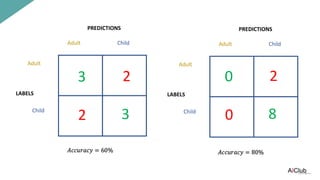

This document discusses accuracy and confusion matrices for evaluating classification models. It explains that accuracy alone is not enough to evaluate a model, because a single number does not show which classes the model gets wrong, and that a confusion matrix provides this extra information. A confusion matrix counts the model's predictions against the actual labels, so that each cell holds the number of examples with a given actual class and a given predicted class. The document provides an example confusion matrix and shows how to calculate accuracy from it: sum the diagonal (the correct predictions) and divide by the total number of predictions.
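The calculation described above can be sketched in a few lines of Python. This is a minimal illustration, not the document's own example: the function name `accuracy_from_confusion_matrix` and both matrices are hypothetical, and the layout (rows are actual classes, columns are predicted classes) is an assumption.

```python
def accuracy_from_confusion_matrix(matrix):
    """Return accuracy as (sum of the diagonal) / (sum of all cells).

    Assumes a square matrix given as a list of rows, where
    matrix[i][j] counts examples of actual class i predicted as class j.
    """
    total = sum(sum(row) for row in matrix)
    if total == 0:
        raise ValueError("confusion matrix contains no predictions")
    # Diagonal cells are the cases where predicted class == actual class.
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return correct / total


# Hypothetical 3-class matrix (made-up counts, for illustration only).
confusion = [
    [50, 2, 3],
    [4, 40, 6],
    [1, 5, 39],
]
accuracy = accuracy_from_confusion_matrix(confusion)
print(f"accuracy = {accuracy:.3f}")  # accuracy = 0.860

# Hypothetical imbalanced 2-class matrix: the model never predicts class 1.
imbalanced = [
    [95, 0],
    [5, 0],
]
imbalanced_accuracy = accuracy_from_confusion_matrix(imbalanced)
print(f"accuracy = {imbalanced_accuracy:.3f}")  # accuracy = 0.950
```

The second matrix illustrates why accuracy alone can mislead: the score is 0.95 even though every example of the second class is misclassified, which is visible only in the matrix itself.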