The document presents a method for identifying and addressing neglected classes in deep neural networks. It proposes identifying neglected classes based on the length of gradient updates during training, which can be small despite large errors. These neglected classes are then augmented by partitioning them into subclasses. A subclass deep neural network is trained using the subclass labels, improving classification performance for the originally neglected classes. Experiments on image and video datasets demonstrate the approach leads to better results than conventional deep networks.

![retv-project.eu @ReTV_EU @ReTVproject retv-project retv_project

3

• Deep neural networks (DNNs) have shown remarkable performance in many

machine learning problems

• They are being commercially deployed in various domains

Problem statement

• Multimedia

understanding

• Self-driving cars • Edge computing

Image Credits:V2Gov

ImageCredits: [1]

[1]Chen, J., Ran, X.: Deep LearningWith Edge Computing:A Review, Proc. of the IEEE, vol. 107, no. 8, (Aug. 2019)](https://image.slidesharecdn.com/mmm2020deeplearningneglected-200110184708/85/Subclass-deep-neural-networks-3-320.jpg)

![retv-project.eu @ReTV_EU @ReTVproject retv-project retv_project

5

Related work

• The problem of neglected classes sometimes is related with imbalanced class

learning, where the distribution of training data across different classes is skewed

• There are relatively few works studying the class imbalance problem in DNNs

(e.g. see [1, 2])

As observed in our experiments neglected classes may also appear with balanced

training datasets (e.g. CIFAR datasets)

The identification of classes getting less attention from the DNN during the

training procedure is a relatively unexplored topic

[1] Sarafianos, N., Xu, X., Kakadiaris, I.A.: Deep imbalanced attribute classification using visual attention aggregation. ECCV. Munich, Germany

(Sep 2018)

[2] Lin,T., Goyal, P., Girshick, R., He, K., Dollár, P.: Focal Loss for DenseObject Detection, 2017 IEEE ICCV,Venice, 2017.](https://image.slidesharecdn.com/mmm2020deeplearningneglected-200110184708/85/Subclass-deep-neural-networks-5-320.jpg)

![retv-project.eu @ReTV_EU @ReTVproject retv-project retv_project

14

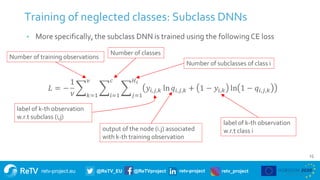

Training of neglected classes: Subclass DNNs

• A subclass-based learning framework is applied to improve the classification

performance of neglected classes

• Subclass-based classification techniques have been successfully used in the

shallow learning paradigm [1-5].

• To this end, neglected classes are augmented and partitioned to subclasses and

the created labeling information is used to create and train a subclass DNN

[1] Hastie,T.,Tibshirani, R.: Discriminant analysis by Gaussian mixtures. Journal of the Royal Statistical Society. Series B 58(1), (Jul 1996)

[2] Manli, Z., Martinez, A.M.: Subclass discriminant analysis. IEEETrans. PatternAnal. Mach. Intell. 28(8), (Aug 2006)

[3] Escalera, S. et al.: Subclass problem-dependent design for error-correcting output codes. IEEETrans. PatternAnal. Mach. Intell. 30(6), (Jun

2008)

[4]You, D., Hamsici, O.C., Martinez, A.M.: Kernel optimization in discriminant analysis. IEEETrans. PatternAnal. Mach. Intell. 33(3), (Mar 2011)

[5] Gkalelis, N., Mezaris,V., Kompatsiaris, I., Stathaki,T.: Mixture subclass discriminant analysis link to restricted Gaussian model and other

generalizations. IEEETrans. Neural Netw. Learn. Syst. 24(1), (Jan 2013)](https://image.slidesharecdn.com/mmm2020deeplearningneglected-200110184708/85/Subclass-deep-neural-networks-14-320.jpg)

![retv-project.eu @ReTV_EU @ReTVproject retv-project retv_project

Experiments

16

Multiclass classification

• CIFAR10 [1]: 10 classes, 32 x 32 color images, 50000 training and 10000 testing

observations

• CIFAR100 [1]: as CIFAR10 but with 100 classes

• SVHN [2]: 10 classes, 32 x 32 color images, 73257 training, 26032 testing and

531131 extra observations

[1] Krizhevsky, A.: Learning multiple layers of features from tiny images.Tech. rep. (2009)

[2] Netzer,Y.,Wang,T., Coates, A., Bissacco, A.,Wu, B., Ng, A.Y.: Reading digits in natural images with unsupervised feature learning. NIPS

Workshops.Venice, Italy, (Oct 2017)](https://image.slidesharecdn.com/mmm2020deeplearningneglected-200110184708/85/Subclass-deep-neural-networks-16-320.jpg)

![retv-project.eu @ReTV_EU @ReTVproject retv-project retv_project

Experiments

17

Experimental setup (similar setup with [1])

• Images are normalized to zero mean and unit variance

• Data augmentation is applied as in [1] (cropping, mirroring, cutout, etc.)

• DNNs [2,3]: VGG16, WRN-28-10 (CIFAR-10, -100), WRN-16-8 (SVHN)

• CE loss, Minibatch SGD, Nesterov momentum 0.9, batch size 128, weight decay

0.0005, 200 epochs, initial learning rate 0.1, exponential schedule (learning rate

multiplied by 0.1 for CIFAR or 0.2 for SVHN, at epochs 60, 120 and 160)

[1] Devries,T.,Taylor, G.W.: Improved regularization of convolutional neural networks with cutout. arXiv:1708.04552 (2017)

[2]Zagoruyko, S., Komodakis, N.:Wide residual networks. BMVC.York, UK, (Sep 2016)

[3] Simonyan, K., Zisserman, A.:Very deep convolutional networks for large-scale image recognition. ICLR. San Diego, CA, USA (May 2015)](https://image.slidesharecdn.com/mmm2020deeplearningneglected-200110184708/85/Subclass-deep-neural-networks-17-320.jpg)

![retv-project.eu @ReTV_EU @ReTVproject retv-project retv_project

Experiments

18

SDNN training

• DNN is trained for 30 epochs in order to compute a reliable neglection score for

each class

• The classes with highest neglection score are selected: 2 classes for CIFAR-10 and

SVHN, 10 classes for CIFAR-100

• Observations of the selected classes are doubled using the augmentation

approach presented in [1]

• Selected classes are partitioned to 2 subclasses using k-means

• SDNN is trained using the annotations at class and subclass level

[1] Inoue, H.: Data augmentation by pairing samples for images classification. arXiv:1801.02929 (2018)](https://image.slidesharecdn.com/mmm2020deeplearningneglected-200110184708/85/Subclass-deep-neural-networks-18-320.jpg)

![retv-project.eu @ReTV_EU @ReTVproject retv-project retv_project

Experiments

19

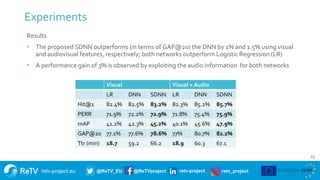

Results

• The proposed subclass DNNs (SVGG16, SWRN) provide improved performance over their

conventional variants (VGG16 [1], WRN [2])

• Considering that [2] is among the state-of-the-art approaches, the attained CCR performance

improvement is significant

• Training time overhead is negligible for the medium-size datasets (CIFAR-10, -100) and relatively

small for the larger SVHN; testing time is only a few seconds for all DNNs

VGG16 [1] SVGG16 WRN [2] SWRN

CIFAR-10 93.5% (2.6 h) 94.8% (2.7 h) 96.92% (7.1 h) 97.14% (8.1 h)

CIFAR-100 71.24% (2.6 h) 73.67% (2.6 h) 81.59% (7.1 h) 82.17% (7.5 h)

SVHN 98.16% (29.1 h) 98.35 (34.1 h) 98.7% (33.1 h) 98.81% (42.7 h)

[1] Simonyan, K., Zisserman, A.:Very deep convolutional networks for large-scale image recognition. In: ICLR. San Diego, CA, USA (May 2015)

[2] Devries,T.,Taylor, G.W.: Improved regularization of convolutional neural networks with cutout. arXiv:1708.04552 (2017)](https://image.slidesharecdn.com/mmm2020deeplearningneglected-200110184708/85/Subclass-deep-neural-networks-19-320.jpg)

![retv-project.eu @ReTV_EU @ReTVproject retv-project retv_project

Experiments

20

Multilabel classification

• YouTube-8M (YT8M) [1]: largest public dataset for multilabel video classification

• 3862 classes, 3888919 training, 1112356 evaluation and 1133323 testing videos

• 1024- and 128-dimensional visual and audio feature vectors are provided for each

video

[1]Abu-El-Haija, S., Kothari, N., Lee, J., Natsev, A.P.,Toderici, G.,Varadarajan, B.,Vijayanarasimhan, S.:YouTube-8M:A large-scale video

classification benchmark. In: arXiv:1609.08675 (2016)](https://image.slidesharecdn.com/mmm2020deeplearningneglected-200110184708/85/Subclass-deep-neural-networks-20-320.jpg)

![retv-project.eu @ReTV_EU @ReTVproject retv-project retv_project

Experiments

21

Experimental setup

• Two experiments: using the visual feature vectors directly; concatenating the

visual and audio feature vectors

• L2-normalization is applied to feature vectors in all cases

• DNN: 1 conv layer with 64 1d-filters, max-pooling, dropout, SG output layer

• CE loss, Minibatch SGD, batch size 512, weight decay 0.0005, 5 epochs, initial

learning rate 0.001, exponential schedule (learning rate multiplied by 0.95 at

every epoch)

• The evaluation metrics of YT8M challenge [1] are utilized for performance

evaluation; GAP@20 is used as the primary evaluation metric

[1]Abu-El-Haija, S., Kothari, N., Lee, J., Natsev, A.P.,Toderici, G.,Varadarajan, B.,Vijayanarasimhan, S.:YouTube-8M:A large-scale video

classification benchmark. In: arXiv:1609.08675 (2016)](https://image.slidesharecdn.com/mmm2020deeplearningneglected-200110184708/85/Subclass-deep-neural-networks-21-320.jpg)

![retv-project.eu @ReTV_EU @ReTVproject retv-project retv_project

22

SDNN training

• DNN is trained for 1/3 of an epoch; a neglection score for each class is computed

• 386 classes with highest neglection score are selected

• Observations of the selected classes are doubled by applying extrapolation in

feature space [1]

• A efficient variant of the nearest neighbor-based clustering algorithm [2,3] is

used to partition the neglected classes to subclasses

• SDNN is trained using the annotations at class and subclass level

[1] DeVries,T.,Taylor, G.W.: Dataset augmentation in feature space. ICLRWorkshops.Toulon, France (Apr 2017)

[2] Manli, Z., Martinez, A.M.: Subclass discriminant analysis. IEEETrans. PatternAnal. Mach. Intell. 28(8), 1274-1286 (Aug 2006)

[3]You, D., Hamsici, O.C., Martinez, A.M.: Kernel optimization in discriminant analysis. IEEE PAMI 33(3), 631-638 (Mar 2011)

Experiments](https://image.slidesharecdn.com/mmm2020deeplearningneglected-200110184708/85/Subclass-deep-neural-networks-22-320.jpg)

![retv-project.eu @ReTV_EU @ReTVproject retv-project retv_project

Experiments

24

Results

• Comparison with best single-model results in YT8M

• These methods use frame-level features and build upon stronger and computationally

demanding feature descriptors (e.g. Fisher Vectors, VLAD, FVNet, DBoF and other)

• The proposed SDNN performs in par with top-performers in the literature despite the fact that we

utilize only the video-level features provided from YT8M

[1] Huang, P.,Yuan,Y., Lan, Z., Jiang, L., Hauptmann,A.G.:Video representation learning and latent concept mining for large-scale multi-label

video classification. arXiv:1707.01408 (2017)

[2] Na, S.,Yu,Y., Lee, S., Kim, J., Kim, G.: Encoding video and label priors for multilabel video classification onYouTube-8M dataset. In: CVPR

Workshops (2017)

[3] Bober-Irizar, M., Husain, S., Ong, E.J., Bober, M.: Cultivating DNN diversity for large scale video labelling. In: CVPRWorkshops (2017)

[4] Skalic, M., Pekalski, M., Pan, X.E.: Deep learning methods for ecient large scale video labeling. In: CVPRWorkshops (2017)

[1] [2] [3] [4] SDNN

GAP@20 82.15% 80.9% 82.5% 82.25% 82.2%](https://image.slidesharecdn.com/mmm2020deeplearningneglected-200110184708/85/Subclass-deep-neural-networks-24-320.jpg)