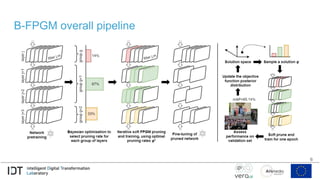

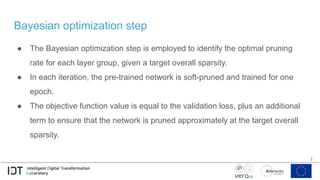

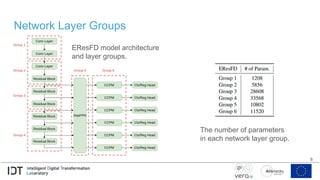

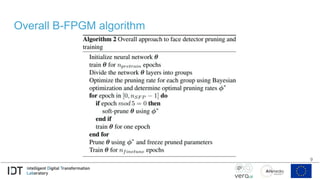

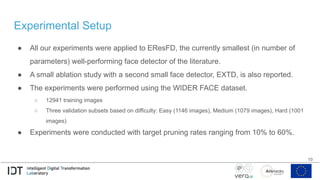

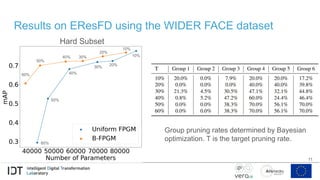

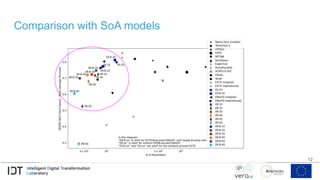

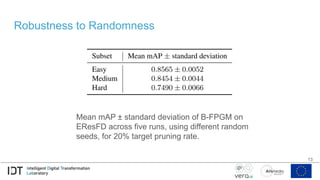

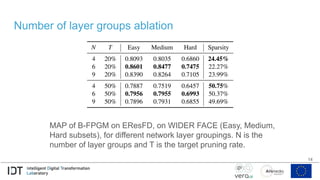

Presentation of our paper, "B-FPGM: Lightweight Face Detection via Bayesian-Optimized Soft FPGM Pruning", by N. Kaparinos and V. Mezaris, given at the RWS Workshop of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV 2025), Tucson, AZ, USA, Feb. 2025. The preprint is available at http://arxiv.org/abs/2501.16917 and the software at https://github.com/IDT-ITI/B-FPGM