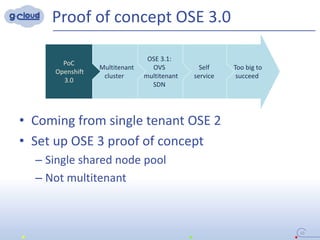

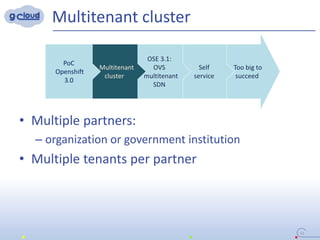

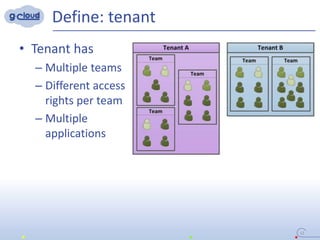

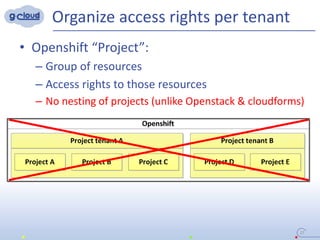

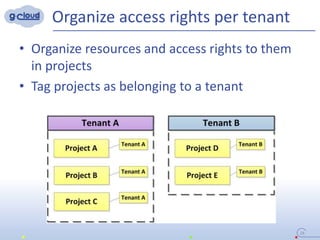

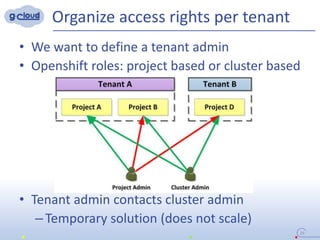

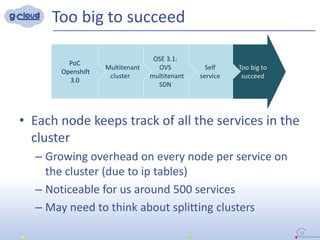

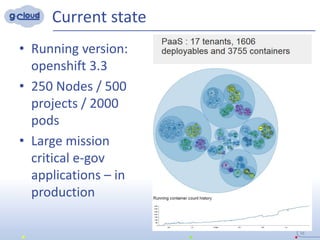

1) The document describes how to implement multi-tenant isolation in a single OpenShift cluster so that multiple tenants each get an isolated environment.

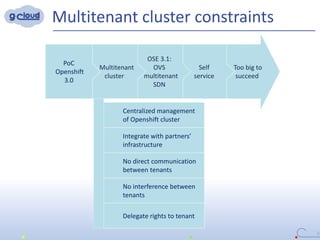

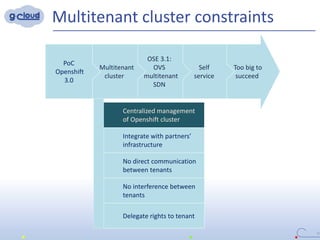

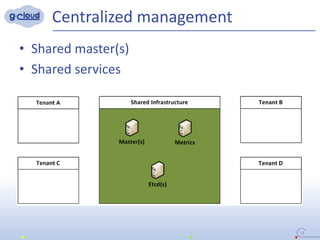

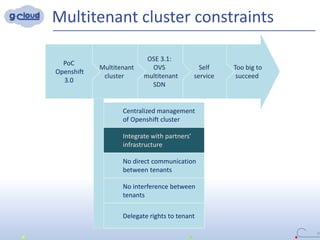

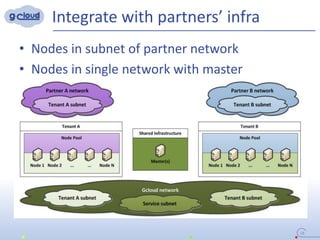

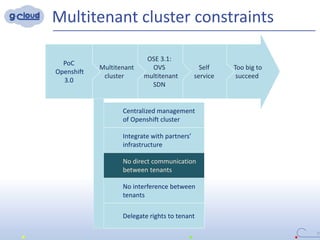

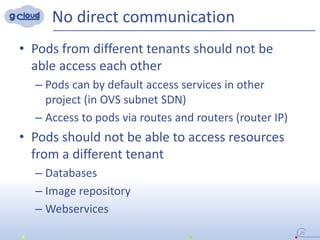

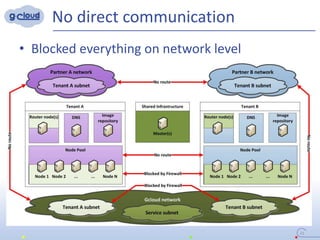

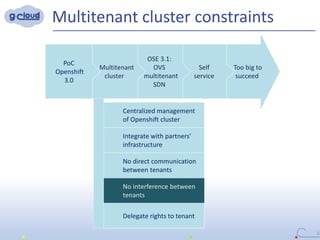

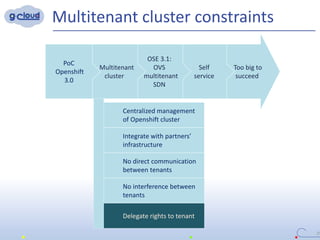

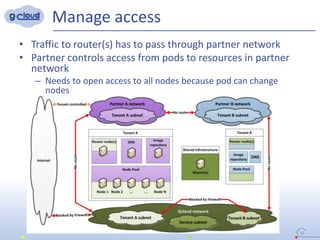

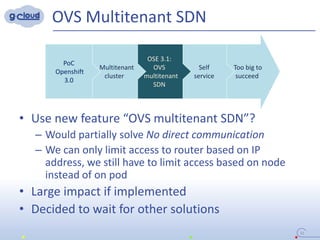

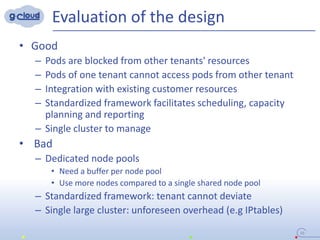

2) The key constraints of the multi-tenant approach are centralized management of the OpenShift cluster, no direct communication between tenants, integration with each tenant's infrastructure, and no interference between tenants.

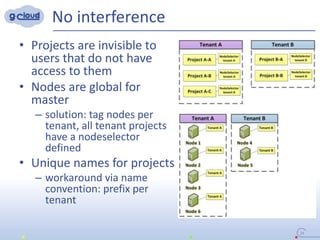

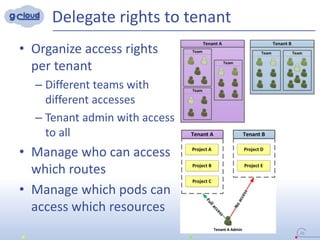

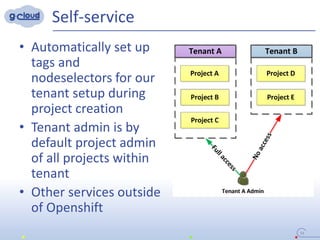

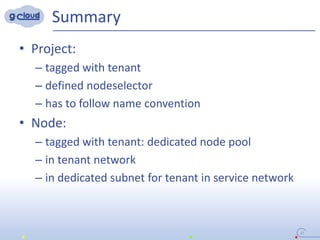

3) The implementation tags projects and nodes per tenant, blocks network access between tenants, and delegates access rights, giving each tenant an isolated environment within the shared OpenShift cluster.
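The minimal Python sketch below illustrates those three techniques. It builds the Kubernetes objects that one tenant project might need as plain dictionaries and prints them as JSON. The slides do not give concrete names, so the label key `example.com/tenant`, the project `tenant-a-dev` and the group `tenant-a-admins` are hypothetical. The sketch maps each technique to a standard mechanism, which may differ from the original implementation. Tagging uses a namespace label plus OpenShift's `openshift.io/node-selector` project annotation, with the tenant's nodes assumed to carry the matching label (for example via `oc label node`). Network blocking uses a `NetworkPolicy` that admits ingress only from namespaces with the same tenant label. Delegation uses a `RoleBinding` to the built-in `admin` ClusterRole, scoped to the tenant's project.

```python
import json

# Hypothetical label key used to tag both projects and nodes with their tenant.
TENANT_LABEL = "example.com/tenant"


def tenant_namespace(project: str, tenant: str) -> dict:
    """Project tagged with its tenant; the node-selector annotation pins
    the project's pods to nodes labelled for the same tenant."""
    return {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {
            "name": project,
            "labels": {TENANT_LABEL: tenant},
            "annotations": {"openshift.io/node-selector": f"{TENANT_LABEL}={tenant}"},
        },
    }


def same_tenant_only_policy(project: str, tenant: str) -> dict:
    """Selects every pod in the project and admits ingress only from
    namespaces carrying the same tenant label; other ingress is denied."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "allow-same-tenant", "namespace": project},
        "spec": {
            "podSelector": {},
            "policyTypes": ["Ingress"],
            "ingress": [
                {"from": [{"namespaceSelector": {"matchLabels": {TENANT_LABEL: tenant}}}]}
            ],
        },
    }


def tenant_admin_binding(project: str, group: str) -> dict:
    """Delegates the built-in 'admin' ClusterRole to a tenant group,
    limited to this one project by using a namespaced RoleBinding."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "RoleBinding",
        "metadata": {"name": "tenant-admins", "namespace": project},
        "roleRef": {"apiGroup": "rbac.authorization.k8s.io", "kind": "ClusterRole", "name": "admin"},
        "subjects": [{"apiGroup": "rbac.authorization.k8s.io", "kind": "Group", "name": group}],
    }


def tenant_manifests(project: str, tenant: str, group: str) -> dict:
    """Bundles the three objects into a single v1 List."""
    return {
        "apiVersion": "v1",
        "kind": "List",
        "items": [
            tenant_namespace(project, tenant),
            same_tenant_only_policy(project, tenant),
            tenant_admin_binding(project, group),
        ],
    }


if __name__ == "__main__":
    print(json.dumps(tenant_manifests("tenant-a-dev", "tenant-a", "tenant-a-admins"), indent=2))
```

A cluster administrator could pipe the printed `List` into `oc apply -f -`, which keeps project creation under central control. A policy like this one also blocks traffic from shared components such as the OpenShift router, so a real setup would likely need extra allow rules for them.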